Future Tech: Scenes From Research@Intel Day

Intel offers a glimpse of tomorrow's technology at its annual R&D demo, highlighting everything from ray tracing graphics to robot bartenders.

Researchers, analysts and journalists from around the globe descended upon the Computer History Museum in Mountain View, Calif., for this week's Research@Intel Day. Intel on Wednesday happily provided a comprehensive look at its long-term research plans, but the Santa Clara, Calif.-based chip giant kept short-term developments much closer to the vest. "Clearly in areas like silicon technology, we say much less publicly than in other areas. Certainly we'll show you 32nm technology but probably not a lot about future architectures. Most of what you'll see, with exceptions, is five, six years in the future," said Intel CTO Justin Rattner in his keynote opening Research@Intel Day.

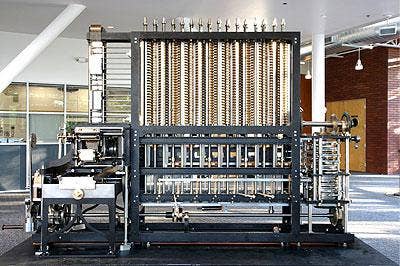

No, Intel isn't going this retro on its roadmap. Still, the working model of Charles Babbage's Difference Engine No. 2, which greets visitors at the entrance of the Computer History Museum, serves as an appropriate starting point for a day dedicated to the chip maker's past and future R&D efforts.

Rattner kicked off Research@Intel Day with quick historical sketches of three of Intel's most newsworthy current technologies. He described the beginnings of the new Atom family of low-power processors in Intel's Microprocessor Research Lab way back in 1999. He then traced the remote management technology that would become the vPro platform to development work begun in 2002 by the chip maker's Systems Technology Lab and the Intel Communications Group. Finally, he recalled the germ of an idea for WiMAX that emerged almost a decade ago at Intel Architecture Labs.

Intel CTO Justin Rattner outlined five areas the chip maker is focusing on for future product technologies -- visual computing, wireless, healthcare applications, environmentally friendly computing and long-term scientific research.

Rattner made perhaps his boldest statement of the day regarding Intel's long-term plans for graphics computing. Intel's contention is that traditional raster-based graphics will eventually be overtaken by ray-tracing technologies. Rattner further promised that all this would become clear when Intel presents a paper at August's Siggraph conference on "Larrabee," the chip maker's much-discussed but largely detail-deficient plans for a multi-core x86 graphics chip.

Larrabee is roadmapped for release in the second half of next year. That's quite a long way off, and with competitors Nvidia and AMD-ATI ruling the market for discrete graphics in an increasingly visually-based computing world, Intel might feel the pressure to deliver actual product to supplement its ray-tracing vision even sooner.

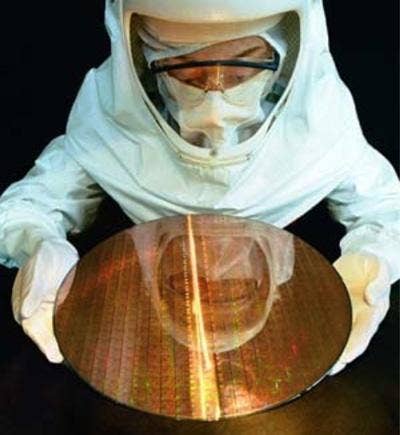

Intel may be tight-lipped about near-term product launches, but the chip giant is always happy to show off its latest silicon wafers and the steady drumbeat of Moore's Law. Even as Intel ramps its 45nm logic process, it's looking ahead to the initial production of 32nm chips scheduled for 2009. The 32nm process continues Moore's Law by doubling transistor density compared to that at 45nm, as measured by SRAM cell size, and also adds immersion lithography to the fabrication process for the first time at Intel. At the Research@Intel demo of 32nm process technology, Intel had a functional 291 Mbit SRAM wafer on hand.
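For a rough sense of where the "doubling" comes from, the arithmetic can be sketched under an idealized assumption: that every feature shrinks in proportion to the node name, so area falls with the square of the ratio. Real processes do not scale this neatly, and Intel's own claim is measured by SRAM cell size rather than by node name, so the function names and the simple model below are illustrative only.

```python
def area_scale(old_nm, new_nm):
    """Idealized area ratio when linear dimensions shrink from old_nm to new_nm."""
    # Assumption: every feature scales linearly with the node name.
    return (new_nm / old_nm) ** 2


def density_gain(old_nm, new_nm):
    """Idealized transistor-density multiplier: the inverse of the area ratio."""
    return 1.0 / area_scale(old_nm, new_nm)


if __name__ == "__main__":
    print(f"area per cell: {area_scale(45, 32):.3f}x")    # roughly half the area
    print(f"density gain:  {density_gain(45, 32):.2f}x")  # roughly double the density
```

Under that assumption, the move from 45nm to 32nm cuts the area of a given circuit roughly in half, which is the same thing as fitting about twice as many transistors into the same space.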

Intel's had its "Smart Car" on display as eye candy at several big events now, but at Research@Intel Day the focus was squarely on Ct, an extension of the C/C++ programming language. Intel and Neusoft have used Ct to create "computer vision" software that tracks objects for driver assistance and safety. The Ct research effort has helped programmers create highly parallelized and scalable software that takes full advantage of Intel's current multi-core and future tera-scale processors, according to the chip maker.

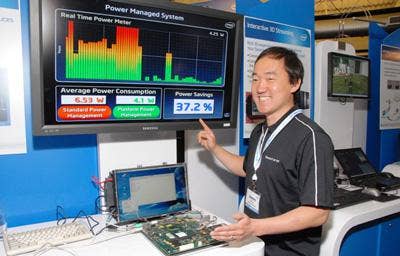

Intel's efforts in platform power management take on new urgency with each hike in the price of gas. In addition to lowering the straight power consumption of processors and platforms as related to performance, Intel continues to explore ways to continually monitor changes in platform operation and aggressively power down portions of a system that are not in use.

Intel's research aims include improving the management of hardware platforms to significantly reduce power consumption. With straightforward changes to existing platforms as demonstrated on this demo chipset, Intel claims it can achieve reductions in power consumption of more than 30 percent over unmodified boards. The chip maker further expects reductions of 50 percent or more on future platform re-designs. Such advances will benefit the full range of Intel products, including ultra mobile, laptop, desktop, server and Tera-scale devices, according to the company.

Health care applications and biomedical research are big areas of focus for Intel. Case in point: the Gait Analysis System for Older People demo, which showcased results from the BioMOBIUS Research Platform, a set of closely integrated, low-cost hardware and software components for building research tools. BioMOBIUS provided the key technology behind this gait analysis system, which helps reveal the key factors in people's gait and so determine their risk of falling.

Computer speech interfaces have been glitchy since HAL first misfired back in 1968, but Intel thinks it's on to something, well, small with the development of a voice-controlled mechanism for creating device connections. Speech interfaces are tailor-made for small mobile devices, Intel contends, due to the limitation of the physical input and output channels on such gadgets.

At Research@Intel Day, the chip maker demonstrated a speech interface controlling the task of creating connections between two mobile devices and a wireless display with the goal of sharing resources and services. For example, consumers can speak commands in a natural manner to synch their mobile device with a large screen television to share recent photos of their children with grandparents.

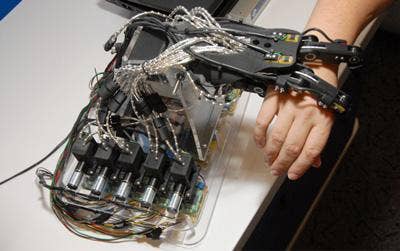

Robotic hands are always a sure-fire crowd-pleaser, and Intel didn't disappoint at its big R&D event Wednesday. The Personal Robotics project at Intel Research Pittsburgh aims to enable robots to perform useful tasks in unstructured home and office environments. Who doesn't welcome our new robot arm-grabbing overlords?

Intel's BarKeep is an autonomous robot developed at IR Pittsburgh that demonstrates integrated perception, navigation, planning and grasping for the task of loading mugs from a mobile Segway into a dish rack, using an anthropomorphic robot arm.

Intel's Common Sense R&D team demoed prototypes of mobile environmental sensing platforms that empower individuals and communities to gather, analyze, and share information in order to influence environmental policy, including a current deployment on street sweepers in San Francisco.

Intel has been talking up a storm about ray tracing, the chip maker's divergence from traditional raster-based graphics. At Research@Intel Day, the company demoed visual computing models emerging from the Real-Time Ray Tracing research project, both for consumer video games and for industrial applications.

"Our long-term view of visual computing is we have to move beyond raster graphics," said Rattner. "If you look at more powerful rendering techniques like ray tracing, you find that aggressive render techniques work better on CPUs than GPUs. Our new architecture will deliver vastly better visual compute experiences because they'll break the bond with the traditional raster pipeline."

Ray tracing uses computational modeling to simulate light rays in a 3D scene. According to Intel, multi-core central processors make it possible to use ray tracing for interactive 3D graphics for a variety of visual computing applications. In addition to video game demos, Intel on Wednesday showcased the results of collaboration with researchers at VRContext to enable the visualization of extremely complex industrial models using Intel multi-core processors.
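To make the idea concrete, here is a minimal sketch of the core operation, not Intel's renderer: fire one ray per pixel from a camera and test whether it strikes an object. The single-sphere scene, the character-grid "display" and the function names `ray_sphere_hit` and `render` are all assumptions made for illustration. Because each ray is computed independently of the others, the work divides naturally across many cores, which is the crux of Intel's multi-core argument.

```python
import math


def ray_sphere_hit(origin, direction, center, radius):
    """Distance along a unit-length ray to the nearest hit in front of an
    origin that lies outside the sphere, or None if the ray misses."""
    # Vector from the sphere's center to the ray origin.
    oc = [o - c for o, c in zip(origin, center)]
    # Solve |origin + t*direction - center|^2 = radius^2 for t.
    # With a unit-length direction the quadratic's leading coefficient is 1.
    b = 2.0 * sum(d * v for d, v in zip(direction, oc))
    c = sum(v * v for v in oc) - radius * radius
    disc = b * b - 4.0 * c
    if disc < 0:
        return None  # the ray never touches the sphere
    t = (-b - math.sqrt(disc)) / 2.0
    return t if t > 0 else None


def render(width=24, height=12):
    """Cast one ray per character cell at a single sphere; return text rows."""
    camera = (0.0, 0.0, 0.0)
    sphere_center, sphere_radius = (0.0, 0.0, -3.0), 1.0
    rows = []
    for y in range(height):
        row = ""
        for x in range(width):
            # Map the cell to a point on an image plane at z = -1.
            px = (x + 0.5) / width * 2 - 1
            py = 1 - (y + 0.5) / height * 2
            length = math.sqrt(px * px + py * py + 1)
            direction = (px / length, py / length, -1 / length)
            hit = ray_sphere_hit(camera, direction, sphere_center, sphere_radius)
            row += "#" if hit is not None else "."
        rows.append(row)
    return rows


if __name__ == "__main__":
    print("\n".join(render()))
```

A production ray tracer adds shading, reflections, shadows and acceleration structures on top of this intersection test, but the per-ray independence shown here stays the same.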

We journos aren't going to do it, but maybe Intel can. With the horsepower of Tera-scale processors, the chip maker thinks it can enable computing devices to understand the contents of visual media in ways previously unimagined. The Intel China Research Center is developing techniques for the computational perception of people, objects, scenes and events, and is also a leading participant in the National Institute of Standards and Technology (NIST) competition on media mining. In recent months, Intel has been drumming up interest in the possibilities for media mining on ultra-mobile devices, particularly highlighting the potential usefulness of mapping and translation applications for the Internet-connected traveler.