Amazon CEO Jassy’s Annual Shareholder Letter: 5 Things To Know

'The amount of societal and business benefit from the solutions that will be possible will astound us all,' Amazon CEO Andy Jassy said.

Amazon Web Services, the cloud opportunity and artificial intelligence were some of the major themes of Amazon CEO Andy Jassy’s annual letter to shareholders.

The letter, released Thursday along with the AWS parent company’s 2024 proxy statement and annual meeting information, said generative AI (GenAI) “may be the largest technology transformation since the cloud (which itself, is still in the early stages), and perhaps since the Internet.”

“This GenAI revolution will be built from the start on top of the cloud,” Jassy said. “The amount of societal and business benefit from the solutions that will be possible will astound us all.”


Amazon Grows AWS, AI Capabilities

Amazon will hold its virtual annual shareholders meeting May 22, according to the Seattle-based vendor. The vendor has about 130,000 channel partners worldwide, according to CRN’s 2024 Channel Chiefs.

CRN has reached out to Amazon and AWS for comment.

In 2023, AWS revenue grew 13 percent year over year to $91 billion, according to the vendor. The cloud computing business has a revenue run rate of nearly $100 billion, Jassy said.

Here’s more of what Jassy had to say in the annual letter.

Cloud Opportunity Still Strong

Jassy said that the cloud opportunity, in which Amazon solution providers play a role, remains strong, with more than 85 percent of global IT spend still on premises.

“These businesses will keep shifting online and into the cloud,” he said. “In Media and Advertising, content will continue to migrate from linear formats to streaming. Globally, hundreds of millions of people who don’t have adequate broadband access will gain that connectivity in the next few years.”

The GenAI rush is another reason for more businesses to adopt the cloud, Jassy argued in the letter.

“Unlike the mass modernization of on-premises infrastructure to the cloud, where there’s work required to migrate, this GenAI revolution will be built from the start on top of the cloud,” he said.

‘Cost Optimization’ Over

In the letter, Jassy reflected on AWS’ performance in 2023. He acknowledged a “substantial cost optimization” period that hit tech vendors and solution providers alike, leading to mass layoffs across the industry.

In 2023, AWS customers turned to the vendor’s “more powerful, price-performant” Graviton chips; the S3 Intelligent-Tiering storage class, which uses AI to move infrequently accessed objects to less expensive storage layers; and Savings Plans, which offer lower prices in exchange for longer commitments.

“This work diminished short-term revenue, but was best for customers, much appreciated, and should bode well for customers and AWS longer-term,” Jassy said. “By the end of 2023, we saw cost optimization attenuating, new deals accelerating, customers renewing at larger commitments over longer time periods, and migrations growing again.”

Amazon’s investments in its cloud business in 2023 included an expanded infrastructure footprint reaching 105 availability zones within 33 geographic regions globally, he said.

Amazon is at work on six new regions in Malaysia, Mexico, New Zealand, Saudi Arabia, Thailand and Germany.

A ‘Primitive’ AI Approach

Jassy laid out Amazon’s “primitive services” strategy for GenAI. Amazon used the same strategy in the early days of building out AWS, widely considered the No. 1 cloud provider in the market, and in other parts of its business, including logistics.

Amazon has broken GenAI down into three layers and is building the “primitives,” or the most foundational building blocks for software developers, for each one.

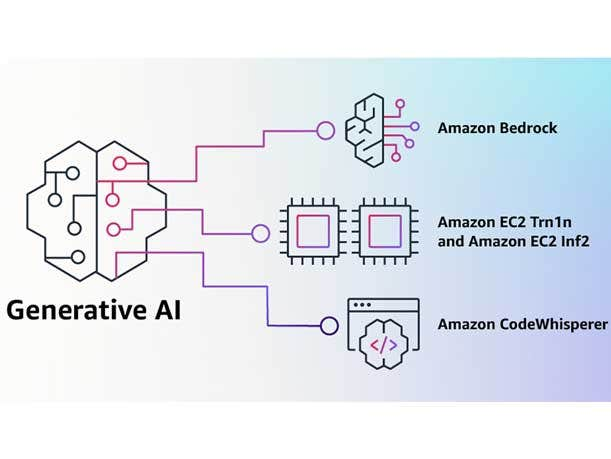

At the bottom layer, where foundation models are built, Amazon views the primitives as the compute for training models and generating predictions, plus the software for building those models. Amazon has worked to offer “the broadest collection of Nvidia instances of any provider” and has built its own Trainium AI training chips and Inferentia inference chips, with customers ranging from Anthropic and Hugging Face to Airbnb and Snap.

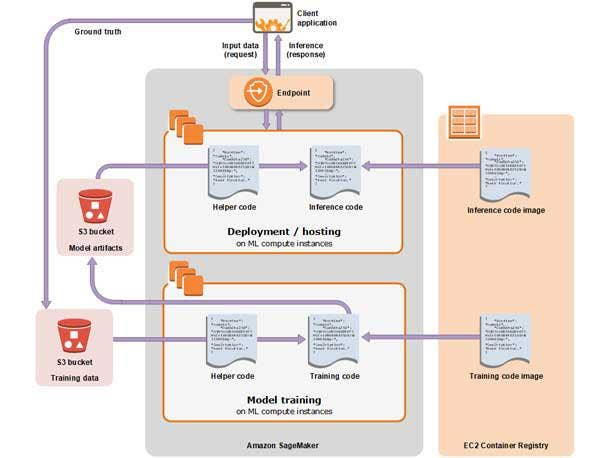

Amazon also offers SageMaker, a managed, end-to-end service that helps developers prepare data for AI, manage experiments and train models, Jassy said.

The vendor also has its own Titan family of foundation models, although Jassy noted that customers “want access to various models and model sizes for different types of applications.”

Amazon ‘Invented’ GenAI’s Builder Layer

Jassy said Amazon “invented” the middle layer of GenAI, in which customers build applications using their own data and an existing foundation model, through Amazon Bedrock, its service for building and scaling GenAI apps.

“Bedrock is off to a very strong start with tens of thousands of active customers after just a few months,” Jassy said. “The team continues to iterate rapidly on Bedrock, recently delivering Guardrails (to safeguard what questions applications will answer), Knowledge Bases (to expand models’ knowledge base with Retrieval Augmented Generation—or RAG—and real-time queries), Agents (to complete multi-step tasks), and Fine-Tuning (to keep teaching and refining models), all of which improve customers’ application quality.”

Bedrock has risen above the competition, Jassy said, because it makes it simple for customers to experiment and iterate.

AWS GenAI Apps

As for GenAI’s top layer, the applications themselves, Jassy touched on how Amazon is bringing GenAI to AWS as well as to its consumer business.

Jassy called coding companions “the most compelling early GenAI use case” and touted Amazon Q, a GenAI tool that helps users write code, debug it, test it and implement it.

Q can also upgrade code to a newer version of Java and query various data repositories to answer questions and summarize information, among other tasks. “Q is the most capable work assistant available today and evolving fast,” Jassy wrote.

Although he admitted that “the vast majority” of GenAI apps “will ultimately be built by other companies,” the CEO believes that “much of this world-changing AI will be built on top of AWS.” He also pointed to AWS’ security capabilities and track record as a reason customers will want to run GenAI on AWS.

“What we’re building in AWS is not just a compelling app or foundation model,” he said. “These AWS services, at all three layers of the stack, comprise a set of primitives that democratize this next seminal phase of AI, and will empower internal and external builders to transform virtually every customer experience that we know (and invent altogether new ones as well).”