AWS Partner: Amazon’s Bedrock AI Strategy Bests Google, Microsoft

“If you think about Microsoft or Google—they’re all-in on OpenAI or Google PaLM, which is their respective AI and ML models. The reality is, they’ve created a competitive issue for themselves in the market,” says Innovative Solutions’ Travis Rehl.

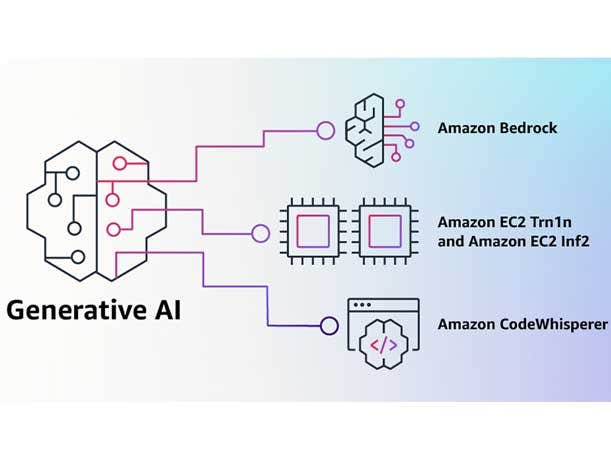

With new artificial intelligence solutions like Amazon Bedrock, the worldwide leader in cloud computing is making the right moves to best rivals Google and Microsoft in the booming generative AI market, says Innovative Solutions CEO Justin Copie.

“There was a moment in time where we were like, ‘AWS may not be a winner in this gen AI space,’” said Copie, CEO of Innovative Solutions, a top AI solution provider and AWS partner. “It is so great to see that they are continuing to put their money where their mouth is.”

AWS touts Amazon Bedrock as the easiest way for businesses to build and scale generative AI-based applications using foundation models (FMs) from Amazon and other leading AI companies such as Anthropic.

“What’s interesting about Bedrock is, if you think about Microsoft or Google—they’re all-in on OpenAI or Google PaLM, which is their respective AI and ML models. The reality is, they’ve created a competitive issue for themselves in the market,” said Travis Rehl, senior vice president of product and services for Innovative Solutions.

“Where there’s lots of other vendors like Anthropic, AI21 Labs, Midjourney, etc.—all these well-known AI companies in the market. But now they’re competing head-to-head with their own models,” he said. “Amazon took a different tactic.”

Generative AI Market

Since the launch of ChatGPT last year by Microsoft-backed OpenAI, the IT world has been fixated on what has become known as generative AI. The largest tech powerhouses on the planet, including AWS, Microsoft and Google, are pouring millions of dollars each quarter into new R&D and go-to-market strategies for generative AI due to its ability to automate tasks and drive operational efficiencies.

Rochester, N.Y.-based Innovative Solutions recently launched a new generative AI offering, Tailwinds, which enables customers to use generative AI within their applications. The plug-and-play module quickly enables any application to be AI-powered without the complexity, high cost and time associated with traditional software development. Tailwinds is based on Amazon Bedrock and Anthropic Claude technologies.

Innovative Solutions is an AWS Premier Tier Services Partner that focuses on cloud optimization and AI across North America. In an interview with CRN, Innovative Solutions leaders Copie and Rehl explain why AWS’ strategy and solutions in the growing generative AI market are better than those of Microsoft and Google.

What’s AWS’ market differentiation when it comes to AI and generative AI?

Rehl: What’s interesting about Bedrock is, if you think about Microsoft or Google—they’re all-in on OpenAI or Google PaLM, which is their respective AI and ML models.

The reality is they’ve created a competitive issue for themselves in the market. Where there’s lots of other vendors like Anthropic, AI21 Labs, Midjourney, etc.—all these well-known AI companies in the market. But now they’re competing head-to-head with their own models.

Amazon took a different tactic.

They said, ‘Let’s make a launching point, Bedrock, for all these different vendors. So if you sign up for Bedrock, you get access to all those vendors under one roof.’ And they made it consistent, easy to sign up for, and easy to integrate from.

So as new vendors appear and as new models appear, they go on to Bedrock and every company automatically has access to it. They don’t have to go fiddle or ask or sign up for things. They just have it in one place.

What’s so good about Amazon Bedrock versus Google PaLM and Microsoft OpenAI offerings?

Rehl: What makes Bedrock really nice is supreme flexibility.

So every model is not the perfect tool for every problem. You may need to choose different ones depending on the use cases you have.

If you’re all-in on OpenAI or you’re all-in on PaLM, you may run into a use case it doesn’t solve for. Versus with Bedrock, you can say, ‘It didn’t work over here. I can swap it out for something else and now it does.’

The other added benefit is that Bedrock runs inside of every customer’s AWS account.

For example, if you think about the healthcare space or the financial sector—they don’t want their data going over the public internet. They don’t want it to go into some ISV somewhere else that maybe Microsoft or Google have partnered with. They want it in-house.

So when you deploy Bedrock, you get it in-house. It doesn’t leave your Amazon accounts. They’re literally copying ML models right into a customer’s environment. So it’s all secure for all their data privacy requirements.

What makes you bullish about AWS right now compared to Google and Microsoft in the generative AI space?

Copie: Based on workload and revenue, AWS is still the giant. Their business is the combination of Microsoft and Google’s business.

AWS just owns so much of this space. There’s this real expectation we all have as technologists, that they’re going to continue to innovate and they’re going to continue to push the envelope.

Truthfully, we didn’t know six months ago where AWS was going to stand in this.

There was a moment in time where we were like, ‘AWS may not be a winner in this gen AI space.’ It is so great to see that they are continuing to put their money where their mouth is. Where they’re continuing to prioritize from a product standpoint, because we get early access and early views into a lot of this stuff that’s coming under NDA, and it’s just wonderful to see AWS staying true to who they are as a business.

Culturally speaking, they want to continue to accelerate this and they’re not resting on their laurels. As a big company, you can get caught up in that. It’s very easy to get caught up in it and to get complacent. … What we’re seeing happening in the market, I know that six months from now we’re going to be having an even bigger conversation around AWS and AI.

You just released your AI Tailwinds offering powered by Amazon Bedrock and Anthropic Claude. What can Tailwinds solve in the generative AI market?

Rehl: Tailwinds was created based on three problems we saw.

The first was a lot of executives are being asked by the board, ‘What are you doing about generative AI?’ The answer is always, ‘I get a chatbot.’ In reality, that’s really skin deep and it’s not adding a lot of value or revenue or otherwise to the business itself. It may add to efficiencies here or there, but it’s not a monumental change. Oftentimes they say the answer is a chatbot. Then they get told that’s not enough. Their engineering teams don’t know what to do because it’s a net new sort of technology landscape. So Tailwinds was built around really solving for education to senior leadership and board [members] around generative AI and ROI, and why they should care.

Second, is once they have a good grasp of what they want to do or how it’s impactful for them, we built an entire architecture that’s pre-made and pre-approved by AWS. So it goes right on top of their existing architecture. They can start implementing roughly 80 percent of typical gen AI use cases almost out of the gate. It’s been resonating really well with both senior leadership teams as well as VPs of engineering and CTOs, who just want to get started very quickly.

The third problem we found is: the industry is changing very quickly. Different services like [open-source framework] LangChain and now LangSmith, [etc.] are all cropping up. Quite frankly, our customers can’t do their day job and keep up with all the changes. So Tailwinds is also built so that it’s stamped on top of a customer’s environment. But we at Innovative and Amazon can iteratively deploy and add to it for our customer. So as their use cases improve or change, the architecture is also keeping up with that monumental change as well.

What’s Tailwinds’ market differentiation and price tag?

Rehl: We found that if an enterprise or SMB goes to a provider and says, ‘Hey, I want to do a generative AI thing,’ it’s six figures to get started. They feel like it’s a big barrier.

So we price this really competitively. It’s like $15,000 to $25,000 depending on what you want to do.

It really gives a customer an easy way to taste test a proof of concept and to see the ROI. Then make a decision if they want to continue to invest in AI. It’s a really cost-effective, holistic way also to get started on generative AI.

The last bit is AWS really attaching themselves to it because a lot of their team are still learning about the technology suites. Today, they ask customers to stitch together 5 or 6 or 7 solutions to make something. Well, they don’t know what those solutions are. They don’t know how to describe it well to their customers because it’s so new. So they’re really looking for somebody to say, ‘Here’s the package you need.’

So that’s what Tailwinds helps solve. It’s a premade, stamped package to get generative AI up very quickly for really any application you may have.

Every customer that we’re bringing Tailwinds to, they get into Bedrock in the early days where they would not otherwise have the opportunity to. AWS wants that as they look to gain market share and capture the market. So partners like Innovative are really important to that strategy. AWS understands that.