AWS Summit San Francisco: SageMaker Serverless Inference Access Expanded

‘SIs are a very core component of democratizing machine learning and making it accessible across all our customers. They are a very important part of our go-to-market motion,’ Bratin Saha, AWS’ vice president and general manager of ML services and Amazon AI, tells CRN.

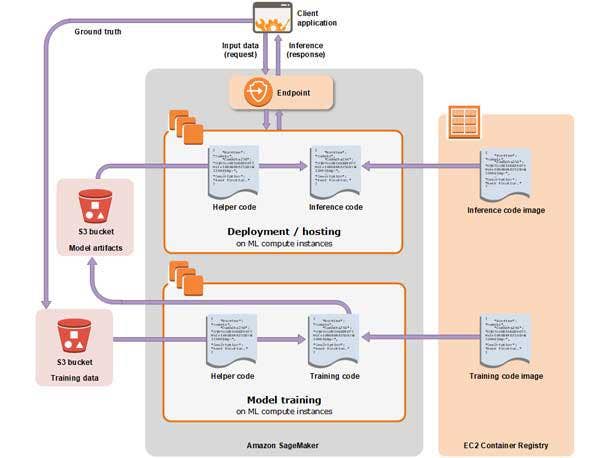

Amazon Web Services has made serverless inference for its SageMaker machine learning tool generally available, with the goal of letting users deploy models without configuring or managing the underlying infrastructure.

The Seattle-based cloud giant unveiled the expanded availability during its AWS Global Summit event in San Francisco this week.

Bratin Saha, AWS’ vice president and general manager of ML services and Amazon AI, told CRN in an interview that partners will be key to bringing AWS AI and ML products to customers of all sizes and across a variety of industries.

“We work very closely with partners and system integrators, SIs,” Saha said. “Many customers are looking for help in, let’s say, taking a POC [proof of concept] into production or just deploying some of the models and setting up some of the pipelines. And I think that is where SIs and partners can play a really important role. Because they have expertise in that. And they can just have a very repeatable motion,” he added.

“SIs are a very core component of democratizing machine learning and making it accessible across all our customers. They are a very important part of our go-to-market motion,” Saha said.

The new inference option automatically provisions, scales and turns off compute capacity based on inference request volume, according to AWS. Users pay only for the time spent running inference code and the amount of data processed, not for idle time.
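To make the pay-per-use model concrete, here is a minimal sketch of how such a bill could be estimated. The rates, the `HypotheticalRates` class and the `monthly_cost` function are illustrative assumptions, not actual AWS pricing or an AWS API; the only point is that cost follows request volume and that idle time adds nothing.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HypotheticalRates:
    """Illustrative prices only; these are NOT actual AWS rates."""
    per_compute_second: float  # charge per second of running inference code
    per_gb_processed: float    # charge per GB of data processed


def monthly_cost(requests: int, seconds_per_request: float,
                 gb_per_request: float, rates: HypotheticalRates) -> float:
    """Estimate a usage-based bill: compute time plus data processed.

    There is no term for idle capacity, so zero requests means zero cost.
    """
    compute_seconds = requests * seconds_per_request
    data_gb = requests * gb_per_request
    return (compute_seconds * rates.per_compute_second
            + data_gb * rates.per_gb_processed)


# Made-up rates chosen only to keep the arithmetic easy to follow.
rates = HypotheticalRates(per_compute_second=0.0001, per_gb_processed=0.02)

# A month with no traffic versus a month with one million requests.
print(monthly_cost(0, 0.2, 0.001, rates))
print(monthly_cost(1_000_000, 0.2, 0.001, rates))
```

Under these assumed rates, the bill grows linearly with the number of requests, which is the behavior AWS describes for the serverless option.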

Eran Gil, CEO of AWS partner AllCloud, which has offices in Denver, Romania, Germany and Tel Aviv and is a member of CRN’s 2022 Managed Service Provider 500, told CRN that his company has been investing in offerings around artificial intelligence, machine learning and data analytics.

AllCloud’s acquisition of Snowflake partner Integress during the summer helped propel the company’s offerings in the area. One major set of customers demanding AllCloud’s AI and ML offerings is manufacturers in Europe, the Middle East and Africa that produce a high volume of data and want to make sense of it.

“It’s been explosive, our growth in the data and analytics world,” Gil said.

SageMaker includes purpose-built tools for each step of the ML development life cycle, including labeling, data preparation, feature engineering, statistical bias detection, auto ML, training, running, hosting, explainability, monitoring and workflows.

AWS CEO Adam Selipsky gave a shout-out to SageMaker at the company’s AWS re:Invent conference in December.

“Since we launched SageMaker in 2017, we’ve added 150 capabilities and features and we’re not slowing down,” Selipsky said during the conference.

“Tens of thousands of customers are using SageMaker today to train models with billions of parameters and to make hundreds of billions of predictions every month. … We also continue to build out direct integrations between the various services. For example, today you can access SageMaker from Redshift, Aurora or Neptune,” he said.

CRN also included SageMaker on its list of “10 Cool Cloud AI And ML Services.”

AWS used its Summit event in San Francisco this week to unveil other new offerings as well. Swami Sivasubramanian, vice president of data, analytics and machine learning services at AWS, unveiled new Aurora Serverless, IoT TwinMaker, Amplify Studio and AWS Glue cloud offerings during his keynote address Thursday.