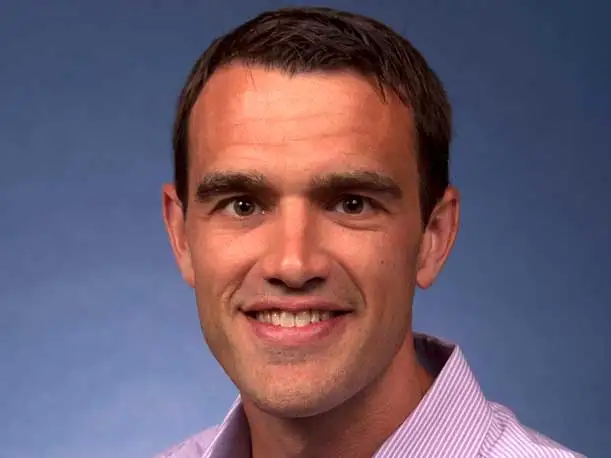

Intel Global Channel Chief Vickers On Fighting Nvidia With ‘Open’ AI Stack, Xeon’s ‘Ubiquity’

In an exclusive interview with CRN, Intel Global Channel Chief Trevor Vickers talks about how the chip giant is using its ‘open’ AI stack to help partners take advantage of Xeon CPUs to tackle inference workloads as part of its ‘AI everywhere’ strategy.

Intel Global Channel Chief Trevor Vickers says that the chip giant’s “open” AI stack is a big differentiator against rival Nvidia, one that will allow the company to capitalize on the “ubiquity” of its Xeon CPUs to expand in the fast-growing inferencing market.

“For us it is about how we are creating these ecosystems that are resilient, that are secure, that are high performance and that are open, which in my mind is a big, big part of what we offer with our partners,” said Vickers, who became vice president and general manager of Intel’s Global Partners and Support organization in January, in an exclusive interview with CRN.

“That to me is kind of the differentiator in that equation. We want to have an open AI stack. We want to have a ‘write once, deliver everywhere’ kind of ethos in how we bring products to market,” he added.

Vickers’ “write once, deliver everywhere” comments refer to Intel’s oneAPI open standard, which allows developers to use a single programming model for different kinds of processors, whether they’re Intel CPUs or GPUs made by Intel or its rivals. This contrasts with Nvidia’s proprietary approach: its CUDA platform only supports processors designed by Nvidia.

While Nvidia surpassed Intel in annual revenue last year thanks to soaring data center GPU revenue driven by generative AI development, Intel is hoping to strike back with an “AI everywhere” strategy that involves not only accelerator chips like its Gaudi processors and data center GPUs but also its CPUs, whether Xeon for servers or Core Ultra for PCs.

Vickers said he and his team have been collaborating with partners on building AI solutions that take advantage of the “ubiquity” of Xeon processors in data centers. The company believes these chips are more economical and pragmatic for a broader constituency of organizations running inference on AI models for live applications.

“I was on a phone call with a huge Indian GSI [global systems integrator] partner and a local ISV [independent software vendor], where they were coming up with a solution that would take a 15-billion-parameter model or less and have a drastic reduction in cost and power with comparable performance by doing the optimization work on the hardware that they have, which is Xeon,” he said.

Another example is ISV partner Numenta, which is working with American Airlines on an AI proof of concept.

“The results were pretty staggering in terms of reduction in memory footprint, reduction in power and just the ability to scale because they already have Xeon deployed in their infrastructure,” said Vickers. “It was a pretty compelling story. We’re going to do a lot more of that.”

While Intel has said up to 70 percent of CPUs used for inferencing are Xeon processors, Vickers said the company has more work to do in getting a broader set of partners behind the company’s push into such workloads with CPUs.

“I think we’ll do more and more with our partners to actually put [proofs of concept] into development looking at what’s a good viable solution, where they might be able to leverage the infrastructure they already have,” he said.

What follows is a lightly edited transcript of CRN’s interview with Vickers, who also talked about getting partners behind its nascent but growing software and services business, helping partners sell AI PCs, and new resources in the Intel Partner Alliance program.

What is the open ecosystem story?

For us, it is about how we are creating these ecosystems that are resilient, that are secure, that are high performance and that are open, which in my mind is a big, big part of what we offer with our partners. That to me is kind of the differentiator in that equation. We want to have an open AI stack. We want to have a “write once, deliver everywhere” kind of ethos in how we bring products to market. Heterogeneous compute is really, really important to us.

Where we want to go in the future is this whole notion of value-based selling or outcome-based selling. Everyone knows our history. It is kind of a one-time transactional piece of hardware: you sell it once. We celebrate that. That’s great. That’s a critical ingredient.

We are keen to get more into enterprise software, second-party software, providing actual solutions and digging in deeper to help actually solve real problems with our partners. That is a pretty big mindset shift and cultural paradigm shift for us, even within the Sales and Marketing Group. That has been underway for the last year or two, but it is really kicking into gear and becoming a much bigger focus for us.

Given Intel’s open AI ecosystem, what is the danger of going all in on an Nvidia ‘proprietary’ AI strategy?

To get to the kind of scaled deployment that we all want to see, cost is going to be incredibly important. Scarcity is a problem. In many cases, you need the ubiquity of the infrastructure that is already available and the experience that a lot of software developers have. We think that when you’re working with us, you are bringing a multitude of different solutions and software stacks and OSes and different applications and different run times. We also really think about the whole span: from your access point (your access point maybe being your Meteor Lake- or Core Ultra-based PC), to the edge, where Intel does have a profound leadership position, back into the data center.

Intel announced a new Edge platform for scaling AI applications at Mobile World Congress in Barcelona. What is the channel opportunity there?

It is on standard hardware with oneAPI [Intel’s unified programming model]. The whole goal is to make it easier to get to a solution because it is not a proprietary closed stack.

I think it is going to be a big opportunity. It is still early days, so this isn’t the time to over-sell. At Intel, we have had so much work going on in the software realm, but in a more disparate fashion. There is a lot more emphasis now on thinking about what that solution should be, with help from a COE [Center of Excellence] within Intel to define it, and we’re going to be fairly judicious about how many of those we want to support because we want to be focused. And then, what is the actual software solution that pairs with the hardware? That kind of mindset is going to be a lot more pervasive.

How is the channel program changing with regards to solution selling with partners?

The idea is solution selling, where our IP gets integrated into a stack. That could be first-party software, third-party software or a service, such as how you manage a fleet of servers, or a VMI [virtual machine interface] that is optimized for certain transistors in our hardware that aren’t getting exposed by a CSP [cloud service provider]. There’s a lot that we can do in that space. We’re willing to do a lot more experimentation with our partners.

We know that [software and services] is a different sell. It is a different engagement level. Ultimately, by working with our customer base and our partners, we think we build longer-lasting and stickier relationships. Obviously, we are driving toward an outcome that is favorable for both of us. It sounds like a cliché, but it is a win-win position.

What were some of the highlights from Mobile World Congress in Barcelona?

A featured theme at MWC Barcelona was the whole notion of AI Everywhere. We are really excited because we led the commercial launch [there] of our Core Ultra product line. The product was formerly known as Meteor Lake.

We aim to sell a lot of those and put them in the hands of our partners to see what they can do. We are really excited about the partner match-making and magic that can happen when we expose that NPU and the capabilities that are in that silicon to our ISV partners, so they can actually start developing applications that are ultimately going to be exciting for our end customers and end users. We think partners play a huge role in that. It is going to be kind of a higher-touch sell to bring that vision to life.

The classic edge is an area where I think we have always been a pretty strong leader. We have always been promoting open standards, either through oneAPI or OpenVINO [Intel’s open-source inference optimization toolkit], and how to do a more modular design that is open.

In the data center, clearly we are very excited about the ubiquity and pervasiveness of Xeon especially in examples of inferencing. Today GPUs are great. Obviously, that is a pretty hot topic. But it doesn’t end with the GPU. We really think that there is a lot more that can be done.

I was on a phone call with a huge Indian GSI [global systems integrator] partner [and] a local Indian ISV, where they were coming up with a solution that would take a 15-billion-parameter model or less and have a drastic reduction in cost and power with comparable performance by doing the optimization work on the hardware that they have, which is Xeon.

Another example is an ISV partner, Numenta, which has a big program with American Airlines on how to actually bring down their costs by scaling some of their AI POCs. The results were pretty staggering in terms of reduction in memory footprint, reduction in power and just the ability to scale, because they already have Xeon deployed in their infrastructure. It was a pretty compelling story. We’re going to do a lot more of that. We use those accelerators.

How ready are partners now to sell AI solutions?

There’s a lot of work that needs to be done on the inferencing side. I think we’ll do more and more with our partners to actually put POCs into development looking at what’s a good viable solution where they might be able to leverage the infrastructure they already have.

Intel has said that up to 70 percent of CPUs installed for inferencing are Xeon processors. Is there already a lot of Xeon-based inferencing happening?

Ninety-six percent of all AI in the enterprise today is inferencing. That is one of Intel’s strengths. There is the trust and security element. We feel pretty good about that.

We have had acceleration on our silicon with AMX [Advanced Matrix Extensions, a dedicated hardware block on Xeon that optimizes inferencing workloads] for multiple generations. So it is something that we have always thought about in terms of how we allocate our transistor budget. So the capability is there. There is usage happening today. We do have an opportunity to do more awareness building on how far you can take it.

Are there any specific partner initiatives to drive more inferencing through Xeon?

Our AI Accelerator [program] would be one example of that. We want to get more ISVs into that program and then potentially do match-making with GSIs. Then we can build solutions that actually show the workload improvement, on three different vectors, that you get by porting to Xeon, by using Xeon. It is ubiquitous. Partners should have a “use what you’ve got” mindset and make sure they don’t over-engineer.

There is more education needed there, and our AI Accelerator has a big, big emphasis on that. There are so many conversations going on between partners, and when you combine the magic of what a local ISV can do with a GSI, what they can ultimately bring to an enterprise customer is compelling.

We want to have solutions in a catalogue that pair the hardware configuration you would need with an optimal partner solution for doing video, for example, or for doing threat prevention. We’ve got it! It is optimized. It is through one of our partners and you can access it through IPA [Intel Partner Alliance].

Part of the AI PC push is helping the customer figure out who needs that AI PC and what do they need to do to get a return on their investment. How are you enabling partners to help educate customers?

At our launch event, half of our keynote was focused on our ISV partners with the 100 ready to go. So when the OEMs come to market, it is coming loaded with experiences. There are applications that are available that can actually take advantage of the technology. Copilot is obviously the big one. I think Microsoft is doing a great job making that as intuitive as possible.

I think people just playing around with ChatGPT has kind of disarmed the technology a little bit: this is kind of fun, you can just go type in a question and see what you get. We’ll have to make sure that other uses are more readily apparent. We know there are things like being able to modify your background with enhancements in Zoom or Teams to create a more immersive experience. I think it is just going to be more about showcasing ISV partners and what they have created that is going to really bring the experience to life.

The new vPro platform was just launched with Core Ultra. What are the ways you are getting Intel partners behind the new Intel vPro PC push?

From a partner standpoint, clearly it is around our [Intel Partner University], through our assets in [Intel Partner Marketing] Studio, and where we want to direct our MDF [market development fund] dollars in terms of doing real market development and awareness. The AI PC is our number one, number two and number three focus.

When you are in IPA, you’ll really feel that experience. There will be opportunities for partners like an ISV to come and do some match-making with an actual builder on what we want preloaded on a device, or how we can get a certain experience enabled and tuned, maybe for a vertical. One example is our Partner Activation Zones, where we set up a curated experience. If it is going to be for manufacturing, we would look at what you would need in a manufacturing environment that would be a good use case for taking advantage of AI. That could be something that does defect detection at a faster rate. We would look at what applications you would need to do that.

There are a lot of techniques in the AI and ML space for forecasting demand, so we could look at some of the software that we could bundle with a PC to show that off.

What is the kind of information that is curated in the Activation Zones?

I would say that if someone comes in with a specific solution they are looking to build or sell and goes into one of those three portals [Networking, Intelligent Edge, and Manufacturing], there should be technical content around not only our product but maybe also a partner solution that is already predetermined and spec’d out, plus access to technical resources if you actually need someone on the Intel side to consult with. Think of it as a place to go to workshop solutions, a way to get answers and get connected with individuals who can help you get to market.