Sorry, Moore's Law: Multicore Is The New Game In Town

Not long ago, dual-core processors were considered cutting-edge and were found mainly in servers and high-end workstations. Today, dual-core CPUs are the new minimum for most midrange PCs and laptops, quad-core chips are standard in many new Apple MacBooks, and Apple's desktops can be had with as many as 12 processor cores.

Heck, virtually all new smartphones now come with two cores, and some even have four. What's more, Google and Microsoft have set the bar for their forthcoming tablets at four application cores plus as many as 12 more for graphics processing. By comparison, Apple's latest iPad 3 has "just" two application cores and another four for graphics. More on what's inside Google's Nexus 7 and Microsoft's Surface for Windows RT later.

Multicore processors and multiprocessor systems are now as commonplace as smartphones themselves. But how did this happen? Why did processor makers abandon the ever-increasing clock speeds of the 1990s and move toward multicore designs instead? The answers may surprise you.

As chip designers scurried to keep pace with Moore's Law, which predicts that processor transistor counts double every two years, the complexities of doing so became apparent. In the days of the Pentium, Intel and its competitors continued to improve processor performance by adding transistors and logic to their CPUs and increasing clock frequencies.
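To put the doubling in concrete terms, here is a minimal sketch of the arithmetic. The two-year period comes from the description above; the starting count of 1 million transistors and the function name are hypothetical, chosen only for illustration.

```python
def projected_transistors(start_count, years, doubling_period_years=2.0):
    """Project a transistor count forward, assuming one doubling per period."""
    return start_count * 2 ** (years / doubling_period_years)


# Hypothetical starting point: a chip with 1 million transistors.
START = 1_000_000

for years in (2, 10, 20):
    count = projected_transistors(START, years)
    print(f"after {years:2d} years: about {count:,.0f} transistors")
```

Under these assumptions, ten doublings over 20 years multiply the count by 1,024, which is why even small slips in the schedule matter so much to chip makers.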

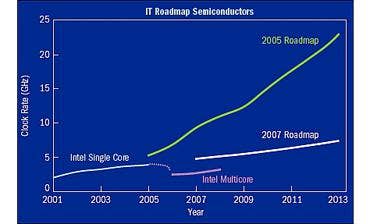

For a while, the strategy worked, and the International Technology Roadmap for Semiconductors (ITRS) and other organizations that track the semiconductor industry predicted that clock rates would reach 12GHz by 2013.

But by around 2002, according to "The Manycore Revolution: Will HPC Lead or Follow?", a report published in SciDAC Review, the performance curve began to flatten -- and chip makers were hitting a power wall. Indeed, in 2004, when Intel canceled Tejas, the high-speed processor that was to be the successor to the Pentium 4, then-President and COO Paul Otellini cited power concerns. "You just can't ship desktop processors at 150 W," he said at the time. Soon after, Intel, AMD and others all had multicore processor strategies.

NEXT: Striving For Parallelism

The writing was on the power wall before Tejas lost its statehood, and chip makers were seeking ways to improve processing throughput that weren't tied to increases in clock speed or power consumption. By 1995, Intel's Pentium Pro processor was marketed with features such as an execution pipeline, out-of-order instruction processing, second-level cache and other techniques to give program execution a boost. This was a predecessor to the Pentium II and Xeon processors.

As Intel's troubled Pentium processor so clearly illustrated, the laws of nature and diminishing returns were catching up to the Law of Moore. The complex logic needed to implement execution pipelines and other throughput techniques required lots of expensive silicon real estate and was highly prone to post-manufacture errors, according to the SciDAC report.

It was clear to chip makers that it was becoming exceedingly impractical (and sometimes impossible) to verify all that complex logic; the cost of these designs was reaching into the hundreds of millions of dollars, the report stated. "The likelihood of chip defects will continue to increase, and any defect makes the core that contains it non-functional." Manufacturers were seeing that the larger and more complex a chip's design, the lower its yield would be.
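The link between chip size and yield can be sketched with the Poisson yield model, a common textbook approximation that is not taken from the SciDAC report. It assumes defects land randomly and that any single defect kills the die. The defect density and die areas below are hypothetical values for illustration only.

```python
import math


def poisson_yield(die_area_cm2, defects_per_cm2):
    """Fraction of dies expected to have zero defects (Poisson model)."""
    return math.exp(-die_area_cm2 * defects_per_cm2)


# Hypothetical defect density; real figures vary by process and are not
# given in the article.
DEFECT_DENSITY = 0.5  # defects per square centimeter

for area in (0.5, 1.0, 2.0, 4.0):
    y = poisson_yield(area, DEFECT_DENSITY)
    print(f"{area:.1f} cm^2 die: {y:.1%} expected yield")
```

In this simplified model, yield falls off exponentially as die area grows, which is consistent with the manufacturers' observation that bigger, more complex designs produce fewer good chips per wafer.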

The SciDAC report cited "A View of the Parallel Computing Landscape," a paper published in 2006 by a team of computer scientists from Lawrence Berkeley National Laboratory (LBNL) and the University of California (UC), Berkeley. The Berkeley scientists offered the following solutions for these processor issues:

Power: Parallelism is an energy-efficient way to achieve performance. Many simple cores offer higher performance per unit area for parallel codes than a comparable design employing smaller numbers of complex cores.

Design Cost: The behavior of a smaller, simpler processing element is much easier to predict within existing electronic design-automation workflows and more amenable to formal verification. Lower complexity makes the chip more economical to design and produce.

Defect Tolerance: Smaller processing elements provide an economical way to improve defect tolerance by providing many redundant cores that can be turned off if there are defects.
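The power argument can be illustrated with the standard approximation that a chip's dynamic power scales with capacitance times voltage squared times frequency. The sketch below is not from the Berkeley paper. It assumes a perfectly parallel workload, ignores leakage power, and uses a hypothetical 20 percent voltage reduction at half the clock speed; all values are in normalized units.

```python
def dynamic_power(capacitance, voltage, frequency):
    """Approximate dynamic power: P = C * V^2 * f (normalized units)."""
    return capacitance * voltage ** 2 * frequency


# One fast core at full voltage and full clock speed.
single = dynamic_power(capacitance=1.0, voltage=1.0, frequency=1.0)

# Two slower cores, each at half the clock speed. The 0.8 voltage figure
# is a hypothetical assumption: lower frequencies generally permit lower
# supply voltages, but the exact amount depends on the process.
dual = 2 * dynamic_power(capacitance=1.0, voltage=0.8, frequency=0.5)

print(f"single fast core: {single:.2f}")
print(f"two slow cores:   {dual:.2f}")
```

Under these assumptions, the two slower cores deliver the same aggregate clock cycles per second as the single fast core while drawing roughly two-thirds of the power. The benefit shrinks if the software cannot keep both cores busy.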

NEXT: Looks Like Intel Listened

Perhaps Intel took note of this report, as it appears to have implemented all three of the scientists' suggestions in its third-generation processors. Core counts also were expected to rise when Intel's 22nm process came online. And rise they have. The Ivy Bridge design with its breakthrough Tri-Gate transistor has created a new breed of powerful, energy-efficient laptops and desktops with more cores than ever before while operating at about half the power of its equivalent using prior technology.

The Intel Core i7 3770K is among the first available processors to incorporate Tri-Gate technology, which creates three-dimensional transistors that maximize current flow when in the on state and consume close to zero power when off. Performance results show significantly faster speeds than prior generations; Ivy Bridge processors are off to a running start.

In 2011, when Intel said it was able to mass-produce the parts using a 22nm process, the company estimated performance increases of as much as 37 percent compared with 32nm planar-transistor devices, and power consumption at about half or less. Such parts would be highly desirable for small handheld units such as smartphones and tablets, medical devices, media players and portable gaming systems.

In fact, anything that can benefit from the ability to switch between high performance and low power consumption could take advantage. These days, that includes just about everything, and we're beginning to see two- and four-core Ivy Bridge processors in a multitude of laptops -- including Ultrabooks -- as well as many desktop systems. And as Intel promised, Ivy Bridge processors use the same sockets as Sandy Bridge parts.

What's more, the ability to mass-produce the 3-D technology using the 22nm process makes possible a further transition to 14nm and even 10nm nodes. Ivy Bridge chips can be soldered onto a motherboard without a socket to further reduce a system's profile.

This capability will no doubt benefit Microsoft's Surface for Windows 8, a full-featured tablet that's built around Ivy Bridge. Perhaps more impressive is the Surface for Windows RT, which will incorporate Nvidia's Tegra 3 quad-core processor.

Technically considered to be a system-on-chip, the Tegra 3 casts four 1.4GHz Cortex A9 cores on the die along with a fifth low-power core that can perform all functions during device standby. The Tegra 3 system-on-chip also includes a GeForce GPU with as many as 12 graphics processor cores and supports a maximum resolution of 2,560 x 1,600 pixels. Google's Nexus 7 tablet also will feature the Tegra 3.

NEXT: A Smartphone Supercomputer?

In any discussion of multicore, another technology bears mention. Last year Intel began showing its Many Integrated Core architecture. Intel MIC puts 32 cores on a PCIe board and makes them available for highly parallel applications in high-performance computing such as those for climate simulation, energy research and genetic analysis. According to Intel, applications written in standard programming languages can take advantage of the extremely high levels of performance the boards offer.

Code-named Knights Ferry, the Intel MIC boards are even being used experimentally to run applications that rely on cloud-based ray tracing, a compute-intensive light rendering technique used in video games that's currently limited to dedicated, high-end graphics processors. This would allow laptops, smartphones and other lightweight computing devices to run sophisticated games without a heavy GPU. Once such a technology becomes mainstream, tasks that were once the province of supercomputers will be available from the average smartphone.

PUBLISHED JULY 4, 2012