7 Big Announcements Nvidia Made At SIGGRAPH 2023: New AI Chips, Software And More

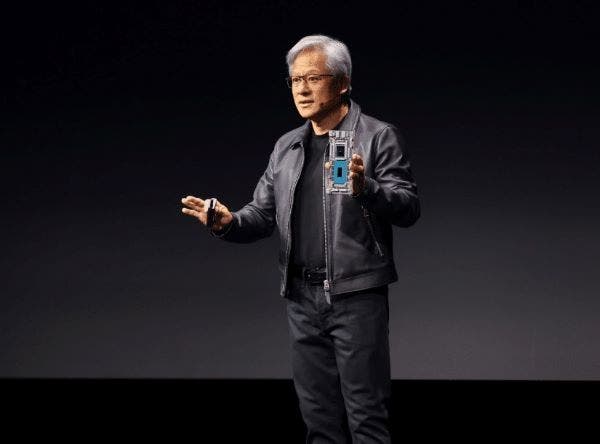

Nvidia CEO Jensen Huang used his SIGGRAPH 2023 keynote as something of a victory lap for the GPU giant, which has become the foundation for many generative AI applications, unveiling new chips and software capabilities to power AI, graphics and simulation workloads.

Nvidia CEO Jensen Huang took to the stage at the annual SIGGRAPH conference on Tuesday for the first time in five years to showcase the GPU giant’s “full-stack computing” prowess with a bevy of new chips and software capabilities for AI, graphics and simulation applications.

Huang’s keynote at the SIGGRAPH 2023 event in Los Angeles was something of a victory lap for the veteran semiconductor executive, whose Santa Clara, Calif.-based company has become the foundation for many of the generative AI applications that have been in high demand over the past several months.


The chief executive said this moment would not have been possible without the way all the individual components came together over the last 12 years: the open-source frameworks, the GPUs tuned for general-purpose computing, the network components to tie them together and the software to develop and manage GPU-accelerated applications.

“The generative AI era is upon us. The iPhone moment of AI, if you will, where all of the technologies of artificial intelligence came together in such a way that it is now possible for us to enjoy AI in so many different applications,” he said.

It was only five years ago, Huang noted, that Nvidia first demonstrated GPUs rendering ray-traced graphics in real time, meaning graphics that realistically simulate the way light interacts with objects.

Since then, Nvidia’s definition of a GPU has expanded from a single graphics chip to eight powerful GPUs linked together in a server, according to Huang. The GPUs that make up this larger system are Nvidia’s H100 chips, based on the company’s Hopper architecture, which are in high demand because they can run generative AI applications quickly and efficiently.

The company has also made a large expansion into systems, software and cloud services over the past several years with products like the Nvidia DGX AI servers, the Nvidia AI Enterprise software suite and the Nvidia DGX Cloud AI supercomputing service.

“We want to have the ability to not just do generative AI in the cloud, but to be able to do it literally everywhere: in the cloud, data centers, workstations or PCs. And we want to do this by making it possible for these really complicated stacks to run,” he said.

The announcements Nvidia made around an updated Grace Hopper Superchip with extra high-bandwidth memory, the versatile L40S data center GPU and a new version of Nvidia AI Enterprise, among several other software updates, reinvigorated one executive at a top Nvidia channel partner.

“I love what Nvidia is doing. They keep progressively pushing the envelope in every area, and they’re not resting on their laurels,” said Andy Lin, CTO at Houston, Texas-based Mark III Systems.

What follows are the most important things you need to know from the announcements Nvidia made at SIGGRAPH 2023, ranging from the updated Grace Hopper and the new L40S GPU to big updates for the Nvidia AI Enterprise and Nvidia Omniverse software platforms, plus more.

Nvidia Reveals New Grace Hopper Superchip With 141GB Of HBM3e Memory

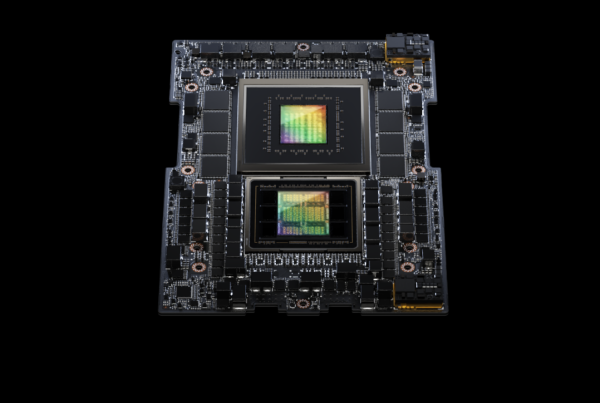

A few months after beginning production for its Grace Hopper Superchip, Nvidia revealed a new version of the CPU-GPU hybrid chip that comes with a massive 141GB of HBM3e high-bandwidth memory, allowing it to run generative AI models 3.5 times larger than the original version.

The revamped GH200’s memory is a significant upgrade over the 96GB HBM3 capacity of the original Grace Hopper, and it’s the first processor to use HBM3e technology, which is 50 percent faster than the original Superchip’s HBM3 format, according to Nvidia.

Like the original GH200, this upcoming iteration comes with a 72-core Grace CPU based on Arm’s Neoverse V2 architecture and a Hopper H100 GPU that are connected by a high-bandwidth NVLink chip-to-chip interconnect that runs at 900GB/s.

In his SIGGRAPH 2023 keynote, Nvidia CEO Jensen Huang said that connecting two revamped GH200s with an NVLink interconnect in a server turns the two chips into a “super-sized Superchip” with 144 CPU cores, 10TB/s of frame buffer bandwidth and 282GB of HBM3e memory.
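The dual-chip core and memory figures follow directly from doubling the single-chip specs reported above. The short Python sketch below does that arithmetic using only numbers from this article; the `Superchip` class and `linked` helper are hypothetical names for illustration, and the sketch leaves out bandwidth and the "3.5 times larger models" claim, which depend on details the article does not spell out.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Superchip:
    """Hypothetical record holding the per-chip specs cited in the article."""
    cpu_cores: int
    hbm_gb: int


# Figures as reported: 72 Grace cores per chip; 96GB HBM3 (original) vs. 141GB HBM3e (revamped).
ORIGINAL_GH200 = Superchip(cpu_cores=72, hbm_gb=96)
REVAMPED_GH200 = Superchip(cpu_cores=72, hbm_gb=141)


def linked(chip: Superchip, count: int) -> Superchip:
    """Sum core and memory totals for `count` identical chips joined by NVLink."""
    return Superchip(chip.cpu_cores * count, chip.hbm_gb * count)


dual = linked(REVAMPED_GH200, 2)
capacity_gain = REVAMPED_GH200.hbm_gb / ORIGINAL_GH200.hbm_gb
extra_gb = REVAMPED_GH200.hbm_gb - ORIGINAL_GH200.hbm_gb

print(dual)                                            # Superchip(cpu_cores=144, hbm_gb=282)
print(f"{capacity_gain:.2f}x the memory, +{extra_gb}GB")  # 1.47x the memory, +45GB
```

The totals match the 144 cores and 282GB Huang cited, and the per-chip upgrade works out to roughly 1.47 times the original Grace Hopper's memory capacity.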

Huang promised that the revamped GH200 will enable massive cost savings for running inference on large AI models for live applications.

“You could take just about any large language model you like and put it into this and it will inference like crazy. The inference cost of large language models will drop significantly because look how small this computer is. And you could scale this out in the world’s data centers,” he said.

Nvidia said systems with the revamped GH200 chip are expected to arrive in the second quarter of next year, adding that the Superchip is fully compatible with its MGX server specification.

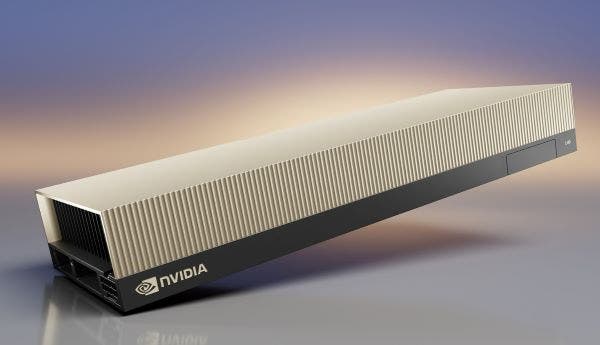

Nvidia Announces New L40S GPU For AI And 3-D Applications

Nvidia revealed a versatile data center GPU that can run AI training and inference workloads faster than the company’s previous flagship, the A100, while also handling demanding graphics and simulation applications.

Called the L40S, the GPU is based on Nvidia’s Ada Lovelace architecture, and it comes with 48GB of memory, fourth-generation Tensor Cores and an FP8 Transformer Engine to provide more than 1.45 petaflops of “tensor processing power,” according to Nvidia.

These attributes help the processor deliver up to 20 percent faster inference performance and up to 70 percent faster training performance for generative AI models compared to the A100.
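"Percent faster" figures are easy to misread as time savings. Assuming "percent faster" means throughput relative to the A100 (so 70 percent faster is a 1.7x speedup), this Python sketch converts Nvidia's "up to" figures into the share of wall-clock time saved on a job that scales perfectly. The function names are hypothetical, and real workloads will vary.

```python
def speedup_from_percent(percent_faster: float) -> float:
    """Assumption: 'N percent faster' means throughput of (1 + N/100) times the baseline."""
    return 1 + percent_faster / 100


def time_saved_fraction(speedup: float) -> float:
    """A job taking T on the baseline takes T / speedup on the faster part."""
    return 1 - 1 / speedup


# Nvidia's "up to" L40S-vs-A100 figures as reported in the article.
for workload, pct in [("inference", 20), ("training", 70)]:
    s = speedup_from_percent(pct)
    print(f"{workload}: {s:.1f}x throughput, {time_saved_fraction(s):.0%} less time per job")
# inference: 1.2x throughput, 17% less time per job
# training: 1.7x throughput, 41% less time per job
```

Under that reading, "70 percent faster" training cuts job time by about 41 percent, not 70 percent.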

For high-fidelity visualization workloads like real-time rendering and 3-D content creation, the L40S takes advantage of 142 third-generation RT Cores to provide 212 teraflops of ray-tracing performance, which Nvidia said enables “immersive visual experiences and photorealistic content.”

The L40S is also a workhorse for engineering and scientific simulation applications, thanks to its 18,176 CUDA cores, which give the GPU nearly five times the single-precision floating-point (FP32) performance of the A100 for “complex calculations and data-intensive analyses.”

The L40S will be available starting this fall in systems based on Nvidia’s OVX reference architecture from Asus, Dell Technologies, Gigabyte, Hewlett Packard Enterprise, Lenovo, Supermicro and other vendors.

Hugging Face Uses Nvidia DGX Cloud For New AI Training Service

Nvidia announced a new partnership with AI development platform Hugging Face that will allow the platform’s developers to train and tune large language models on the Nvidia DGX Cloud platform.

Used by more than 15,000 organizations, Hugging Face will enable the capability through a new service called Training Cluster as a Service, which Nvidia said is meant to “simplify the creation of new and custom generative AI models for the enterprise.”

The GPU designer said Training Cluster as a Service will give Hugging Face’s users “one-click” access to Nvidia’s “multi-node AI supercomputing platform” through DGX Cloud, which is expanding to additional cloud service providers after initially launching on Oracle Cloud Infrastructure.

“With Training Cluster as a Service, powered by DGX Cloud, companies will be able to leverage their unique data for Hugging Face to create uniquely efficient models in record time,” Nvidia added.

The service is expected to launch in the “coming months.”

Nvidia Launches Free AI Workbench Toolkit To Customize Generative AI Models

Nvidia revealed a free toolkit that will let developers create, test and customize pre-trained generative AI models on a PC or workstation and then scale them to any data center or cloud infrastructure.

Called Nvidia AI Workbench, the toolkit provides a “simplified interface” that eliminates the busywork of “hunting through multiple online repositories for the right framework, tools and containers” that can bog down developers when fine-tuning AI models, according to Nvidia.

The toolkit simplifies AI model customization by giving developers easy access to enterprise-grade models, frameworks, software development kits and libraries from open-source repositories.

Nvidia said several AI infrastructure providers, including Dell Technologies, Hewlett Packard Enterprise, HP Inc., Lambda, Lenovo and Supermicro, plan to offer Nvidia AI Workbench in the latest generation of their multi-GPU-capable desktop workstations, mobile workstations and virtual workstations.

Nvidia AI Enterprise 4.0 Comes With New LLM, Kubernetes Features

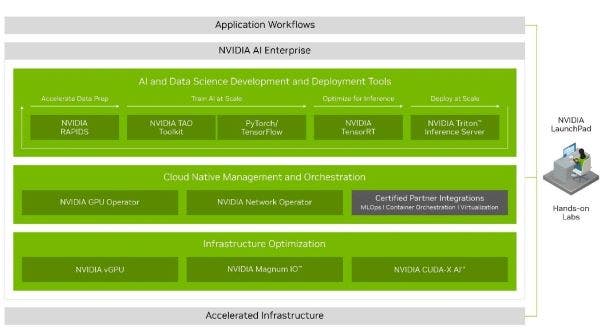

Nvidia is rolling out a new version of its Nvidia AI Enterprise software suite with new features for creating and customizing large language model applications and deploying AI models in Kubernetes containers.

Launched in 2021, Nvidia AI Enterprise gives organizations the AI frameworks, pre-trained models and tools they need, among other components, to develop and deploy AI applications.

The fresh features in Nvidia AI Enterprise 4.0 include Nvidia NeMo, the company’s cloud-native framework for building, customizing and deploying large language models for a variety of generative AI use cases such as rendering images, videos and 3-D models from text prompts.

The new version will also include Nvidia Triton Management Service, which automates the process of deploying AI models through multiple Triton Inference Server instances in Kubernetes clusters to optimize performance and hardware utilization.

Another incoming feature is Nvidia Base Command Manager Essentials, which is cluster management software that helps organizations “maximize performance and utilization of AI servers across data center, multi-cloud and hybrid-cloud environments.”

Nvidia AI Enterprise is certified to run on Nvidia-certified systems, Nvidia DGX systems, major cloud platforms and the newly announced Nvidia RTX workstations, according to the company.

The software suite has been integrated into services from ServiceNow, Snowflake and Dell Technologies as well as the partner marketplaces of Amazon Web Services, Google Cloud and Microsoft Azure. Integration is also coming to several MLOps tools, including Microsoft’s Azure Machine Learning, ClearML, Domino Data Lab, Run:AI and Weights & Biases.

Nvidia Reveals New RTX Workstation GPUs

Nvidia announced three new Nvidia RTX desktop GPUs that use the company’s Ada Lovelace architecture to power heavy-duty applications for AI, graphics and real-time rendering.

The new GPUs are the RTX 5000, RTX 4500 and RTX 4000. Each includes CUDA cores for computationally complex workloads like scientific simulations, third-generation RT Cores for graphics-heavy workloads and fourth-generation Tensor Cores for AI training and inference.

The RTX 5000 comes with 32GB of GDDR6 memory while the RTX 4500 has 24GB of GDDR6 memory and the RTX 4000 has 20GB of GDDR6 memory. They also come with support for high-resolution augmented reality and virtual reality devices.

The RTX 5000 is available now in desktop PCs from HP Inc. as well as distributors like Leadtek, PNY and Ryoyo Electro. The RTX 4500 and RTX 4000 will be available this fall in systems from Boxx, Dell Technologies, HP and Lenovo as well as through global distributors.

Nvidia also announced that PC workstations with up to four RTX 6000 GPUs, along with its Nvidia AI Enterprise and Nvidia Omniverse Enterprise software, will be available from system builders, including Boxx, Dell, HP and Lenovo, starting this fall.

Nvidia Doubles Down On OpenUSD 3-D Framework With Omniverse Platform

Nvidia said it’s bringing new features to its Omniverse 3-D application development platform to accelerate the “creation of virtual worlds and advanced workflows for industrial digitalization.”

In what Nvidia is calling a “major release,” the platform will soon include Omniverse Kit Extension Registry, a central repository for accessing, sharing and managing extensions that makes it easier for developers to build custom apps.

Omniverse will also receive new application and experience templates to help developers get started with Omniverse and OpenUSD, the open 3-D framework that lets Omniverse connect with 3-D applications from other organizations.

New rendering optimizations are coming to the platform, too, which will let Omniverse take advantage of enhancements in the latest Nvidia RTX GPUs. These optimizations include the integration of Nvidia’s AI-based upscaling technology, DLSS 3, into the Omniverse RTX Renderer as well as a new AI denoiser for enabling “real-time 4K path tracing of massive industrial scenes.”

The new release of Omniverse will also receive new extended reality developer tools and updates to applications such as Omniverse USD Composer, which lets users create large-scale, OpenUSD-based scenes, and Omniverse Audio2Face, which creates facial animations and gestures from audio.

In addition to the new features, Nvidia said it was rolling out a “broad range of frameworks, resources and services for developers and companies to accelerate the adoption” of OpenUSD, which stands for Universal Scene Description. It also announced new Omniverse Cloud APIs to help developers “seamlessly implement and deploy OpenUSD pipelines and applications.”

“Just as HTML ignited a major computing revolution of the 2D internet, OpenUSD will spark the era of collaborative 3D and industrial digitalization,” said Jensen Huang, founder and CEO of Nvidia.