8 Big Announcements At Nvidia’s GTC 2023: From Generative AI Services to New GPUs

At Nvidia’s GTC 2023 event, the chip designer revealed new cloud services meant to help enterprises build generative AI and metaverse applications as well as new GPUs, systems and other components. CRN outlines the biggest announcements that will open new opportunities for partners.

New Cloud Services, GPUs And More

Nvidia unveiled new cloud services aimed at helping enterprises build generative AI and metaverse applications alongside new GPUs, systems and other components at the chip designer’s GTC 2023 event.

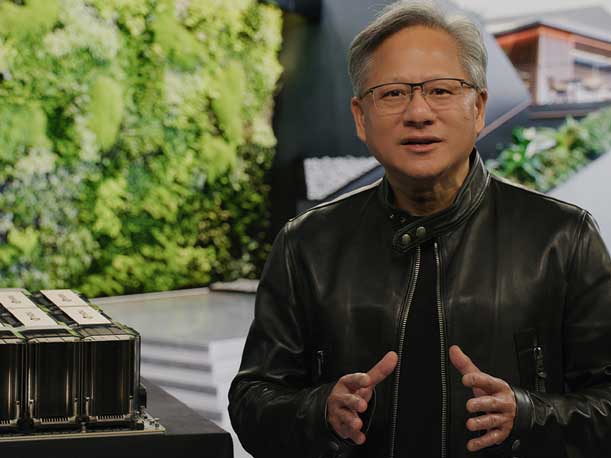

Jensen Huang, the company’s CEO and co-founder, used his Tuesday keynote to position Nvidia as the preeminent provider of solutions for accelerated computing needs, including the massively popular category of generative AI, and said that traditional CPUs aren’t well-suited for these increasingly demanding workloads, both from a performance and efficiency perspective.


He claimed that Moore’s law is slowing down and coming to an end, referring to the observation, made by Intel co-founder Gordon Moore and long championed by the rival chipmaker, that the number of transistors on an integrated circuit will double every two years.

“As Moore’s Law ends, increasing CPU performance comes with increased power. And the mandate to decrease carbon emissions is fundamentally at odds with the need to increase data centers,” Huang said. “Cloud computing growth is power-limited. First and foremost, data centers must accelerate every workload. Acceleration will reclaim power. The energy saved can fuel new growth.”

While multiple delays of Intel’s latest chip manufacturing technologies over the past several years have raised questions about the staying power of Moore’s law, Intel CEO Pat Gelsinger has said that his company can “maintain or even go faster than Moore’s law for the next decade.”

Nvidia announced several new products and services at GTC 2023 to expand the applications enabled by GPUs, improve GPU performance and make GPU computing more accessible. These included the DGX Cloud AI supercomputing service, the AI Foundations services for custom generative AI applications, the L4 and H100 NVL specialized GPUs, and the Omniverse Cloud platform-as-a-service. The company also disclosed more details about its Arm-based Grace and Grace Hopper superchips and said it has begun production of its BlueField-3 data processing unit.

What follows is a roundup of eight big announcements made at Nvidia’s GTC 2023 that will create new opportunities for channel partners. They include the new products and services as well as the cloud service providers and server vendors that are backing them.

Nvidia Launches DGX Cloud With Support From Oracle, Microsoft And Others

After teasing DGX Cloud at Nvidia’s last earnings call, the GPU giant marked the launch of the new AI supercomputing service at GTC 2023 with initial availability from Oracle Cloud Infrastructure.

DGX Cloud is a service designed to give enterprises quick access to the tools and infrastructure they need to train deep learning models for generative AI and other applications. It does this by combining Nvidia’s DGX supercomputers and AI software and making them available in a web browser through instances hosted by cloud service providers.

“We are at the iPhone moment of AI. Startups are racing to build disruptive products and business models while incumbents are looking to respond. Generative AI has triggered a sense of urgency in enterprises worldwide. To develop AI strategies, customers need to access Nvidia AI easier and faster,” Nvidia CEO Jensen Huang said during his GTC 2023 keynote.

DGX Cloud instances are available now from Oracle Cloud Infrastructure. The service is expected to land at Microsoft Azure in the third quarter, and Nvidia said it will “soon expand to Google Cloud and more.”

At the software layer, DGX Cloud includes Nvidia’s Base Command platform, which manages and monitors training workloads, and it lets users right-size the infrastructure for what they need. The service also includes access to Nvidia AI Enterprise, a software suite that includes AI frameworks, pretrained models and tools for developing and deploying AI applications, among other components.

At launch, each DGX Cloud instance will include eight of the A100 80GB GPUs, which were introduced in late 2020. The eight A100s combined bring the node’s total GPU memory to 640GB. The monthly cost for an A100-based instance will start at $36,999, with discounts available for long-term commitments.

Nvidia plans to make available DGX Cloud instances with Nvidia’s H100 80GB GPU at some point in the future.

New Services Help Enterprises Build Generative AI Models From Proprietary Data

Nvidia revealed a new umbrella of AI cloud services, collectively known as Nvidia AI Foundations, that are designed to help enterprises build and run custom large language models and generative AI models using proprietary data sets.

The services, which run on Nvidia’s new DGX Cloud AI supercomputing service, come with pretrained models, frameworks for data processing, vector databases and personalization features, optimized inference engines, APIs and enterprise support.

Nvidia NeMo is a large language model customization service that includes methods for businesses to extract proprietary data sets and use them for generative AI applications such as chatbots, enterprise search and customer service. NeMo is available in early access.

Nvidia Picasso is focused on generating AI-powered images, videos and 3D models from text prompts for creative, design and simulation applications. The service allows enterprises to train Nvidia’s Edify foundation models on proprietary data, and it also comes with pretrained Edify models that are based on fully licensed data sets. Picasso is available in preview mode.

New Specialized Data Center GPUs For AI, Graphics

Nvidia expanded its portfolio of data center GPUs with products that are specialized for AI-powered video performance and large language models.

Nvidia is pitching the L4 GPU, which is designed for AI-powered video applications, as much faster and more energy efficient than CPUs for those workloads. Compared to a dual-socket server with two Intel Xeon Platinum 8380s, from 2021’s third generation of Xeon Scalable processors, a server with eight L4 GPUs is 120 times faster and 99 percent more energy efficient, according to Nvidia. The GPU is built for video decoding and transcoding, video streaming, augmented reality, AI-generated video and other video workloads.

Google Cloud has launched a private preview of L4-powered instances with the G2 virtual machines. The GPU is also available in systems from more than 30 vendors, including ASUS, Atos, Cisco Systems, Dell Technologies, Gigabyte, Hewlett Packard Enterprise, Lenovo and Supermicro.

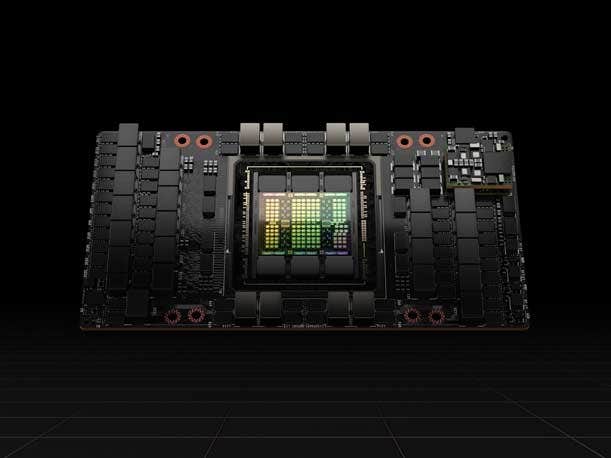

The company also revealed the H100 NVL, which combines two H100 PCIe cards and connects them with an NVLink bridge. Designed for running inference at scale on large language models like the popular ChatGPT, the GPU comes with 94GB of memory and, thanks to Hopper’s Transformer Engine, provides up to 12 times faster inference performance for the GPT-3 model compared to the A100.

The H100 NVL is expected to launch in the second half of the year.

Grace, Grace Hopper Superchips: New Details, Delayed To Late 2023

Nvidia used GTC 2023 to highlight how its CPU-based Grace superchip and its Grace Hopper superchip, which combines a CPU and GPU, will accelerate new workloads.

The company also disclosed that while the two chip modules are now sampling with customers, it has delayed their availability within systems to the second half of the year after promising multiple times in 2022 that systems would arrive in the first half of 2023.

Server vendors expected to support the Grace superchip include ASUS, Atos, Gigabyte, Hewlett Packard Enterprise, QCT, Supermicro, Wistron and ZT Systems.

While Nvidia CEO Jensen Huang emphasized at GTC 2023 that traditional CPUs aren’t well suited for many accelerated computing applications, he said the company designed the Grace CPU, which consists of 72 Arm-compatible cores, to “excel at single-threaded execution and memory processing” and provide “high energy efficiency at cloud data center.” These facets make the CPU “excellent for cloud and scientific computing applications,” Huang added.

The Grace superchip combines two Grace CPUs to provide a total of 144 cores, which are connected over a 900 GB/s low-power chip-to-chip coherent interface. For memory, the superchip uses “server-class” LPDDR5X, which Nvidia said makes it the first data center CPU to do so.

Huang said Nvidia tested Grace on a popular Google benchmark, which measures performance for cloud microservices, as well as a suite of Apache Spark benchmarks that test memory-intensive processing. The CEO called these workloads a “foundation for cloud data centers.”

Compared to the newest generation of x86 CPUs, Grace is, on average, 30 percent faster at microservices and 20 percent faster at data processing while “using only 60 percent of the power measured at the full server node,” according to Huang, who didn’t identify the rival CPU.

“[Cloud service providers] can outfit a power-limited data center with 1.7 times more Grace servers, each delivering 25 percent higher throughput,” he said.
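The figures Huang cited can be roughly reproduced from the power and performance numbers above. The following is a back-of-the-envelope sketch, not Nvidia's methodology: it assumes a fixed data center power budget and throughput that scales linearly with server count, and the variable and function names are purely illustrative.

```python
# Back-of-the-envelope check of the Grace figures quoted by Huang.
# Assumptions (ours, not Nvidia's): the data center has a fixed power budget,
# and total throughput scales linearly with the number of servers.

GRACE_POWER_RATIO = 0.60         # Grace node power relative to the x86 node
MICROSERVICES_SPEEDUP = 1.30     # "30 percent faster at microservices"
DATA_PROCESSING_SPEEDUP = 1.20   # "20 percent faster at data processing"


def servers_per_power_budget(power_ratio: float) -> float:
    """How many more servers fit in the same power budget."""
    return 1.0 / power_ratio


def average_speedup(*speedups: float) -> float:
    """Simple arithmetic mean of the per-workload speedups."""
    return sum(speedups) / len(speedups)


server_multiple = servers_per_power_budget(GRACE_POWER_RATIO)
per_server_speedup = average_speedup(MICROSERVICES_SPEEDUP, DATA_PROCESSING_SPEEDUP)
total_throughput_multiple = server_multiple * per_server_speedup

print(f"Servers per power budget: {server_multiple:.2f}x")
print(f"Average per-server speedup: {per_server_speedup:.2f}x")
print(f"Combined throughput: {total_throughput_multiple:.2f}x")
```

Under these assumptions, a node drawing 60 percent of the power allows roughly 1.67 times as many servers, which is consistent with Huang's rounded 1.7 figure, and averaging the two speedups gives the 25 percent per-server gain. Multiplying the two suggests roughly double the throughput from the same power budget.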

While Nvidia designed the Grace superchip for cloud and scientific computing, it is tailoring the Grace Hopper superchip for “processing giant data sets, like AI databases for recommender systems and large language models,” according to Huang.

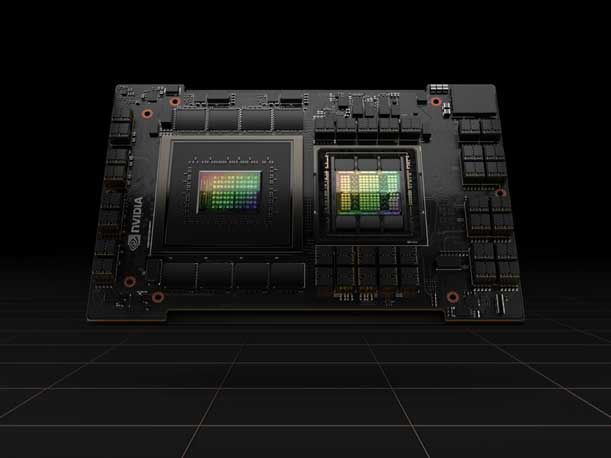

It’s well-suited for these data-heavy applications because of how the Grace Hopper brings together a Grace CPU and a Hopper GPU onto one module and connects them with a high-speed interface. This interface is “seven times faster than PCI Express,” Huang said, which means the superchip is better suited for these applications than a discrete CPU and discrete GPU that communicate over PCIe.

“This is Nvidia’s inference platform, one architecture for diverse AI workloads and maximum data center acceleration and elasticity,” he said.

Availability Expands For H100-Based Services And Systems

Nvidia announced that availability is expanding for products and services running on its powerful, Hopper-based H100 data center GPU for AI training and inference workloads.

First announced last year, the H100 is the successor to the Ampere-based A100 GPU, which debuted in 2020. Thanks to Nvidia’s new Hopper architecture and its built-in Transformer Engine, the H100 is capable of running training workloads nine times faster and inference workloads 30 times faster on large language models compared to the A100, according to Nvidia.

In cloud computing, Nvidia said Oracle Cloud Infrastructure has launched new OCI Compute bare-metal GPU instances with H100 GPUs in limited availability. Amazon Web Services plans its own H100-powered services with the forthcoming EC2 UltraClusters of EC2 P5 instances, which Nvidia said can scale up to 20,000 H100 GPUs. Microsoft Azure last week launched a private preview of H100-based virtual machines with the new ND H100 v5 instance.

H100-based cloud instances are currently available from Cirrascale and CoreWeave. Nvidia said H100-powered instances are coming from Google Cloud, Lambda, Paperspace and Vultr.

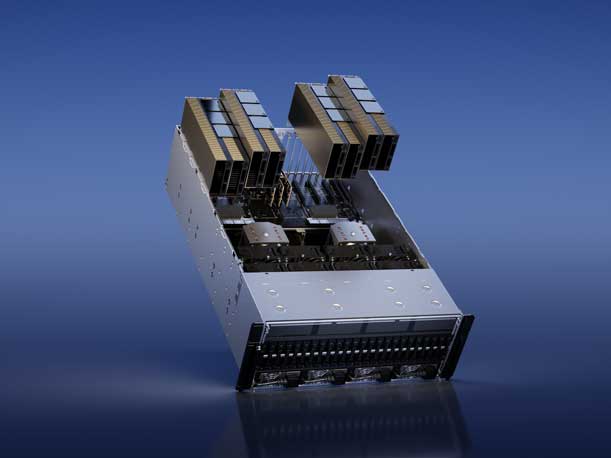

As for on-premises servers, Nvidia said systems using H100 GPUs are now available from Atos, Cisco Systems, Dell Technologies, Gigabyte, Hewlett Packard Enterprise, Lenovo and Supermicro. Nvidia’s DGX H100, which features eight H100 GPUs connected with NVLink interconnects, is also available.

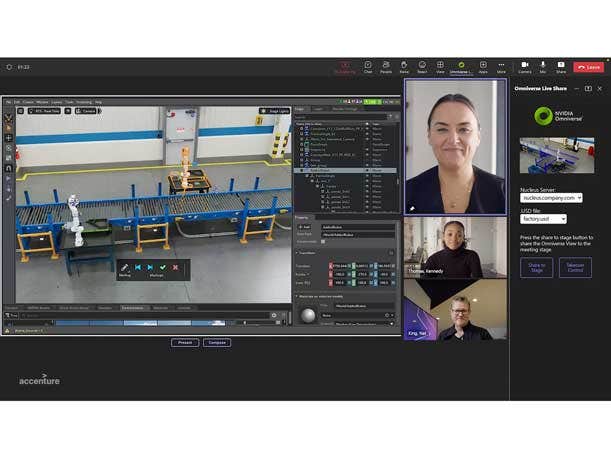

Omniverse Cloud Limited Availability Begins With Microsoft Azure

Nvidia has made available to select enterprises Omniverse Cloud, its new platform-as-a-service for creating and running 3-D internet applications, which the company refers to as the “industrial metaverse.”

Microsoft Azure is the first cloud service provider to offer the subscription-based Omniverse Cloud, which provides access to Nvidia’s full suite of Omniverse software applications on its OVX infrastructure. The chip designer’s OVX servers use its L40 GPU, which is optimized for graphics-heavy applications as well as AI-powered generation of 2-D images, video and 3-D models.

While Nvidia has marketed Omniverse as benefiting a wide swath of industries in the past, the company is focusing its promotion efforts for Omniverse Cloud around the automotive industry, saying it lets design, engineering, manufacturing and marketing teams digitize their workflows.

Applications enabled by Omniverse Cloud include connecting 3-D design tools to speed up vehicle development, creating digital twins of factories to simulate changes in the manufacturing line and performing closed-loop simulations of vehicles in action.

The service will become widely available in the second half of this year.

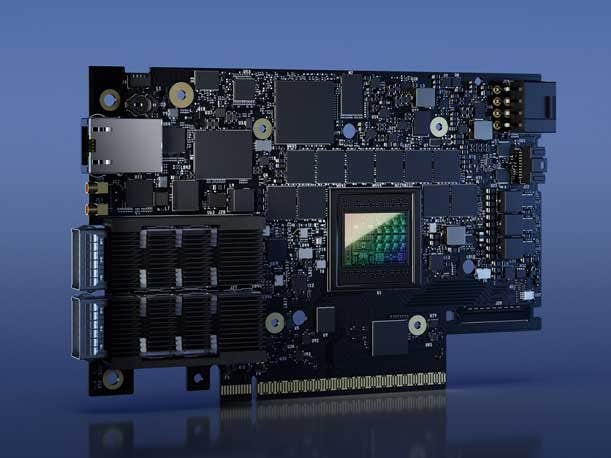

BlueField-3 DPU Now In Production, Adopted By Oracle Cloud

Nvidia announced that its BlueField-3 data processing unit is now in full production and has been adopted by Oracle Cloud Infrastructure into its networking stack.

The BlueField-3 is Nvidia’s third-generation DPU, and it’s designed to offload critical networking, storage and security workloads from the CPU and accelerate them while also enabling new security and hypervisor capabilities. The company said tests have shown that servers with BlueField DPUs reduce power consumption by up to 24 percent compared to systems without the component.

BlueField-3 supports Ethernet and InfiniBand connectivity running up to 400 gigabits per second, and it provides four times faster computing speed, up to four times faster crypto acceleration, two times faster storage processing and four times more memory bandwidth than the BlueField-2.

Beyond Oracle Cloud, BlueField-3 is also being used by Microsoft Azure and CoreWeave. Nvidia said solutions using BlueField DPUs are in development from Check Point Software, Cisco Systems, Dell Technologies, Juniper Networks, Palo Alto Networks, Red Hat and VMware, among others.

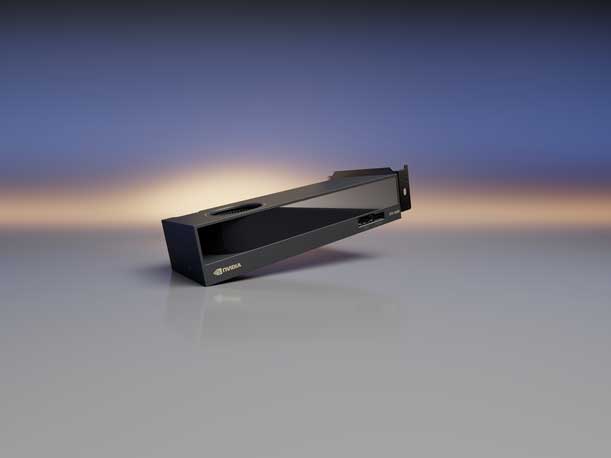

New RTX 4000 GPUs For Laptops, Compact GPU For Workstations

Nvidia revealed six new RTX GPUs for laptops and compact workstations running heavy-duty content creation, design and AI applications.

The company said laptops are coming later this month with the new RTX 5000, RTX 4000, RTX 3500, RTX 3000 and RTX 2000 GPUs, all of which use the same Ada Lovelace architecture that powers Nvidia’s consumer-focused GeForce RTX 40 series graphics cards.

These GPUs come with up to 16GB of memory to handle large data sets, and they can provide up to two times faster single-precision floating point performance, up to two times faster ray tracing performance and up to two times faster AI training performance, compared to the previous generation of Ampere-powered RTX GPUs for workstation applications, according to Nvidia.

Nvidia is enabling powerful compact desktop workstations with the new RTX 4000 Small Form Factor GPU, which comes with the same performance improvements from the Ada Lovelace architecture. The company said global distributors such as PNY and Leadtek will make the compact workstation GPU available for an estimated price of $1,240 starting in April. Workstation vendors are expected to release systems with the new GPU later this year.