AMD Launches Instinct MI300 AI Chips To Challenge Nvidia With Backing From Microsoft, Dell And HPE

The MI300 chips, which are also getting support from Lenovo, Supermicro and Oracle, represent AMD’s biggest challenge yet to Nvidia’s AI computing dominance. AMD claims that the MI300X GPUs, which are available in systems now, come with better memory and AI inference capabilities than Nvidia’s H100.

AMD said its newly launched Instinct MI300X data center GPU exceeds Nvidia’s flagship H100 chip in memory capabilities and surpasses it in key AI performance metrics.

While the company said the chip provides the same training performance as the H100 for a large language model, it said the MI300X’s improved memory capabilities will enable big cost savings compared with its rival.

The chip designer announced the launch of the Instinct MI300X alongside its CPU-GPU hybrid chip, the Instinct MI300A data center APU, at a Thursday event in San Jose, Calif., in its biggest challenge yet to Nvidia’s dominance of the AI computing space.

“It’s the highest performance accelerator in the world for generative AI,” AMD CEO Lisa Su said of the MI300X in her keynote at the event.

The MI300X will go in servers from several OEMs, including Dell Technologies, Hewlett Packard Enterprise, Lenovo and Supermicro. Dell said its MI300X servers are available today while other vendors are expected to release their designs over the next several months.

The third generation in AMD’s Instinct data center GPU family, the MI300X will also power upcoming virtual machine instances from Microsoft Azure and bare metal instances from Oracle Cloud Infrastructure.

Other cloud service providers planning to support the MI300X include Aligned, Akron Energy, Cirrascale, Crusoe and Denvr Dataworks.

The MI300A, on the other hand, will go into servers arriving next year from HPE, Supermicro, Gigabyte and Atos subsidiary Eviden.

AMD also announced the release of its ROCm 6 GPU programming platform, which it promotes as an “open” alternative to Nvidia’s CUDA platform.

The latest version of the platform comes with advanced optimizations for large language models, updated libraries to improve performance and expanded support for a variety of frameworks, AI models and machine learning pipelines.

The chip designer launched the new Instinct chips as Nvidia experiences significant demand for its GPUs to power AI workloads, particularly large language models and other types of generative AI models.

High demand for Nvidia’s most powerful GPUs such as the H100 has resulted in shortages, prompting businesses to look for alternatives, including those from the AI chip giant’s rivals.

Instinct MI300X Specs And Performance Metrics

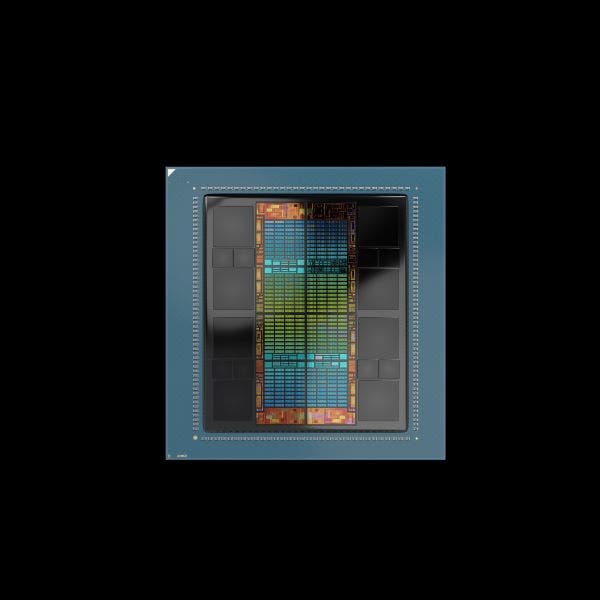

The Instinct MI300X is based on the CDNA 3 architecture, the third generation of AMD’s GPU architecture purpose-built for AI and HPC workloads in the data center.

But while AMD is putting a greater emphasis on workloads at the convergence of HPC and AI for the MI300A, the company believes the MI300X will have greater appeal for training and running inference on large language models such as Meta’s open-source Llama 2 family.

It comes with 192GB of HBM3 high-bandwidth memory, which is 2.4 times higher than the 80GB HBM3 capacity of Nvidia’s H100 SXM GPU from 2022. It’s also higher than the 141GB HBM3e capacity of Nvidia’s recently announced H200, which lands in the second quarter of next year.

The MI300X’s memory bandwidth is 5.3 TB/s, which is 60 percent higher than the 3.3 TB/s bandwidth of the H100 and also greater than the 4.8 TB/s bandwidth of the H200.
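The memory comparisons above are simple ratios, and a short sketch makes them easy to check. The figures below are the ones quoted in this article; the variable names are illustrative only and not taken from AMD or Nvidia material.

```python
# Memory figures as quoted in the article (capacity in GB, bandwidth in TB/s).
mi300x_mem_gb = 192
h100_mem_gb = 80
h200_mem_gb = 141

mi300x_bw_tbs = 5.3
h100_bw_tbs = 3.3

# Capacity ratios: MI300X versus H100 and versus H200.
capacity_vs_h100 = mi300x_mem_gb / h100_mem_gb
capacity_vs_h200 = mi300x_mem_gb / h200_mem_gb

# Bandwidth ratio: MI300X versus H100, which the article rounds to "60 percent higher".
bandwidth_vs_h100 = mi300x_bw_tbs / h100_bw_tbs

print(f"Capacity vs H100:  {capacity_vs_h100:.2f}x")
print(f"Capacity vs H200:  {capacity_vs_h200:.2f}x")
print(f"Bandwidth vs H100: {bandwidth_vs_h100:.2f}x")
```

The capacity ratio against the H100 works out to exactly 2.4, and the bandwidth ratio to roughly 1.6, in line with the percentages AMD cited.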

While the SXM form factor of the H100 requires 700 watts of power, the MI300X is slightly more demanding, with a power envelope of 750 watts.

In terms of HPC performance, AMD said the MI300X can achieve up to 163.4 teraflops for matrix operations with double-precision floating point math, also known as FP64. For FP64 vector operations, the chip can hit 81.7 teraflops. These figures represent a 2.4-fold increase over Nvidia’s H100.

For single-precision floating point math, also known as FP32, the MI300X can hit 163.4 teraflops for both matrix and vector operations. The chip’s vector performance is 2.4 times greater than what the H100 can achieve, according to AMD. It added that the H100 is not capable of FP32 tensor operations, so there is no comparison for matrix operations.

As for key AI performance metrics, AMD said the MI300X is 30 percent faster than the H100 for TensorFloat-32 or TF32 (653.7 teraflops), half-precision floating point or FP16 (1307.4 teraflops), brain floating point or BFLOAT16 (1307.4 teraflops), 8-bit floating point or FP8 (2614.9 teraflops), and 8-bit integer or INT8 (2614.9 teraflops).

When it comes to performance for the most common large language model kernels, the MI300X is slightly faster than the H100, according to AMD.

For the kernels of Meta’s 70-billion-parameter Llama 2 model, the MI300X is 20 percent faster for the medium kernel and 10 percent faster for the large kernel. For Flash Attention 2, the MI300X is 10 percent faster for the medium kernel and 20 percent faster for the large kernel.

“What this means is the performance at the kernel level actually directly translates into faster results,” Su said.

Instinct MI300X Platform And System-Level Performance

AMD plans to make the MI300X available to OEMs in the Instinct MI300X Platform, which will consist of eight MI300X chips and provide roughly 10.4 petaflops of peak FP16 or BF16 performance, 1.5TB of HBM3 capacity and about 896 GB/s of Infinity Fabric bandwidth.

That gives the MI300X Platform 2.4 times greater memory capacity, 30 percent more compute power and similar bi-directional bandwidth compared to Nvidia’s H100 HGX platform, according to AMD.
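The platform-level figures follow from multiplying the per-chip numbers quoted earlier by eight. The sketch below reproduces that arithmetic; it assumes simple linear scaling of peak figures, and the 8 x 80GB total for the H100 HGX is derived here from the per-GPU capacity in this article rather than quoted from Nvidia.

```python
GPUS_PER_PLATFORM = 8

# Per-chip figures quoted in the article.
mi300x_fp16_tflops = 1307.4
mi300x_hbm3_gb = 192
h100_hbm3_gb = 80

# Aggregate peak FP16/BF16 compute, converted from teraflops to petaflops.
platform_pflops = GPUS_PER_PLATFORM * mi300x_fp16_tflops / 1000

# Aggregate memory, in GB and in TB (using 1 TB = 1024 GB).
platform_hbm_gb = GPUS_PER_PLATFORM * mi300x_hbm3_gb
platform_hbm_tb = platform_hbm_gb / 1024

# Assumed H100 HGX total: eight GPUs at 80GB each.
hgx_hbm_gb = GPUS_PER_PLATFORM * h100_hbm3_gb
capacity_ratio = platform_hbm_gb / hgx_hbm_gb

print(f"Peak FP16/BF16: {platform_pflops:.2f} petaflops")
print(f"HBM3 capacity:  {platform_hbm_gb} GB ({platform_hbm_tb:.1f} TB)")
print(f"Capacity vs assumed H100 HGX: {capacity_ratio:.1f}x")
```

The totals land at about 10.46 petaflops and 1,536GB, which matches the rounded "roughly 10.4 petaflops" and "1.5TB" in AMD's description.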

The MI300X Platform also supports PCIe Gen 5 with a bandwidth of up to 128 GB/s, a maximum of 400 gigabit Ethernet and 440 GB/s of single node ring bandwidth, which the company said is comparable to the H100 HGX platform.

For training the 30-billion-parameter MPT model, AMD said the MI300X Platform offers the same throughput as the H100 HGX in tokens per second.

But for inference performance of large language models on a single eight-GPU server, the MI300X offers a clearer advantage against the H100, AMD said.

For the 176-billion-parameter Bloom model, the chip offers 60 percent higher throughput. For the 70B Llama 2 model, it offers 40 percent lower chat latency.

While Su said the MI300X Platform offers the same throughput as the H100 HGX for training a large language model like the 30B MPT model, its real advantage comes from improved memory capabilities.

Higher memory capacity and bandwidth allow the MI300X Platform to run twice as many models and double the model size compared to the H100 HGX platform, according to Su.
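A rough way to see why capacity matters is to estimate how many GPUs are needed just to hold a model's weights. The sketch below is a back-of-the-envelope calculation, not AMD's methodology: it assumes 2 bytes per parameter (FP16 or BF16 weights), uses decimal gigabytes, and ignores the KV cache, activations and framework overhead, all of which raise real-world requirements. The function names are hypothetical.

```python
import math

BYTES_PER_PARAM_FP16 = 2  # assumption: weights stored in FP16/BF16


def weights_gb(params_billions, bytes_per_param=BYTES_PER_PARAM_FP16):
    """Approximate weight footprint in decimal GB (billions of params x bytes each)."""
    return params_billions * bytes_per_param


def min_gpus_for_weights(params_billions, gpu_mem_gb):
    """Lower bound on GPUs needed to hold the weights alone."""
    return math.ceil(weights_gb(params_billions) / gpu_mem_gb)


# Models named in the article, against per-GPU capacities quoted in the article.
for name, params_b in [("Llama 2 70B", 70), ("Bloom 176B", 176)]:
    on_mi300x = min_gpus_for_weights(params_b, 192)
    on_h100 = min_gpus_for_weights(params_b, 80)
    print(f"{name}: ~{weights_gb(params_b)} GB of weights, "
          f"at least {on_mi300x} MI300X or {on_h100} H100")
```

Under these assumptions, the 70B model's weights fit on a single 192GB GPU but need at least two 80GB GPUs, which illustrates the kind of consolidation behind the capital expenditure argument.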

“This directly translates into lower [capital expenditures], and especially if you don’t have enough GPUs, this is really, really helpful,” Su said.

AMD said the platform is an industry-standard design that will allow OEMs to “design-in MI300X accelerators into existing AI offerings and simplify deployment and accelerate adoption of AMD Instinct accelerator-based servers.”

Instinct MI300A Specs And Performance Metrics

Shipping in volume today, the Instinct MI300A is what AMD is calling the “world’s first data center APU for HPC and AI.” APU is the company’s nomenclature for a chip that combines CPU cores and GPU cores on the same die, a design that has been limited to processors for PCs until now.

The MI300A contains the same x86-based Zen 4 cores that power AMD’s latest Ryzen and EPYC processors. Those cores are combined with GPU cores based on AMD’s new CDNA 3 architecture as well as 128GB of HBM3 memory, which is 60 percent higher than the H100’s 80GB HBM3 capacity but lower than the 141GB HBM3e capacity of the H200 arriving next year.

The chip’s memory bandwidth is the same as the MI300X at 5.3 TB/s.

Server vendors can configure the MI300A’s thermal design power between 550 watts and 760 watts. The H100, by contrast, is rated at 700 watts.

In terms of HPC performance, AMD said the MI300A can achieve up to 122.6 teraflops for FP64 matrix operations. For FP64 vector operations, the chip can hit 61.3 teraflops. These figures represent an 80 percent increase over the H100, according to AMD.

For FP32 math, the MI300A can hit 122.6 teraflops for both matrix and vector operations. The chip’s vector performance is 80 percent higher than the H100, according to AMD.

As for AI performance, AMD said the MI300A comes close to, but falls slightly short of, the H100 for TF32 (490.3 teraflops), FP16 (980.6 teraflops), BFLOAT16 (980.6 teraflops), FP8 (1961.2 teraflops), and INT8 (1961.2 teraflops).

But while the MI300A has less GPU compute power than the MI300X, AMD said it shines when it comes to energy efficiency, shared memory between the chip’s CPU and GPU cores, an easily programmable GPU platform and “fast AI training.”
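The gap in GPU compute between the two chips can be read straight off the peak figures quoted in this article. The sketch below divides each MI300A number by its MI300X counterpart; the dictionary layout and names are illustrative, and the comparison covers peak throughput only.

```python
# Peak teraflops quoted in the article, keyed by data type.
mi300x_tflops = {
    "FP64 matrix": 163.4,
    "FP64 vector": 81.7,
    "TF32": 653.7,
    "FP16": 1307.4,
    "FP8": 2614.9,
}
mi300a_tflops = {
    "FP64 matrix": 122.6,
    "FP64 vector": 61.3,
    "TF32": 490.3,
    "FP16": 980.6,
    "FP8": 1961.2,
}

# Ratio of MI300A to MI300X peak throughput for each data type.
ratios = {
    dtype: mi300a_tflops[dtype] / mi300x_tflops[dtype]
    for dtype in mi300x_tflops
}

for dtype, r in ratios.items():
    print(f"{dtype:12s} MI300A / MI300X = {r:.3f}")
```

Across every data type listed, the MI300A's peak figure comes out at about three-quarters of the MI300X's.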

“The key advantage of the APU is no longer needing to copy data from one processor to another because the memory is unified, both in the RAM as well as in the cache,” said Forrest Norrod, executive vice president and general manager of AMD’s data center solutions business unit.

AMD demonstrated the MI300A’s energy efficiency by comparing it to Nvidia’s GH200 Grace Hopper Superchip, which combines an Arm-based CPU with Nvidia’s H200 GPU on a module. The company said the MI300A offers two times greater peak HPC performance per watt.

“The second advantage is the ability to optimize power management between the CPU and the GPU. That means dynamically shifting power from one processor to another, depending on the needs of the workload, optimizing application performance,” Norrod said.

The company also showed the benefit of combining unified memory with high memory bandwidth and GPU performance using the HPC benchmark OpenFOAM, on which the MI300A, configured at 550 watts, delivers four times the performance of the H100.

Across other HPC benchmarks, the 550-watt MI300A is 20 percent faster on MINI-NBODY, 10 percent faster on HPCG and 10 percent faster on GROMACS, according to AMD.

AMD did not provide details on the platform OEMs will use to integrate MI300A chips into servers.