IBM: Power10 CPU’s ‘Memory Inception’ Is Industry’s ‘Holy Grail’

Big Blue reveals its new 7nm Power10 server CPU, which enables servers to share multiple petabytes of memory. ‘Instead of having to put a lot of memory into every box, I could actually plug in a more reasonable amount of memory in each box, saving lots and lots of infrastructure costs,’ the chip’s lead architect said.

IBM has revealed its 7-nanometer Power10 processor for hybrid cloud workloads, bringing a feature that its lead architect is calling “a holy grail of the industry”: the ability to pool petabytes of memory between multiple servers, potentially enabling significant cost savings for data centers.

Armonk, N.Y.-based Big Blue said the server processor, announced Monday, provides up to three times greater energy efficiency, workload capacity and container density than Power9. Beyond its new “Memory Inception” feature for multi-petabyte memory clusters, the upcoming chip also comes with new memory encryption and artificial intelligence capabilities.

Power10-based servers are expected to become available in the second half of 2021, IBM said. That would put IBM ahead of Intel in releasing a 7nm server processor, though Intel has previously said its 10nm process is similar to 7nm nodes used by IBM and AMD, and its first 10nm server processors are set to begin shipping by the end of this year. AMD, on the other hand, has had 7nm server processors on the market for roughly a year.

IBM said its Memory Inception feature allows multiple Power10-based systems to share multiple petabytes of memory, which can improve cloud capacity and economics for memory-intensive applications from vendors like SAP and SAS Institute, as well as inferencing for large-scale AI models.

Bill Starke, distinguished engineer and Power10 processor architect, said Memory Inception essentially allows the Power10 processor to “trick a system into thinking that memory in another system belongs to this system,” and it has significantly lower latency than traditional methods like remote direct memory access over Mellanox InfiniBand interconnects.

“This is an extremely low latency way to basically use just the speed of electricity through a cable and nothing more in your way to get to other systems’ memories,” he said. “And what this really means [is] that this has kind of been a holy grail of the industry.”

Starke said Memory Inception has major implications for server costs, particularly because the ability to pool memory among several systems means each rack doesn’t need to be overloaded with memory.

“The benefit of this is kind of two-fold, especially in a cloud setting where you’re at a massive scale,” he said. “Instead of having to put a lot of memory into every box so that I can make sure I could meet the peak demands of that box, I could actually plug in a more reasonable amount of memory in each box, saving lots and lots of infrastructure costs. And then, in the case where I have a spike [in] demand for memory, I can borrow that memory from my neighbors.”
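Starke’s provisioning argument can be put in rough numbers. The sketch below is illustrative only: the four-box cluster, the memory sizes and the function names are hypothetical and do not come from IBM. It compares sizing every box for its own peak against installing a smaller amount per box and letting a spiking box borrow from its neighbors.

```python
# Illustrative sketch only: cluster size, memory figures and function
# names are hypothetical assumptions, not IBM data or an IBM API.

def standalone_capacity(peaks_gb):
    """Total memory needed when every box is sized for its own peak."""
    return sum(peaks_gb)


def fits_in_pool(installed_gb, demands_gb):
    """True if the cluster's combined memory covers the combined demand."""
    return sum(demands_gb) <= sum(installed_gb)


def borrowed(installed_gb, demands_gb):
    """Memory each box must borrow from neighbors to meet its demand."""
    return [max(0, d - i) for i, d in zip(installed_gb, demands_gb)]


# Four boxes that can each spike to 1,024 GB but usually need 256 GB.
peaks = [1024, 1024, 1024, 1024]
installed = [512, 512, 512, 512]      # "a more reasonable amount" per box
demands = [1024, 256, 256, 256]       # one box spikes, the others idle

print(standalone_capacity(peaks))     # memory bought without pooling
print(sum(installed))                 # memory bought with pooling
print(fits_in_pool(installed, demands))
print(borrowed(installed, demands))
```

Under these assumed figures the pooled cluster installs half the memory of the peak-sized one and still covers a single spike, which is the trade-off Starke describes. The savings shrink if several boxes spike at once.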

Memory Inception can also enable new kinds of workloads that require massive amounts of memory that normally wouldn’t fit into one server box, according to Starke.

“What if I want one computer to talk to a petabyte of memory? Well, nobody knows how to build that today,” he said. “With this, by clustering things I could actually get to huge amounts of memory and enable whole new kinds of workloads to run on the computers in my cloud and actually provide that as a service to people.”

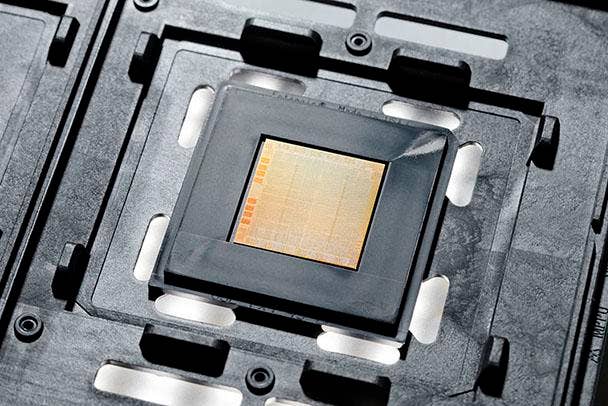

The Power10 processor will come in two types. The first is a 602-square-millimeter single-chip module with 18 billion transistors that runs at more than 4.0 GHz and packs up to 15 cores and 120 simultaneous threads, thanks to each core’s ability to handle eight threads. The second is a 1,204-square-millimeter dual-chip module with 36 billion transistors that runs at more than 3.5 GHz and packs up to 30 cores and 240 threads.
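The thread counts follow directly from the core counts and the eight threads each core can handle. A short sketch of that arithmetic, using only the figures quoted above (the variable and function names are for illustration):

```python
# Figures are taken from the article; names are illustrative.
THREADS_PER_CORE = 8  # each core handles eight simultaneous threads


def max_threads(cores, threads_per_core=THREADS_PER_CORE):
    """Maximum simultaneous hardware threads for a module."""
    return cores * threads_per_core


modules = {"single-chip module": 15, "dual-chip module": 30}

for name, cores in modules.items():
    print(f"{name}: {cores} cores -> {max_threads(cores)} threads")
```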

Both chips will support PCIe 5.0 connectivity, with the single-chip module supporting 32 lanes and the dual-chip module supporting 64 lanes. For memory, the chips are capable of gigatransfers per second, and they will be able to support a seamless transition from DDR4 to DDR5, thanks to their technology-agnostic support. They will also come with up to 120 MB in L3 cache, an SMP interconnect to support up to 16 sockets and an OpenCAPI interface for directly connecting the CPU to accelerators.

The Power10’s other significant feature is a set of new components that can run AI inference workloads anywhere from 10 to 20 times faster than the Power9.

Power10 also comes with new security features like new AES encryption engines as well as transparent memory encryption, which comes with no performance impact and enables systems to protect containers from each other within the same virtual machine.

The Power10 is IBM’s first commercialized processor made using Samsung’s 7nm manufacturing node as part of a long-term strategic partnership for process technology research and development. The company’s previous processor, Power9, is based on GlobalFoundries’ 14nm process.

“Moving to seven nanometers obviously gives us a lot more efficiency and a lot of the capabilities and the density in the chip design that enables us to build the capabilities in here,” said Steve Sibley, vice president of Power offering management for IBM Power.

Patrick Moorhead, president and principal analyst at Moor Insights and Strategy, said Power processors are “getting a lot of traction and attention” with large IBM customers as well as organizations that have big data and machine learning needs. To him, Power10’s Memory Inception feature could be a game changer that convinces more hyperscalers to adopt the platform.

“It changes the calculus because you could actually save an incredible amount of money if you’re a hyperscaler with shared multi-tenant workloads,” he said.