5 Cool Chip Solutions For Edge AI

For the massive number of IoT devices coming online, processing AI workloads at the edge is better than the cloud for providing near-real-time inference and response times in addition to slashing data transport and processing costs and ensuring data privacy and security, Deloitte recently said. CRN rounds up five cool chip solutions that are making edge AI a reality.

As the hype around generative AI has created new demand for powerful chips in data centers, the need for smaller and more efficient chips remains strong as organizations plan to spend larger sums of money on IoT deployments that will require edge AI processing capabilities.

Last month, research firm Gartner said increased use of AI-based applications in edge infrastructure and endpoint devices is a significant driver in its estimate that AI chip revenue will grow 20.9 percent this year to $53.4 billion. Sales are expected to expand further, by 25.6 percent, to $67.1 billion in 2024.

[Related: AMD Acquires Mipsology To Ramp Up AI Inference Rivalry With Nvidia]

For the massive number of IoT devices coming online, processing AI workloads at the edge is better than the cloud for providing near-real-time inference and response times in addition to slashing data transport and processing costs and ensuring data privacy and security, senior staff at global consulting firm Deloitte wrote in a recent article for The Wall Street Journal.

“By distributing the cloud’s scalable and elastic computing capabilities closer to where devices—and users—generate and consume data in the physical world, leading-edge technologies such as 5G, edge computing, and computer vision can enable enterprises to modernize applications across operational sites and help to enhance customer experience, operational efficiency, and productivity,” they said.

As part of CRN’s IoT Week 2023, here are five cool chip solutions for edge AI applications from leading and emerging vendors in the semiconductor space: AMD, Axelera AI, Intel, Nvidia and SiMa.ai.

AMD

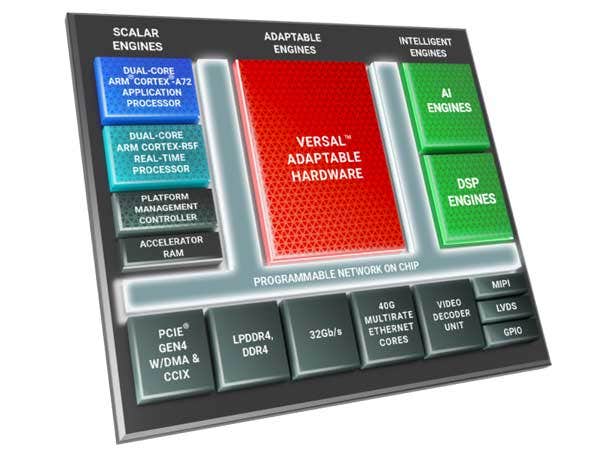

AMD is tackling edge AI opportunities with its Versal AI Edge system-on-chips, which are designed to provide competitive performance per watt against GPUs for real-time systems in autonomous vehicles, health care systems, factories and aircraft.

The chips consist of three main parts: scalar engines that include two dual-core Arm processors for running Linux-class applications and safety-critical code, adaptable engines capable of determinism and parallelism to process data from sensors, and intelligence engines that can run common edge workloads such as AI inference, image processing and motion control.

Developers can take advantage of the Versal AI Edge chips using AMD’s Vitis unified software platform, which comes with open-source libraries, a model zoo, a single programming model for developing applications on all of AMD’s chip architectures and a video analytics software development kit.

Axelera AI

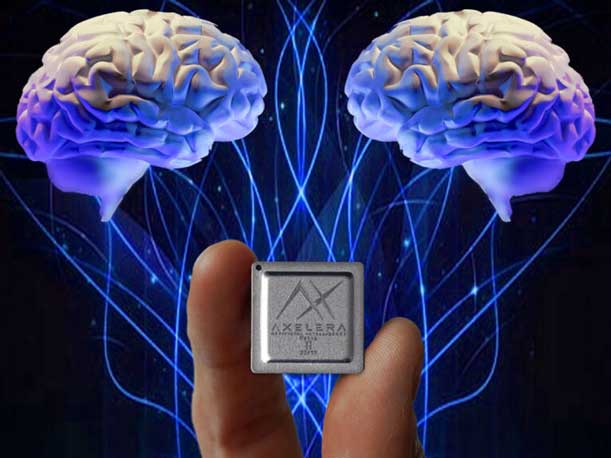

Axelera AI enables edge AI applications with a novel chip architecture that takes advantage of the startup’s proprietary in-memory computing and RISC-V controlled dataflow technologies.

By using in-memory computing, Axelera can treat each memory cell of SRAM memory on its Metis AIPU as a compute element, reducing the need to move data between different places and radically increasing the number of operations per compute cycle. The AIPUs are available in a two form factors: an M.2 accelerator module with a single AIPU and PCIe accelerator cards with one or four AIPUs.

The AIPU can deliver up to 214 tera operations per second, 15 TOPs per watt and inference model accuracy that is equivalent to the single-precision floating point format, also known as FP32.

Developers can take advantage of Axelera’s Metis AIPUs using the startups Voyager software development kits, which comes with several components needed to run edge AI applications such as a runtime, an inference server, compilers and optimization software.

Intel

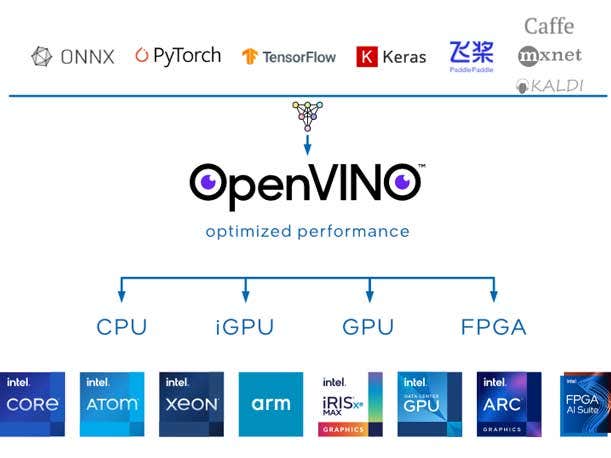

Intel supports edge AI applications through a variety of chips, thanks to its OpenVINO software toolkit, which optimizes deep learning models to run on CPUs and GPUs.

Among the processors supported by OpenVINO is the Intel Celeron 6305E CPU, which comes with two cores, a base frequency of 1.8 GHz and integrated graphics in a 15-watt power envelope.

Using the Celeron’s integrated graphics alone, the chip can hit 111.95 frames per second in the yolo_v8n model for object detection and image classification using an 8-bit numerical format.

Developers can take advantage of the Celeron 6305E and other Intel processors for edge AI applications using OpenVINO, which can automatically select the best processor for a given task as well as split inference workloads among several processors.

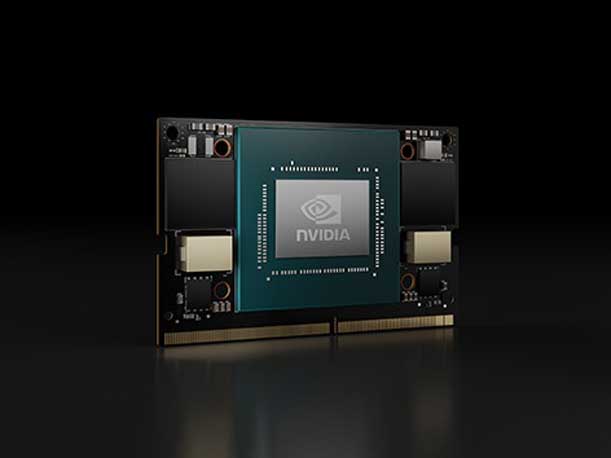

Nvidia

Nvidia provides several chip solutions for edge AI use cases, and they include the Jetson Orin Nano series, which packs up to 40 tera operations per second (TOPS) in a credit card-sized system-on-chip.

Measuring at 69.6 millimeters wide by 45 millimeters tall, the Jetson Orin Nano features an Ampere architecture GPU with 1,024 CUDA Cores and 32 Tensor Cores plus a 6-core Arm Cortex-A78E CPU and 8GB of LPDDR5 memory. This only requires a power envelope that ranges from 7 watts to 15 watts.

In addition to providing 40 TOPS of AI inference performance, the chip can also support up to two video encoding streams running at 30 frames per second in a 4K resolution per one to two CPU cores. For video decoding, the chip can support one 4K video stream with 60 frames per second, two 4K streams with 30 frames, five 1080p streams with 60 frames and 11 1080p streams with 30 frames.

Developers can take advantage of the Jetson Orin Nano chips using Nvidia’s Jetson software stack, which includes software libraries such as TensorRT and cuDNN for AI inference, a container runtime, a Linux kernel and the CUDA toolkit for building GPU-accelerated applications.

SiMa.ai

SiMa.ai is focused on providing power efficient machine learning chip solutions for the edge with its software-centric, purpose-built MLSoC chip.

The MLSoC comes with dedicated processors for machine learning accelerator and high-performance application processor as well as computer vision processors for image pre- and post-processing.

These elements make the MLSoC capable of delivering up to 50 tera operations per second (TOPS) and 10 TOPS per watt for machine learning, up to 30 frames per second in a 4K resolution for video encoding, up to 60 frames per second in a 4K resolution for video decoding and up to 600 16-bit giga operations per second for computer vision.

Developers can take advantage of the MLSoC using SiMa.ai’s Palette low-code integrated development environment, which is capable of compiling and evaluating any machine learning model as well as deploying and managing applications on silicon.