10 Hot Generative AI Products And Companies At RSAC 2023

Generative AI has been a major theme at RSA Conference 2023, both as a topic of discussion and in many of the show’s biggest product launches.

Generative AI At RSAC 2023

While it’s been clear for months that generative AI could have a major upside for cyberdefense, just a few cybersecurity vendors had unveiled products leveraging the technology—until this week. At RSA Conference 2023, generative AI has been everywhere, both on the show floor and as a ubiquitous topic of discussion. Vendors unveiled generative AI-powered products for security operations teams, code security, threat intelligence and more in connection with RSAC 2023.


OpenAI’s hugely popular ChatGPT may be recognized as a useful tool for threat actors, but the applications on the cyberdefense side are growing, as well—particularly when it comes to improving the productivity of security analysts. At RSAC 2023 in San Francisco, cybersecurity companies showcasing new products powered by generative AI include SentinelOne, Google Cloud, Recorded Future and Veracode.

They join other vendors such as SlashNext, which launched its generative AI email security tool in late February; Orca Security, which was quick to integrate GPT-3 into its cloud security platform; and Microsoft, which unveiled its Security Copilot tool in late March. Security Copilot uses the latest version of OpenAI’s large language model, GPT-4, combined with Microsoft’s own security-focused AI model. A prominent backer of OpenAI, Microsoft has revealed plans to embed generative AI technology throughout its broad portfolio of security products.

But while Microsoft has received plenty of attention for its efforts to bring generative AI to the cybersecurity realm, the company now has some competition. What follows are 10 generative AI products and companies we’ve been tracking at RSAC 2023.

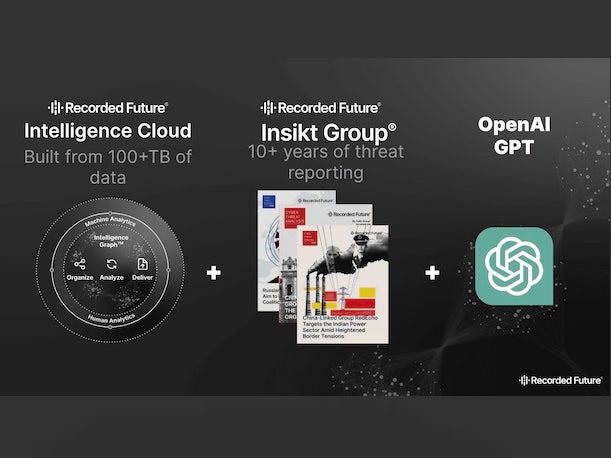

Recorded Future AI

In January, threat intelligence firm Recorded Future released research that helped to confirm what many had suspected: that ChatGPT is being used by malicious actors intent on carrying out cyberattacks with the help of the tool. Among the “most pressing and common threats” from the use of ChatGPT by cybercriminals are phishing, social engineering and malware development, Recorded Future researchers said at the time.

However, that’s no reason not to use the technology on the cyberdefense side. Earlier this month, the company disclosed that it’s now leveraging GPT technology in its Intelligence Cloud, with the debut of its Recorded Future AI capability. The offering enables users to quickly identify and prioritize important threats and vulnerabilities, the company said.

Specifically, Recorded Future said it has added AI to automate manual threat analysis tasks. Analysts should spend less time searching, summarizing and writing reports, while executives should get analyst-grade real-time analysis and reporting, according to Recorded Future.

Recorded Future AI has been trained on more than a decade of threat analysis data from the company’s threat research unit, Insikt Group, the company said. “Recorded Future automatically collects and structures data related to both adversaries and victims from text, imagery and technical sources, and uses natural language processing and machine learning to analyze and map insights across billions of entities in real time,” the company said in a blog post.

Talon Enterprise Browser

In the lead-up to RSAC 2023, startup Talon Cyber Security launched an integration between its Enterprise Browser and Microsoft Azure OpenAI Service. The integration should provide more enterprise-grade access to text-generator ChatGPT, according to Talon.

“The productivity gains that ChatGPT enables for organizations are too game-changing for us to not make an enterprise-level version of this easily available to our customers,” Talon CEO Ofer Ben-Noon said in a statement. “By embedding Microsoft Azure OpenAI Service in Talon’s Enterprise Browser, organizations can embrace ChatGPT for enterprise use in a manner that prioritizes security, compliance, and is ultimately within their control.”

Talon and ChatGPT users can leverage existing Azure resources, maintain data protection, keep data entered into ChatGPT within a perimeter and prevent transfers to third-party services, according to Talon. Administrators can block Enterprise Browser users from pasting or typing certain data into an embedded ChatGPT window, including credit card numbers, source code, keys and other information, the company said.
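To make the idea of blocking sensitive input concrete, here is a minimal Python sketch of a paste-policy check. It is an illustration only and not Talon’s implementation: the function names (`luhn_valid`, `blocked_reasons`), the patterns and the reason labels are all hypothetical. It flags text that contains a number passing the Luhn checksum used by payment cards, or a PEM-style private key header.

```python
import re

# Hypothetical patterns for illustration; a real product would use far more rules.
CARD_CANDIDATE = re.compile(r"\b(?:\d[ -]?){13,19}\b")
PRIVATE_KEY_HEADER = re.compile(r"-----BEGIN [A-Z ]*PRIVATE KEY-----")


def luhn_valid(digits: str) -> bool:
    """Return True if the digit string passes the Luhn checksum."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:  # double every second digit, counting from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def blocked_reasons(text: str) -> list[str]:
    """List the (hypothetical) policy rules that the text would violate."""
    reasons = []
    for match in CARD_CANDIDATE.finditer(text):
        digits = re.sub(r"\D", "", match.group())
        if 13 <= len(digits) <= 19 and luhn_valid(digits):
            reasons.append("credit_card_number")
            break
    if PRIVATE_KEY_HEADER.search(text):
        reasons.append("private_key")
    return reasons


if __name__ == "__main__":
    # 4111 1111 1111 1111 is a widely used test card number.
    print(blocked_reasons("my card is 4111 1111 1111 1111 thanks"))
```

An empty list means the text would be allowed under these two sample rules. Checking the Luhn checksum rather than just digit count reduces false positives on order numbers and similar identifiers.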

The productivity benefits include the ability to right-click on an open email and have AI compose a response or summarize a message, according to Talon. Organizations can generate reports to demonstrate compliance with query logs, and they can block extensions that use public ChatGPT.

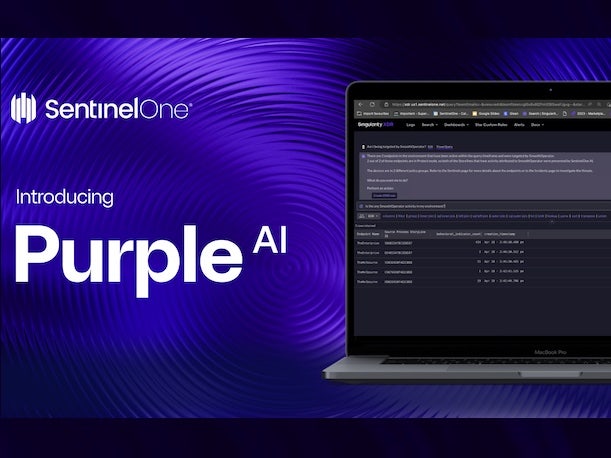

SentinelOne Purple AI

In connection with RSAC 2023, SentinelOne announced a new threat hunting tool for its Singularity platform that utilizes a large language model (LLM) in an effort to dramatically improve productivity for security analysts. SentinelOne is referring to the new generative AI-powered threat hunting tool as “Purple AI,” the company told CRN.

Analysts will be able to use the new generative AI interface in the Singularity Skylight platform to ask questions about threats in a customer’s environment, such as “Is a certain threat actor present in this environment?” or “Are there threat actors affiliated with China in my environment?” The ability to use natural language to query a system will offer massive time savings to analysts and will allow security teams to respond to more alerts and catch more attacks, said Ric Smith, chief product and technology officer at SentinelOne.

Ultimately, a main goal of implementing generative AI technology in this way is “making threat hunting more accessible,” he told CRN. Existing threat hunting platforms are “pretty daunting” to use, Smith said. With the addition of this generative AI technology, however, SentinelOne believes security operations teams can now scale up their threat-hunting activities, he said.

The large language model that’s helping to power the new threat hunting tool leverages both open-source and proprietary offerings in the space, Smith said. One of the LLMs being utilized by SentinelOne is OpenAI’s GPT-4, the company confirmed to CRN. SentinelOne is also training the model on its own data and is doing “quite a bit of fine-tuning” on the LLM to customize it for the security domain, Smith said.

The new SentinelOne threat hunting tool will initially be offered as an add-on to the Singularity Skylight platform and is now in limited preview. Details about wider availability are not being released yet.

BigID BigAI

On Tuesday, BigID introduced two offerings: BigAI, a large language model (LLM)-powered feature to improve the quality of data environments, and the BigChat virtual assistant. The company promises that BigAI can automatically generate data table and column names for improved accuracy, interpretation, data clustering and indexing. BigAI can also automatically give document clusters better names and short descriptions summarizing the documents. The titles are generated based on the documents in each cluster for improved indexing and searching.

BigChat, meanwhile, comes as a pop-up window in the lower right corner and can answer questions about data classification, privacy compliance automation, classification types and other subjects. BigChat uses existing BigID documentation to quickly get users answers, according to the company.

Google Cloud Security AI Workbench

At RSAC 2023, Google Cloud unveiled its Security AI Workbench offering that’s powered by a new, security-specific large language model known as Sec-PaLM. The model utilizes Google Cloud’s security intelligence via Google’s broad visibility into threat data and Mandiant’s esteemed threat intel around vulnerabilities and malware, as well as threat actors and threat indicators, according to Google Cloud.

“We have a unique opportunity in Google where we actually have both the infrastructure to cost-effectively deliver next-generation AI, but also to infuse it with threat intel, and a lot of data to train our own large language model,” said Sunil Potti, vice president and general manager for Google Cloud’s security business, in an interview. “So rather than just say we’re using a Google version of the large language model, we’ve actually built a new security LLM.” While Sec-PaLM is based on Google’s LLM, “it’s customized and purpose-built—custom-trained—using security-related data coming from all of our sources that we have currently,” Potti told CRN.

The Google Cloud Security AI Workbench is aimed at helping to reduce the overload from threat data and the large number of security tools in use, the company said. Customers will be able to provide their private data to the Security AI Workbench platform only at inference time to enhance privacy, Google Cloud said.

The first place Google Cloud will be implementing Security AI Workbench is with a new offering, VirusTotal Code Insight, that uses the technology to analyze potentially malicious scripts and explain their behavior, ultimately helping to improve the detection of which scripts are a real threat, Google Cloud said. The offering is now in preview. Other offerings using Security AI Workbench “will be available in preview more broadly this summer,” the company said in a post.

Veracode Fix

Just ahead of RSAC, Veracode unveiled a new product that utilizes generative AI to provide remediation suggestions for application security flaws, including flaws in both code and open-source dependencies. The new Veracode Fix product is designed to help developers and security teams identify and fix vulnerabilities more quickly and effectively, the company said. Veracode Fix uses GPT technology (“the same transformer architecture on which ChatGPT is built”) and is trained on the company’s proprietary dataset that includes more than “85 million fixes over nearly two decades,” the company said in a blog post. Ultimately, the product “dramatically reduces the work and time needed to remediate flaws,” Devin Maguire, senior product marketing manager at Veracode, said in the blog post.

SecurityScorecard GPT-4 Integration

SecurityScorecard unveiled an integration between its ratings platform and OpenAI’s GPT-4 system, promising users cyber risk answers from natural language queries. Users can ask open-ended questions about vendors and their business ecosystem to manage risk, according to SecurityScorecard. The AI-powered search continuously learns and improves and cuts manual analysis time by working across monitored organizations. Users can find out which vendors were breached, which have the lowest ratings and other information, according to the company.

Flashpoint Ignite Updates

Flashpoint has a partnership with Google Cloud to roll out the hyperscaler’s generative AI and intelligence offerings within the Flashpoint product suite. The road map includes bringing Google generative AI to Flashpoint’s Ignite risk intelligence platform, a platform Flashpoint unveiled Monday.

Flashpoint believes that its user base can use generative AI to get more out of security and intelligence and mitigate risk faster than with the traditional Boolean search-based interaction model, according to the company. The vendor already leverages Google Vision AI, Translation AI and other Vertex AI services for in-platform video search, and Google BigQuery and Looker for analysis and visualizations in the Flashpoint platform.

Through the collaboration with Google Cloud, “we aim to empower organizations with faster and more comprehensive insights into potential cyber, physical, and fraud threats, enabling them to stay one step ahead in the ever-evolving landscape of cybersecurity,” Flashpoint CEO Josh Lefkowitz said in a statement.

SlashNext Generative HumanAI

In late February, SlashNext unveiled what it said was the first cybersecurity product to utilize independently developed generative AI. The company’s Generative HumanAI offering is aimed at blocking email-based attacks that are created by technology such as ChatGPT. The company said it had spent the past two years developing its own large language model akin to OpenAI’s GPT-3.

At RSAC 2023, SlashNext CEO Patrick Harr (pictured) told CRN that “the early results have been pretty phenomenal” in terms of demand for Generative HumanAI.

Specifically, Generative HumanAI has been focused on thwarting a type of email impersonation attack known as business email compromise (BEC). The scams typically target executives or other employees of a company, and involve an attacker pretending to be a colleague who is requesting a transfer of funds. The offering works by leveraging the company’s large language model algorithm to proactively anticipate potential BEC threats created using generative AI. The product automatically generates thousands of new variants of BEC attacks and then consults that information when deciding which incoming emails to block on behalf of users.
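As a rough illustration of the “generate variants, then compare incoming mail against them” idea, the Python sketch below scores an email by its word overlap (Jaccard similarity) with a small set of known BEC-style messages. This is not SlashNext’s method, which has not been published in detail: the function names, the 0.6 threshold and the hand-written sample variants are all assumptions, and a real system would rely on model-generated variants and far richer signals.

```python
import re


def tokens(text: str) -> set[str]:
    """Lowercase the text and return its set of word tokens."""
    return set(re.findall(r"[a-z0-9']+", text.lower()))


def jaccard(a: set[str], b: set[str]) -> float:
    """Jaccard similarity: shared tokens divided by all distinct tokens."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def max_similarity(email: str, variants: list[str]) -> float:
    """Highest similarity between the email and any known attack variant."""
    email_tokens = tokens(email)
    return max((jaccard(email_tokens, tokens(v)) for v in variants), default=0.0)


def should_block(email: str, variants: list[str], threshold: float = 0.6) -> bool:
    """Block when the email closely resembles a variant (threshold is an assumption)."""
    return max_similarity(email, variants) >= threshold


if __name__ == "__main__":
    # Hand-written stand-ins for variants a language model might generate.
    variants = [
        "Please wire the funds to the new vendor account today",
        "I need you to transfer funds urgently before the end of day",
    ]
    print(should_block("Please wire the funds to the new vendor account today, thanks", variants))
    print(should_block("Lunch menu for Friday is attached", variants))
```

Word overlap is used here only because it is easy to follow; it would miss paraphrased attacks that a production classifier is meant to catch.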

Harr told CRN that the technology is applicable to much more than just email-based attacks, and can also protect against threats delivered via messaging apps on the web and mobile. When it comes to communications that could be at risk from phishing and impersonation attacks, “this is a multi-channel world,” he said.

Cohesity AI-Ready Data Structure

On April 11, data security and management company Cohesity unveiled details about forthcoming offerings that will leverage generative AI, through an integration with Microsoft’s Azure OpenAI Service that will enable the company to offer an “AI-ready data structure.” The integration with Cohesity’s platform, and specifically with its “unique” distributed file system, will provide enhancements to areas such as anomaly detection, the company said. Generative AI will also enable analysis of threats to be presented in human language on the Cohesity platform, ultimately helping organizations to “streamline recovery time,” the company said in a blog post. As an example, using Cohesity’s DataHawk threat intelligence with log data, the Azure OpenAI collaboration will provide AI-generated reports that improve productivity and security, Cohesity said. The company has not provided specifics about the planned release date for the Azure OpenAI integration.