Palo Alto Networks To Tackle Security’s ‘Hard Problems’ With Generative AI: CPO Lee Klarich

In an interview with CRN, Klarich says the cybersecurity giant’s use of the technology will go beyond some of the ‘superficial’ applications of generative AI in security that we’ve seen so far.

Klarich On The Record

Generative AI may be spreading rapidly throughout the cybersecurity industry, but not every AI-focused security vendor has played its hand just yet. Cybersecurity giant Palo Alto Networks, for instance, has not been among those to rush out new capabilities powered by large language models such as OpenAI’s GPT-4, despite the company’s heavy focus on using AI/ML in its products over the years. And that is very intentional, according to Lee Klarich, the company’s chief product officer. Palo Alto Networks sees a “critical role” for generative AI in cyber defense in the future, and is taking the time it needs to bring the technology into its product portfolio in a way that addresses some of cybersecurity’s toughest problems, Klarich said in an interview with CRN at the RSA Conference 2023.

In other words, Palo Alto Networks’ use of generative AI technology will go far beyond what we’ve seen so far in security, Klarich said. “I think that right now, what you’re seeing is a very superficial application of it — which is interesting for demos, but it doesn’t necessarily solve the real hard problems,” he told CRN. “That is where the real value will come in. What we’re doing is looking at, what are those hard problems we want to go solve? How do we architecturally approach that and leverage these new AI technologies to help us get there?”

Additionally, it’s worth keeping in mind that Palo Alto Networks is “providing security for enterprises, which have very high standards for quality,” Klarich said. “You can’t just take an LLM, out of the box, slap it on something and say, ‘Here you go.’”

During the interview, the Palo Alto Networks CPO also discussed why having abundant data is so essential for generative AI; why product consolidation in cybersecurity will be increasingly critical going forward; and what makes the company’s Cortex XSIAM (extended security intelligence and automation management) offering so different from SIEM (security information and event management) technology.

What follows is an edited portion of CRN’s interview with Klarich during RSAC 2023, which took place in San Francisco in late April.

What’s the No. 1 issue you’re focused on at the conference this week?

One is being able to deliver security in the form of platforms, as opposed to lots of different point products. This has been a huge challenge in the security space for a very long time. And I just don’t believe that we collectively can accomplish the outcomes that are needed from a cybersecurity perspective, if we don’t make progress on that.

We’re talking to customers about how we actually leverage the cloud more for how capabilities are delivered. Cloud provides this infinite scale that just can’t be accomplished if you have to figure out how to put a DAT file onto an endpoint, and that’s the entirety of your security intelligence that you’re going to use to combat an attacker.

And then of course, there’s the unofficial theme of the RSA Conference this year, which is AI. But for us, this is an area that we’ve been doing a lot of work on, more on the machine learning side of AI, for 10+ years — to great success. And we are very excited about what these new large language models and other advances mean, in terms of what will now be possible for the future of how we use AI to solve some of the hardest security challenges.

What are some of the obstacles to making progress on tool consolidation?

The biggest challenge is that, even companies that are bought into the strategy — which is a growing set of companies out there — still often have the traditional way of project-based decisions. It’s just part of how things have been done for so long, that it takes time to change. And so, that’s actually one of the biggest challenges: How do we make sure that we continue to work with our customers more at the strategic level, building multi-year roadmaps with them on how to get there? While at the same time, still winning the hearts and minds of the individual architects and engineers that are looking at solving specific problems. And showing them how we can solve those problems as a component of a broader platform.

It also requires that we have partners who are proficient in helping our customers along this journey — and being able to wrap the technology with the services and solutions that are needed to help our customers. These transformations don’t happen overnight. [Partners] are looking at how to build the expertise, in partnership with us, in order to help our customers on this journey. That’s a conversation that was hard to have, say, five years ago, when the market was largely made up of lots of different point solutions. [The platform approach] is a game changer for our partners and for us.

You haven’t announced generative AI-powered capabilities yet, but what are the biggest ways you’ve already been using AI in your products?

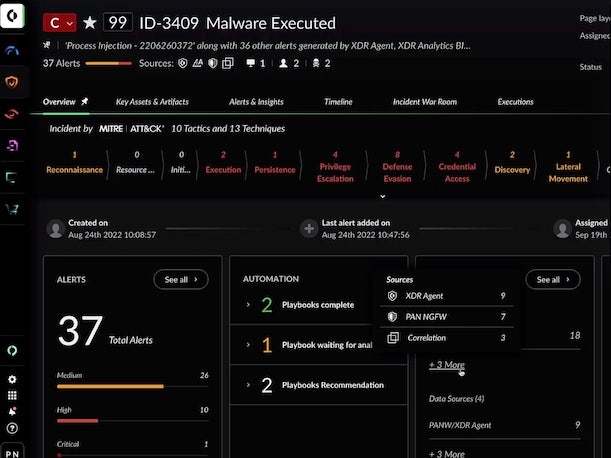

A lot of the ways in which we’ve operationalized AI in our products is focused on, how do we detect attacks? Initially, it was just, how do we detect new attacks? Then it became, how do we detect new attacks, in real time, in line — in a way that we can actually do detection and prevention in the same place? With XSIAM, we then extend that to say, how do we detect attacks based on behavior? Not just [based on] a technique. Malware is more of a technique — and obviously, we do that within XSIAM. But also, how do we look for the multi-stage behavior of a potential attack as it’s happening? That’s a fairly hard problem to solve. But those are the types of problems that XSIAM is solving today.

What the large language models are starting to open up — and what you’re seeing here with lots of amazing technologies — is using large language models to create almost a different user experience. But we also have to understand that what we’re providing [at Palo Alto Networks] is security, which is mission critical. We’re providing security for enterprises, which have very high standards for quality. And you can’t just take an LLM, out of the box, slap it on something and say, “Here you go.” A lot of the time it will give you a good answer — and some of the time it will make something up. So there’s a lot of work that will be needed to really extract the value and the potential out of these large language models. But when we do that, they do have the power to solve some really interesting challenges.

How do you see AI being applicable to your products going forward?

In a typical day, we will analyze about 3.5 billion events. Out of those events, we’ll detect about 275,000 new attacks that we’ve never seen before — in a day. And then out of that, we’ll block about 5 billion attacks a day. That scale only comes from being able to leverage cloud-based architectures and everything else — you can tie into on-prem and bridge the gap. The second piece is, the data that is ingested in order to do those analytics and drive that AI actually becomes the next set of training data, in order to continue to improve the models for detecting the next attempts. And so, I think one of the things that is perhaps not as fully understood as it needs to be is, AI is going to be driven by data. And the more data you have — in particular, the more great data you have — the better the AI outcomes will be. And so part of how we’ve been using AI over the last many years, including both with XSIAM and other products, is that it has allowed us to solve really hard problems for our customers today, while building that curated set of data that allows us to drive the next generation of AI. I think we’re uniquely positioned because of these investments we’ve made over the last several years to build these large, well-understood datasets.

Do you see generative AI becoming a very visible part of the user interface for some of your products in the future?

Yes. I think the generative AI, the large language models, have a very critical role to play in the future. I think that right now, what you’re seeing is a very superficial application of it — which is interesting for demos, but it doesn’t necessarily solve the real hard problems. That is where the real value will come in. What we’re doing is looking at, what are those hard problems we want to go solve? How do we architecturally approach that and leverage these new AI technologies to help us get there?

Are you saying anything about what that might look like?

Not yet. It will be different than just a little box in the corner of the UI where you can type in something, and you get a [response].

I’d be interested to hear how that connects to the idea of security being “solvable” as you mentioned in your keynote. Do you see generative AI as a critical part of the equation in making security solvable?

Absolutely. The reason I believe that cybersecurity is solvable is, the core technical elements needed to solve these challenges are now available. Leveraging the cloud to achieve machine-scale means we can start to use compute to be able to do a lot of these tasks that would otherwise just be unfathomable to try to have a person do. Let’s say, we’ve seen a billion malware over the last many years. I want to be able to prevent all 1 billion of those, everywhere. That requires a whole different approach to scale.

The second piece becomes, with that scale, can I collect the right data to drive AI? And so this is to your question. Now all of a sudden, I can start to use machines to do [more]. What we call “programmatic automation” — meaning, I program the machine to do Step A every time it sees [certain activity] — that’s fine, that’s useful. But can I start to use AI to actually understand the problem space, and start to solve some of these problems? That starts to become transformative. So that’s largely where XSIAM comes in, and how we’re thinking about revolutionizing the SOC. And really, truly delivering an autonomous SOC, through the use of AI and automation — that will require these new approaches to AI in addition to the more machine learning-based approaches.

And these new approaches include generative AI?

Generative AI, large language models [are included]. And this will not be solvable if security stays a highly fragmented space. It doesn’t mean that you have to be all-in on Palo Alto Networks in order to get the value. It just means we have to start making concerted progress toward having less point products that are not designed to work together — to something where there’s more native integration, and the right data is being collected and analyzed. And with that we’ll actually start to be able to exert some control that allows us to achieve the outcomes that are needed.

In terms of the amount of data and quality of data that you have, do you feel like no one else really has that in cybersecurity?

One of the things that I consider to be critical is, we need to have data across all the different types of data that are useful. As a result of the industry being so fragmented, what you generally find is that [a vendor will] know everything there is to know about identity data, for instance. That’s good, but that’s only one data source. There’s companies that know a lot about what’s happening in the network. That’s good, too — but it’s a different set of companies than the identity ones. Others understand endpoint data. What I often see is a company will try to take a single data source, correlate it with alerts and more-superficial information and drive the analytics. What you’ll get from that is analytics on one data source.

Because of our approach across different cybersecurity areas — across network security, cloud security, endpoint security and security operations — we understand these core data sources intimately down to the detail. We understand how to generate data from our security products in order to feed the analytics engines. And then there are places, like identity, that we don’t do — but where we’ve spent a ton of time understanding that space — where we’re not just thinking about it as a log source, but thinking of it as a data source. We’re understanding, what kinds of data do we need? How do we understand that data? And how do we correlate it with the other data sources that we have data for? That’s the approach that I believe is going to work. We have to be able to start looking at events from all data perspectives in order to drive the most accurate outcomes from our solutions. And our solutions are becoming increasingly AI-driven.

How does data come into play for your XSIAM offering?

The way I describe XSIAM, we start by saying, we’re going to collect real data. Yes, we’ll collect alerts and logs too — we’ll collect all of that stuff. But we’re going to start collecting real data on top of that. We’re going to collect endpoint data that otherwise would go into an EDR solution, because the SIEM doesn’t know how to collect EDR data. We’re going to collect network data, which would otherwise go into an NTA or an NDR-type solution as opposed to the SIEM. We’re going to collect identity data that would otherwise go into like an ITDR or UEBA solution, versus the SIEM. We’re going to collect cloud data that would otherwise go into a cloud product. We’re going to start collecting data from all these sources that would otherwise go into data silos. We’re going to stitch that data together, such that when we perform our analytics or machine learning models and everything else, we’re going to apply those to the stitched data. So when we look at an event, we’re going to look at it from the perspective of the endpoint, from the network, from identity, from the cloud. We’re going to look at it from all perspectives and say, “Is this good, or is this not good?” That becomes the new way of doing detection, and scoring and prioritization.

The SIEM is designed to collect alerts, with lots of other solutions cropping up around it to do silo-based analytics. Then people try to put automation on top of all of that. Or [you can] collect real data, stitch it together, use that to drive the analytics with automation natively built in — taking on the vast majority of the repetitive work. And then we use people to oversee that and bring in their judgment. What’s left for the SOC [team] to actually investigate and respond to is very doable and manageable. That’s XSIAM.
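
The "stitch it together, then analyze" idea Klarich describes can be illustrated with a small sketch. This is not how XSIAM is implemented, which the interview does not detail. The `Record` fields, the entity join key, the fixed five-minute time bucket, and the "count of distinct sources that flag the activity" score are all assumptions made purely for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Record:
    """One observation from a single data source (hypothetical schema)."""
    source: str       # e.g. "endpoint", "network", "identity", "cloud"
    entity: str       # assumed join key, such as a host or user name
    timestamp: int    # seconds since some reference point
    suspicious: bool  # whether this source alone considers it suspicious


def stitch(records, window=300):
    """Group records from all sources by entity and time bucket.

    A fixed bucket is a simplification: activity that straddles a
    bucket boundary ends up split across two groups.
    """
    stitched = defaultdict(list)
    for record in records:
        stitched[(record.entity, record.timestamp // window)].append(record)
    return stitched


def score(group):
    """Count how many distinct sources flag the stitched activity."""
    return len({record.source for record in group if record.suspicious})


def prioritize(records, window=300):
    """Return (group key, score) pairs, highest score first, dropping zeros."""
    groups = stitch(records, window)
    ranked = sorted(((score(g), key) for key, g in groups.items()), reverse=True)
    return [(key, s) for s, key in ranked if s > 0]


if __name__ == "__main__":
    sample = [
        Record("endpoint", "host-a", 100, True),
        Record("network", "host-a", 160, True),
        Record("identity", "host-a", 220, True),
        Record("network", "host-b", 130, True),
        Record("cloud", "host-b", 140, False),
    ]
    for key, s in prioritize(sample):
        print(key, s)
```

In this toy example, activity on `host-a` is flagged from three perspectives and ranks above `host-b`, which only one source flags. A silo-based approach would see each of those signals separately and have no basis for ranking one host above the other.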