CEO Raghu Raghuram On VMware’s Gen AI Strategy And How Partners Should Prep For The Post-Broadcom Era


VMware CEO Raghu Raghuram said VMware is opening the door for customers to harness the power of generative AI with a blockbuster new offering, VMware Private AI Foundation, in partnership with AI powerhouse Nvidia.

“It’s going to obviously have the Nvidia GPUs, but we’re going to build on that to say, ‘Look, what else do we need to build so that customers can run these language models on top of a VMware Cloud Foundation architecture?’” said Raghuram. “And then using Nvidia AI software as well as Nvidia AI hardware, how do we almost make it like an appliance that you can work with Dell and Lenovo and HPE and others so customers can deploy it wherever they need to?”

Raghuram’s comments came during an interview with CRN ahead of the VMware Explore conference, being held this week in Las Vegas.

Customers can deploy VMware Private AI Foundation on-premises, in the data center, at the edge, and even in the public cloud. “It could be in the public cloud as well because your data is all over the place, your private cloud and public cloud, etc.,” he said.

VMware Private AI Foundation is based on the concept of “private AI,” which focuses on the need for customers to protect their data and intellectual property, said Raghuram.

“If you think about knowledge, with enterprise and how they differentiate themselves, it is their core intellectual property,” he said. “So if I put my intellectual property into any general-purpose language model, how does that work?”

VMware is also infusing generative AI into each and every one of its products with a family of intelligent assist products.

As for his plans after the Broadcom acquisition, Raghuram, a 20-year VMware veteran, said now is not the time to address them.

“The keynote is not the place to talk about who is going to be in charge,” he said. “The keynote is not the place to focus on that. What we’re going to focus the keynote on, and pretty much the whole conference on, are all of these innovations that they’ll be able to take advantage of, regardless of the corporate ownership of VMware.”

Broadcom this week said it has waited the time required under statute for the Federal Trade Commission to raise objections to the $61 billion transaction it first proposed in May 2022. The U.S. regulator had been conducting a second-request investigation of the deal since July 2022, but had no comment yesterday when reached by CRN. Broadcom said that, in the absence of any objection, it will close the deal on Oct. 30.

Following is an edited version of the conversation.

How is VMware looking at Gen AI?

So obviously this is super exciting. It’s the next major advent and a whole new class of applications and platforms in the industry. And because of the general-purpose nature of these language models and foundation models, you can think of applications in marketing. You can think of applications in content generation. You can think of applications for software developers, to write code, applications for even legal teams. Any knowledge worker.

At VMware, we got all excited. And the big challenge became, ‘OK, what about my IP?’ If you think about knowledge, with enterprise and how they differentiate themselves, it is their core intellectual property. So if I put my intellectual property into any general-purpose language model, how does that work?

How do I make sure my data is not shared in any fashion to train somebody else’s model? And then once the model is trained and I can use it, how do I make sure that it’s controlled access to the networking point?

We call this class of problems ‘private AI.’ This is a key concept that we’re going to be talking about. What enterprises need is private AI. So how do you deliver private AI?

It could be in the public cloud as well because your data is all over the place, your private cloud and public cloud, etc.

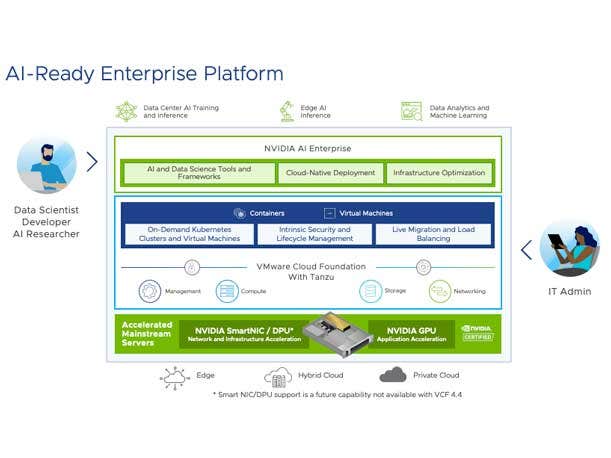

We have teamed up with Nvidia to create a joint solution that we call the VMware Private AI Foundation with Nvidia.

It’s going to obviously have the Nvidia GPUs but we’re going to build on that to say, ‘Look, what else do we need to build so that customers can run these language models on top of a VMware Cloud Foundation architecture?’

And then using Nvidia AI software as well as Nvidia AI hardware, how do we almost make it like an appliance that you can work with Dell and Lenovo and HPE and others so customers can deploy it wherever they need to?

They can deploy it on-premises, in the data center, you can deploy it at the edge, they can use it in the cloud, etc.

That is a big, big announcement. And [Nvidia CEO] Jensen [Huang] will be on stage for that. And so that’s something to look forward to.

We also work very closely with the open-source community, and we recognize that there are times people are not going to use Nvidia stacks. So we are creating a reference architecture to help customers run those models.

There is another exciting opportunity to infuse generative AI into each and every one of our products. For example, how do you infuse Gen AI into our Tanzu product line so that it’s very easy for platform operators to understand the configurations and ask questions and get answers and so on?

We’re going to call this a family of intelligent assist products. So there’ll be an intelligent assist for Tanzu. There’ll be an intelligent assist for networking and security. There’ll be an intelligent assist for end-user computing. And one of the things partners especially do a lot is generate scripts and code to help a customer deploy and operate a vSphere environment.

So we are working with [the open-source AI collaboration platform] Hugging Face to actually help operators or partners generate code that automates the task of running a data center.

So this is what we call an intelligent assist family.

Let’s automate and make it easy for them to do the work that needs to be done. In a nutshell, that is deploying applications, deploying infrastructure, managing the network and security, and managing the user experience.

Where should partners be positioning themselves to be ready for AI?

What we are telling customers is that one of the key ways you can become AI-ready is by putting your data in the cloud. But as we all know, customers have tons and tons and tons and tons of data. If you tell them, ‘Hey, get all of your data to the cloud,’ it could take five years. You also have to meet them where they are. And Private AI Foundation does both.

So for enterprises and businesses, the question is not only ‘Are you AI-ready?’ It is ‘Are you private AI-ready?’ Because let’s face it, if you didn’t care about privacy, you could go to OpenAI today, type in your thing and get an answer, just like we can do as consumers.

For businesses to be AI-ready, it’s going to be private AI and, No. 2, you have to meet them where they are. These are two important things, and this Private AI Foundation does both.

There are some big advances coming around application development where you are shortening the delivery of apps, right?

The first thing, as we all know, is that customers are accelerating their digital transformation, which means they are adding a bunch of new applications or modernizing existing applications and rewriting them. Typically, that’s done on Kubernetes.

Tanzu is our brand that deals with that audience: the platform operators who are responsible for helping developers, and the developers themselves.

Typically, the feedback we hear is that for developers who want to build and write these applications fast, Kubernetes is too low-level an interface. So what we are announcing is a new addition to the Tanzu product family, called Tanzu App Engine. It’s going to be an extension of our Tanzu Application Platform, and what App Engine does is make it dramatically easier for developers, once they have written the code, to deploy that code into production.

Not only deploy the code into production, but have the platform teams automatically ensure the code is always running, so that when it is time to update the underlying infrastructure, the application is not disrupted.

It’s a fundamental advancement and it’s derived from some of the ideas that we had in the early days.

It is literally one command to deploy the application, and then the platform operator can scale it, ensure it’s highly available, ensure it’s patched and lower the cost of operating these applications by a significant amount. Best of all, it was built in a multi-cloud fashion.

You have some really big announcements around multi-cloud and cloud infrastructure that are supposed to help manage this cloud chaos?

Here our partners are helping customers drive the modernization of infrastructure. We do three things here. One is that if you look at our offerings in the marketplace today, there are lots of different offerings, and partners and customers are saying, ‘Look, can you simplify this for me?

‘Give me a prescriptive set of offerings, no more than five, that look the same on-premises as they do in the cloud, so that I can very easily tell a customer, or a customer can find out for themselves, if they ask, “Hey, I’m just interested in a managed virtualization environment. What do I need? I’m interested in building a private cloud environment. What do I need?”’

So the first thing we are doing is using what we call cloud packs. There are five cloud packs, and they bring together 17 or 20 of the combinations that were previously possible, making it very easy for the partner to tell the customer, ‘This is what you need.’

The customer can say, ‘I want it on-premises in our data center and I want it on my AWS or I want it on Equinix.’

The second thing: we have been working in the HCI space for some time with vSAN and vSphere put together, and that has proven to be a great hit. Customers love the user experience and the simplicity of HCI. The first challenge with HCI is that you cannot scale the storage independently of the compute. In other words, the most storage you can have is constrained by the amount of storage on the server.

So customers have been asking for this for a long time, but it’s a hard thing to do architecturally: allowing customers to scale the storage independently of the compute.

This has a couple of great benefits. One, customers still preserve the simplicity of the vSAN model. For a vSphere administrator it just looks like vSAN, but it allows them to break free of the constraints of a single server and scale to petabytes and petabytes.

Second, it can be elastic, so I can scale it up or scale it down independently of buying more servers. That’s very useful for partners who say, ‘My customer wants the simplicity of the vSAN model, but they have a lot of data. They’re going to have to scale out their database, object storage, whatever it is.’

One partner I talked with was very excited about the capabilities of NSX+. What can you tell me about that?

It provides the full networking stack, from Layer 2 to Layer 7, as they say. But here is an interesting challenge that customers are running into. They have applications on AWS. They have applications on Azure. They have applications on-premises, on Google, on Oracle, etc. Now how are you as an administrator going to set firewall rules, load-balancing policies and network security policies separately in all of these different places? It’s a big hassle for network security admins. NSX+ builds this policy layer in the cloud, and it can dynamically program NSX networking and security, VMware Cloud on AWS networking and security, and Equinix networking and security.

In the future, it will even program the native AWS and the native Azure. This can literally be one policy layer that you program, and it dynamically adjusts the policies for all your applications. This is going to be fantastic for partners and fantastic for customers. Now partners can go help those customers and say, ‘Look, let me help you with multi-cloud networking.’ This is something they have always wanted.

VMware was one of the leaders in shifting to hybrid work. Can you talk about this new way to tackle some of the challenges there for solution providers?

So admins still have to deal with the hybrid workplace. The hybrid workforce is not coming back to the office anytime soon. They’re distributed all over. So how do you simplify their lives?

We have this vision that we call the Autonomous Workspace. The new innovation that we are adding allows these end-user computing admins to provide a better digital experience. We have incorporated a lot of AI here.

Say you’re having a very poor experience on your laptop working from home. Instead of you having to file a ticket or call the end-user computing team, there is software that automatically watches, knows that you’re having a poor experience and then notifies the end-user computing admin, saying, ‘They are having a very poor experience and we think it is because of the network or the OS, and here is a runbook that you can pick from to fix it.’

Then boom, off they go. So it’s a dramatic simplification of the hybrid work experience.

It looks like there are some new features coming to the edge for VMware partners as well?

Customers are shifting the edge from hardware-driven to software-driven. One of the most common problems at the edge is that you have all these machines, possibly across multiple manufacturing plants. Let’s say you have to apply a patch or deploy new software.

What we have created is a zero-touch update capability. The machine itself wakes up and asks a central control plane, ‘Hey, do you have anything new for me?’ It’s pull-based. The central management layer keeps track of what everybody has and what everybody needs. So this dramatically simplifies the management of all these edges.

Where should partners be doubling down to make sure they are ready for the Broadcom acquisition? What is the most important area for them to focus on?

I would say, look, we’ve talked about these five areas. They’re all great opportunities for partners, and Broadcom CEO Hock Tan has talked about the multi-cloud strategy that he intends to pursue and the focus on VMware cloud infrastructure, etc.

So that is where I think partners should begin. All of the things we just talked about, the multi-cloud infrastructure with VMware Cloud Foundation, the cloud packs, NSX, vSAN and the Private AI Foundation, are a tremendous opportunity for our partners.

You told us last October that VMware channel partners can expect a lot more empowerment under Broadcom. Have you seen anything in the last year that has made you change your mind about that?

I’ve not seen anything different. Hock Tan has been very public about what he wants to do with partners. And he’s also been public about the investments he wants to make to help partners deploy VMware technologies to VMware customers even faster. All of that is still in play.

Are you willing to tell partners, ‘Don’t worry. I’m going to be here, post-acquisition?’

The keynote is not the place to talk about who is going to be in charge. The keynote is not the place to focus on that. What we’re going to focus the keynote on, and pretty much the whole conference on, are all of these innovations that they’ll be able to take advantage of, regardless of the corporate ownership of VMware.

I’ll give you the opportunity right now. You can say it to me.

[Chuckles] Yes. I’m sure there will be a time and place to talk about all those things.