The 10 Coolest Big Data Tools Of 2023 (So Far)

Here’s a look at 10 new, expanded and improved big data tools, platforms and systems that solution and service providers should be aware of.

Big Data, Cool Tools

As data volumes continue to explode, the global “datasphere”—the total amount of data created, captured, replicated and consumed—is growing at more than 20 percent a year and is forecast to reach approximately 291 zettabytes in 2027, according to research firm IDC.

Many businesses and organizations are deriving huge value from all that data. They are analyzing it to gain insight about markets, their customers and their own operations. They are using the data to fuel digital transformation initiatives. And they are even using it to support new data-intensive services or packaging it into new data products.

But wrangling all this data is a major challenge. That’s why there is a steady stream of new big data tools and technologies—from established IT vendors and startups—to help businesses access, collect, manage, move, transform, analyze, understand, measure, govern, maintain and secure all this data.

What follows is a look at 10 cool big data tools designed to help customers carry out all these tasks. Each is either an entirely new product introduced in late 2022 or the first half of this year, or an established big data product that has undergone significant upgrades or now offers groundbreaking new capabilities.

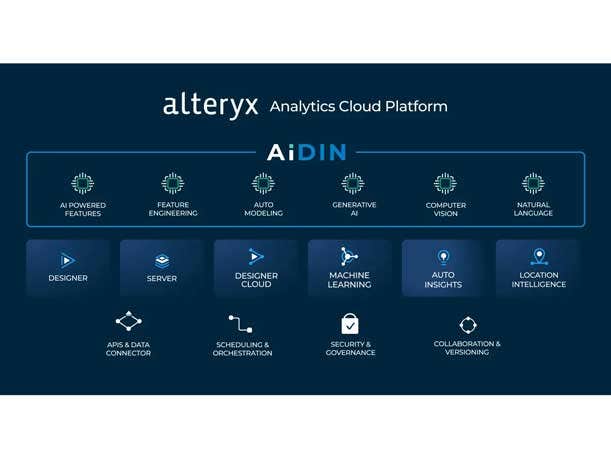

AiDIN

Owner: Alteryx

Focus: The AI engine improves analytics efficiency and productivity

In May, data analytics technology developer Alteryx launched AiDIN, a generative AI engine that works with the Irvine, Calif.-based company’s flagship Alteryx Analytics Cloud Platform to boost analytical efficiency and productivity. AiDIN integrates AI, generative AI, large language models and machine learning with Alteryx analytics.

AiDIN powers Magic Documents, a new Alteryx Auto Insights feature that uses generative AI to improve insight reporting and sharing by providing in-context data visualization summaries and PowerPoint, email and message generation capabilities.

The AiDIN engine also supports the new Workflow Summary for Alteryx Designer, the data preparation and integration toolset within the Alteryx platform. Workflow Summary allows users to document workflow data processes and data pipelines. And AiDIN underlies the new OpenAI Connector, which provides a way for users to incorporate generative AI into Alteryx Designer workflows.

ClickHouse Cloud

Owner: ClickHouse

Focus: Brings the ClickHouse OLAP database system to the cloud

With an emphasis on speed and simplicity, ClickHouse in December announced the general availability of ClickHouse Cloud, a cloud-based data analytics service based on the company’s online analytical processing (OLAP) database management system.

The underlying ClickHouse technology delivers fast query speeds for large-scale, near-real-time analytical tasks; the company says it can analyze petabytes of data in milliseconds. The serverless ClickHouse Cloud architecture decouples storage and compute and scales automatically to handle analytical workloads of different sizes.

ClickHouse Cloud also includes a number of new product features that enhance the service’s security, reliability and usability. It offers a new SQL console based on technology from ClickHouse’s acquisition of database client developer Arctype. And the cloud edition adds a tier optimized for development use cases, tuned for smaller workloads and enabling rapid prototyping of new features and data products. The company is based in Portola Valley, Calif.

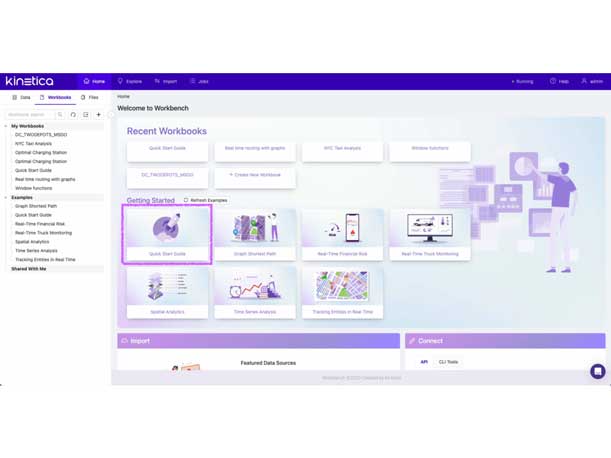

Kinetica Analytical Database With ChatGPT

Owner: Kinetica

Focus: ChatGPT converts natural language questions into SQL queries

In May, Kinetica said it had integrated its high-performance analytics database with ChatGPT, making it one of the first companies to use the popular AI technology for “conversational querying,” which converts natural language questions into Structured Query Language (SQL) queries.

Kinetica develops a real-time, vectorized database for analyzing and observing huge volumes of streaming time series, spatial and graph data. According to the Arlington, Va.-based company, the use of ChatGPT lets users ask complex questions of proprietary data sets in the Kinetica system and receive answers in seconds.

SQL is the standard language for querying relational databases. But apart from some “power users” who can write their own SQL, queries are largely developed by programmers and data engineers, either as prebuilt queries and reports or in response to business users’ requests. Both arrangements limit how quickly businesses can generate value from their data.

The ChatGPT interface makes the Kinetica database easier to use by letting users directly ask ad hoc questions of proprietary data, including complex queries that no one has written before, and receive answers in seconds without pre-engineering the data. The new capabilities also boost productivity by providing real-time access to information and make it possible to identify patterns and insights in the data more quickly.
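To make “conversational querying” concrete, the sketch below pairs a plain-English question with the SQL a translation layer would have to produce. It is a hypothetical illustration, not Kinetica’s interface: the table and its columns are invented, the SQL is written by hand rather than generated by ChatGPT, and Python’s built-in sqlite3 module stands in for the Kinetica database.

```python
import sqlite3

# Hypothetical table of sensor readings standing in for streaming time series data
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE readings (device TEXT, region TEXT, temp_c REAL)")
conn.executemany(
    "INSERT INTO readings VALUES (?, ?, ?)",
    [("d1", "east", 21.5), ("d2", "east", 24.0), ("d3", "west", 30.5), ("d4", "west", 29.5)],
)

# The question a business user might type
question = "What is the average temperature in each region, hottest first?"

# The SQL a conversational-query layer would need to produce (hand-written here)
sql = """
SELECT region, AVG(temp_c) AS avg_temp
FROM readings
GROUP BY region
ORDER BY avg_temp DESC
"""

rows = conn.execute(sql).fetchall()
for region, avg_temp in rows:
    print(f"{region}: {avg_temp:.1f} C")
```

The point of the integration is that the user supplies only the question; the GROUP BY and ORDER BY logic is the part that would otherwise have to come from a programmer or data engineer.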

Komprise Hypertransfer for Elastic Data Migration

Owner: Komprise

Focus: Accelerates the transfer of unstructured data files to the cloud

In December, Komprise debuted Komprise Hypertransfer for Elastic Data Migration, a tool that accelerates the movement of unstructured data files, including user data, electronic design automation (EDA) files and multimedia workloads, to the cloud while strengthening cloud security.

Hypertransfer for Elastic Data Migration speeds up migrations of data sets with large numbers of files or many small files, as well as workloads that use the SMB (Server Message Block) protocol. The Campbell, Calif.-based company says such transfers can take weeks or even months.

Such transfers are challenging because the SMB protocol requires many back-and-forth “handshakes” that add administrative traffic to the network. According to the company, Hypertransfer for Elastic Data Migration uses dedicated virtual channels across a WAN to minimize WAN round trips, mitigating SMB protocol “chattiness” and speeding up data transfers. Komprise says tests using a data set dominated by small files showed migrations running 25 times faster than with alternative approaches.
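A back-of-the-envelope model shows why per-file round trips, not bandwidth, dominate small-file transfers over a WAN. The sketch assumes each file costs a fixed number of sequential protocol round trips and treats multiple channels simply as parallel streams that overlap that latency. The function name, file counts, round-trip time, bandwidth and handshakes-per-file figure are all illustrative assumptions, not Komprise measurements or a description of how Hypertransfer works.

```python
def transfer_seconds(num_files, avg_file_bytes, rtt_s, bandwidth_bps,
                     round_trips_per_file, channels=1):
    """Estimate WAN transfer time as per-file protocol latency plus raw data time.

    Assumes round-trip latency is paid sequentially within each channel and that
    files are spread evenly across `channels` parallel channels sharing one link.
    """
    latency = num_files * round_trips_per_file * rtt_s / channels
    payload = num_files * avg_file_bytes * 8 / bandwidth_bps
    return latency + payload

# Hypothetical workload: 10 million 32 KB files over a 1 Gbit/s link with 40 ms RTT
files, size = 10_000_000, 32 * 1024
rtt, bw = 0.040, 1e9
roundtrips = 10  # assumed handshakes per file (open, query info, write, close, ...)

single = transfer_seconds(files, size, rtt, bw, roundtrips)
parallel = transfer_seconds(files, size, rtt, bw, roundtrips, channels=64)

print(f"one channel: {single / 86400:.1f} days")
print(f"64 channels: {parallel / 86400:.2f} days")
```

With these assumed numbers, the single-channel estimate comes to roughly 46 days, almost all of it latency, while 64 parallel channels bring it under a day; moving the raw bytes accounts for well under an hour either way.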

Hypertransfer for Elastic Data Migration is targeted at enterprises with petabytes of unstructured data in file storage and the need to tier or migrate data to lower-cost cloud storage.

Kyligence Zen

Owner: Kyligence

Focus: The Zen intelligent metrics platform addresses the inconsistent metrics problem

In April, San Jose, Calif.-based Kyligence boosted the capabilities of its data analytics offerings with the general availability of Kyligence Zen, an intelligent metrics platform for developing and centralizing all types of data metrics into a unified catalog system.

A common data analytics problem within businesses is a lack of shared definitions for key metrics in such areas as financial and operational performance. Kyligence Zen provides a way to build a common data language across an organization for consistent key metrics and what data managers call a “single version of the truth.”

Kyligence Zen is designed to tackle the problem of inconsistent metrics by providing unified and intelligent metrics management and services across an entire organization.

A key component of Kyligence Zen is the Metrics Catalog, which provides a central location where business users can define, compute, organize and share metrics. The intelligent metrics store component of Kyligence Zen had been in private beta since June 2022.
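The idea behind a metrics catalog can be sketched in a few lines: each metric is defined once, in one place, and every team computes it from that shared definition rather than from its own copy. The MetricsCatalog class, the gross_margin metric and the order fields below are hypothetical and do not reflect Kyligence Zen’s actual interface.

```python
from dataclasses import dataclass, field
from typing import Callable

Row = dict[str, float]

@dataclass
class MetricsCatalog:
    """Hypothetical central registry holding one shared definition per metric name."""
    _metrics: dict[str, tuple[str, Callable[[list[Row]], float]]] = field(default_factory=dict)

    def define(self, name: str, description: str, fn: Callable[[list[Row]], float]) -> None:
        # Refuse a second definition so conflicting versions cannot coexist
        if name in self._metrics:
            raise ValueError(f"metric {name!r} is already defined")
        self._metrics[name] = (description, fn)

    def compute(self, name: str, rows: list[Row]) -> float:
        _, fn = self._metrics[name]
        return fn(rows)

catalog = MetricsCatalog()
catalog.define(
    "gross_margin",
    "Revenue minus cost of goods sold, divided by revenue",
    lambda rows: (sum(r["revenue"] for r in rows) - sum(r["cogs"] for r in rows))
    / sum(r["revenue"] for r in rows),
)

orders = [{"revenue": 200.0, "cogs": 120.0}, {"revenue": 300.0, "cogs": 150.0}]

# Finance and operations both call the same definition, so they get the same number
finance_view = catalog.compute("gross_margin", orders)
ops_view = catalog.compute("gross_margin", orders)
print(f"gross margin: {finance_view:.1%}")
```

Rejecting a redefinition of an existing name is the design choice that keeps a second, conflicting version of “gross margin” from creeping in.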

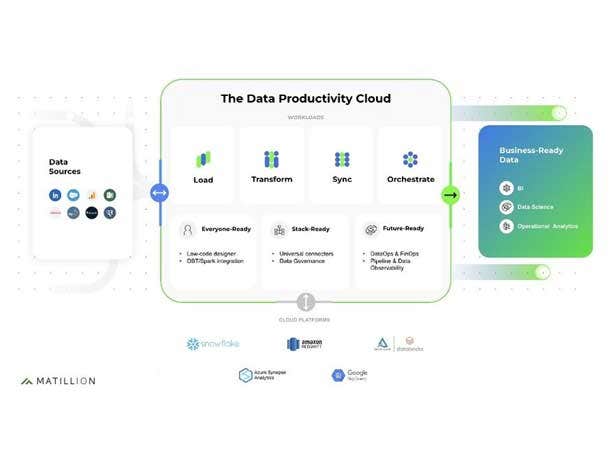

The Data Productivity Cloud

Owner: Matillion

Focus: An integrated platform for improving data team productivity and efficiency

In October 2022, Matillion, a provider of cloud-based data transformation and data pipeline technology, unveiled The Data Productivity Cloud, which welds the company’s products into a central platform for collecting, loading, transforming, synchronizing and orchestrating data.

The Data Productivity Cloud improves the productivity and efficiency of data teams with a wizard-based approach to building custom connectors for data loading and with universal, no-code connectivity to any data source.

While the platform incorporates Matillion’s existing products, it also offers new enterprise application connectors for SAP, Workday and Anaplan systems. Also new is orchestration of code written in dbt alongside other low-code or high-code jobs in Matillion, which allows data engineers to execute code from tools such as dbt in Matillion ETL for Snowflake.

The platform also boasts extended workflow scalability through the new Unlimited Scale functionality that allows data teams to collect, enrich and recirculate data at scale within an organization’s cloud environment. Matillion has dual headquarters in Manchester, U.K., and Denver.

Momento Serverless Cache

Owner: Momento

Focus: Accelerate cloud database response time by delivering frequently used data faster

Momento emerged from stealth in November with Momento Serverless Cache, an offering that optimizes and accelerates any database running on Amazon Web Services or Google Cloud Platform.

A cache speeds up database response times by serving commonly or frequently used data faster. But Momento’s founders argue that existing caching technology wasn’t designed for the modern cloud stack. According to the company, the highly available Momento cache can serve millions of transactions per second and operates as a back-end-as-a-service platform, meaning there is no infrastructure to manage.
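The sketch below shows the generic cache-aside pattern that caches of this kind serve: check the cache first and query the database only on a miss. It assumes a hypothetical in-process TTLCache class and a stand-in slow_db_lookup function; it does not use or imitate Momento’s client API, and with a managed cache service the local dictionary would be replaced by calls to the remote cache.

```python
import time
from typing import Callable

class TTLCache:
    """Minimal in-process cache with per-entry expiry (illustrative only)."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock
        self._store: dict[str, tuple[float, object]] = {}

    def get(self, key: str):
        entry = self._store.get(key)
        if entry is None:
            return None
        expires_at, value = entry
        if self.clock() >= expires_at:
            del self._store[key]  # expired: evict and report a miss
            return None
        return value

    def set(self, key: str, value) -> None:
        self._store[key] = (self.clock() + self.ttl, value)

db_calls = 0

def slow_db_lookup(user_id: str) -> dict:
    """Hypothetical stand-in for a database query; counts how often it runs."""
    global db_calls
    db_calls += 1
    return {"id": user_id, "name": f"user-{user_id}"}

cache = TTLCache(ttl_seconds=60)

def get_user(user_id: str) -> dict:
    # Cache-aside: try the cache first, fall back to the database on a miss
    key = f"user:{user_id}"
    cached = cache.get(key)
    if cached is not None:
        return cached
    value = slow_db_lookup(user_id)
    cache.set(key, value)
    return value

for _ in range(5):
    get_user("42")
print(f"database calls: {db_calls}")
```

Five lookups of the same user reach the database only once; the time-to-live bounds how stale a cached answer can get.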

Momento, based in Seattle, was co-founded by CEO Khawaja Shams and CTO Daniela Miao, who previously worked at AWS and were the engineering leadership behind Amazon DynamoDB, Amazon’s proprietary NoSQL database service.

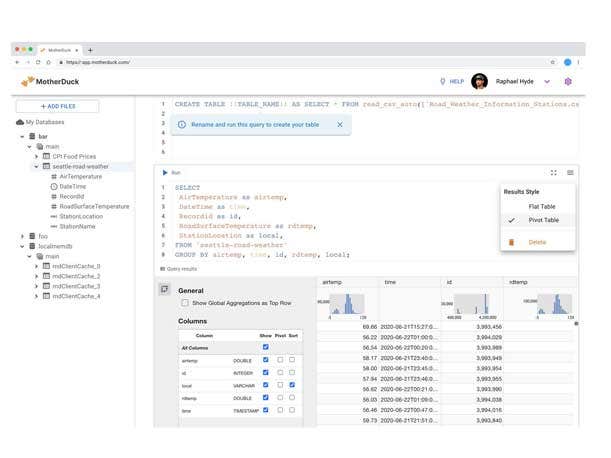

MotherDuck Cloud Analytics Platform

Owner: MotherDuck

Focus: Combine cloud and embedded database technology to make it easy to analyze data no matter where it lives

On June 22, startup MotherDuck launched the first release of its serverless cloud analytics platform, which is based on the open-source, embeddable DuckDB database. The cloud system makes it easy to analyze data of any size, regardless of where it resides, by combining the speed of an in-process database with the scalability of the cloud, according to the company.

MotherDuck argues that most advances in data analysis in recent years have been geared toward large businesses and organizations with more than a petabyte of data, while neglecting small and midsize companies with correspondingly smaller data volumes.

DuckDB, which the company describes as analogous to SQLite for analytics workloads, can run almost anywhere (even on a laptop), can query data wherever it resides without preloading it, and executes analytical queries very quickly. MotherDuck uses it to enable hybrid query execution across local and cloud compute resources, according to the company.

MotherDuck was co-founded by Google BigQuery founding engineer Jordan Tigani, who is now the company’s CEO. The Seattle-based company has raised $47.5 million in funding from such investors as Andreessen Horowitz, Redpoint, Amplify Partners, Altimeter and Madrona.

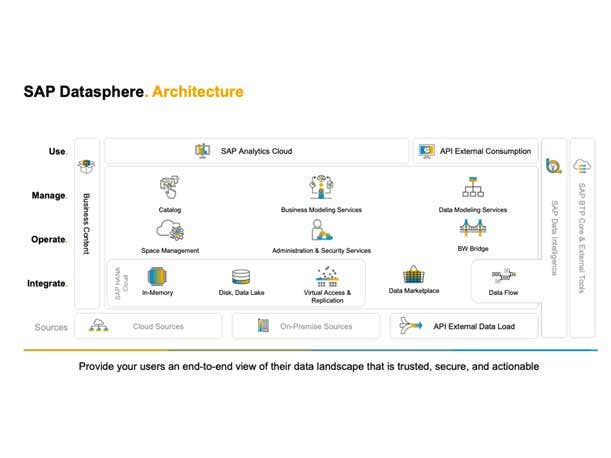

SAP Datasphere

Owner: SAP

Focus: Next generation of the SAP cloud data warehouse

In March, SAP unveiled SAP Datasphere, the next generation of the company’s cloud data warehouse service, with new data cataloging, simplified data replication and enhanced data modeling capabilities. The software giant said Datasphere is intended to help correct what it sees as a fundamental error in data management and analytics: too much focus on technology and not enough on data.

Building on the earlier SAP Data Warehouse Cloud, Datasphere offers new and extended capabilities to provide what SAP calls a more unified experience for data integration, data federation, data warehousing, semantic modeling, data cataloging and data virtualization.

Datasphere is built on the SAP Business Technology Platform (BTP) that provides underlying capabilities including database security, encryption and governance.

SAP, based in Walldorf, Germany, also struck strategic partnerships with four data management and AI technology developers (Collibra, Confluent, Databricks and DataRobot) to integrate their systems with Datasphere and make data in those systems available to SAP users.

The Datasphere cloud service and its new capabilities, along with the technology partner integrations, are designed to help businesses develop a business data fabric architecture that relies more on the use of metadata to provide access to data where it resides rather than traditional data collection and extraction approaches. Data is also delivered with its business context and logic intact.

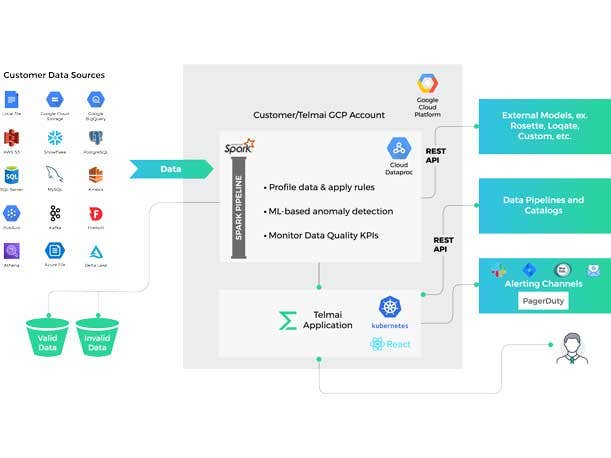

Telmai

Owner: Telmai

Focus: Data observability for hybrid data environments

Telmai’s data observability software is used to monitor and maintain data quality, reliability and accuracy—an increasingly critical task as more businesses and organizations rely on valuable data assets for operational and analytical purposes. Data has even become part of the product and service offerings at many businesses.

Data observability is an active space for startups, and Telmai is one of the more recent entrants. The Telmai platform helps data teams automate the monitoring of data pipelines using a range of data quality metrics and KPIs, and proactively detect and investigate data anomalies in real time.

The platform supports an open architecture and is designed to work across hybrid data environments, co-founder and CEO Mona Rakibe told CRN in an interview. Among the technology’s strengths is its ability to detect data anomalies at the columnar level and work with more data types than competing products, Rakibe said.
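One simple way to detect an anomaly at the column level is to track a per-column statistic, such as the share of null values in each batch, and flag batches that stray far from that column’s history. The sketch below does this with a z-score test; the column name, history values and three-standard-deviation threshold are assumptions for illustration, and it is not a description of Telmai’s detection methods.

```python
import statistics

def null_rate(batch: list[dict], column: str) -> float:
    """Fraction of rows in a batch where `column` is missing or None."""
    return sum(1 for row in batch if row.get(column) is None) / len(batch)

def is_anomalous(history: list[float], current: float, threshold: float = 3.0) -> bool:
    """Flag `current` if it lies more than `threshold` standard deviations from history."""
    mean = statistics.mean(history)
    stdev = statistics.stdev(history)
    if stdev == 0:
        return current != mean
    return abs(current - mean) / stdev > threshold

# Null rates for a hypothetical "email" column over previous daily loads
history = [0.010, 0.012, 0.011, 0.009, 0.010, 0.013, 0.011]

# Today's batch: a broken upstream job left every fourth email empty
today = [{"email": None if i % 4 == 0 else f"u{i}@example.com"} for i in range(1000)]
rate = null_rate(today, "email")
print(f"null rate {rate:.1%}, anomalous: {is_anomalous(history, rate)}")
```

Running the same check per column, rather than on a table-wide row count, is what lets a problem confined to one field surface even when the overall volume of data looks normal.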

In June, Telmai, which was founded in 2020 and is based in San Francisco, raised $5.5 million in seed funding from Glasswing Ventures, .406 Ventures, Zetta Venture Partners and others.