The 10 Hottest Big Data Tools Of 2023

Here’s a look at 10 big data tools that either debuted or were significantly enhanced this year that solution providers should be aware of.

Big Data, Hot Technologies

Data volumes continue to explode and the global “datasphere”—the total amount of data created, captured, replicated and consumed—is growing at more than 20 percent a year and is forecast to reach approximately 291 zettabytes in 2027, according to market researcher IDC.

Many businesses are deriving huge value from all that data. They are analyzing it to gain insight about markets, their customers and their own operations. They are using the data to fuel digital transformation initiatives. And they are even using it to support new data-intensive services or packaging it into new data products.

Data—lots of it—is also a critical component of AI and machine learning initiatives. Some of the software products on this list are specifically designed to assist with the use of data within AI and ML systems.

But wrangling, managing and analyzing all this data is a major challenge. That’s why there is a growing number of established and startup companies developing leading-edge technologies to help businesses access, collect, manage, move, transform, analyze, understand, measure, govern, maintain and secure all this data.

Here’s a look at 10 big data tools that caught our attention in 2023 that solution providers should be aware of.

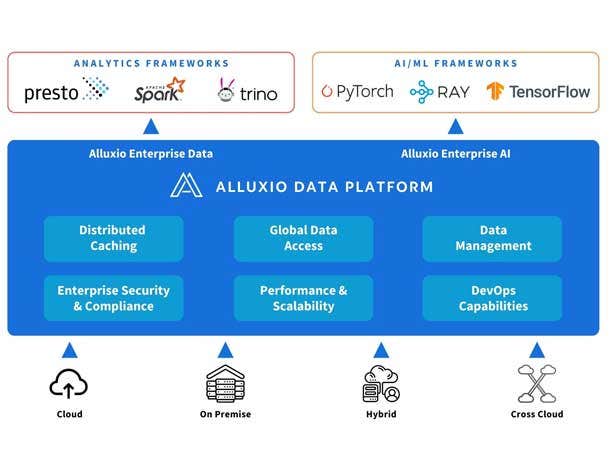

Alluxio Enterprise AI

Data accessibility and data volume and complexity are major hurdles to the implementation and efficient operation of AI systems, especially model training and model serving.

To overcome those hurdles Alluxio in October launched Alluxio Enterprise AI, a new data management platform for data-intensive AI and ML tasks, built on the company’s core data orchestration technology and expertise.

Alluxio Enterprise AI offers the data accessibility and performance needed by the growing number of data-driven applications, including generative AI, large language models, computer vision and natural language processing, that are generating more demanding workloads for IT and data infrastructure.

While the company’s flagship Alluxio Enterprise Data was originally developed for a range of big data tasks, including data analytics and AI/ML, Alluxio said it became clear a new platform was needed to specifically meet the demands of AI workloads such as deep learning and large-scale model training and deployment.

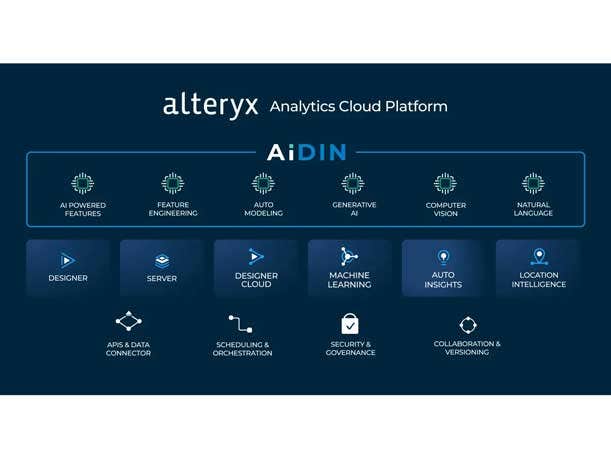

Alteryx AiDIN

Alteryx AiDIN, introduced in May, is a generative AI engine that combines AI, generative AI, large language models and ML technology with the company’s flagship Alteryx Analytics Cloud Platform, all with the aim of improving data analytics efficiency and productivity.

AiDIN powers Magic Documents, a new Alteryx Auto Insights feature that uses generative AI to improve insight reporting and sharing by providing in-context data visualization summaries and PowerPoint, email and message generation capabilities.

The AiDIN engine also supports the new Workflow Summary for Alteryx Designer, the data preparation and integration toolset within the Alteryx platform. Workflow Summary allows users to document workflow data processes and data pipelines. And AiDIN underlies the new OpenAI Connector, which provides a way for users to incorporate generative AI into Alteryx Designer workflows.

In October Alteryx expanded AiDIN’s functionality with Alteryx AI Studio, a deployment-agnostic interface that makes it possible for no-code users to leverage generative AI. AI Studio allows organizations to bring large language models that work with custom business data into the analytics process.

Databricks LakehouseIQ

At its annual Data + AI Summit event in June, data lakehouse technology developer Databricks unveiled LakehouseIQ, a generative AI knowledge engine that makes it possible for anyone in an organization to use natural language to search, understand and query data, making data analytics more available to a wider audience of users.

LakehouseIQ uses generative AI to learn what makes an organization’s data, data usage patterns, operations, organizational structure, culture and jargon unique, allowing it to answer questions within the context of the business. LakehouseIQ is integrated with the Databricks Unity Catalog for unified search and data governance.

“LakehouseIQ will help democratize data access for every company to improve decision-making and accelerate innovation. With LakehouseIQ, employees can simply write a question and find the data they need for a project or get answers to questions relevant to their company’s operations. It removes the roadblocks inherent in traditional data tools and doesn’t require programming skills,” Databricks co-founder and CEO Ali Ghodsi said in a statement at the time of the LakehouseIQ launch.

At the Data + AI Summit Databricks also debuted Lakehouse AI, a suite of generative AI tools for building and governing generative AI applications, including large language models, that run within the Databricks Lakehouse Platform. The company also debuted Delta Lake 3.0, the latest release of its data storage format, which Databricks said eliminates data silos through its new Universal Format capability.

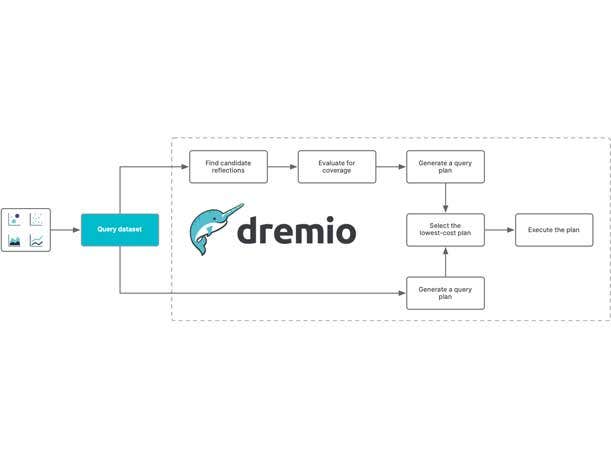

Dremio Reflections

Data lakehouse platform developer Dremio says its Reflections next-generation SQL query acceleration technology, launched in September, paves the way for sub-second data analytics performance across an organization’s entire data ecosystem, regardless of where data resides.

Queries using Reflections often run 10 to 100 times faster than unaccelerated queries, “ensuring that insights can be derived swiftly and efficiently and at one-third the cost of a cloud data warehouse,” according to the company.

A core component of Reflections is Reflection Recommender, a new capability that accelerates business intelligence workloads by automatically evaluating an organization’s SQL queries and generating a recommended Reflection to accelerate them, eliminating arduous manual data and workload analysis.

Hex

The Hex platform, developed by Hex Technologies, provides a modern data workspace system for collaborative analytics and data science tasks.

The platform includes AI-powered tools, collaborative data notebooks, tools for building applications with data visualizations, and data integration technology—all making it possible to connect and analyze data and share work using interactive data applications and stories.

In October Hex launched Hex 3.0 with new AI capabilities, a new compute engine, a new metadata engine and the App Builder tool for turning insight into interactive experiences. Earlier in the year the company debuted Hex Magic tools that bring the power of large language models directly into the Hex workspace.

HPE Ezmeral Software

Hewlett Packard Enterprise significantly enhanced its Ezmeral data and analytics software portfolio in May. HPE Ezmeral Software, originally launched in 2020, provides a SaaS-based analytics and AI foundation across hybrid cloud environments to access, analyze and govern data globally.

The HPE Ezmeral update included the new HPE Ezmeral Unified Analytics Software with data engineering, data science and data analytics capabilities delivered through a SaaS, self-service experience. The toolset allows developers and analysts to build data pipelines, develop and deploy analytical models, and visualize data using popular open-source tools such as Apache Spark, Apache Airflow, Kubeflow and Trino.

The update also offers Ezmeral Data Fabric as a SaaS service for the first time. Data Fabric provides access to data across multiple data sources and formats, including files, objects, tables and streams, as well as across hybrid cloud deployments.

The new release was also designed to make it easier and more cost-effective to install, build and deploy AI and machine learning applications.

Kyligence Zen

In April Kyligence boosted the capabilities of its data analytics offerings with the general availability of Kyligence Zen, an intelligent metrics platform for developing and centralizing all types of data metrics into a unified catalog system.

A common data analytics problem within businesses is a lack of shared definitions for key metrics in such areas as financial and operational performance. Kyligence Zen provides a way to build a common data language across an organization for consistent key metrics and what data managers call a “single version of the truth.”

Kyligence Zen is designed to tackle the problem of inconsistent metrics by providing unified and intelligent metrics management and services across an entire organization.

A key component of Kyligence Zen is the Metrics Catalog, which provides a central location where business users can define, compute, organize and share metrics.
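The idea of a central catalog where metrics are defined once and computed the same way for everyone can be illustrated with a minimal sketch. This is not Kyligence Zen's API; the `Metric` and `MetricsCatalog` classes, the `gross_margin` metric and the sample rows are all hypothetical names invented for illustration.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Mapping

Row = Mapping[str, float]


@dataclass(frozen=True)
class Metric:
    """A single shared metric definition (hypothetical structure)."""
    name: str
    description: str
    compute: Callable[[List[Row]], float]


class MetricsCatalog:
    """Central registry: each metric name maps to exactly one definition."""

    def __init__(self) -> None:
        self._metrics: Dict[str, Metric] = {}

    def define(self, metric: Metric) -> None:
        # Refuse a second definition so teams cannot diverge on the same name.
        if metric.name in self._metrics:
            raise ValueError(f"metric {metric.name!r} is already defined")
        self._metrics[metric.name] = metric

    def compute(self, name: str, rows: List[Row]) -> float:
        return self._metrics[name].compute(rows)

    def names(self) -> List[str]:
        return sorted(self._metrics)


def gross_margin(rows: List[Row]) -> float:
    revenue = sum(r["revenue"] for r in rows)
    cost = sum(r["cost"] for r in rows)
    return (revenue - cost) / revenue


catalog = MetricsCatalog()
catalog.define(Metric("gross_margin", "(revenue - cost) / revenue", gross_margin))

# Made-up sample data, for illustration only.
orders = [{"revenue": 100.0, "cost": 60.0}, {"revenue": 300.0, "cost": 180.0}]
margin = catalog.compute("gross_margin", orders)
```

Because every consumer calls `catalog.compute("gross_margin", ...)` rather than re-deriving the formula, the definition lives in one place, which is the "single version of the truth" idea in miniature.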

Momento Serverless Cache

Momento emerged from stealth in November 2022 with its Momento Serverless Cache offering that optimizes and accelerates any database running on Amazon Web Services or the Google Cloud Platform.

A cache accelerates database response time by delivering commonly or frequently used data faster. But Momento’s founders argue that existing caching technology wasn’t designed for the modern cloud stack. The highly available Momento cache technology can serve millions of transactions per second, according to the company, and operates as a back-end-as-a-service platform, meaning there is no infrastructure to manage.
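How a cache speeds up repeated reads can be shown with a small, generic read-through cache. This sketch does not use Momento's SDK; `ReadThroughCache` and `slow_database_lookup` are hypothetical stand-ins, and the in-memory dictionary is an assumption chosen only to keep the example self-contained.

```python
import time
from typing import Callable, Dict, Tuple


class ReadThroughCache:
    """Small in-memory cache with a time-to-live, placed in front of a slow lookup."""

    def __init__(self, load: Callable[[str], str], ttl_seconds: float = 60.0) -> None:
        self._load = load  # hypothetical function that queries the database
        self._ttl = ttl_seconds
        self._entries: Dict[str, Tuple[float, str]] = {}  # key -> (expiry, value)
        self.hits = 0
        self.misses = 0

    def get(self, key: str) -> str:
        now = time.monotonic()
        entry = self._entries.get(key)
        if entry is not None and entry[0] > now:
            # Fresh entry: answer from memory without touching the database.
            self.hits += 1
            return entry[1]
        # Missing or expired: load from the database and remember the result.
        self.misses += 1
        value = self._load(key)
        self._entries[key] = (now + self._ttl, value)
        return value


def slow_database_lookup(key: str) -> str:
    # Stand-in for a real database round trip.
    return f"row-for-{key}"


cache = ReadThroughCache(slow_database_lookup, ttl_seconds=30.0)
first = cache.get("customer:42")   # miss: goes to the "database"
second = cache.get("customer:42")  # hit: served from memory
```

A managed service moves this store out of the application process and handles scaling and availability, but the hit-or-miss logic follows the same general shape.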

Momento was co-founded by CEO Khawaja Shams and CTO Daniela Miao, who previously worked at AWS and were the engineering leadership behind AWS DynamoDB, Amazon’s proprietary NoSQL database service.

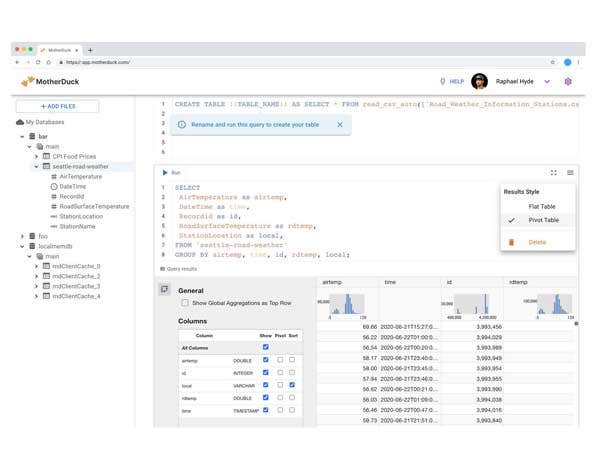

MotherDuck Cloud Analytics Platform

Startup MotherDuck in June launched the first release of its serverless cloud analytics platform that combines cloud and embedded database technology to make it easy to analyze data no matter where it lives.

MotherDuck is based on DuckDB, an open-source, embeddable database. The cloud system makes it easy to analyze data of any size, regardless of where it resides, by combining the speed of an in-process database with the scalability of the cloud, according to the company.

MotherDuck argues that most advances in data analysis in recent years have been geared toward large businesses with more than a petabyte of data, while neglecting small and midsize companies with correspondingly smaller data volumes.

DuckDB, which the company says is analogous to SQLite for analytics workloads, enables hybrid query execution using both local and cloud compute resources, according to the company. The software can run everywhere (even on a laptop), can query data from anywhere without pre-loading it, and boasts very fast execution of analytical queries.

MotherDuck was co-founded by Google BigQuery founding engineer Jordan Tigani. Today he is the company’s CEO.

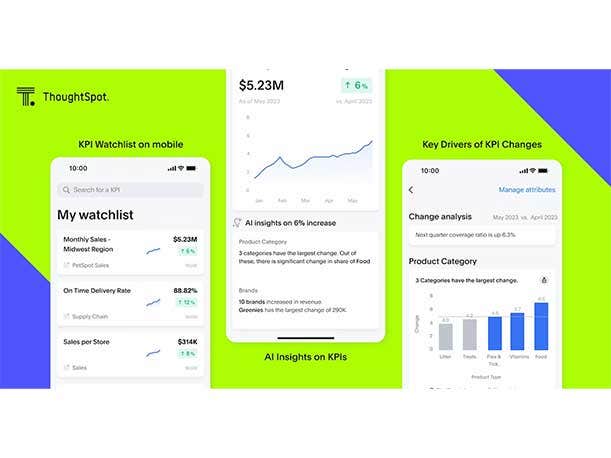

ThoughtSpot Monitor for Mobile

At its ThoughtSpot Beyond 2023 virtual event in May, ThoughtSpot launched ThoughtSpot Monitor for Mobile, a mobile application that works with the ThoughtSpot analytics application to deliver key performance indicators (KPIs) and other business metrics to users.

With the new ThoughtSpot Monitor for Mobile, users subscribe to specific, personalized KPIs and business metrics, and the system pushes notifications to them when those metrics change. Users run the ThoughtSpot mobile application on their Apple iOS or Google Android devices while data teams set up the underlying data models and infrastructure.
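The subscribe-and-notify flow described above can be sketched generically. This is not ThoughtSpot's implementation or API; the `KpiMonitor` class, the 5 percent change threshold, and the user and KPI names are assumptions made up for illustration.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


class KpiMonitor:
    """Tracks KPI values and reports which subscribers should be notified."""

    def __init__(self, threshold: float = 0.05) -> None:
        self._threshold = threshold  # assumed: minimum relative change worth an alert
        self._subscribers: Dict[str, List[str]] = defaultdict(list)
        self._last_value: Dict[str, float] = {}

    def subscribe(self, user: str, kpi: str) -> None:
        self._subscribers[kpi].append(user)

    def update(self, kpi: str, value: float) -> List[Tuple[str, str]]:
        """Record a new value and return (user, message) pairs to push."""
        previous = self._last_value.get(kpi)
        self._last_value[kpi] = value
        if previous is None or previous == 0:
            return []  # nothing to compare against yet
        change = (value - previous) / abs(previous)
        if abs(change) < self._threshold:
            return []  # change too small to notify anyone
        message = f"{kpi} changed {change:+.1%} to {value}"
        return [(user, message) for user in self._subscribers[kpi]]


monitor = KpiMonitor(threshold=0.05)
monitor.subscribe("ana", "weekly_revenue")
baseline = monitor.update("weekly_revenue", 1000.0)  # first value, no alert
alerts = monitor.update("weekly_revenue", 1100.0)    # 10% jump, alert sent
quiet = monitor.update("weekly_revenue", 1110.0)     # under 1% move, no alert
```

In a real product the returned pairs would be handed to a push-notification service; here they are simply returned so the logic is easy to inspect.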

Monitor for Mobile uses generative AI to help users drill down on business metrics, analyzing hundreds of attributes behind each KPI and leveraging machine learning to identify why metrics changed, according to the company.