Paul Maritz's 10 Commandments Of Big Data

Making A Big Data Revolution Real

Paul Maritz, the CEO of Pivotal, the new EMC-VMware venture aimed at providing solution providers and businesses with everything they need to build next-generation big data applications and services, is scheduled to formally launch the company this week. In an exclusive interview with CRN, the technology visionary, who has ushered in game-changing products and strategies from the PC era to the cloud computing era, detailed the principles that will form the foundation for Pivotal. Here, then, are Maritz's ten commandments of big data.

1. Pivotal Is The Operating System For The Cloud

It's bold and brash, but it is Maritz's view of Pivotal as the operating system for the cloud that separates the company from any and all comers in the big data market. "If infrastructure as a service is the new hardware, we are the new OS on top of it," says Maritz. That is a blockbuster statement, and Maritz does not use the term OS (operating system) lightly. But it's just what the big data market needs to unleash a new era of innovation in big data business applications and services. Maritz, by the way, has built application ecosystems before, for both PCs and client-server networks. Building another one takes a leader like Maritz, who knows how to win support among application developers, solution providers and third-party companies looking to score big in the big data applications and services era.

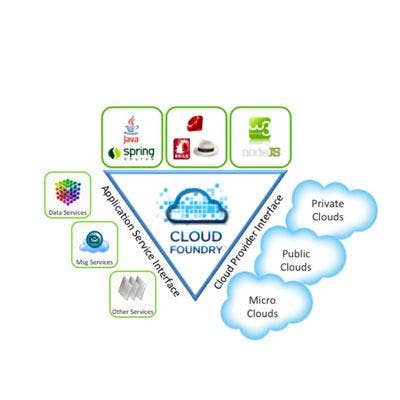

2. Cloud Independence: No Cloud Lock-In Here

Maritz is determined not to let the next generation of computing be defined by cloud lock-in. At the heart of Maritz's Pivotal vision is a cloud-independent platform that will allow big data applications and services to run on all the top infrastructure-as-a-service platforms from Microsoft Azure to Amazon Web Services. "We have a layer called Cloud Foundry that allows you to in some sense virtualize the cloud," says Maritz. "So you can write your apps and not care about whether it is running on Amazon or Azure or VMware or whatever. And we think that is very important because we don't want this world to be like the bad old days of the mainframe: When you wrote a COBOL CICS app, you were condemned to pay IBM a tax for all eternity. We don't want to make it so when you write an app in Amazon you are condemned to pay Amazon a tax for all eternity."
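The idea of writing an app once and not caring which cloud runs it can be illustrated in miniature: application code talks only to an abstract interface, and a per-provider adapter is plugged in underneath. The Python sketch below is hypothetical and does not reflect Cloud Foundry's actual API; `ObjectStore`, `AmazonLikeStore`, `AzureLikeStore` and `save_order` are illustrative names, and the adapters are in-memory stand-ins rather than real Amazon or Azure clients.

```python
from abc import ABC, abstractmethod
from typing import Dict


class ObjectStore(ABC):
    """Hypothetical provider-neutral interface the application codes against."""

    @abstractmethod
    def put(self, key: str, data: bytes) -> None: ...

    @abstractmethod
    def get(self, key: str) -> bytes: ...


class InMemoryStore(ObjectStore):
    """Shared in-memory implementation used by the illustrative adapters."""

    provider = "generic"

    def __init__(self) -> None:
        self._blobs: Dict[str, bytes] = {}

    def put(self, key: str, data: bytes) -> None:
        self._blobs[key] = data

    def get(self, key: str) -> bytes:
        return self._blobs[key]


class AmazonLikeStore(InMemoryStore):
    # Hypothetical: a real adapter would wrap the provider's own SDK here.
    provider = "amazon"


class AzureLikeStore(InMemoryStore):
    # Hypothetical: a real adapter would wrap the provider's own SDK here.
    provider = "azure"


def save_order(store: ObjectStore, order_id: str, payload: bytes) -> bytes:
    """Application logic: it never references a specific cloud provider."""
    key = f"orders/{order_id}"
    store.put(key, payload)
    return store.get(key)


if __name__ == "__main__":
    for store in (AmazonLikeStore(), AzureLikeStore()):
        print(store.provider, save_order(store, "42", b"order data"))
```

Because `save_order` depends only on the interface, moving from one provider to another means swapping the adapter, not rewriting the application, which is the kind of lock-in avoidance Maritz describes.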

3. Follow The Big Data Lead Of Google, Amazon And Facebook

Maritz says succeeding in the big data business applications and services era means taking a good look at what Internet disruptors did and then putting the same basic principles to work for business applications. "They are the ones who have really been delivering a post-paper experience if you like," says Maritz. "What you can do in Amazon you couldn't have done with a traditional mail-order catalog. There is something radically different about the way they do it. Facebook is beyond what you can do with paper-based mail, etc. It is a different experience. So, increasingly enterprises and businesses are going to have to figure out ways to either deliver those experiences or participate in those kinds of experiences. And that is really what is going to drive the change going forward. At the end of the day, what drives the whole IT industry is the applications and the data that underlies those applications."

4. Go Beyond The Traditional Data Warehouse

Maritz says that to succeed in the big data era, businesses must go beyond the traditional data warehouse approach favored today by most enterprises. "And they have to because the data sets they are dealing with you couldn't do through a traditional data warehousing approach," says Maritz. "It just wouldn't be cost effective."

5. Get All Your Data In One Place

Enterprises grappling with thorny big data architecture issues need to realize that success in the big data era depends on getting their data in one place. Easier said than done. But the ability to corral legacy data together with unstructured new media sources will be what differentiates Pivotal. "If you have your data fragmented all over the place, it is hard to get value out of it. It is hard to really correlate, extract value if things are fragmented everywhere," says Maritz. "So increasingly businesses are going to look to either implement data repositories that are larger and more scalable and get more of their data into it or look at using services that can give them that capability."

6. Big Data Applications Must Run On Intel Processor-Based Clouds

Maritz saw the power of the Intel processor revolution in the PC, client-server and Internet eras, and he considers Intel-based infrastructure one of the tenets for building new-era big data services.

"The new infrastructure level clouds, most of which are based on Intel processors, are critical," he says. "Because when you are trying to do things, as I said at a level of scale, automation and cost effectiveness, you need a new generation of infrastructure to do that. And that infrastructure is going to be cloud and x86 based. You show me one consumer cloud that is built on [IBM] Pure or [Oracle] Exadata or [IBM mainframe] Z Series or anything else."

7. Big Data Applications/Services Demand Fast Real-Time Data

The defining characteristic of the new big data applications and services is a real-time dynamic that redefines the business experience. "It is a new class of applications that are characterized by needing to look at much bigger data sets, deal with real-time data, and be able to react in real time to that data," says Maritz. "So bigger data sets that are coming from more sources, bigger data sets that are coming from both structured and unstructured sources, coming from sources both inside your enterprise and outside your enterprise, and then data that is arriving in large quantities very quickly where you have to react to it in real time and then be able to build experiences that drive that."

8. Big Data Applications/Services Require Fast Development

It's no small matter that Pivotal is named after Pivotal Labs, which EMC acquired just over a year ago. One of the crown jewels of Pivotal Labs is Pivotal Tracker (pictured), an agile project-management tool for software development teams that is used by 240,000 developers worldwide and has a blue-ribbon customer list that includes Twitter. Look for Pivotal to provide a platform that will let service providers and businesses build big data applications and services at a breakthrough rate. Maritz says big data demands "fast [big] data application development." He points to Amazon as an example of the dynamic quality of new-era big data applications. "What is different about Amazon is they don't expose an analytics capability to their end customer; they expose a shopping experience that is constantly adapting to who you are and what you are doing," says Maritz. "And that is going to become even more pronounced as you want to extend to other use cases, real-time location-based advertising, industrial control, etc."

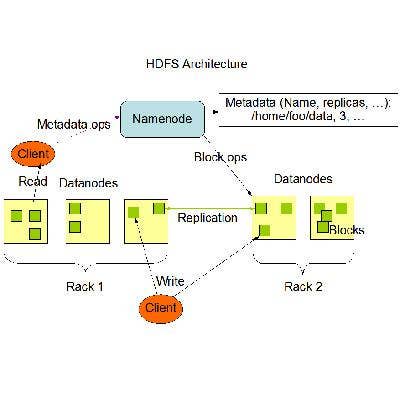

9. Hadoop Distributed File System (HDFS) Is Key To Big Data Success

One of Pivotal's first product shots across the bow of the big data market is Pivotal HD, a new Apache Hadoop open source big data distribution aimed at making Hadoop queries as easy as SQL database queries. Maritz, for his part, calls Pivotal HD a "future-generation architecture product" that brings "scale-out query capability" to HDFS. "The data fabrics are radically changing," says Maritz. "They are going to go from being monolithic to being stratified and based on HDFS. So that is one big change that is occurring in the industry. And, it is all about enabling this issue of let's get more of our data and more types of data in one place, and then have different ways to access it rather than balkanizing the data all over the place."

10. Big Data Is Not For The Faint Of Heart

Maritz says the big data bet is going to require deep pockets and patience. That is why EMC and VMware are putting all of the key technology assets and employees dedicated to the big data mission under Pivotal and making a $400 million investment. "These are early days, so how do you help develop this market without running out of funds in the interim?" says Maritz. "As we said, this is not for the faint of heart. We announced this is going to take over the next 2 years or so at least $400 million worth of investment. So you really have got to believe in this one." Maritz sees the next 1 to 3 years as the early stages of the big data revolution. "Certainly by 10 years from now the transformation will be very profound," he says. "So the good news is you have got time. But at the end of the day, if you want to stay relevant, it is not going to be optional."