The 10 Coolest Big Data Platforms And Tools Of 2019 (So Far)

Big data software for machine-learning tasks and for managing data in hybrid IT systems is among the products that have caught our attention in the first half of this year.

Connecting Data Across Hybrid, Multi-Cloud Systems

Ever-increasing volumes of data, new data-generation sources like Internet of Things networks, and the growing use of artificial intelligence and machine learning to manage and analyze all that data are just some of the drivers behind the continuing evolution of big data technology.

Spending on big data and business analytics products is expected to reach $210 billion by 2020, according to market researcher IDC, up from $150.8 billion in 2017. That growth is fueling a continuous stream of startups in the big data arena. But it's also pushing more established players to maintain a rapid pace of developing and delivering new and updated big data products.
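As a rough check on the pace implied by those IDC figures, the compound annual growth rate can be worked out from the two endpoints. This is a back-of-the-envelope sketch, not IDC's own calculation; the function name is illustrative, and it assumes a three-year span from 2017 to 2020.

```python
def compound_annual_growth_rate(start_value, end_value, years):
    """Return the constant yearly growth rate linking start_value to end_value."""
    return (end_value / start_value) ** (1 / years) - 1


# Spending figures quoted above, in billions of dollars.
cagr = compound_annual_growth_rate(150.8, 210.0, 2020 - 2017)
print(f"Implied growth: {cagr:.1%} per year")
```

Under those assumptions the implied growth rate comes out a little under 12 percent a year.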

A new generation of software tools that help businesses and organizations manage data proliferation across hybrid on-premises, private cloud and public cloud systems is hitting the market. Machine learning and artificial intelligence are also big drivers in the big data space, with many vendors launching big data-focused AI and ML software or adding AI/ML capabilities to their products.

Here are 10 big data platforms and tools unveiled so far in 2019 that have caught our attention.

Actian Avalanche

Actian, which offers a broad range of data management, integration and analytics software, made a bold move in March with the launch of Actian Avalanche, a fully managed cloud data warehouse that is competing against Snowflake Computing, AWS Redshift and other cloud data warehouse services.

Actian said that Avalanche offers data warehouse performance with scalability, flexibility and ease of use, along with "breakthrough economics" that make the system cost-competitive with other cloud data warehouse services. Avalanche runs on Actian's patented X100 analytics query engine and uses the company's FlexPath technology to provide computing resource elasticity.

DataStax Constellation

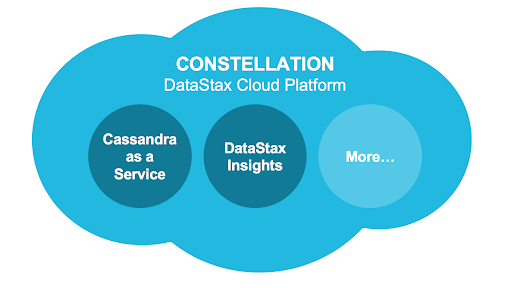

DataStax develops a distributed cloud database built on the open-source Apache Cassandra database. In May, at its inaugural DataStax Accelerate conference, the company debuted the DataStax Constellation cloud platform with services for developing and deploying cloud applications architected for Apache Cassandra.

The platform initially offers two services: DataStax Apache Cassandra-as-a-Service and DataStax Insights, the latter a performance management and monitoring tool for DataStax Constellation and for DataStax Enterprise, the vendor's flagship database product.

Domo IoT Cloud

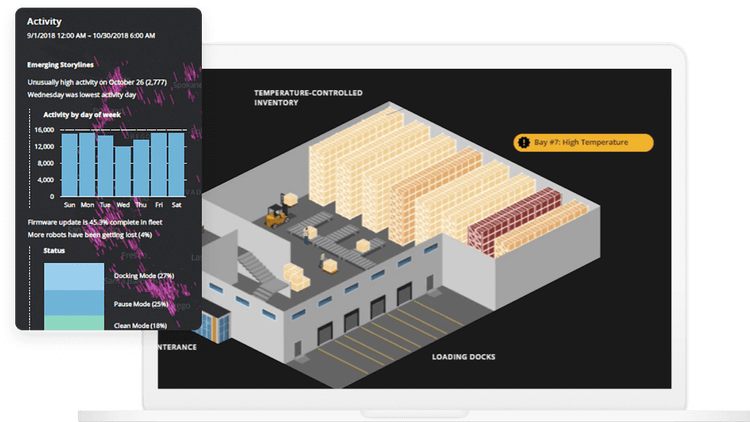

In March Domo launched Domo IoT Cloud, a new service that uses the popular Domo cloud platform to help users capture, visualize and analyze real-time data generated by connected Internet of Things devices.

Domo IoT Cloud includes a set of prebuilt connectors to link Domo to data sources such as AWS IoT Analytics, Azure IoT Hub, Apache Kafka and others. It also includes the first two of an expected series of IoT-focused applications: the Domo Device Fleet Management App for organizing fleets of devices and the Production Flow App for monitoring the production process of shop floor devices.

Fivetran Transformations

Fivetran, a developer of software used to replicate data into cloud data warehouses such as Snowflake Computing and AWS Redshift, debuted Fivetran Transformations, its in-warehouse data transformation software, in June. An extension of the company's data connector software, Transformations provides a simplified, fail-safe way to orchestrate SQL data transformations when data arrives or on a schedule as part of Fivetran's automated data pipeline system.

Fivetran Transformations performs SQL-based transformations within a cloud data warehouse, as opposed to older technologies that apply transformations before data enters a data warehouse. The difference is significant because original data is protected and available should data restoration be required, improving scalability and the ability to recover from system failure.
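The in-warehouse approach described above can be illustrated with a small, generic sketch. It is not Fivetran's API or configuration format: it uses Python's built-in `sqlite3` module as a stand-in for a cloud data warehouse, and the table names (`raw_orders`, `orders_clean`) and the function name are hypothetical. The point it shows is that the SQL transformation runs after loading and writes to a separate table, so the original rows remain available.

```python
import sqlite3


def run_in_warehouse_transform(conn):
    """Rebuild a cleaned table from the raw table using SQL inside the database."""
    # The raw table is only read from; results go into a separate derived table.
    conn.execute("DROP TABLE IF EXISTS orders_clean")
    conn.execute(
        """
        CREATE TABLE orders_clean AS
        SELECT id,
               UPPER(TRIM(country)) AS country,
               amount_cents / 100.0 AS amount
        FROM raw_orders
        WHERE amount_cents IS NOT NULL
        """
    )
    conn.commit()


# An in-memory SQLite database stands in for the warehouse (an assumption for this sketch).
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE raw_orders (id INTEGER, country TEXT, amount_cents INTEGER)"
)

# Step 1: load the data exactly as it arrived from the source system.
conn.executemany(
    "INSERT INTO raw_orders VALUES (?, ?, ?)",
    [(1, " us ", 1250), (2, "de", None), (3, "Fr", 990)],
)

# Step 2: transform inside the warehouse; this could run on arrival or on a schedule.
run_in_warehouse_transform(conn)
```

Because the transformation can be rerun at any time from `raw_orders`, a faulty transformation or a failed run does not destroy the source data, which is the recovery benefit the in-warehouse model is meant to provide.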

Immuta Automated Data Governance Platform

Data governance, adhering to data security, legal and compliance policies, is a major challenge for IT organizations. Immuta's new Automated Data Governance Platform provides no-code, automated data governance capabilities that enable business analysts and data scientists to securely share and collaborate using data, dashboards and scripts without the fear of violating data usage policies and industry regulations.

The Immuta system ensures compliance with the European General Data Protection Regulation (GDPR), the California Consumer Privacy Act (CCPA), and the Health Insurance Portability and Accountability Act (HIPAA).

MongoDB Atlas Data Lake

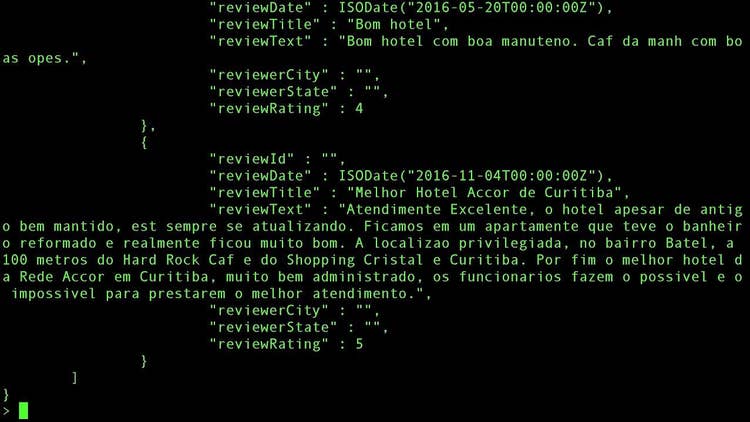

MongoDB, developer of one of the leading next-generation distributed databases, unveiled in June beta versions of its MongoDB Atlas Data Lake and MongoDB Atlas Full-Text Search software—new services that expand the ways developers can use the MongoDB database to work with data.

Atlas Data Lake accesses and queries data in AWS S3 "storage buckets" in any format (JSON, Avro, Parquet and others) using the MongoDB Query Language. (Support for Google Cloud Storage and Azure Storage is in the works.) Atlas Full-Text Search provides rich text search capabilities (based on the Apache Lucene technology) against MongoDB databases with no additional infrastructure needed.

Splice Machine ML Manager

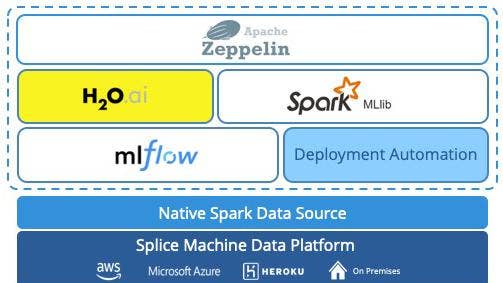

Splice Machine offers an operational AI data platform that combines a SQL relational database, a data warehouse and a machine-learning platform.

In April the company released a beta edition of ML Manager, a native data science and machine-learning system running on the Splice Machine platform that data science teams use to maximize the performance of their machine-learning models. That means data scientists can experiment on 10 times as many data pipelines to optimize their models and put those models into production more quickly for predictive analytical tasks.

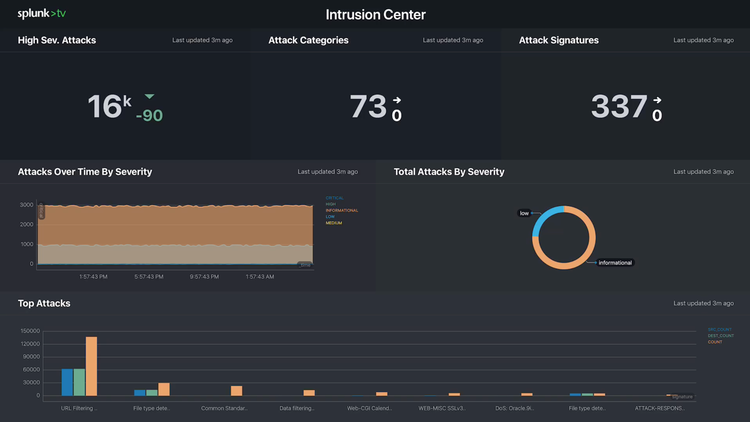

Splunk Connected Experiences and Splunk Business Flow

Getting data into the hands of everyday information workers is a major challenge. Splunk launched two products in April that make it easier for users to gain value from their data.

Splunk Connected Experiences makes information available to nontechnical users, providing access to Splunk dashboards through augmented reality, mobile applications and mobile devices. Connected Experiences is made up of several components including Splunk Cloud Gateway, Splunk Mobile, Splunk TV and Splunk Augmented Reality.

Splunk Business Flow serves operations professionals who oversee business processes—particularly those involving customer experiences. The software provides easy-to-use process mining capabilities to discover process bottlenecks that threaten business performance and identify opportunities for process improvement. The product works by correlating data from multiple systems, providing visualization of end-to-end business processes, and investigating the root causes behind process issues.

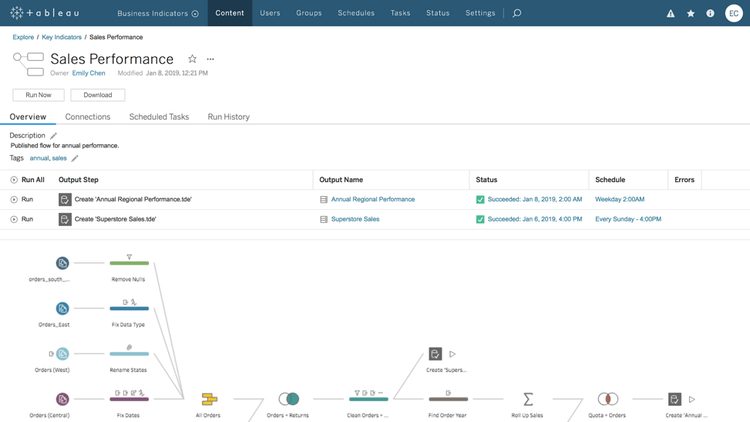

Tableau Prep Conductor

Business analytics software developer Tableau Software, which is in the process of being acquired by Salesforce.com, has been expanding beyond data visualization and analytics in the last year to provide more tools for preparing data for analysis.

In February Tableau launched Tableau Prep Conductor, software for scheduling, monitoring and administering data flows, making it easier to share data sources using Tableau Server or Tableau Online. Tableau Prep Conductor is part of the Tableau Data Management Add-On toolkit.

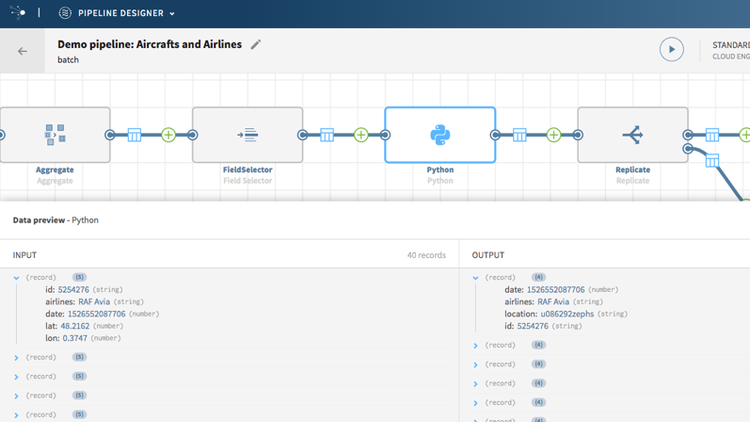

Talend Pipeline Designer

In April Talend debuted Pipeline Designer, a web-based graphical design tool for building data pipelines used to view and transform batch, live and streaming data. Pipeline Designer is an addition to the company's Talend Cloud integration Platform-as-a-Service system.

Pipeline Designer targets data engineers and software developers who are charged with designing pipelines that integrate data across hybrid cloud and multi-cloud environments, linking cloud services such as Amazon Web Services, Microsoft Azure and Snowflake Computing with on-premises databases and other data sources.