Google Cloud Unveils 3 New Tools At First Data Cloud Summit

‘We fundamentally want to change…how companies are thinking of data from a technology-centric view to an ability-centric view,’ says Gerrit Kazmaier, Google Cloud’s new general manager and vice president for data analytics, databases and Looker.

At its inaugural Data Cloud Summit today, Google Cloud unveiled three new database and data analytics products designed to make working with data “simple.”

The new data cloud technologies include Dataplex, an intelligent data fabric to help customers analyze and manage their distributed data at scale; Datastream, a new serverless change data capture and replication service; and Analytics Hub, which will allow companies to securely create, curate and manage analytics exchanges in real time.

Google Cloud also announced the preview of BigQuery Omni for Microsoft Azure, the general availability of Looker for Microsoft Azure and BigQuery ML Anomaly Detection, and several other data products and updates, including a lower entry price for Cloud Spanner.

While every company is on a journey to become data-driven, the reality is many have yet to succeed, according to Gerrit Kazmaier, Google Cloud’s new general manager and vice president for data analytics, databases and Looker.

“We fundamentally want to change…how companies are thinking of data from a technology-centric view to an ability-centric view,” Kazmaier said. “And if you think about an ability — like the ability of hearing, for instance — it requires a set of interconnected skills…to be able to work and to act on that ability.”

The promise of data is massive, and Google Cloud is known for the strength of its data analytics capabilities. Data analytics stands to have a $15.4 trillion impact annually on the global market, according to a McKinsey & Co. study, but only 32 percent of companies say they’re getting measurable value from their data.

“There is still a big gap,” said Kazmaier, who joined Google Cloud in April from SAP, where he spent more than six years and held several president and vice president roles involving SAP Hana and analytics.

Google Cloud wants to connect the full data lifecycle — from where applications are run, to analytical systems where patterns are recognized and analyzed, to machine learning (ML) for automation and prediction, to people making decisions.

“This means we are also connecting the different roles of working with data,” Kazmaier said. “There is the software developer writing the application, there are data engineers, there are data scientists and there are business analysts, and they need to all work on a common platform to build upon. We need to think about these interconnected areas and skills, because data actually represents a value flow.”

That also means connecting the Google Cloud ecosystem.

“It’s all about openness when working with data, so that means openness for the multi-cloud, it means connecting the ecosystem from third-party solutions to be running on Google Cloud and as a part of our integrated cloud system, and open-source systems,” Kazmaier said.

Tom Galizia, Deloitte’s global chief commercial officer, referred to Dataplex, Datastream and Analytics Hub as a “powerhouse trifecta.”

“Deloitte will be a leading teammate in delivering these solutions with industry-critical tools for enterprise customers and institutions,” he said. “What is truly powerful here is that Google Cloud solves for disparate and bespoke systems housing hard-to-access siloed data with enhanced data experiences. They’ve also simplified implementation and management for better decision making.”

Here’s a more detailed look at Google Cloud’s new data and data analytics products and the Cloud Spanner pricing news.

Dataplex

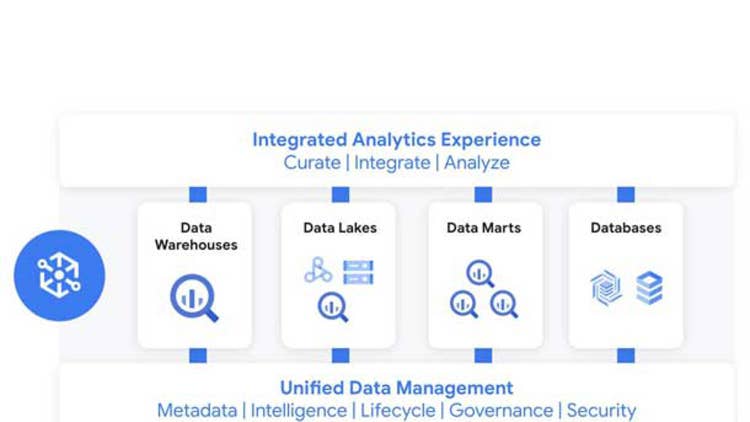

Dataplex, now in preview, is an intelligent data fabric that allows organizations to centrally manage, monitor and govern their data across data lakes, data warehouses and databases from a single view to help automate data management.

Enterprise data is and will remain distributed, residing across on-premises and one or more cloud environments, but enterprises lack the tools to build an integrated data platform across that footprint, according to Irina Farooq, director of product management for smart analytics at Google Cloud.

“Each of these systems comes with its own way to handle metadata, data quality, security and governance,” she said. “At the same time, the number of analytics and AI (artificial intelligence) users in the enterprise is growing exponentially. Each is looking for self-service access to high-quality data in an ever-growing set of tools of their choice. What this results in is the constant operational overhead of moving data around, duplicating data and creating homegrown processes to track data and users. The problem is that those processes become outdated the moment they’re put in, diminishing users’ trust in data and undermining key organizational priorities like financial governance and planning.”

Dataplex is built from the ground up for distributed data, requiring no data movement or duplication, according to Farooq. It focuses on three key areas of value: intelligent, AI-powered data management; centralized security and governance; and integrated analytics experiences that combine the best of Google Cloud Platform (GCP)-native tools such as BigQuery or Dataflow with the best of open-source tools such as Apache Spark.

Datastream

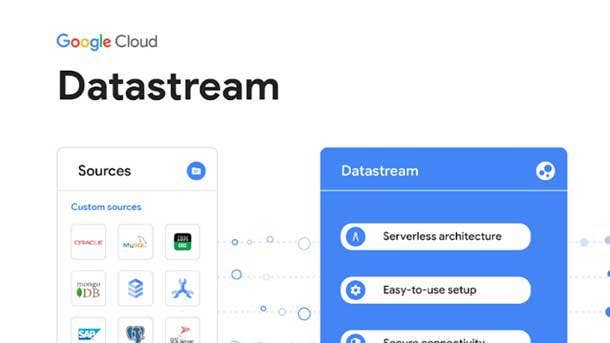

Datastream, also in preview, is a new serverless, cloud-native change data capture (CDC) and replication service with advances in multi-cloud support. Google Cloud developed it to make it easier for customers to manage all their data silos and move data across them. It allows customers to ingest data streams in real time from Oracle and MySQL databases into Google Cloud services such as BigQuery, Cloud SQL for PostgreSQL, Google Cloud Storage and Cloud Spanner.

To date, customers wanting to replicate data as needed primarily have been using batch jobs or “clunkier” kinds of CDC technology, according to Andi Gutmans, general manager and vice president of database engineering for Google Cloud.

“The problem, though, is that the existing technologies have all been very costly, very hard to manage and really lacked a lot of flexibility on what to do with the data once the data change is actually captured,” he said. “And so we set out to solve this problem.”

Since Datastream is serverless, customers don’t have to manage it like they’ve had to manage CDC services in the past, according to Gutmans.

“We seamlessly scale up and down, and we make it incredibly easy for customers to connect data from one data store to the other,” he said. “We also replicate with minimal latency, so customers who really need to make real-time decisions — whether that’s through analytics or through event-based architectures — they get those changes in real time and can act on them in real time. Last, but not least, we’ve made it very, very flexible. The output of change to this experience can either be into very specific systems like Google Cloud Storage, but we’ve also made it very open, so customers can integrate it into BigQuery, Cloud SQL, Spanner. And we have plans to add additional ways for customers to leverage these data changes to build their modern applications.”
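As a rough illustration of what change data capture means in general, the sketch below replays a stream of change events against a replica so it stays in sync with a source table. It is a toy model only, not Datastream’s API: the `ChangeEvent` class, the `apply_changes` function and the event format are hypothetical names chosen for this example.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ChangeEvent:
    """One captured change from a source table (hypothetical format)."""
    op: str                     # "insert", "update" or "delete"
    key: int                    # primary key of the affected row
    row: Optional[dict] = None  # new row contents; None for deletes


def apply_changes(replica: dict, events: list) -> dict:
    """Replay change events, in order, against an in-memory replica."""
    for event in events:
        if event.op in ("insert", "update"):
            if event.row is None:
                raise ValueError(f"{event.op} event for key {event.key} has no row")
            replica[event.key] = dict(event.row)
        elif event.op == "delete":
            replica.pop(event.key, None)
        else:
            raise ValueError(f"unknown operation: {event.op}")
    return replica


# A short stream of changes captured from a source 'orders' table.
events = [
    ChangeEvent("insert", 1, {"customer": "acme", "total": 120}),
    ChangeEvent("insert", 2, {"customer": "globex", "total": 75}),
    ChangeEvent("update", 2, {"customer": "globex", "total": 90}),
    ChangeEvent("delete", 1),
]

replica = apply_changes({}, events)
print(replica)
```

Only the changes travel to the replica, rather than a full copy of the table on every batch run, which is the contrast with batch jobs that Gutmans draws.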

Analytics Hub

Analytics Hub is a fully managed service built on BigQuery that efficiently and securely exchanges data and analytics assets across organizations. Slated to be available in preview in the third quarter, it allows data providers to control and monitor how their data is being used and enables organizations to create and access a curated library of internal and external assets, including unique datasets from Google.

“It ushers in a new era for the world of data- and insight-sharing,” said Debanjan Saha, general manager and vice president of data analytics in Google Cloud. “This is not your grandfather’s data-sharing platform. Analytics Hub allows companies to publish, discover and subscribe shared data assets. It allows publishers to create exchanges that combine unique Google data sets with commercial, industry and public datasets, as well as data sets that belong to these organizations themselves. Publishers will be able to queue their data exchanges internally and externally, and they’ll be able to view aggregated usage metrics on how popular their exchanges are, and that’s very, very important.”

While the cloud makes data sharing across organizations much more viable, doing so at scale still can be very challenging, according to Saha. Traditional data sharing requires extracting data from databases, storing it in flat files and then transmitting those files to consumers, who ingest them into another database. This results in multiple copies of data and unnecessary costs, especially when a customer has multiple petabytes of data, he said.

“Also, traditional data-sharing techniques bypass data governance processes,” Saha said. “As providers of data, how do you know...how (that) data actually (is) being used and who has access to it? If you want to monetize your data, how do you know how to manage subscriptions and entitlement? Altogether, these challenges mean that organizations are unable to realize the true potential to transform the business with data sharing.”

BigQuery, Google Cloud’s fully managed, serverless and multi-cloud data warehouse, has had cross-organizational, in-place data-sharing capabilities since its inception in 2011.

“Over the last seven days, 3,000 different organizations shared more than 200 petabytes of data using BigQuery,” Saha said. “Data sharing in BigQuery is already very, very popular, and we wanted to make it easier and even more scalable, and that’s why we built Analytics Hub.”

Google Cloud is not calling Analytics Hub a data exchange by design, according to Saha.

“Our vision is bigger than just sharing data,” he said, citing BigQuery ML and its built-in machine learning capabilities that are used by more than 80 percent of Google Cloud’s top 100 customers. “This opens it up for more opportunities, as more organizations can not only share data, but also can share machine learning models, rich, dynamic dashboards, etc.”

BigQuery Omni And Looker For Microsoft Azure

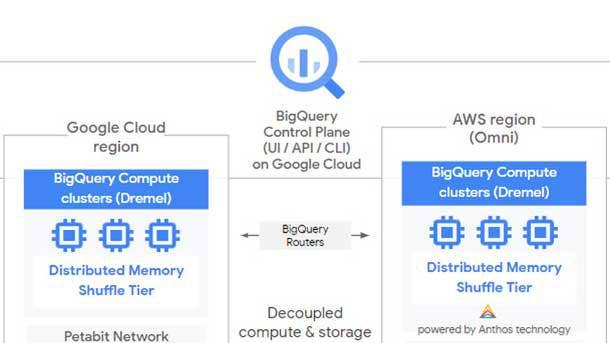

Google Cloud introduced BigQuery Omni last July as a multi-cloud analytics solution that lets customers access and securely analyze data across Google Cloud and Amazon Web Services (AWS) using standard SQL and without leaving the BigQuery user interface. It runs on clusters of Anthos (Google Cloud’s hybrid and multi-cloud application platform) that are fully managed by Google Cloud.

BigQuery Omni now also is in preview for Microsoft Azure.

“With BigQuery Omni, you don’t really have to have your data, for example, on GCP,” Saha said. “You can have it in AWS and Azure and still can use the power of BigQuery.”

Looker for Microsoft Azure also is now generally available. Looker is an enterprise platform for business intelligence, data applications and embedded analytics acquired by Google in 2019.

BigQuery ML Anomaly Detection And Dataflow Prime

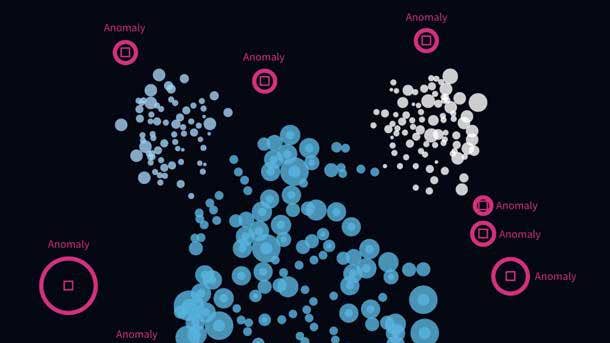

BigQuery ML Anomaly Detection, developed to allow customers to more easily detect abnormal data patterns using BigQuery’s “built-in” ML capabilities, is now generally available. Customer use cases include bank fraud detection and manufacturing defect analysis.

BigQuery ML, which became generally available in 2019, enables users to create and execute machine learning models in BigQuery by using standard SQL queries.
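To make the idea of detecting abnormal data patterns concrete, here is a minimal sketch that flags values lying far from the mean of a series, using a z-score threshold. It is not how BigQuery ML Anomaly Detection works internally, which the announcement does not describe; the `find_anomalies` function, the threshold of 2.0 and the sample figures are assumptions made for illustration.

```python
from statistics import mean, pstdev


def find_anomalies(values, threshold=2.0):
    """Return (index, value) pairs more than `threshold` standard
    deviations away from the mean of the series."""
    mu = mean(values)
    sigma = pstdev(values)
    if sigma == 0:
        # All values are identical, so nothing stands out.
        return []
    return [
        (i, v)
        for i, v in enumerate(values)
        if abs(v - mu) / sigma > threshold
    ]


# Hypothetical daily transaction totals with one suspicious spike.
daily_totals = [102.0, 98.5, 101.2, 99.8, 100.4, 97.9, 250.0, 100.9]

anomalies = find_anomalies(daily_totals)
print(anomalies)
```

A fraud-detection or defect-analysis workload of the kind mentioned above would use far richer models, but the goal is the same: surface the few records that do not fit the usual pattern.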

Dataflow, meanwhile, is a managed service for executing a wide variety of data-processing patterns. The new Dataflow Prime, which Google Cloud plans to make available in the third quarter, will embed AI and ML capabilities to provide streaming predictions, such as time series analysis; smart diagnostics that proactively identify bottlenecks; and auto-tuning for increased utilization.

Lower Entry Price For Cloud Spanner And Other Updates

Google Cloud said it will lower the entry price for Cloud Spanner, its fully managed, mission-critical relational database, by 90 percent. It will soon offer customers granular instance sizing that it says will lower the barrier to entry, while providing the same scale and reliability to support business growth.

BigQuery federation to Spanner also is coming soon. It will allow users to query transactional data residing in Spanner from BigQuery, for richer, real-time insights.

And Key Visualizer, now in preview, provides interactive monitoring that allows developers to quickly identify trends and usage patterns in Spanner.

“Spanner is just an incredible technology, which is infinitely scalable, and you can scale with zero downtime,” Gutmans said. “One of the challenges we’ve had with Spanner is the entry point…was around $600 per month. So if you’re a developer who’s trying to build casual games and checking out multiple casual games and trying to get to that successful hockey stick, the Spanner entry point was sometimes cost-prohibitive.”
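For a sense of scale, the short calculation below applies the announced 90 percent reduction to the roughly $600-per-month entry point Gutmans cites. The result is back-of-the-envelope arithmetic from those two figures, not a published price; the function name is hypothetical.

```python
def reduced_price(monthly_price, percent_cut):
    """Apply a percentage price cut, using integer percent to avoid
    floating-point drift in the subtraction."""
    return monthly_price * (100 - percent_cut) / 100


old_entry_price = 600   # approximate figure quoted by Gutmans, in USD per month
cut_percent = 90        # reduction announced by Google Cloud

new_entry_price = reduced_price(old_entry_price, cut_percent)
print(f"Estimated new entry point: about ${new_entry_price:.0f} per month")
```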