Google I/O 2021 Recap: 11 Big Product, Service And Feature Updates

Vertex AI, Multitask Unified Model technology, the LaMDA open-domain language model for dialog applications, Google Maps updates and AR Athletes on Google Search were among the highlights from the developer conference.

Google I/O wraps today, leaving developers to digest a wide variety of new product, service and feature announcements, ranging from a managed machine learning platform and other artificial intelligence-fueled advances to Google Workspace updates to new Multitask Unified Model technology.

The livestreamed conference, held virtually after being cancelled last year amid coronavirus restrictions, fielded registrations from some 200,000 developers from 181 countries, with 80 percent from outside the United States.

“I/O has always been a celebration of technology and its ability to improve lives, and I remain optimistic that technology can help us address the challenges we face together,” Alphabet and Google CEO Sundar Pichai said. “At Google, the past year has given renewed purpose to our mission to organize the world’s information and make it universally accessible and useful. We continue to approach that mission with a singular goal: Building a more helpful Google for everyone. That means being helpful in moments that matter, and it means giving you the tools to increase your knowledge, success, health and happiness.”

Here’s a look at 11 of the new product, service and feature announcements made during the Google I/O conference.

Vertex AI

Vertex AI, now generally available, is designed to help developers more easily build, deploy and scale machine learning (ML) models faster with pre-trained and custom tooling within a unified artificial intelligence (AI) platform.

The managed ML platform brings together AutoML and AI Platform into a unified API, client library and user interface. It requires almost 80 percent fewer lines of code to train a model versus competitive cloud providers’ platforms, according to Google Cloud.

Google Cloud has been working on the new product for two years to allow data scientists and ML engineers across ability levels to implement MLOps to build and manage ML projects throughout a development lifecycle. The objective of Vertex AI is to accelerate the time to ROI for Google Cloud customers, according to Craig Wiley, director of product management for Google Cloud AI.

“Two years ago, we realized there were two significant problems we had,” Wiley told CRN. “The first issue we had was that we had dozens of machine learning services for customers…and none of them worked together. We’ve been running so fast to build them all, that there was no internal compatibility. If you used product A to build something, you couldn’t then take that and do more on top of it with product B, and we realized that was a huge problem. So we committed internally that we would all go back, and we would define one set of common nouns and verbs, and we would refactor all of these services so that they were internally compatible.”

The second issue was that cloud providers and other ML platform companies were telling developers they had everything they needed to be successful, while not providing the important MLOps tools necessary for success.

In addition to completely refactoring all of its former services, Google Cloud has launched a series of new applications or capabilities around MLOps. Most are in preview, with the expectation that they’ll move into general availability in about 90 days.

Developers can more quickly deploy useful AI applications with new MLOps features such as Vertex Vizier, a black-box optimization service that helps tune hyperparameters in complex ML models; Vertex Feature Store, which provides a centralized repository for organizing, storing and serving ML features; and Vertex Experiments to accelerate the deployment of models into production with faster model selection.
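To illustrate what "black-box" hyperparameter tuning means in general, here is a minimal sketch using plain random search. It is not the Vertex Vizier API, and a managed service may well use more sample-efficient strategies. The `validation_loss` function, its two parameters and their ranges are hypothetical stand-ins for a real training run.

```python
import random


def validation_loss(learning_rate: float, num_layers: int) -> float:
    """Hypothetical stand-in for an expensive train-and-evaluate run."""
    return (learning_rate - 0.01) ** 2 * 1e4 + (num_layers - 4) ** 2


def random_search(objective, trials: int, seed: int = 0):
    """Sample random hyperparameters and keep the best result.

    The objective is treated as a black box: it is only called,
    never inspected or differentiated.
    """
    rng = random.Random(seed)
    best_params, best_loss = None, float("inf")
    for _ in range(trials):
        params = {
            # Log-uniform sample between 1e-4 and 1e-1 (assumed range).
            "learning_rate": 10 ** rng.uniform(-4, -1),
            # Integer sample between 1 and 8 inclusive (assumed range).
            "num_layers": rng.randint(1, 8),
        }
        loss = objective(**params)
        if loss < best_loss:
            best_params, best_loss = params, loss
    return best_params, best_loss


best_params, best_loss = random_search(validation_loss, trials=200)
print(best_params, best_loss)
```

The tuner only needs a function that maps hyperparameters to a score, which is why the same loop works for any model.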

Other MLOps tools include Vertex Continuous Monitoring and Vertex Pipelines to streamline the end-to-end ML workflow.

“We also are launching a series of Google ‘secret sauce’ pieces, a series of capabilities that are part of how Google is able to produce some of the output and capabilities it does -- so things like neural architecture search and our nearest neighbors matching engine and things of that nature,” Wiley said.

Multitask Unified Model (MUM)

In 2018, Google introduced Bidirectional Encoder Representations from Transformers (BERT), a neural network-based technique for natural language processing pre-training that allows anyone to train their own state-of-the-art, question-and-answering system.

With Multitask Unified Model (MUM), Google said it’s reached its next AI milestone in understanding information, advances that will be applied to Google Search.

Like BERT, it’s built on the Transformer architecture, but it “turns up the dial,” said Prabhakar Raghavan, senior vice president responsible for Google Search, Assistant, Geo, Ads, commerce and payments products (pictured above).

“MUM is 1,000 times more powerful than BERT, but what makes this technology groundbreaking is its ability to multitask in order to unlock information in new ways,” Raghavan said.

MUM can acquire deep knowledge of the world. It can understand language and generate it. It also can train across 75 languages at once, unlike most AI models, which train on one language at a time, Raghavan said.

“What makes MUM even more amazing is that it’s multimodal, which means it can simultaneously understand different forms of information like text, images and video,” he said. “We’ve already started some internal pilots to see the types of queries it might be able to solve and the billions of topics it might help you explore. We’re in the early days of exploring this new technology. We’re excited about its potential to solve more complex questions, no matter how you ask them.”

LaMDA

Pichai described LaMDA, an open-domain language model for dialog applications, as a “huge step forward in natural conversation.”

“While it’s still in research and development, we’ve been using it internally to explore novel interactions,” he said. “Natural conversations are generative, and they never take the same path twice, and LaMDA is able to carry a conversation no matter what we talk about. You can have another conversation without retraining the model.”

LaMDA’s natural conversation capabilities have the potential to make information and computing “radically more accessible and easier to use,” according to Pichai. “We look forward to incorporating better conversational features into products like Google Assistant, Search and Workspace,” he said. “We’re also exploring how to give capabilities to developers and enterprise customers.”

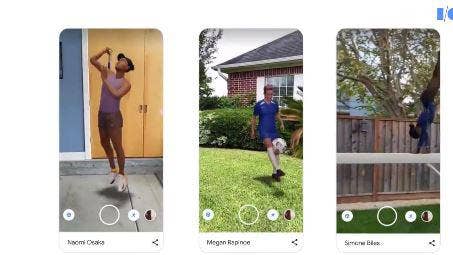

AR Athletes On Google Search

Google introduced augmented reality (AR) to Search two years ago to help people explore concepts visually, up close and in their own spaces.

“Last year, when many of us first started sheltering in place, families around the world found joy in AR,” Raghavan said. “From tigers to cars, people interacted with this feature more than 200 million times. Now, we’re bringing some of the world’s best athletes to AR, so you can see how they perform some of their most impressive feats right in front of you.”

With AR Athletes on Google Search, users can see a 3D AR depiction of how American professional soccer player Megan Rapinoe juggles a soccer ball or American gymnast Simone Biles lands a triple double -- one of her most difficult tumbling combinations -- or a double-double dismount from the beam.

“AR is a powerful tool for visual learning,” Raghavan said. “With the new AR Athletes on Google Search, you can see signature moves from your favorite athletes up close.”

New TPU v4 Chip For ML

Pichai announced Google’s next-generation TPU v4 chip, designed specifically for ML.

“Our compute infrastructure is how we drive and sustain these advances, and Tensor Processing Units (TPUs) are a big part of that,” Pichai said. “These are powered by the v4 chip, which is more than twice as fast as the v3 chip (introduced in 2018).”

TPUs are connected together into supercomputers called pods, Pichai explained, and a single v4 pod contains 4,096 v4 chips.

“Each pod has 10x the interconnect bandwidth per chip at scale compared to any other networking technology,” he said. “This makes it possible for a TPU v4 pod to deliver more than one exaFLOP -- 10 to the 18th power floating point operations per second of computing power. Think about it this way: If 10 million people were on their laptops right now, then all of those laptops put together would almost match the computing power of one exaFLOP.”
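The figures in that comparison can be checked with quick arithmetic. The sketch below uses only the numbers quoted by Pichai (one exaFLOP, 4,096 chips per pod, 10 million laptops). The per-chip value is a rough lower bound that assumes the pod delivers exactly one exaFLOP split evenly across chips, and it says nothing about numeric precision, which the keynote did not specify.

```python
# Figures quoted in the keynote.
EXAFLOP = 10**18          # floating point operations per second
CHIPS_PER_POD = 4096      # v4 chips in a single pod
LAPTOPS = 10_000_000      # laptops in Pichai's comparison

# Implied throughput per laptop if 10 million laptops add up to one exaFLOP.
flops_per_laptop = EXAFLOP / LAPTOPS

# Implied per-chip throughput if a pod delivers one exaFLOP, evenly split.
flops_per_chip = EXAFLOP / CHIPS_PER_POD

print(f"per laptop: {flops_per_laptop / 1e9:.0f} gigaFLOPS")
print(f"per chip:   {flops_per_chip / 1e12:.0f} teraFLOPS")
```

Under those assumptions, the comparison implies roughly 100 gigaFLOPS per laptop and about 244 teraFLOPS per v4 chip.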

It’s the fastest system that Google ever has deployed, according to Pichai.

“Previously, to get an exaFLOP, you needed to build a custom supercomputer,” he said. “But we already have many of these deployed today, and we’ll soon have dozens of TPU v4 pods in our data centers, many of which will be operating at or near 90 percent carbon-free energy.”

The TPU v4 pods will be available to Google Cloud customers later this year.

About This Result

Google will start rolling out its About This Result feature to all of its English-language Google Search results worldwide this month, with more languages to come. The new feature, unveiled in February, makes it easier to check out potential sources of information by providing helpful details about websites that show up in its Google Search results.

“We’re building features that make it easier for you to evaluate the credibility of information right in Google Search,” Raghavan said.

Users can tap the three dots next to each Search result to see details about the website, including its description, when it was first indexed and whether their connection to the site is secure.

“This context is especially important if it’s a site you haven’t heard of and want to learn more about it,” Raghavan said. “And later this year, we could add even more detail, like how the site describes itself, what other sources are saying about it and related articles to check out. This is part of our ongoing commitment to provide you the highest quality of results and help you evaluate information online.”

Lens Translate

Google has updated its Translate filter in Google Lens to make it easy to copy, listen to or search translated text to help students access educational content from the web in 100-plus languages.

Google Lens lets users search what they see from their cameras, photos or a Google Search bar, and people use it to translate more than a billion words every day.

“This translation feature has been especially useful for students, many of whom might be learning in the language they’re less comfortable with,” Raghavan said. “Now, thanks to our Lens team in Zurich, we’re rolling out a new capability that combines visual translation with educational content from the web to help people learn in more than 100 languages. For instance, you can easily snap a photo of a science problem, and Lens will provide learning resources in your preferred language. It brings to life the power of visual information, especially for learning.”

Google Maps Updates

Google added new routing updates to Google Maps.

Using its understanding of road and traffic conditions, Google Maps soon will give users the option of taking eco-friendly routes that are the most fuel-efficient.

“At scale, this has potential to significantly reduce car emissions and fuel consumption,” Pichai said.

Powered by AI, the new safer routing feature in Maps can identify road, weather and traffic conditions where users likely will have to suddenly brake.

“We aim to reduce up to 100 million of these events every year,” Pichai said.

Google also is using ML to provide expanded details for streets -- including road widths to scale, sidewalks, crosswalks, urban trails and pedestrian islands -- in 50 additional cities this year.

That information could be helpful for users taking young children out on a walk or essential for those using wheelchairs, according to Liz Reid, vice president of Google Search (pictured above).

The new Area Busyness feature, which will roll out globally in the coming months, will help users quickly understand how busy certain streets or neighborhoods are.

“Say you’re in Rome and want to head over to the Spanish Steps and its nearby shops,” Reid said. “With Area Busyness, you’ll be able to understand, at a glance, if it’s the right time for you to go based on how busy that part of the city is in real time. We use our industry-leading differential privacy techniques to protect anonymity in this feature.”
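Google did not detail how it applies differential privacy to Area Busyness. As a general illustration only, the sketch below shows the textbook Laplace mechanism, one common differential privacy technique, applied to a count. The visitor count, the `epsilon` value and the `noisy_count` helper are all hypothetical and are not drawn from Google's implementation.

```python
import random


def noisy_count(true_count: int, epsilon: float, rng: random.Random) -> float:
    """Release a count with Laplace noise added.

    Sensitivity is 1 because adding or removing one person changes
    the count by at most 1, so the noise scale is 1 / epsilon.
    """
    scale = 1.0 / epsilon
    # The difference of two independent exponential draws with mean
    # `scale` follows a Laplace distribution with that scale.
    noise = rng.expovariate(1 / scale) - rng.expovariate(1 / scale)
    return true_count + noise


rng = random.Random(42)
visitors = 1250  # hypothetical number of people in an area
print(noisy_count(visitors, epsilon=0.5, rng=rng))
```

A smaller `epsilon` adds more noise and gives stronger privacy, while the average over many releases still tracks the true count.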

Google also will tailor Maps to highlight the most relevant places based on times of day and whether the users are traveling.

New Live View updates, meanwhile, will include the addition of prominent virtual street signs to help users navigate complex intersections. A second new feature will give users helpful cues to show where they are in relation to key landmarks and places that are important for them, like the directions of their home, work or hotel.

“Third, we’re bringing (Live View) indoors to help you get around some of the hardest-to-navigate buildings, like airports, transit stations and malls,” Reid said. “Indoor Live View will start rolling out in top train stations and airports in Zurich this week and will come to Tokyo next month.”

Password Manager Updates

The single most common security vulnerability today is still bad passwords, and consumer research has shown that two-thirds of people admit to using the same password across accounts, which multiplies their risk, according to Jen Fitzpatrick, senior vice president of core systems and experiences at Google (pictured above).

“Ultimately, we’re on a mission to create a password-free future,” she said. “That’s why no one is doing more than we are to advance phone-based authentication. And, in the meantime, we’re focused on helping everyone use strong, unique passwords for every account.”

Fitzpatrick unveiled four new upgrades to Google Password Manager, which creates, remembers, saves and auto-fills passwords across the web, and already has been used by more than half a billion people.

The first is a new tool to make it easier to import passwords saved in files or other password managers.

“Next, we’ll have deeper integration across both Chrome and Android, so your secure passwords go with you from sites to apps,” Fitzpatrick said. “Third, automatic password alerts will let you know if we detect any of your saved passwords have become compromised in a third-party breach.”

And, with a new quick-fix feature in Chrome, Google Assistant will help users navigate directly to their compromised accounts and change their passwords in seconds.

Google Workspace And Smart Canvas

Google described Smart Canvas as the “next evolution for collaboration for Google Workspace (formerly G Suite).” Included are enhancements to Google Docs, Sheets and Slides that make them more flexible, interactive and intelligent.

“With Smart Canvas, we’re bringing together the content and connections that transform collaboration into a richer, better experience,” said Javier Soltero, vice president and general manager of Google Workspace. “For over a decade, we’ve been pushing documents away from being just digital pieces of paper and toward collaborative, linked content inspired by the web. Smart Canvas is our next big step.”

Google disclosed a dozen Google Workspace updates, including incorporating Meet directly into Docs, Sheets and Slides for the first time this fall, allowing teams to see and hear each other while collaborating. Content now can be presented in a Google Meet call on the web directly from the Doc, Sheet or Slide where a team is already working.

Other new features, including new smart chips, templates and checklists along with a pageless format in Docs and emoji reactions, will be added through the end of the year.

Currently, when a user @-mentions a person in a document, a smart chip shows information including the person’s location, job title and contact information. Now, users can insert smart chips in Docs for recommended files and meetings, allowing web and mobile users to skim associated meetings or linked documents without changing tabs or contexts. Google will introduce smart chips to Sheets in the coming months.

A smarter meeting notes template in Docs will automatically import any relevant information from a Calendar meeting invite, including smart chips for attendees and attached files. And with new table templates in Docs, teams can use top-voting tables for feedback and project-tracker tables to mark project milestones and statuses.

Checklists are now available for Docs on web and mobile, allowing users to assign checklist items to people and see those to-do list action items in Google Tasks.

The new pageless Docs format will allow users to remove page boundaries to create a surface that expands to the device or screen that they’re using to make it easier to work with wide tables, large images or detailed feedback in comments. To print or convert to a PDF, users can easily switch back to a paginated view.

Google expects to add emoji reactions to Docs in the next few months.

An assisted writing feature will suggest more inclusive language recommendations -- using the word “chairperson” rather than “chairman,” or “mail carrier” in lieu of “mailman,” for example -- and other stylistic suggestions, such as avoiding the passive voice or offensive language.

“New assisted writing capabilities in Google Workspace offer suggestions so you can communicate more effectively,” Soltero said. “Not only are we helping with language suggestions, we’re also making it easy to bring the voices and faces of your team directly into the collaboration experience to help them share ideas and solve problems together.”

Google will launch companion mode in Google Meet later this year, giving each participant their own video tile, so they can stay connected to remote colleagues, and everyone can participate in polls, chat and question-and-answer sessions in real time.

“Previously, the fully integrated experience in Google Workspace was available only to our customers, but it will soon be available to everyone, from college students, to small businesses, to friends and neighbors wanting to stay connected and get more done together,” Soltero said, noting more details will be announced in the coming weeks.

AI-Powered Dermatology Assist Tool

Google previewed an AI-powered dermatology assist tool designed to help people research and identify common skin issues with the goal of reducing skin disease. The tool uses many of the same techniques used to detect diabetic eye disease or lung cancer in CT scans.

Google sees billions of Search queries each year related to dermatologic issues, which is no surprise considering dermatologic conditions affect about 2 billion people annually, according to Dr. Karen DeSalvo, Google’s chief health officer.

“We wondered how can AI help when you’re searching and asking, ‘What is this?’” DeSalvo said. “Meet our AI-powered dermatology assist tool, a class 1, CE-marked medical device that uses machine learning to help find answers to common derm conditions right from your smartphone or computer.”

From their phone, users can upload three different photos taken from different angles of the skin, hair or nail issue that they want to learn about, and also answer some basic questions about their symptoms. An AI model handles the rest, according to DeSalvo.

“In a matter of seconds, you will have a list of possible matching dermatologic conditions, and then we can help you get relevant information to learn more,” she said. “It seems simple, but developing an effective AI model for dermatology requires the capability to interpret millions and millions of images, inclusive of a full range of skin types and tones.”

When available, the tool will be accessible from a browser and cover 288 conditions, including 90 percent of the most commonly searched dermatology-related questions on Google. The company is working to make it available on Google Search to European Union consumers as early as the end of this year.