Intel, AMD, Ampere and Nvidia Leaders on Oracle’s Next-Gen Cloud Lineup

Next year, Oracle will outfit its public infrastructure cloud with cutting-edge processors from Intel, AMD, and Ampere, and its new Nvidia A100-powered GPU platform comes online next week.

Oracle’s Next-Gen OCI Lineup

Oracle unveiled its coming lineup of cloud infrastructure services Tuesday, showcasing four new instances at the center of a cloud strategy focused on powering resource-demanding workloads with unrivaled flexibility.

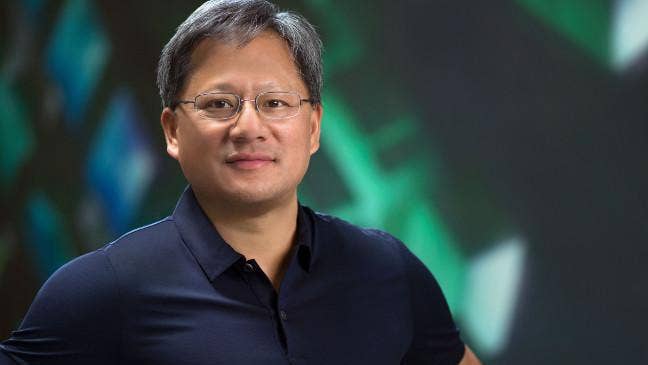

Oracle Cloud Chief Clay Magouyrk introduced the new instances in a virtual keynote joined by Intel CEO Bob Swan, AMD CEO Lisa Su, Ampere CEO Renee James, and Nvidia CEO Jensen Huang.

Next year, Oracle will outfit its public infrastructure cloud, which in six years has grown from a skunkworks project to two dozen regions globally, with cutting-edge processors from Intel, AMD, and Ampere. Its new Nvidia A100-powered GPU platform comes online next week.

Those hardware options look to improve the cost-performance dynamics for customers running high performance computing (HPC), artificial intelligence, edge and traditional workloads on Oracle Cloud Infrastructure, Magouyrk said.

Nvidia Enables Cutting-Edge AI

Next week, Oracle Cloud will make generally available its new GPU platform powered by Nvidia A100s.

The accelerated compute instances, developed with feedback from customers increasingly adopting artificial intelligence, look to differentiate from competitors in their ability to network large clusters running on bare-metal servers. Oracle also will add the option to attach up to 25 terabytes of local storage to those instances, and up to 2 terabytes of memory, Magouyrk said.

Customers can create clusters of up to 512 GPUs to train large machine learning models, he said.

Nvidia CEO Jensen Huang congratulated Oracle on the launch in a discussion with Magouyrk.

Nvidia’s data center business has been doubling every year “off of really large numbers,” Huang said, thanks to the recognition about a decade ago that “software trained with AI really wants to be accelerated with GPUs.”

While accelerated computing “starts with an amazing processor,” it also relies on software, acceleration libraries and the ML models sitting on top of those tools, Huang said.

The deep learning software propelling Nvidia’s growth is “the big bang of AI today,” Huang said.

Oracle’s unique architectural approach lets extremely large numbers of GPUs work effectively in parallel on machine learning models, moving data rapidly between nodes over Nvidia Mellanox network interfaces.

AI researchers want “the ability to iterate on very large models to make the perfect model,” Huang said.

“We’re going to put this technology in the hands of enterprise customers all over the world. We think this is the next great adventure for us and we’re really excited to do it with Oracle,” Huang said.

Intel Accelerates High Performance Computing

Based on what Oracle is seeing from large manufacturing and automotive customers like Nissan Motors, which is now running crash simulations and 3D visualizations on tens of thousands of production cores in the OCI Japan region, Oracle believes a significant migration of HPC workloads to the cloud has finally begun.

To accelerate those migrations, in early 2021 Oracle will replace its X7 instances with new X9s powered by Intel Ice Lake processors. Those latest HPC instances should boost performance by more than 30 percent from the previous generation at roughly the same cost, Magouyrk said.

Oracle and Intel have worked closely together on joint development for some 25 years, Intel CEO Bob Swan said.

That relationship is more important than ever thanks to the advent of high performance computing in the cloud.

“The integration of Intel’s technologies across Oracle Cloud and Engineered Systems is an extension of our long-term partnership at every layer of hardware and software development,” Swan said, noting Intel worked with Oracle to win the deal migrating Nissan’s on-prem workloads to Oracle infrastructure.

Oracle’s “industry first bare-metal offering” leveraging Intel’s “highly optimized Xeon silicon” will allow Oracle-platformed applications to support more users, operate on ever-larger data sets, and respond in real time to complex queries, Swan said.

“Intel and Oracle both strive to give customers the very best in flexibility, performance and cost savings,” Swan said.

Ampere Delivers Arm On The Edge

Oracle will soon introduce the first Arm instances in OCI, with chipsets provided by Ampere Computing.

Oracle will be “sticking a couple” of Ampere’s 80-core CPUs, buttressed by up to a terabyte of memory, on its next-gen Arm servers in the cloud, Magouyrk said.

Ampere CEO Renee James told Magouyrk: “We’re very focused on cloud software, not enterprise, not legacy, not backward-looking.” To achieve that goal, “it just happened Arm was the furthest along architecture for us to use,” she said.

Ampere’s 80-core approach to its chips “allows for a level of isolation and security that people are excited about, it also allows for a level of density and scalability that’s really oriented around how people sell cloud instances today,” James said.

“We believe we can offer better security, higher performance and really great value for not only yourself, but your customers,” she told Magouyrk.

Oracle will let customers of those instances choose the number of cores and the amount of memory at a fine granularity, so they can better realize the cost and power-consumption benefits that make Arm architectures well suited to enterprises investing in mobile and Internet of Things technologies, Magouyrk said.

Oracle’s cloud chief said Arm is finally seeing progress in penetrating the server and desktop markets. He credited the work AWS has done on its Graviton and Graviton2 processors, as well as Apple making Arm chips available on its new laptop line.

“Demand for server-side Arm computing is going to be astronomical,” Magouyrk said.

James said the device ecosystem, the phone ecosystem, the edge and “emerging devices becoming smart devices” are the reasons Arm has attracted such a large developer community.

Developers can now come to the OCI cloud with new or migrated apps and quickly bring to market “something that can serve to all of those devices and emerging opportunities,” she said.

AMD For General Purpose Flexibility

For enterprises looking to cost-effectively run general purpose workloads like web applications or microservice-based apps, Oracle will introduce new E4 instances, powered by AMD’s Milan third-generation Epyc processors, early next year. Those build on the E3s, which used AMD Rome-generation processors to power the first OCI instances that gave customers the flexibility to mix and match memory and CPU.

AMD CEO Lisa Su congratulated Magouyrk and the Oracle team “on driving success in innovation on price-performance.”

Oracle and AMD have collaborated for many years across hardware, software and solutions, Su said, with a strong joint focus on performance optimization at every layer of the stack.

AMD’s mission is “to develop high performance computing products to solve some of the world’s toughest challenges,” she said.