The 10 Hottest Enterprise Cloud Services Of 2020 (So Far)

Here are the 10 most impressive cloud services of the year so far, including new offerings from AWS, Google Cloud, HPE and Microsoft Azure.

New sixth-generation EC2 instances from Amazon Web Services, Google Cloud’s hybrid and multi-cloud Anthos on AWS and HPE GreenLake “building block” cloud services are among CRN’s picks for the 10 hottest enterprise cloud computing services so far in 2020.

Spending on cloud services, including IaaS, SaaS and PaaS, has increased at a 28 percent compound annual growth rate -- four times the rate of overall enterprise IT spending -- in the last four years, reaching $233 billion in 2019. It now represents about 15 percent of total IT spending in the $1.5 trillion enterprise IT market, according to research firm IDC.
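As a rough check on the IDC figures above, the arithmetic can be sketched in a few lines of Python. The variable names are illustrative, and the back-projected starting figure is an assumption that treats “the last four years” as four full compounding periods ending in 2019; it is not an IDC number.

```python
# Figures as quoted in the article (attributed to IDC); names are illustrative.
cloud_spend_2019 = 233e9        # dollars spent on cloud services in 2019
enterprise_it_market = 1.5e12   # total enterprise IT market, in dollars
cloud_cagr = 0.28               # 28 percent compound annual growth rate

# Cloud's share of the enterprise IT market ("about 15 percent").
cloud_share = cloud_spend_2019 / enterprise_it_market

# "Four times the rate" implies overall IT spending grew at a quarter of the cloud rate.
implied_overall_rate = cloud_cagr / 4

# Assumption: four full compounding periods ending in 2019.
implied_starting_spend = cloud_spend_2019 / (1 + cloud_cagr) ** 4

print(f"Cloud share of IT market: {cloud_share:.1%}")
print(f"Implied overall IT growth rate: {implied_overall_rate:.0%}")
print(f"Implied cloud spend four years earlier: ${implied_starting_spend / 1e9:.1f} billion")
```

The share works out to a little over 15 percent, consistent with the “about 15 percent” cited, and the implied growth rate for overall enterprise IT spending is roughly 7 percent a year.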

Kubernetes, the open-source container-orchestration system for automating application deployment, scaling and management, figured big in new cloud services rolled out this year, with offerings from Microsoft Azure, AWS and Red Hat, and VMware.

Here is CRN’s take on those new cloud services and others that are standing out this year.

Anthos On AWS

Google Cloud added to the capabilities of its hybrid and multi-cloud Anthos platform in April with the general availability of Anthos on AWS, which allows customers to run workloads on rival AWS’ cloud.

The news came approximately one year after Google Cloud unveiled the Kubernetes-based Anthos at its Next ’19 conference in San Francisco. Anthos allows customers to build and manage applications across environments -- in their on-premises data centers, on Google Cloud and on rival third-party clouds. Anthos on Microsoft Azure, meanwhile, is being tested with customers.

Google Cloud also announced deeper Anthos support for virtual machines (VMs) with Anthos Config Management, which allows users to extend Anthos’ management framework to the types of workloads that make up the majority of existing systems, according to Jennifer Lin, Google Cloud’s vice president of product management.

Google Cloud is working on Anthos Service Mesh support for applications running in VMs, so customers can consistently manage security and policy across different workloads in Google Cloud, on-premises and in other clouds, according to Lin.

Later this year, Google Cloud plans to give customers the ability to run Anthos with no third-party hypervisor, “delivering even better performance, further reducing costs and eliminating the management overhead of yet another vendor relationship,” Lin wrote in a blog post in April.

Google Cloud and Hewlett Packard Enterprise (HPE) last month also made generally available HPE GreenLake for Anthos, a new service that combines Google Cloud’s container hosting and management in hybrid and multi-cloud environments with HPE’s on-premises architecture.

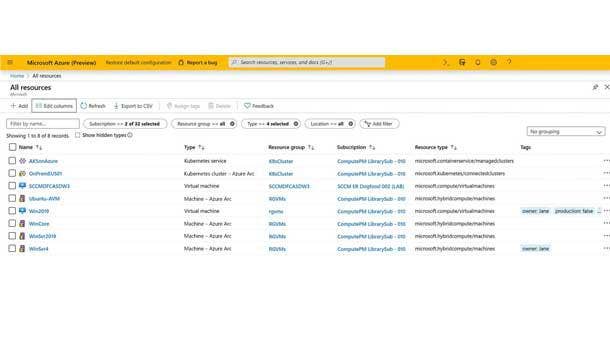

Azure Arc-Enabled Kubernetes

Microsoft heralded the public preview of Azure Arc-enabled Kubernetes during its Build 2020 developer conference in May.

Azure Arc, rolled out in public preview in November, allows for the hybrid deployment of Azure services and management to any infrastructure across datacenters, edge and multi-cloud. Azure Arc-enabled Kubernetes allows users to attach and configure any Kubernetes cluster in those same environments inside or outside of Azure. Support for most Cloud Native Computing Foundation-certified Kubernetes distributions works “out of the box.”

Azure Arc-enabled Kubernetes supports industry-standard SSL to secure data while in transit, and data is stored encrypted at rest in an Azure Cosmos DB database.

The tool, which is not recommended for production workloads, currently is available in Microsoft’s East U.S. (Virginia) and West Europe (Netherlands) cloud regions.

The next Azure Arc preview is expected to bring Azure data services -- Azure Arc-enabled SQL Managed Instance and Azure Arc-enabled PostgreSQL Hyperscale -- to Kubernetes clusters.

Google Cloud Confidential VMs

Google Cloud last month unwrapped new Confidential VMs -- the first product of its confidential computing portfolio. Confidential VMs allow users to run workloads in Google Cloud with their data encrypted while it’s in use and being processed, not just at rest and in transit.

Available in beta for Google Compute Engine, the solution helps remove cloud adoption barriers for customers in highly regulated industries, according to Google Cloud CEO Thomas Kurian.

Vint Cerf, Google’s chief internet “evangelist,” called confidential computing a “game-changer” that has the potential to transform the way organizations process data in the cloud, while significantly improving confidentiality and privacy.

Google Cloud already employed a variety of isolation and sandboxing techniques as part of its cloud infrastructure to help make its multi-tenant architecture secure, but “confidential VMs take this to the next level by offering memory encryption, so that you can further isolate your workloads in the cloud,” according to a blog post by Google Cloud senior product manager Nelly Porter, confidential computing engineering director Gilad Golan and Sam Lugani, lead security product marketing manager for G Suite and the Google Cloud Platform (GCP).

Confidential computing can unlock computing scenarios that have previously not been possible, according to the trio, and organizations now can share confidential data sets and collaborate on research in the cloud while preserving confidentiality.

The Confidential VMs are based on Google Cloud’s N2D series instances and leverage AMD’s Secure Encrypted Virtualization feature supported by its 2nd Gen AMD EPYC CPUs. Dedicated per-VM encryption keys are generated in hardware and are not exportable.

“We worked closely with the AMD Cloud Solution engineering team to help ensure that the VMs’ memory encryption doesn’t interfere with workload performance,” the blog post stated. “We added support for new OSS (open-source software) drivers -- NVMe and gVNIC -- to handle storage traffic and network traffic with higher throughput than older protocols. This helps ensure that the performance metrics of Confidential VMs are close to those of non-confidential VMs.”

HPE GreenLake Cloud Services

HPE took a “huge step forward” with its GreenLake pay-per-use channel model in June, moving from a bespoke, customized model to a standardized group of 17 “building block” offerings for small, medium and large businesses.

The new HPE GreenLake cloud services offerings -- which include container management, machine learning (ML) operations, data protection, networking, VMs, storage and compute -- will help customers modernize their applications and data in the environments of their choice, from the edge to the cloud. Based on pre-integrated building blocks, they’re being sold in small to large configurations.

The building blocks offering should allow partners to reduce the sales cycle to 14 days or less from price quote to delivery, from what previously took as long as six months, according to HPE.

The company also brought the full GreenLake Cloud experience to HPE.com, allowing customers to try and price packages ranging from disaster recovery/backup to private cloud and containers as a service, and then purchase them through HPE partners.

“This is really built for the channel,” HPE senior vice president Keith White told CRN’s Steven Burke. “This gives them much more standardized opportunities to deliver these services, such as virtual machines or containers or machine-learning-type capabilities, depending on what they want to deliver to their customer set. It gives them that flexibility.”

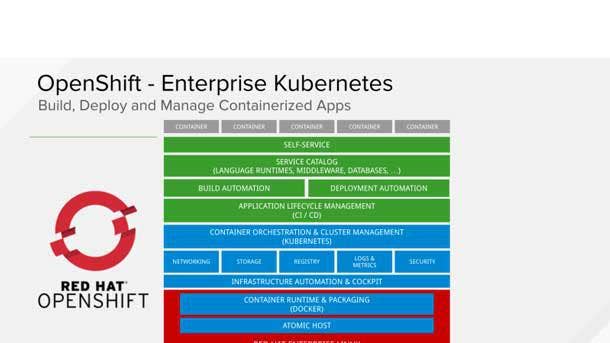

Amazon Red Hat OpenShift

AWS and Red Hat extended their partnership with May’s introduction of Amazon Red Hat OpenShift, a jointly managed and supported enterprise service that combines AWS-native capabilities with Red Hat’s enterprise Kubernetes platform.

The fully managed service is designed to help IT organizations more quickly build and deploy containerized applications in AWS on Red Hat’s Kubernetes platform for hybrid deployments using the same tools and APIs. The joint offering integrates with AWS’ 175-plus cloud services, and it’s the first that allows enterprises to provision, license and bill for OpenShift through the AWS console, Sathish Balakrishnan, Red Hat’s vice president for hosted platforms, told CRN’s Joseph Tsidulko.

Red Hat expects to preview Amazon Red Hat OpenShift in the coming months and make it publicly available in the second half of 2020. To leverage OpenShift on AWS today, enterprises can use Red Hat OpenShift Dedicated, a fully managed service from Red Hat available on AWS, or OpenShift Container Platform, a version they could either self-install and self-manage, or implement through a systems integrator, Tsidulko reported.

The new solution will give partners who don’t have Kubernetes specialists and resources to create container clusters a chance to work in that market by allowing them to focus exclusively on higher-order business priorities and applications, Balakrishnan said.

Expanded VMware Tanzu Portfolio

VMware in March introduced its expanded VMware Tanzu portfolio of Kubernetes-based services and VMware Cloud Foundation 4 with Tanzu.

The expanded VMware Tanzu portfolio allows enterprises to adopt cloud-native technologies and automate the application lifecycle on any cloud. The portfolio’s first products include VMware Tanzu Kubernetes Grid, which helps customers install and run a multi-cluster Kubernetes environment on any infrastructure; VMware Tanzu Mission Control, a centralized management platform for operating and securing Kubernetes infrastructure and modern applications across multiple teams and clouds that was previewed last August; and VMware Tanzu Application Catalog. The latter, originally previewed as Project Galleon last August, features customizable, open-source software from the Bitnami catalog that is verifiably secured, tested and maintained for use in production environments.

VMware, which closed its $2.7 billion acquisition of Pivotal Software in December, also rebranded Pivotal Application Service as Tanzu Application Service, Wavefront by VMware as Tanzu Observability by Wavefront, and NSX Service Mesh as Tanzu Service Mesh.

Meanwhile, VMware Cloud Foundation 4 with Tanzu, an automated hybrid cloud platform, now supports traditional VM- and container-based applications featuring the new VMware Cloud Foundation Services using Tanzu Kubernetes Grid and the re-architected VMware vSphere 7, which has direct integration of the container orchestrator into the vSphere virtualization platform.

Commenting during a conference call, VMware chief operating officer Sanjay Poonen said the new products represent a major leap for the company in executing a strategy formulated years ago to offer truly hybrid and multi-cloud infrastructure. VMware aims to offer the industry’s best Kubernetes platforms as containers blur “much of the world we invented for virtualization,” he said, according to a report by CRN’s Tsidulko.

Amazon Honeycode

AWS last month released a beta of Amazon Honeycode, which allows customers to build mobile and web applications without writing programming code.

Amazon Honeycode allows customers to bypass hiring developers -- or resorting to emailing spreadsheets or documents -- to build often-costly custom applications for tasks ranging from approving purchase orders, managing inventory and conducting simple surveys, to managing complex project workflows for multiple teams or departments, according to AWS.

Designed for non-professional “citizen” developers who are end users inside enterprises, the fully managed service combines the familiar interface of a spreadsheet with the data management capability of an AWS-built database. Customers can use Amazon Honeycode’s visual application builder to create interactive web and mobile apps to perform tasks including tracking data over time, notifying users of changes, routing approvals and facilitating interactive business processes.

With the release, AWS joins Microsoft and Google Cloud in the no-code application competition.

AWS’ 6th-Gen EC2 Instances

AWS’ new sixth-generation Amazon EC2 C6g and R6g instances, launched in June, are powered by Arm-based AWS Graviton2 processors.

AWS designed the compute-optimized C6g instances for compute-intensive workloads, including high-performance computing, batch processing, video encoding, gaming, scientific modeling, distributed analytics, ad-serving and CPU-based ML inference. The memory-optimized R6g instances are designed for workloads that process large data sets in memory, including open-source databases such as MySQL, MariaDB and PostgreSQL, in-memory caches such as Redis, Memcached and KeyDB, and real-time, big data analytics.

AWS CEO Andy Jassy tweeted about the new EC2 instances when they were released: “Excited to bring customers the next gen of Amazon EC2 instances powered by #AWS-designed, Arm-based Graviton2 processors that deliver up to 40% better price/performance than comparable current x86 instances. Should be a game changer!”

The new instances deliver up to 40 percent better price/performance than comparable current-generation x86-based Amazon EC2 C5 instances, according to AWS. Built on the AWS Nitro System of AWS-designed hardware and software, they’re each available in nine instance types, including bare metal.

James Hamilton, vice president and distinguished engineer at AWS, said even he was slightly surprised by the Graviton performance -- “despite the fact that I was there on day one.”

“We have up to 64 cores, up to 25 gigabits per second of enhanced networking and up to 19 gigabits per second of EBS (Elastic Block Store) storage bandwidth,” Hamilton said during his announcement of the new instances.

The Arm-based AWS Graviton2 processors allow for up to 7x greater performance, 4x more compute cores and 5x faster memory than the Arm-based EC2 A1 instances powered by AWS’ first-generation Graviton processor released a year ago.

The Amazon EC2 C6g and R6g instances are available in AWS’ U.S. East (northern Virginia and Ohio), U.S. West (Oregon), Europe (Frankfurt), Europe (Ireland) and Asia Pacific (Tokyo) cloud regions.

Native Google Cloud VMware Engine

The native Google Cloud VMware Engine, an integrated, first-party solution for customers to migrate their VMware applications to Google Cloud without refactoring or rewriting them, was announced in May and released at the end of June.

The new offering provides a fully managed VMware Cloud Foundation stack -- including vSphere, vCenter, vSAN, NSX-T and HCX -- in a dedicated environment on Google Cloud’s infrastructure for enterprise production workloads. It allows customers to provision a VMware software-defined data center (SDDC) with “just a few clicks” and, 30 minutes later, have a fully functional SDDC that’s come directly from the Google Cloud Console, according to June Yang, general manager of Google Cloud’s compute business. Customers gain access to the No. 3 cloud provider’s services, including the hybrid and multi-cloud Anthos platform, BigQuery, Cloud AI, its operations suite and Google Cloud Storage.

“As we look at many enterprises, they have a fairly sizable VMware on-premise environment to power their various types of applications, from ERP to CRM to databases, etc.,” Yang told CRN. “We want to make this really easy for customers to be able to migrate these workloads…to the cloud with really minimal change, so that they can focus on running their applications without managing their overall infrastructure.”

In July 2019, Google Cloud opened early customer access to Google Cloud VMware Solution by CloudSimple, a startup focused on helping VMware customers easily migrate workloads to the cloud. The VMware-as-a-service offering, which leveraged VMware Cloud Foundation running on GCP, was listed on the GCP Marketplace late last year. Google Cloud then acquired CloudSimple in November.

“Essentially we have taken the CloudSimple technology and enhanced it, and made it native to Google Cloud, so now it’s a first-party service offering that’s really operated and run by Google,” Yang said.

Google Cloud VMware Engine is part of Google Cloud’s enterprise focus under Kurian.

“We have worked very closely to really enable a customer to move their SAP, Oracle and Microsoft workloads onto (Google Cloud), and VMware environments really are one additional significant piece of this particular puzzle,” Yang said, noting there are approximately 70 million VMs running on VMware, and the majority are sitting on premises. “A number of them is going to move onto the cloud, and we expect a good chunk of those will land on Google Cloud.”

The service so far is available in Google Cloud’s U.S. East4 (Ashburn, Virginia) and U.S. West2 (Los Angeles) regions.

Oracle Dedicated Region Cloud@Customer

Oracle last month introduced the newest offering for Oracle Cloud@Customer, its on-premises cloud service: Oracle Dedicated Region Cloud@Customer.

Oracle Dedicated Region Cloud@Customer is billed as a fully managed cloud region that brings all of Oracle’s 50-plus cloud services -- including Oracle Autonomous Database -- to customers’ data centers, along with the same APIs, service-level agreements and security available from Oracle’s public cloud regions.

“You get your own region in your own data center with the same economics and fully managed by Oracle,” Vinay Kumar, an Oracle Cloud vice president, told CRN’s Tsidulko.

Oracle is looking to advance its position in a hybrid cloud market increasingly contested by hyper-scalers, but competitive on-premises offerings such as AWS Outposts or Microsoft Azure Stack only make available a small subset of the services available in the AWS or Microsoft Azure public clouds, according to Kumar.

“It’s never the same,” he said. “This is a radically different approach to offering cloud like no other providers have even tried to do it.”

Oracle Dedicated Region Cloud@Customer requires a minimum commitment of $500,000 per month for a three-year term.
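To put that minimum in perspective, the total over the full term can be worked out as below. This is a back-of-the-envelope sketch that assumes the quoted monthly minimum stays flat for all three years; the variable names are illustrative, and actual contract terms may differ.

```python
# Minimum commitment as quoted in the article; names are illustrative.
monthly_minimum = 500_000   # dollars per month
term_years = 3

# Assumption: the monthly minimum is flat across the whole term.
term_months = term_years * 12
total_minimum_commitment = monthly_minimum * term_months

print(f"Minimum commitment over {term_months} months: ${total_minimum_commitment:,}")
```

Under that assumption, the floor for a three-year engagement comes to $18 million.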