AMD Ups Gaming Wow Factor With Cinema 2.0

When Rick Bergman and Advanced Micro Devices introduced "Cinema 2.0" in San Francisco on June 16, skeptics may have wondered if moviemaking hadn't already leapt several versions past that rather unflattering designation. The advent of color, sound, video and computer-generated effects -- not to mention the original shift from hand-cranked Kinematoscopes and nickelodeons to modern projectors -- could all be said to be worthy of game-changing status.

But let's put aside the notion that we're probably already at Cinema 8.0 or higher and humor AMD's marketing team for a moment. The cinematic revolution proposed by Bergman, general manager of the chip maker's Graphics Product Group, involves nothing short of marrying the superior visual quality of filmed entertainment with the user participation of video gaming, all in real-time and powered by hardware and software being developed by AMD and its partners.

Credit AMD for historical perspective -- part of the Cinema 2.0 event was a walk down gaming's memory lane. The Sunnyvale, Calif.-based chip maker outfitted a room off the main floor with about 20 working gaming rigs, from first-generation consoles like Pong and the Atari 2600 through to the Xbox 360 and Nintendo Wii.

Event attendees were invited to relive childhood memories on the ColecoVision, Sega Dreamcast or as any of the evolving Marios on Nintendo's various consoles.

"It's amazing how the gaming industry has progressed from Pong back in 1972," said Bergman, who came to AMD with the chip maker's acquisition of graphics house ATI in late 2006. "When I was growing up in New Jersey, I worked at the amusement park Fun City and next door there was a bowling alley. Now my 14-year-old daughter can go bowling in our living room with the Nintendo Wii."

As the director of developer relations at AMD and a presenter at the Cinema 2.0 event, Neil Robison lives on the cutting edge of graphics and gaming. But that didn't stop him from firing up Mario Kart 64 on the Nintendo 64 console provided by the hosts.

"The Holy Grail of Cinema 2.0 is taking the visual fidelity of films and pairing that with the interactivity of games," Robison said, not long after dredging up assorted 4-, 8- and 16-bit memories in the console room. While gaming enthusiasts themselves may think the industry is five to 10 years away from achieving that goal, according to Robison, "we're a lot closer than these gamers think with Cinema 2.0."

"A lot of things have happened in cinema in the past few years. If you look at CGI today, animation is absolutely indistinguishable from the real thing, whether it's cities or in some cases the actors themselves," said Bergman, holding up AMD's dime-size next-generation graphics processor, codenamed RV770. "But it's lacking one thing. You're passive when you watch films. The game industry is interactive. But there are also limitations in video games. They don't match the visuals of the cinema. Yet.

"In both mediums, the content directors want to take their medium to that next level. Unfortunately, until today, they just didn't have the technology," he said, strongly implying that the RV770 is just the ticket to blurring all lines between film and games.

"My role, really, is to make technology disappear," said AMD's Charlie Boswell, describing his work with film directors like Robert Rodriguez and George Lucas as the chip maker's director of Digital Media & Entertainment. The point seems sound enough -- as Rodriguez attested in a video clip, he's interested in making his cinematic ideas a visual reality as quickly and as painlessly as possible, as stripped of technological barriers as the simple act of putting pencil to paper.

To that end, Boswell would seem to have his work cut out for him in making the technology completely disappear. You know, what with the giant pulsing chip logos that seem to follow AMD around wherever it goes, as pictured here.

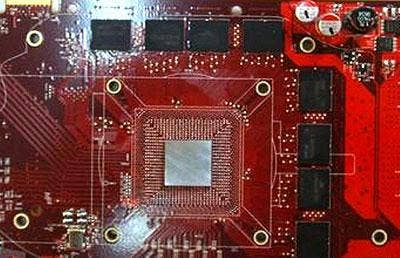

Hardware like AMD's ATI Radeon HD 3870 and HD 3870 X2 graphics cards, pictured left, is part of what's driving the current explosion of high-fidelity PC games. On the software side, developers like Jules Arbach of OTOY and pre-visualization artist Daniel Gregoire are pushing real-time visual fidelity in gaming to levels that match those of film.

"My background is from the video game side of things. I never imagined we would one day be this close to the convergence of these film and game worlds," said Arbach, who appeared at the Cinema 2.0 event Monday.

A leaked shot of a portion of AMD's next-gen RV770 graphics card, which OTOY's Arbach said has enabled him to develop real software tools in areas of visual computing where developers have had to jerry-rig cheats up until now.

"We had tricks to do ray tracing in the past. They were tricks, but nevertheless it was real-looking and heuristic," he said. "But I think that today, at this point, with the imminent launch of new hardware, we're going to be able to do these things we've only imagined before. Like real ray tracing without cheating. I'm surprised you can do that on the GPU, but the rate at which these things have been improving has been phenomenal."

AMD's "Ruby" character had her eyes on the Cinema 2.0 proceedings as the comparison between the 2003 "Ruby: Double Cross" ATI graphics demo and a new one devised by Jules Arbach drew oohs and aahs from the crowd.

The original Ruby demo was pretty exciting for its time, but it's blown completely out of the water by the new demo screened at Cinema 2.0. The crazy thing, aside from her weird ATI dog collar, is that "Ruby: Double Cross" came out only five years ago, yet it already seems closer to Pong than to what Jules Arbach's team has come up with. Even "Ruby: Whiteout," the ATI demo from last year, seems antiquated by comparison.

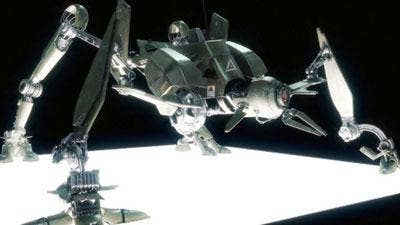

Here's a shot of the Cinema 2.0 version of Ruby, from a brief snippet of a new demo from Jules Arbach and his OTOY team. The demo shows cinema-quality digital images rendered in real-time with interactivity. The clip, constructed as if shot from a handheld video camera, also showcases modern cinematic features like frame shaking, quick zooms and on-the-fly focus adjustments. AMD will release a video of the full Ruby Cinema 2.0 demo this summer.

The monster in the new Ruby demo is what you might expect from a big-budget action flick, and its surroundings are almost indistinguishable from an actual filmed city street. Yet none of this is real and, what's more, it was all rendered in real-time by a single PC equipped with two ATI RV770 graphics cards, an AMD Phenom X4 9850 processor and an AMD 790FX chipset.

A detailed view of the robot that serves as Ruby's nemesis in the new AMD Cinema 2.0 demo. AMD describes the "Cinema 2.0 experience" as something that "punches a sizeable hole in the sensory barrier that separates today's visionary content creators and the interactive experiences they desire to create for audiences around the world."

Another Cinema 2.0 demo from AMD, "Bug Snuff," showcases a scorpion in a terrarium. In this frame capture of the scene, which is rendered interactively in real-time, note particularly how the light playing through the scorpion's translucent body takes the realism to a whole new level.

"We did six or seven or eight ads for 'Transformers,' all directed in real-time," said Arbach, describing what his company's been up to over the past year or so. "Paramount asked us to actually render ILM's models in real-time. And when given those assets, we were able to take Starscream, these giant assets and render them in real time.

"We're doing it with this incredible fidelity. We did the same thing with the Spider-Man 3 assets," Arbach said. "We're not quite there yet. Where all this is leading is, how do you get people to look real? It's the next frontier and I think the hardware we have today is going to take us there. The convergence of this hardware and a little more work on the software side is going to make it happen."