8 Big Intel Data Center Announcements: From Cooper Lake To Xe

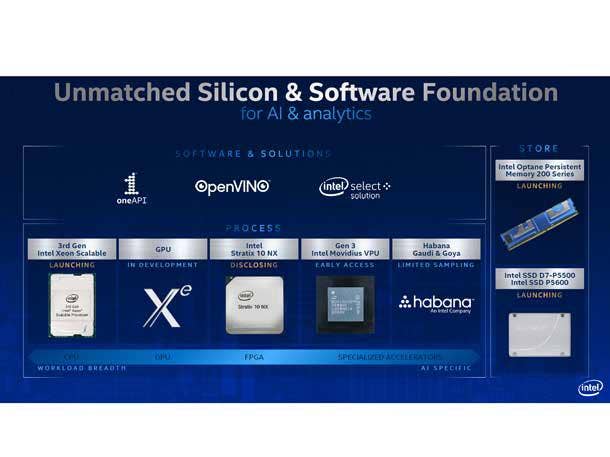

The semiconductor giant just made several announcements for its data center business, including the launch of its new Xeon Scalable processors and Optane Persistent Memory modules as well as new disclosures around Intel's upcoming Xe GPUs and Habana AI processors.

Updates, Launches Galore For Intel's Data Center Biz

Intel unleashed its next generation of data center products Thursday, consisting of a new set of Xeon Scalable processors code-named Cooper Lake, Optane Persistent Memory modules and SSDs.

But the Santa Clara, Calif.-based company also provided an update on more products coming out in the future, including a new generation of Xeon processors code-named Sapphire Rapids and Xe data center GPUs, the latter of which are expected to create more competition for rivals Nvidia and AMD.

The company also disclosed a new artificial intelligence-focused FPGA called the Intel Stratix 10 NX and provided more details on its third-generation Movidius visual processing unit and Gaudi and Goya AI processors in development from Intel's $2 billion acquisition of Habana Labs last year.

What follows are the eight biggest data center announcements Intel made during a mid-June briefing.

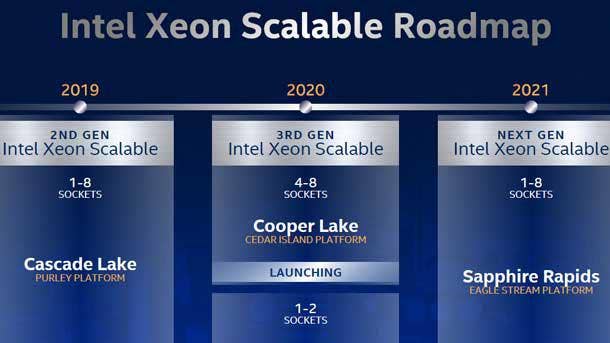

Third-Gen Intel Xeon Scalable Processors

Intel is promising performance gains and new artificial intelligence capabilities with its third-generation Xeon Scalable processors, but they will only be available for four- and eight-socket servers.

The 11 new SKUs cover nearly the entire stack of Xeon Scalable processors, from Xeon Platinum to Xeon Gold, and support up to six channels of DDR4-3200 memory with ECC support, up to 3.1 GHz in base frequency, up to 4.3 GHz in single-core turbo frequency and up to 38.5 MB of L3 cache. Other features include six-way Intel Ultra Path Interconnect support for up to 10.4 gigatransfers per second, Intel Turbo Boost 2.0 Technology and Intel Hyper-Threading Technology.

While third-generation Xeon Scalable will initially support only four- and eight-socket servers, it will expand to one- and two-socket servers later this year with the launch of Intel's Ice Lake CPUs.

The headline feature for Cooper Lake, however, is an expansion of the processors' Deep Learning Boost capabilities that were introduced with second-generation Xeon Scalable. The new processors will support an additional instruction set for built-in AI acceleration called Bfloat16, which Intel said boosts training performance 1.93 times and inference performance 1.9 times compared to previous generation processors performing single-precision floating point math, also known as FP32.
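For readers unfamiliar with the format, bfloat16 keeps the sign bit and all 8 exponent bits of an FP32 value but only 7 of its 23 mantissa bits, so a bfloat16 value is effectively the upper half of an FP32 value. The Python sketch below illustrates that layout by simple truncation. The function names are hypothetical, and it is not a model of Intel's instructions: hardware conversion typically rounds rather than truncates.

```python
import struct

def fp32_to_bf16_bits(value: float) -> int:
    """Return a 16-bit bfloat16 pattern for value, by truncation (illustrative only)."""
    # Pack as IEEE 754 single precision, then reinterpret as a 32-bit integer.
    (fp32_bits,) = struct.unpack("<I", struct.pack("<f", value))
    # bfloat16 is the upper 16 bits: 1 sign bit, 8 exponent bits, 7 mantissa bits.
    return fp32_bits >> 16

def bf16_bits_to_fp32(bits: int) -> float:
    """Expand a bfloat16 bit pattern back to a Python float."""
    # Pad the 16 dropped mantissa bits with zeros.
    (value,) = struct.unpack("<f", struct.pack("<I", (bits & 0xFFFF) << 16))
    return value

pi_bits = fp32_to_bf16_bits(3.14159265)
print(hex(pi_bits), bf16_bits_to_fp32(pi_bits))
```

Run as written, the value 3.14159265 comes back as 3.140625: the range of FP32 is preserved, but only two to three significant decimal digits survive, which is the trade-off that makes the format cheaper to compute with.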

The new Platinum processors consist of Xeon Platinum 8380HL (2.9 GHz base, 4.3 GHz turbo, 28 cores, 56 threads, 250W thermal design power, $13,012 recommended customer pricing), Xeon Platinum 8380H (2.9 GHz base, 4.3 GHz turbo, 28 cores, 56 threads, 250W TDP, $10,009 RCP), Xeon Platinum 8376HL (2.6 GHz base, 4.3 GHz turbo, 28 cores, 56 threads, 205W TDP, $11,722 RCP), Xeon Platinum 8376H (2.6 GHz base, 4.3 GHz turbo, 28 cores, 56 threads, 205W TDP, $8,719 RCP), Xeon Platinum 8354H (3.1 GHz base, 4.3 GHz turbo, 18 cores, 36 threads, 205W TDP, $3,500 RCP) and Xeon Platinum 8353H (2.5 GHz base, 3.8 GHz turbo, 18 cores, 36 threads, 150W TDP, $3,004 RCP).

The new Gold processors consist of Xeon Gold 6348H (2.3 GHz base, 4.2 GHz turbo, 24 cores, 48 threads, 165W TDP, $2,700 RCP), Xeon Gold 6328HL (2.8 GHz base, 4.3 GHz turbo, 16 cores, 32 threads, 165W TDP, $4,779 RCP), Xeon Gold 6328H (2.8 GHz base, 4.3 GHz turbo, 16 cores, 32 threads, 165W TDP, $1,776 RCP), Xeon Gold 5320H (2.4 GHz base, 4.2 GHz turbo, 20 cores, 40 threads, 150W TDP, $1,555 RCP) and Xeon Gold 5318H (2.5 GHz base, 3.8 GHz turbo, 18 cores, 36 threads, 150W TDP, $1,273 RCP).

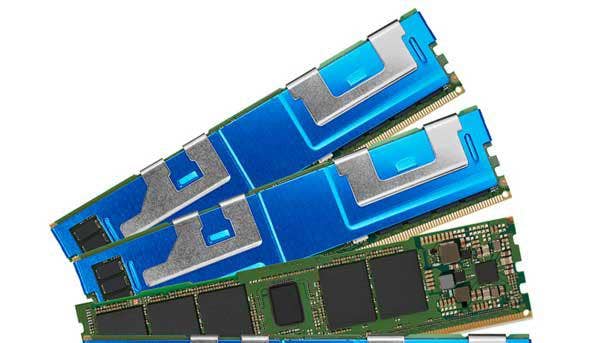

Intel Optane Persistent Memory 200 Series

To complement the launch of Intel's third-generation Xeon Scalable processors, the company is putting out a new generation of its Optane Persistent Memory.

With the new generation, called the Optane Persistent Memory 200 Series, the chipmaker is promising an average of 25 percent more memory bandwidth than the first generation, with support for capacities of up to 512 GB per module in a thermal design power envelope that ranges from 12 to 15 watts. The company launched its first generation of Optane Persistent Memory last year, introducing a new tier of memory that combines the persistent qualities of storage with performance that nearly rivals DRAM.

The new Optane Persistent Memory, which is supported only by the new Xeon Scalable processors, is available in 128 GB, 256 GB and 512 GB modules that sit alongside traditional DDR4 DIMMs on a server motherboard. Compatible motherboards can hold up to six Optane modules per socket, enabling up to 3 TB of persistent memory per socket and a total memory capacity of 4.5 TB per socket.
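The per-socket figures follow from simple arithmetic, sketched below in Python. The function names and the 1,024 GB-per-TB convention are assumptions made for illustration. The DRAM share is not stated by Intel here; it is only what the 4.5 TB total implies once the Optane capacity is subtracted.

```python
GB_PER_TB = 1024  # assumed convention; not specified in Intel's announcement

def persistent_memory_tb(modules_per_socket: int, module_gb: int) -> float:
    """Persistent memory per socket, in TB, for identical modules."""
    return modules_per_socket * module_gb / GB_PER_TB

def implied_dram_tb(total_tb: float, modules_per_socket: int, module_gb: int) -> float:
    """DRAM capacity implied by a quoted total (derived, not an Intel figure)."""
    return total_tb - persistent_memory_tb(modules_per_socket, module_gb)

# Six 512 GB Optane modules per socket, as quoted above.
print(persistent_memory_tb(6, 512))   # TB of persistent memory per socket
print(implied_dram_tb(4.5, 6, 512))   # TB of DRAM implied by the 4.5 TB total
```

Under these assumptions, six 512 GB modules account for the full 3 TB of persistent memory, leaving 1.5 TB of the quoted total to come from DRAM.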

Intel said the new generation of Optane Persistent Memory provides more than 225 times faster access to data versus a mainstream NAND SSD made by the company. This can have benefits across three application types: database, AI and analytics, and virtualized infrastructure.

When paired with the new Xeon Scalable processors, Optane Persistent Memory can improve performance of SAP in-memory databases by up to 2.4 times versus a three-year-old server while speeding up Apache Spark analytics workloads by up to 8 times versus a system using only DRAM and hard disk drive storage, according to Intel.

Intel SSD D7-P5500, P5600

Intel is upgrading its 3D NAND storage portfolio for servers with two new U.2 NVMe SSDs, the D7-P5500 and D7-P5600, supporting up to 7.68 TB of storage.

The chipmaker called the new SSDs its most advanced triple-level cell, or TLC, 3D NAND storage yet, providing up to 40 percent lower latency and 33 percent more performance than the company's previous generation of storage products. The D7-P5500 comes in 1.92 TB, 3.84 TB and 7.68 TB capacities rated at one drive write per day, while the D7-P5600 comes in 1.6 TB, 3.2 TB and 6.4 TB capacities rated at three drive writes per day.
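A drive-writes-per-day rating translates into a daily write budget by multiplying it by the drive's capacity. The Python sketch below works that out for the largest model in each line. The function names are hypothetical, and the five-year rating period in the last two lines is an assumed value for illustration only, since the announcement as described here does not give one.

```python
def daily_write_budget_tb(capacity_tb: float, dwpd: float) -> float:
    """TB that can be written per day at the rated drive writes per day."""
    return capacity_tb * dwpd

def lifetime_writes_pb(capacity_tb: float, dwpd: float, rating_years: float) -> float:
    """Total PB written over an assumed rating period (1 PB = 1,000 TB)."""
    return daily_write_budget_tb(capacity_tb, dwpd) * 365 * rating_years / 1000

# Largest capacity in each line, with the ratings quoted above.
print(round(daily_write_budget_tb(7.68, 1), 2))  # D7-P5500, one drive write per day
print(round(daily_write_budget_tb(6.4, 3), 2))   # D7-P5600, three drive writes per day

# rating_years=5 is an assumption, not a figure from Intel's announcement.
print(round(lifetime_writes_pb(7.68, 1, rating_years=5), 3))
print(round(lifetime_writes_pb(6.4, 3, rating_years=5), 3))
```

The comparison shows why the D7-P5600 trades capacity for endurance: despite being smaller, the 6.4 TB model is rated for 19.2 TB of writes per day against 7.68 TB for the largest D7-P5500.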

The D7-P5500 and D7-P5600 come with support for PCIe 4.0 connectivity and features like dynamic namespace management for increased user counts and scalability and SMART monitoring for reporting drive health status without impacting throughput. They also come with security features like TCG Opal 2.0 and built-in AES-XTS 256-bit encryption.

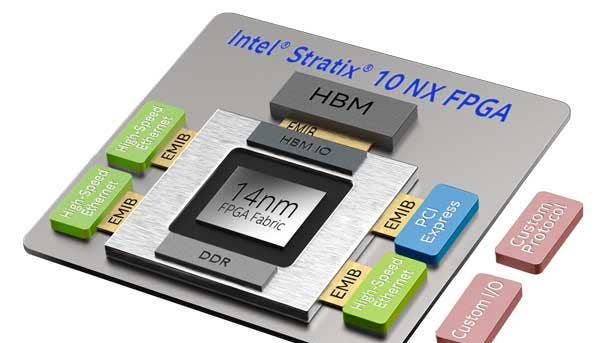

Intel Stratix 10 NX FPGA

Intel is pitching its new Intel Stratix 10 NX as the chipmaker's first FPGA that is optimized for artificial intelligence, providing high bandwidth and low latency for those who need customizable hardware.

The chipmaker said the Stratix 10 NX, which will start shipping later this year, provides up to 15 times more INT8 performance than Intel's existing Stratix 10 MX, thanks to the FPGA's new AI Tensor Blocks, which are built around the precision math formats commonly used to accelerate AI applications.

The company said the Stratix 10 NX can also deliver big performance gains over Nvidia's V100 GPU, with up to 2.3 times faster BERT batch 1 performance for natural language processing, up to 9.5 times faster LSTM batch 1 performance for fraud detection and up to 3.8 times faster ResNet50 batch 1 performance for image recognition.

The Stratix 10 NX comes with integrated high-bandwidth memory and embedded memory hierarchy to ensure model persistence. It also supports high-bandwidth networking, sporting up to 57.8 Gbps PAM4 transceivers that allow multiple Stratix 10 NX chips to be pooled together for multi-node designs.

Sapphire Rapids CPUs, Movidius, Xe GPU, Habana AI Processors

While Intel focused most of its attention on new Xeon, Optane, Stratix and SSD products for its data center update, the chipmaker provided some updates on future products.

For Intel's next generation of Xeon processors, code-named Sapphire Rapids, company executive Lisa Spelman told reporters that the chipmaker plans to launch the new CPUs next year for servers ranging from one to eight sockets. She said the company has successfully powered on its first Sapphire Rapids processor, a major milestone, and that the new lineup will include expanded artificial intelligence capabilities, namely a new feature called Intel Advanced Matrix Extensions, or AMX.

"It will further increase the training and the inference performance as well and continue to improve that total cost of ownership for our customers," said Spelman, who is vice president and general manager of Intel's Xeon and Memory Group.

On the GPU front, Spelman said that some partners are testing the chipmaker's upcoming line of Xe GPUs through the company's OneAPI DevCloud, which allows developers to test different kinds of hardware on different kinds of workloads. The company's Xe GPU architecture is expected to yield products across multiple segments, including data center and gaming.

Spelman did not provide an updated timeline for the Xe GPU products, but the company has previously said that it would launch a 7-nanometer data center GPU in 2021.

As for Habana, the new artificial intelligence hardware unit created through a $2 billion acquisition last year, Intel is sampling the division's deep learning training processors with large cloud service providers. In addition, the company has started an early access program for a third generation of Intel's Movidius visual processing unit, which is designed for computer vision applications.