Forrest Norrod On Why AMD EPYC 'Rome Kicks A**'

‘There's a class of customers for whom I think they're going to really be surprised to see how much this thing kicks a** in Java,’ says AMD Senior Vice President Forrest Norrod.

Fired Up About EPYC 'Rome'

AMD Senior Vice President Forrest Norrod was clearly fired up when he hit the stage at the chipmaker's second-generation EPYC Rome launch event in San Francisco last week.

Norrod, who is also general manager of AMD's Data Center and Embedded Solutions Business Group, kept a calm, respectful executive tone for most of his talk, in which he boasted of the server processors' single- and dual-socket advantages over Intel's Xeon lineup.

But suddenly, toward the end of his presentation, Norrod turned things up to 11 and belted, "Rome kicks a**!"

"I don't normally do that at external events, but that's sort of been my thing for 20 years," he said in an interview with CRN after the presentation. "People were texting me, 'C'mon, Forrest, do 'kick a**' in public!' They never expect it."

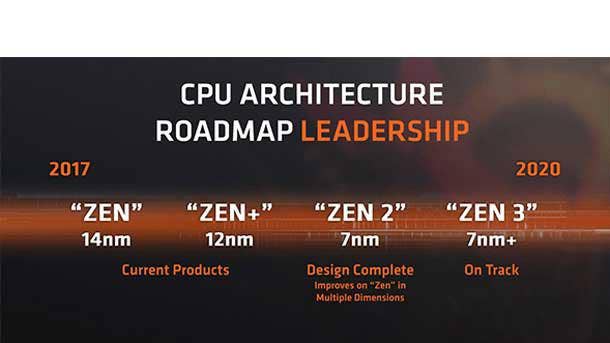

In his interview with CRN, Norrod gave examples of how businesses can benefit from the new EPYC processors' 7-nanometer architecture, an increase in transistor density over the company's 14-nanometer, first-generation EPYC Naples as well as Intel's current 14nm Xeon Cascade Lake and its upcoming Xeon Cooper Lake processors.

"It means, given a certain level of performance, a certain number of transactions per second, a certain number of virtual machines, a certain number of cash registers that you need to support with the central IT system, you can do with fewer servers," he said. "Or with the same amount of money and power, you can add a lot more capacity."

Intel expects to launch its 10-nanometer Xeon processors, code-named Ice Lake, after Xeon Cooper Lake in 2020. The semiconductor giant has previously said its 10nm process technology is comparable to the 7nm technology AMD is using. Intel does have its own 7nm plans, however, with the first products due in 2021.

Here is an edited transcript of CRN's interview with Norrod, who went into detail on AMD's data center strategy and his thoughts on Intel's data-centric strategy.

What is your strategy for winning market share with second-generation EPYC Rome?

I think we're pretty open with it. Look, the fastest adoption is in areas where our advantage is most acute and where the purchase decisions are most influenced by the technical attributes of the product. So I would say that is certainly [high-performance compute], cloud, hosting, any sort of massive scale-out deployment, where it's either for HPC, where the system is a core part of the mission, or it's for hosting or cloud, where the cluster is essentially the factory.

And so those are the areas where we expect to see the most rapid ramp. But Rome is quite broad in its applicability. I want to ramp quickly in those segments with that strategy, and then I want to make sure that we're simultaneously investing such that the longer-to-adopt, but more stable, less lumpy, business builds up quickly over the next several quarters as well.

Scott Aylor, corporate vice president of data center products, was talking about how AMD is trying to get its foot in the door with emerging workloads, and the idea is that you get into those workloads and then, for instance, the hyper-converged infrastructure folks in the same company hear about the processors and that ideally leads to expansion. Is that part of the strategy?

Yeah. By the way, that's a great example. There's a large automotive manufacturer where we got in on the product design side and the modeling side in their HPC cluster, and now we're everywhere, even on Naples [first-generation EPYC]. So I think he's absolutely right: getting in with either an emerging workload or a workload for which you've got the greatest affinity and then work to broaden it out.

Because, particularly, when we get a part like Rome, the thing that will slow us down more than anything else at this point is unfamiliarity: [People who are] concerned about, ‘Oh, it looks great, is it really safe?’ And, ‘This is my IT infrastructure for the company. I want to save money, and I want to run fast, but [running on AMD is an unknown].’ It's a legitimate concern.

If they haven't been buying AMD, it's a legitimate concern. We and our OEMs need to work to overcome it, but it's rational.

What would you consider the least appreciated feature of the new processors?

Well, that's a great question. You challenged me to think out of the box for my answer. I think two things: The [original] Zen [core architecture] wasn't well known for its Java performance, but we really fixed it with Zen 2. There's a class of customers for whom I think they're going to really be surprised to see how much this thing kicks a** in Java. So that would be one.

AMD showed some slides demonstrating the improvement for Java workloads.

That's right. We got a bunch of 68 [percent to] 88 percent improvements in Java. And if you look at the notes, I think we spell it out. Some of those workloads that [AMD CTO] Mark [Papermaster] was talking about, the [instructions per clock] advances, some of the ones on the far right [of the slide] were more dramatic.

The second least appreciated feature?

I think the SEV, Secure Encrypted Virtualization, is going to be huge. It does require the rest of the ecosystem to enable it. It [interfaces with] the hypervisor and the OS and the enterprise key manager, and there's a lot of pieces that have to all be in place to get the full value. But I think that in three to four years, it will be ridiculous to even consider deploying a [virtual machine] in the cloud if you can't control and isolate that thing cryptographically from the cloud provider. I think that your risk management guys [will say], ‘What are you talking about?’

EPYC is the first processor to offer that, and we've been working on the rest of those pieces. VMware's a critical part of that. That's why that part was so important. You're going to be able to have a whole new level of security that you can control independent of your cloud provider.

Can you remind me how it works?

The virtual machine is encrypted. So the virtual machine or container or even a process—actually the way that we've implemented it you can make it any one of those three—and it's independent. It's managed by a separate key manager in our security processor. So the systems administrator for the server does not control that key. The user of that VM running their workloads on Amazon [Web Services] can control that key, so all of their VMs work as normal, work full performance, there's no performance impact, but even if Amazon wanted to, they couldn't look into that virtual machine.

And it's only in Rome?

It's in Naples [first-generation EPYC], but it was limited to two things. It was limited to 16 keys, and then there's a couple of things that VMware asked us to do to change it on Rome. So it's now 509 keys. It's a lot of keys, and there's also a couple changes to make it a little easier for them. They gave us some really good feedback we incorporated into our second generation.

What does 7 nanometer mean in the data center?

It allows you to get up to twice the number of cores, running a higher frequency in the same power envelope. It's pretty much that simple.

Can you break that down on a server level?

It means, given a certain level of performance, a certain number of transactions per second, a certain number of virtual machines, a certain number of cash registers that you need to support with the central IT system, you can do with fewer servers. Or with the same amount of money and power, you can add a lot more capacity, so you can take the same amount of money and support twice as many stores or twice as many cash registers. You can add new features and capabilities for your end customer.
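The trade-off Norrod describes is simple arithmetic, and a minimal sketch can make it concrete. The function name `servers_needed` and every number below are hypothetical, chosen only for illustration. They are not AMD or CRN figures, and the 2x per-server gain is an assumption that loosely echoes his "up to twice the number of cores" remark.

```python
import math


def servers_needed(required_throughput: float, per_server_throughput: float) -> int:
    """Return the smallest whole number of servers that meets the required throughput."""
    if per_server_throughput <= 0:
        raise ValueError("per_server_throughput must be positive")
    return math.ceil(required_throughput / per_server_throughput)


# Hypothetical workload: cash registers the central IT system must support.
required = 10_000
old_per_server = 500    # assumed capacity of one older server
new_per_server = 1_000  # assumed 2x capacity per newer server

# Option 1: same workload, fewer servers.
old_count = servers_needed(required, old_per_server)
new_count = servers_needed(required, new_per_server)

# Option 2: same number of servers, more capacity.
capacity_same_fleet = old_count * new_per_server

print(old_count, new_count, capacity_same_fleet)
```

Under these assumed numbers, the same workload fits on half as many servers, or the same server count supports twice as many registers. Real sizing would also depend on power, memory, licensing and the specific workload.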

Why is it important that AMD can outperform Intel with a single-socket configuration versus your rival's dual-socket configuration?

Because I think the industry is accustomed to dual socket. People know what they know. They know, ‘Hey, I've been buying mainstream servers, dual socket. That's what I should buy. That's what I'm going to buy.’ And even through last generation, with the single-socket server, we could fulfill the performance of the vast majority of the systems sold. With the 32-core first generation, we could deliver that much performance, but still it's new, it's novel, it's not as much performance as this, so I think for me being able to come out and say unequivocally, we have the numbers to back it up.

You're running a workload on an Intel dual-socket server. I don't care what the workload is. I don't care what Intel dual-socket server you're talking about. I can beat that performance with a single-socket Rome, offering much lower power consumption, much lower Capex, better reliability because there's fewer things to fail.

A dual-socket server is not more reliable than a good one-socket server. In fact, it's less reliable. There's no objection. It's anything that [Intel] can do, we can do cheaper, simpler, less power dissipation, much better TCO [total cost of ownership], single-socket server. Mic drop. That's it. There's no objection. And if you do need more performance, great, we got dual socket, too.

That's why dual socket is still important, right?

Certainly, there are a number of workloads and a lot of users that have an insatiable demand for performance. There's never too much performance. If you're Google, you can always use more performance. So having the ability to scale up in performance is hugely important for them, for HPC, for a large number of corporate customers.

But there's a lot of customers that just need a few servers, five, 10, 100 servers to power their enterprise. They can do that better, cheaper, more reliable and with a dramatically lower TCO with single socket. It's a better choice. That's why you see Lenovo, HPE, Dell, they all get it. They're all deploying single-socket configurations as well as Dell and HPE already announcing dual socket.

Was there a reason none of the benchmarks compared second-generation EPYC to Intel's Xeon Platinum 9200 series?

I can't buy one. I tried. I don't mean to oversimplify. I can't get one. We have been trying to buy one for some time, and I have yet to be able to get the [Intel Xeon Platinum] 9282. So there's that aspect.

But secondarily, we think they're almost irrelevant for the general market because they're 400 watts, they're only available as board-level products from Intel, and so if you're an HPE, Dell or Lenovo customer, it's an unfamiliar system. It doesn't have any of the same attributes as the rest of your systems. It's essentially a white box from Intel. So we just think that it's a highly niche product.

The thing that I would point out for anybody that [wants to compare], we published our SPEC_int benchmarks. Guess what? They're higher than the benchmarks that Intel has publicly disclosed for the [Xeon] 9282. They still haven't published a certified set of results, at least as of [Aug. 4], for the 9282. But what they have publicly disclosed as their estimated performance, we exceed.

Intel is heavily leaning into its data-centric platform strategy, where it's no longer just about the CPU, it's about a wider ecosystem of products that include CPUs, Optane memory, accelerators like FPGAs and the Nervana processors, connectivity solutions and software. Does Intel have any advantage over AMD with that strategy?

Intel clearly does have, internally, a larger set of products. No question about it. They have more categories, no question about it. I think there is a very valid question, though, in any of those, do they actually have world-class technology? Are they the best?

So let's take Omni-Path. They have pretty much admitted that Omni-Path is now dead. The fabric, they tried. It looks like, I'm speculating, that they're going to try again with Barefoot Networks. I suspect they're going to emulate something like what Cray did with Slingshot, which is a pretty cool fabric.

But look at their storage technology in the past, their Ethernet technology in the past: This is not new. They have tried to be a systems company or offer systems ingredients and bundle them together for a long time. I would suggest that they have not demonstrated the ability to be world class in all of those elements. And so if you're bundling them together, you're asking the customer to accept the less-than-world-class solution in one or more attributes.

And so our approach is a little bit different. We are not a world-class fabric company. We are not a world-class memory company. We are not a world-class Ethernet company. I'm not pretending to be, but what I am going to do is I'm going to open my ecosystem, and I'm going to work with Mellanox, I'm going to work with Broadcom, I'm going to work with Micron, I'm going to work with Samsung. And we're going to make sure that world-class technology from those companies is extremely well supported on the open EPYC ecosystem. And I think generally that wins.

How did you feel about the internal Intel memo that talked about AMD? Did you read that?

Yeah, I read it.

What did you think? Was it a pretty good analysis?

I don't want to comment on their internal analysis. Let me stress one thing: Intel is a great company. They're a formidable competitor. I do not take them lightly at all, and we should never take them lightly. If my team shows the slightest hint of getting complacent, I quickly disabuse [them] of that notion.

We're in a super tough industry with a super tough competitor. We've got a fantastic product. But we should never, ever be complacent, and we're always going to treat them with the utmost respect. I'm going to put up direct, factual comparisons. I think that's appropriate. But I do not need to slam them in any way. And look, I'm not going to comment on an internal leaked memo just as I would hope they would not comment on anything that leaked out of AMD. That's just not appropriate.