Ian Buck On 5 Big Bets Nvidia Is Making In 2020

'We're reaching a point where supercomputers that are being built now are all going to be AI supercomputers,' Nvidia data center exec Ian Buck tells CRN of the chipmaker's AI ambitions.

Nvidia Has Big Ambitions For 5G, Autonomous Driving, Healthcare

Ian Buck is fired up.

As Nvidia's top data center executive and the creator of the chipmaker's CUDA parallel computing platform, Buck is leading a business that has transformed the way computing infrastructure works. While Nvidia's GPUs continue to dominate gaming and content creation, they have become essential to many high-performance computing and artificial intelligence workloads over the past several years.

"Today, with the advent of AI […] the data centers are changing. They're becoming compute-intensive supercomputers in some cases," Buck, whose title is general manager and vice president of accelerated computing at Nvidia, told CRN in an interview.

The increasing relevance of GPUs within the data center market was demonstrated most recently with Nvidia's announcement on Tuesday that the U.S. Postal Service will use GPU-accelerated servers from Hewlett Packard Enterprise and Dell EMC to enable AI-driven mail sorting.

But Nvidia's data-driven ambitions go beyond just traditional data centers, ranging from edge servers and medical devices to autonomous vehicles and robots.

"I think AI has shown a capability far beyond what traditional algorithms can do, they enable new use cases, new capabilities, whether it be smart cities or conversational AI, but they need compute to process," Buck said. "They also need a new software stack, and that's where all the innovations happen. So it's really fun being in the space, because you're at the center of that redefinition, that innovation."

What follows are five big bets Buck said Nvidia is making for 2020, ranging from new innovations to enable 5G network infrastructure to new software frameworks to support AI in healthcare.

Software-Defined 5G Infrastructure

One area that Nvidia is betting on is 5G, a technology Buck admitted can be subject to a lot of hyperbole.

"It's so exciting that people don't know why it's exciting," he told the audience at Nvidia's GTC conference in Washington, D.C. on Tuesday.

But Buck said 5G will be important, and all the hype shouldn't distract from that. That's because, he said, 5G will improve various workloads, including AI at the edge, thanks to higher bandwidth, significantly lower latency, greater reliability and increased device density.

"Effectively what that means is that promise of IoT can be made real, because I can make my device incredibly cheap and low power," he said. "It doesn't need to have any intelligence. All it needs is a 5G connection, and I can move all its AI, all its processing, anything else, to the edge. As a result, 5G is enabling us to build low-cost, ubiquitous […] IoT devices, whether they be cameras or doorbells or shoelaces, if you will, that are connected and as intelligent as an entire data center."

Nvidia's investments in 5G include Aerial, a new software development kit that can run on the company's EGX edge platform to enable GPU-accelerated, software-defined wireless radio access networks for 5G infrastructure. This will give telecom companies more flexibility in the range of services they offer.

Ericsson is one of Nvidia's early partners in building virtualized 5G radio access networks, which are expected to support new services such as augmented reality, virtual reality and gaming.

"With 5G, as we move all these capabilities to the edge, the edge changes to be much more software defined," Buck said.

AI-Accelerated Healthcare

Another area of focus for Nvidia is healthcare, for which the chipmaker is building out its own software stack to enable GPU-accelerated AI processes for things like medical imaging.

"It's a highly regulated and complicated industry, but the opportunity and frankly the importance of solving those problems is too important [for Nvidia] to not be investing," Buck told CRN.

Medical imaging is one area that can benefit from AI processors, according to Buck, because the number of images processed every day is far too high for the number of radiologists in the U.S.

"We have around 50,000 radiologists in hospitals, but a typical radiology department has to process about 8,000 images every day. So if you do the math, and 50,000 radiologists is a critical shortage, this actually works out about four seconds per image per radiologist, which is a frightening number in terms of you getting a scan," Buck said.

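Buck's four-second figure can be roughly reproduced with a back-of-the-envelope calculation. The sketch below is illustrative only: it assumes, which the talk does not state, that one reader works through a department's 8,000 daily images over a single eight-hour shift. The function name and the shift length are hypothetical.

```python
def seconds_per_image(images_per_day: int, shift_hours: float = 8.0) -> float:
    """Seconds available per image if one reader handles the daily load.

    shift_hours is an assumption made for illustration; the talk gives
    only the images-per-day figure.
    """
    shift_seconds = shift_hours * 3600
    return shift_seconds / images_per_day


# 8,000 images per department per day is the figure Buck cited.
result = seconds_per_image(8_000)
print(f"{result:.1f} seconds per image")
```

Under those assumptions the result is 3.6 seconds per image, in line with the "about four seconds" Buck quoted.
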
Nvidia is tackling AI for healthcare through Clara, a set of tools that lets developers take advantage of the chipmaker's GPU-accelerated computing.

"In the imaging use case, an imaging workflow is actually relatively simple and familiar to many people in AI," Buck said at GTC. "You have unlabeled data, it has annotated data, and we can use the Clara SDK to train a system on the labeled data to learn how to recognize certain things inside of the images."

As part of Clara, Buck said, Nvidia has "13 world-class pre-trained models already designed to extract major organs from medical imaging data," and the toolkit supports a technique called "transfer learning," which reuses what an existing trained model has learned and adapts it to new use cases.

"We want to look for specific kinds of tumors, for example, so we can start from a pre-trained model which already is recognizing organs and train it [to start] looking for cancers," he said.

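The idea behind transfer learning can be shown with a toy example. The sketch below does not use the Clara SDK or any Nvidia API; it is a minimal, pure-Python stand-in in which a small "pre-trained" layer with made-up weights is kept frozen and only a new output layer is trained for a new task. All names, weights and data are hypothetical.

```python
import math

# Stand-in for the body of a pre-trained network. In a real system these
# weights would come from a model already trained on another task (for
# example, recognizing organs); here they are fixed, made-up numbers.
PRETRAINED_WEIGHTS = [[1.0, -1.0], [0.5, 0.5]]


def extract_features(x):
    """Frozen feature extractor: one linear layer followed by tanh."""
    return [math.tanh(sum(w * xi for w, xi in zip(row, x)))
            for row in PRETRAINED_WEIGHTS]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_head(samples, labels, epochs=500, lr=0.5):
    """Fit only a new logistic output layer on top of the frozen features."""
    feats = [extract_features(x) for x in samples]
    weights = [0.0] * len(feats[0])
    bias = 0.0
    for _ in range(epochs):
        for f, y in zip(feats, labels):
            pred = sigmoid(sum(w * fi for w, fi in zip(weights, f)) + bias)
            err = pred - y  # gradient of the log loss with respect to the logit
            weights = [w - lr * err * fi for w, fi in zip(weights, f)]
            bias -= lr * err
    return weights, bias


def predict(x, weights, bias):
    """Return True when the new head assigns the input to class 1."""
    f = extract_features(x)
    return sigmoid(sum(w * fi for w, fi in zip(weights, f)) + bias) >= 0.5


# Toy "new task": the label is 1 when the first input exceeds the second.
samples = [(2.0, 0.0), (1.0, 0.5), (0.0, 2.0), (0.5, 1.0)]
labels = [1, 1, 0, 0]
weights, bias = train_head(samples, labels)
print([int(predict(x, weights, bias)) for x in samples])
```

Because `PRETRAINED_WEIGHTS` is never updated, only the small new layer has to be learned, which is why starting from a pre-trained model typically needs less labeled data than training from scratch.
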
Autonomous Vehicles

Autonomous driving is undoubtedly one of the flashiest technologies Nvidia has gotten behind, even as self-driving vehicles are taking longer to hit the market than expected.

"I know it's a hot topic. It's actually one of the hardest, which is why we don't all have self-driving cars today, because it's incredibly complicated," Buck said in his GTC talk.

Nvidia has its own fleet of self-driving vehicles that the company uses to collect data and test its own capabilities, which include the company's Drive platform, according to Buck.

"It's basically an AI supercomputer inside every autonomous car. Every neural network and Drive stack is handling thousands of conditions," he said. "It's gathering, processing hundreds of petabytes of data and the whole thing has to be logged for traceability and safety."

An important aspect of developing autonomous vehicle systems is the training of deep learning models, which can be done with the company's DGX server platform.

"We have literally thousands of GPUs doing training every day," Buck said.

The company also has its own platform for real-world simulations, called Drive Constellation, which can simulate a self-driving vehicle's sensors and test them in a variety of conditions, including snowstorms.

"We actually run many millions of miles in simulation to test the vehicles," Buck said.

Autonomous Machines

As the chipmaker continues to drive major investments into its autonomous vehicle capabilities, Nvidia is also betting big on autonomous machines, specifically robots.

"The future of robotics is all about interaction and working with the real world," Buck told the audience during his talk at the GTC conference.

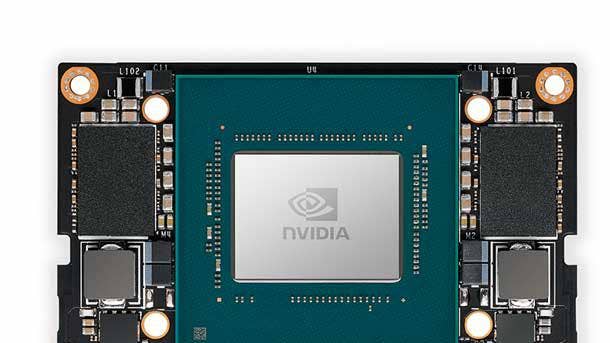

The company is enabling new intelligent capabilities in robots through its Jetson AI platform, which consists of a lineup of system-on-module boards that include a CPU, GPU and other silicon needed for high performance in a variety of form factors.

Most recently, Nvidia introduced the Jetson Xavier NX, which the company calls the "world's smallest supercomputer" for AI applications at the edge. The computing board, which is roughly the size of a credit card, packs 384 CUDA cores and 48 Tensor Cores to deliver up to 21 tera operations per second, or TOPS, for a variety of devices constrained by size, weight and power, among other things.

"It's a [system-on-chip] that has the same GPU architecture that's in the big GPUs in [Nvidia's DGX deep learning servers]," Buck said.

Autonomous machines can benefit a wide variety of industries and use cases, ranging from space exploration to retail. For instance, a robot could take over a human employee's time-intensive job of scanning inventory at a store while also aiding customers when needed.

"They need to be able to work autonomously in dynamic environments," Buck said.

On the software side, Nvidia provides a software development kit called Isaac, which is meant to accelerate the development of AI-powered robots. One of the key features is the ability to run robots through simulated environments to ensure that they'll work properly in the real world.

"I can emulate the sensors and the physics so the first time you actually run the robot, they can work the first time out of the gate in the real world because you trained it on thousands and millions of hours in the simulator world with the Isaac SDK," Buck said.

AI Supercomputers

What encapsulates many of Nvidia's AI efforts is the work the chipmaker is doing in high-performance computing, where the company hopes to turn HPC systems into "AI supercomputers."

"We're reaching a point where supercomputers that are being built now are all going to be AI supercomputers," Buck told CRN, pointing to the U.S. government taking a leadership role on the convergence of HPC and AI as an important sign of progress.

One of the core ingredients for these efforts is Nvidia's Tesla V100 data center GPU, which can deliver 100 teraFLOPS, or 100 trillion floating-point operations per second, in deep learning performance.

Buck said 27,000 of Nvidia's V100 GPUs are inside Oak Ridge National Laboratory's Summit supercomputer in Tennessee, where scientists can use deep learning to identify extreme weather patterns from high-resolution climate simulations.

"That's only one example of many places where AI's going to accelerate scientific discovery, whatever that might be," he said. "So that's the exciting part: getting this technology in the hands of researchers who can turn around and do something that no one ever thought was possible."

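For a sense of scale, the round figures quoted above can be multiplied out. The sketch below is a nominal estimate only: it uses the article's per-GPU figure of 100 teraFLOPS and Buck's count of 27,000 GPUs, and it ignores scaling losses, so it should not be read as a measured result. The function and constant names are illustrative.

```python
V100_DL_TFLOPS = 100  # per-GPU deep learning figure cited in the article


def aggregate_petaflops(gpu_count: int, tflops_per_gpu: float = V100_DL_TFLOPS) -> float:
    """Nominal peak: GPU count times the per-GPU figure, in petaFLOPS.

    This ignores interconnect and scaling overheads, so real workloads
    would see less.
    """
    return gpu_count * tflops_per_gpu / 1000


summit_pflops = aggregate_petaflops(27_000)
print(f"Summit (nominal): {summit_pflops:,.0f} petaFLOPS")
```

By that rough measure, Summit's GPUs add up to about 2,700 petaFLOPS, or 2.7 exaFLOPS, of nominal deep learning throughput. That is a different yardstick from the double-precision Linpack benchmark used to rank systems on the Top 500.
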
Thanks to Nvidia's high-performance AI efforts, the company has become increasingly prominent in the Top 500 list of the world's most powerful supercomputers. For instance, the company's DGX SuperPOD, which consists of 96 DGX-2H systems and 1,536 V100 GPUs, ranks at No. 22 on the Top 500, just above the National Center for High Performance Computing in Taiwan.

"This network actually can train the ResNet-50 in about 80 seconds, which is ridiculously fast," Buck said in his GTC talk, referring to the well-known deep neural network.