Nvidia GTC Fall 2021: 8 Big Announcements For AI, Security And More

CRN dives into significant announcements Nvidia made around AI, security, networking, 3-D design and more at its fall GTC 2021 conference. ‘As we accelerate more applications, our network of partners experience growing demand for Nvidia platforms,’ CEO Jensen Huang says.

The Full-Stack Computing March Continues

Nvidia unleashed a smorgasbord of new hardware and software technologies at its fall GTC 2021 conference, giving enterprises everything from low-power inference GPUs and tiny supercomputers to high-speed networking and a collaboration platform for 3-D design.

Jensen Huang, Nvidia’s CEO and co-founder, continued to push the Santa Clara, Calif.-based chipmaker as a “full-stack computing company” that provides the silicon, systems and software for fast-growing and critical applications ranging from threat detection to digital twins.

“Starting from computer graphics, the reach of our architecture has reached deep into the world’s largest industries,” he said in his fall GTC 2021 keynote Tuesday. “We start with amazing chips, but for each field of science, industry, and application, we create a full stack.”

Huang said the company now has more than 150 software development kits that serve everything from 5G and robotics to quantum computing and earth sciences. This is creating more opportunities for the chipmaker’s channel partners, he added.

“As we accelerate more applications, our network of partners experience growing demand for Nvidia platforms,” Huang said.

What follows is a roundup of eight significant announcements Nvidia made at the fall GTC 2021 conference that touch upon AI, security, networking, 3-D design and more.

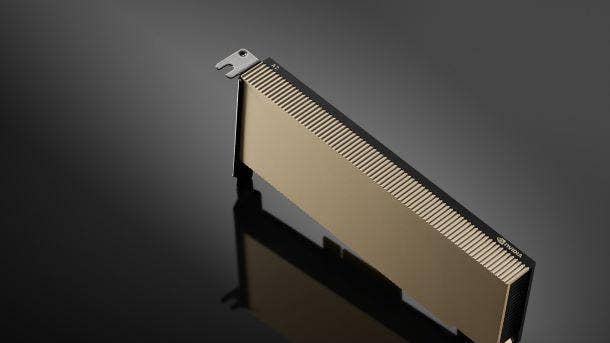

Nvidia Launches A2 Tensor Core GPU For Low-Power Inference

Nvidia expanded its portfolio of Ampere data center GPUs with A2, a “low-power, small-footprint accelerator for AI at the edge” that the company says can deliver up to 20 times faster inference performance than CPUs.

The A2 GPU comes on a single-slot, low-profile PCIe 4.0 card with 10 RT Cores, 16GB of GDDR6 memory and a maximum thermal design power of 40 to 60 watts. Nvidia said it has a peak FP32 performance of 4.5 teraflops. With Ampere’s sparsity feature enabled, the A2 offers peak TF32 performance of 18 teraflops, peak BFLOAT16 and FP16 performance of 36 teraflops, peak INT8 performance of 72 TOPS (trillion operations per second) and peak INT4 performance of 144 TOPS.
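The sparse peak figures also imply the dense ones. The sketch below is a minimal illustration, assuming (as Nvidia describes Ampere's 2:4 structured sparsity) that enabling sparsity at most doubles peak math throughput; the dictionary and function names are hypothetical.

```python
# A2 peak throughput as quoted by Nvidia, all with Ampere's structured
# sparsity enabled. Units: TFLOPS for floating-point formats, TOPS
# (trillion operations per second) for integer formats.
SPARSE_PEAK = {
    "TF32": 18.0,
    "BF16/FP16": 36.0,
    "INT8": 72.0,
    "INT4": 144.0,
}

# Assumption: structured sparsity at most doubles peak math throughput,
# so the dense (no-sparsity) peak is half of each quoted figure.
SPARSITY_SPEEDUP = 2.0


def dense_peak(fmt: str) -> float:
    """Return the implied dense peak for one number format."""
    return SPARSE_PEAK[fmt] / SPARSITY_SPEEDUP


if __name__ == "__main__":
    for fmt, sparse in SPARSE_PEAK.items():
        print(f"{fmt:>9}: sparse {sparse:6.1f}, dense {dense_peak(fmt):6.1f}")
```

Under that assumption the dense peaks are 9, 18, 36 and 72 for TF32, BF16/FP16, INT8 and INT4. Each step down in precision in the quoted figures doubles the peak, which is why INT4 reaches eight times the TF32 number.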

Compared to Intel’s Xeon Gold 6330N CPU, the A2 GPU is 20 times faster for text-to-speech with the Tacotron2 and Waveglow models, seven times faster for natural language processing with the BERT-Large model and eight times faster for computer vision with the EfficientDet-D0 model, according to Nvidia. The company said the A2 is also 20 percent faster on the ShuffleNet v2 model and 30 percent faster on the MobileNet v2 model compared to its previous-generation T4 GPU.

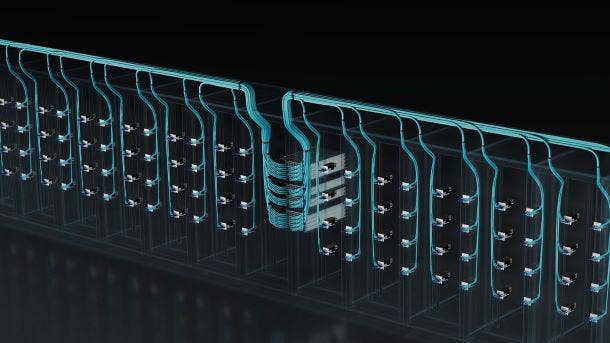

Nvidia Launches Quantum-2 InfiniBand Networking Platform

Nvidia is giving cloud service providers and high-performance computing organizations a big networking boost with Nvidia Quantum-2, the next generation of its InfiniBand networking platform.

The company said the 400Gbps networking platform provides “extreme performance, broad accessibility and strong security” by combining the Nvidia Quantum-2 switch, the ConnectX-7 network adapter, the BlueField-3 data processing unit and underlying software.

At 400 gigabits per second, Quantum-2 doubles the network speed and triples the number of network ports compared with the previous generation, according to Nvidia. “It accelerates performance by 3x and reduces the need for data center fabric switches by 6x, while cutting data center power consumption and reducing data center space by 7 percent each,” the company added.
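The quoted per-port rate and multipliers translate into simple capacity arithmetic. This is a minimal sketch, assuming aggregate switch capacity scales linearly with per-port speed and port count; the 64-port switch is an illustrative value, not a figure from this article, and the function names are hypothetical.

```python
PORT_RATE_GBPS = 400  # Quantum-2 per-port rate quoted by Nvidia


def gbps_to_gigabytes_per_s(gbps: float) -> float:
    """Convert gigabits per second to gigabytes per second (8 bits per byte)."""
    return gbps / 8


def switch_capacity_gbps(ports: int, rate_gbps: float, bidirectional: bool = True) -> float:
    """Aggregate switching capacity: ports x rate, counted in both directions if requested."""
    one_way = ports * rate_gbps
    return 2 * one_way if bidirectional else one_way


def relative_capacity(speed_multiplier: float, port_multiplier: float) -> float:
    """Capacity relative to a baseline switch, assuming linear scaling
    with both per-port speed and port count."""
    return speed_multiplier * port_multiplier


if __name__ == "__main__":
    print(gbps_to_gigabytes_per_s(PORT_RATE_GBPS))  # 50.0 GB/s per port
    print(switch_capacity_gbps(64, PORT_RATE_GBPS))  # hypothetical 64-port switch
    print(relative_capacity(2, 3))                   # 2x speed combined with 3x ports
```

Under the linear-scaling assumption, doubling speed and tripling ports compound to six times the per-switch capacity.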

Quantum-2 is now available from several infrastructure and system vendors, including Atos, Dell Technologies, Gigabyte, Hewlett Packard Enterprise, IBM, Lenovo, Penguin Computing and Supermicro.

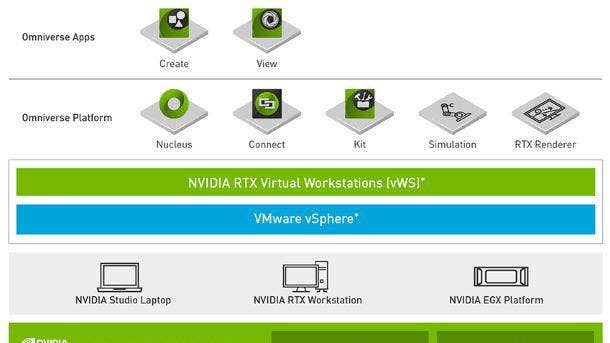

Nvidia Makes Omniverse Enterprise Generally Available

Nvidia expanded its suite of paid enterprise software with the general availability of Omniverse Enterprise, a collaboration platform that allows 3-D design teams to work together on the same files in real time across multiple software suites on any device.

Omniverse Enterprise is the enterprise version of Nvidia Omniverse, which is free for individuals, and it consists of multiple components. For end users, there’s Omniverse Create, which lets users assemble, light, simulate and render scenes using Pixar’s Universal Scene Description framework; and Omniverse View, which lets users review 3-D design projects. The back end for Omniverse Enterprise consists of the Omniverse Nucleus server, which manages a database shared among clients; and Omniverse Connectors, which provide plugins that link design applications to Omniverse.

Partners selling Omniverse Enterprise include BOXX Technologies, Dell Technologies, HP Inc., Lenovo and Supermicro as well as Arrow, Carahsoft Technology Corp and PNY.

Omniverse Enterprise consists of three subscriptions. The first, to run the Omniverse Nucleus server, costs $1,000 per named user annually, which covers the software, enterprise support and Nucleus Workstation. The second, for creators, costs $2,000 per floating user license annually, and it includes Omniverse Create, Omniverse Kit, Omniverse Extensions, batch microservices for up to 64 GPUs and enterprise support. The third, for reviewers, costs $100 per floating user license annually, and it comes with Omniverse View and enterprise support.

A subscription to Omniverse Nucleus server is required for collaboration in Omniverse Create and for editing and commenting in Omniverse View.
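The three subscriptions make budgeting a matter of multiplying seat counts by list prices. A minimal sketch, assuming the quoted annual prices apply per seat with no volume discounts; the team sizes and function names are hypothetical.

```python
# Annual list prices quoted for the three Omniverse Enterprise subscriptions (USD).
NUCLEUS_PER_NAMED_USER = 1_000
CREATOR_PER_FLOATING_LICENSE = 2_000
REVIEWER_PER_FLOATING_LICENSE = 100


def annual_cost(nucleus_users: int, creator_licenses: int, reviewer_licenses: int) -> int:
    """Total yearly subscription cost in US dollars (assumes flat per-seat pricing)."""
    if min(nucleus_users, creator_licenses, reviewer_licenses) < 0:
        raise ValueError("seat counts must be non-negative")
    return (
        nucleus_users * NUCLEUS_PER_NAMED_USER
        + creator_licenses * CREATOR_PER_FLOATING_LICENSE
        + reviewer_licenses * REVIEWER_PER_FLOATING_LICENSE
    )


def can_collaborate(nucleus_users: int) -> bool:
    """Collaboration in Create, and editing/commenting in View, need a Nucleus subscription."""
    return nucleus_users > 0


if __name__ == "__main__":
    # Hypothetical studio: 10 Nucleus users, 5 creator seats, 20 reviewer seats.
    print(annual_cost(10, 5, 20))  # 10,000 + 10,000 + 2,000
    print(can_collaborate(10))
```

For that hypothetical studio the total comes to $22,000 a year, with the reviewer seats contributing the smallest share.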

Nvidia Updates Triton Inference Server With New Performance Optimizer

Nvidia announced major updates to its free Triton Inference Server software, which more than 25,000 customers use to simplify running inference applications at scale.

Triton is also included in Nvidia AI Enterprise, the company’s paid software suite. The new features include a model analyzer that automatically selects the configuration offering the best performance and total cost of ownership for running a given AI model. Triton can also now run large Transformer-based language models across multiple GPUs.
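Automatic configuration selection boils down to measuring candidate configurations and picking the best one that meets a service-level target. The sketch below illustrates only that selection step under stated assumptions: the measurements are made up, the selection rule (highest throughput within a p99 latency budget, ties broken toward fewer instances) is one reasonable choice, and none of it is Triton's Model Analyzer or its configuration format.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    """One hypothetical model configuration and its measured results."""
    max_batch_size: int
    instance_count: int      # model copies loaded on the GPU
    throughput_ips: float    # inferences per second
    p99_latency_ms: float    # 99th-percentile latency


def pick_best(candidates: list[Candidate], latency_budget_ms: float) -> Candidate | None:
    """Return the highest-throughput candidate within the latency budget.

    Ties are broken in favor of fewer instances, which use less GPU memory.
    """
    feasible = [c for c in candidates if c.p99_latency_ms <= latency_budget_ms]
    if not feasible:
        return None
    return max(feasible, key=lambda c: (c.throughput_ips, -c.instance_count))


# Made-up measurements for illustration only.
MEASURED = [
    Candidate(max_batch_size=1, instance_count=1, throughput_ips=800, p99_latency_ms=4.0),
    Candidate(max_batch_size=8, instance_count=1, throughput_ips=2400, p99_latency_ms=9.5),
    Candidate(max_batch_size=8, instance_count=2, throughput_ips=3100, p99_latency_ms=14.0),
    Candidate(max_batch_size=32, instance_count=2, throughput_ips=4200, p99_latency_ms=31.0),
]

if __name__ == "__main__":
    print(pick_best(MEASURED, latency_budget_ms=15.0))
```

With a 15 ms budget the batch-32 configuration is excluded despite its higher throughput, which is the kind of trade-off an automated analyzer is meant to surface.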

Other new features include support for Arm CPUs and RAPIDS FIL, a new backend for GPU or CPU inference of random forest and gradient-boosted decision tree models. Triton also now supports TensorFlow and PyTorch and has new integrations with Amazon SageMaker and Alibaba Cloud.
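Inference for tree-ensemble models like those FIL serves means walking each tree from its root to a leaf and then combining the leaf outputs. The sketch below is a generic, single-threaded illustration with hypothetical names; it is not the FIL backend's API or its memory layout, and the example trees are made up.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Node:
    """A decision-tree node stored in a flat list; feature == -1 marks a leaf."""
    feature: int = -1
    threshold: float = 0.0
    left: int = -1      # index of child taken when x[feature] < threshold
    right: int = -1     # index of child taken otherwise
    value: float = 0.0  # output of a leaf node


def predict_tree(nodes: list[Node], x: list[float]) -> float:
    """Walk from the root (index 0) to a leaf and return the leaf value."""
    i = 0
    while nodes[i].feature != -1:
        node = nodes[i]
        i = node.left if x[node.feature] < node.threshold else node.right
    return nodes[i].value


def predict_forest(trees: list[list[Node]], x: list[float], average: bool = True) -> float:
    """Combine per-tree outputs: average them (random-forest style) or sum them
    (gradient-boosting style, with any base score omitted here)."""
    outputs = [predict_tree(t, x) for t in trees]
    return sum(outputs) / len(outputs) if average else sum(outputs)


if __name__ == "__main__":
    tree_a = [Node(feature=0, threshold=0.5, left=1, right=2), Node(value=1.0), Node(value=3.0)]
    tree_b = [Node(feature=1, threshold=2.0, left=1, right=2), Node(value=0.0), Node(value=2.0)]
    print(predict_forest([tree_a, tree_b], [0.7, 1.5]))                 # mean of 3.0 and 0.0
    print(predict_forest([tree_a, tree_b], [0.7, 1.5], average=False))  # sum of 3.0 and 0.0
```

Because every tree and every input row can be evaluated independently, this workload parallelizes naturally, which is what makes GPU inference for it attractive.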

The company also announced updates to Nvidia TensorRT, which include new integrations with the TensorFlow and PyTorch frameworks.

Nvidia Launches Zero-Trust Cybersecurity Platform

Nvidia expanded its cybersecurity offerings with a new platform that gives developers a way to deliver zero-trust server solutions that are “up to 600 times faster than servers without Nvidia acceleration.”

The new zero-trust platform combines the company’s BlueField data processing units (DPUs), the DOCA software development kit and the Morpheus cybersecurity AI framework. With DOCA, developers can use Nvidia’s DPUs to offload, accelerate and isolate data center infrastructure tasks, including security applications.

Nvidia’s Morpheus framework detects threats by monitoring telemetry from various sources, including GPUs, network traffic, application logs and cloud logs. The latest version of the framework includes a “new workflow that creates digital fingerprints using unsupervised learning to detect when cyber adversaries have taken over a user account or machine,” Nvidia said.
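The idea behind per-account digital fingerprinting is to learn what normal behavior looks like for each user without labeled attacks, then flag events that deviate sharply from that baseline. The sketch below is a deliberately simplified statistical stand-in (per-feature z-scores) for the learned model Nvidia describes; it is not Morpheus's model or API, and the features, history and threshold are hypothetical.

```python
from __future__ import annotations

from statistics import mean, pstdev


def fit_fingerprint(history: list[list[float]]) -> list[tuple[float, float]]:
    """Per-feature (mean, population std dev) over one account's past events."""
    return [(mean(col), pstdev(col)) for col in zip(*history)]


def anomaly_score(fingerprint: list[tuple[float, float]], event: list[float],
                  eps: float = 1e-9) -> float:
    """Largest absolute z-score of the new event across all features."""
    return max(abs(v - mu) / (sd + eps) for v, (mu, sd) in zip(event, fingerprint))


def is_suspicious(fingerprint: list[tuple[float, float]], event: list[float],
                  threshold: float = 3.0) -> bool:
    """Flag an event whose most unusual feature exceeds the z-score threshold."""
    return anomaly_score(fingerprint, event) > threshold


# Hypothetical features per event: [login_hour, megabytes_downloaded, distinct_hosts].
HISTORY = [[9, 120, 3], [10, 100, 4], [9, 140, 3], [11, 110, 5], [10, 130, 4]]

if __name__ == "__main__":
    fp = fit_fingerprint(HISTORY)
    print(is_suspicious(fp, [10, 125, 4]))  # looks like this user's normal activity
    print(is_suspicious(fp, [3, 900, 40]))  # 3 a.m. bulk download across many hosts
```

Fitting one baseline per account, rather than one global model, is what lets a behavior that is routine for one user stand out as anomalous for another.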

Juniper Networks is among the first security vendors to take advantage of BlueField DPUs and DOCA. Servers with DPUs are coming to the market soon from ASUS, Atos, Cisco, Dell Technologies, Gigabyte, Lenovo and Supermicro, according to Nvidia. Infrastructure partners supporting GPUs include Canonical, Red Hat and VMware. Independent software partners include Check Point Software, Cloudflare, Fortinet, NetApp, VAST Data and Nutanix.

Nvidia Gives Enterprises Virtual Agents, Custom Voice Software, Large Language Models

With Nvidia’s ever-increasing focus on enterprise customers, the company unleashed new solutions for developing virtual agents, custom AI voices and large language models.

The virtual agent solution is Nvidia Omniverse Avatar, an expansion of the company’s Omniverse software portfolio that brings together its technologies for speech AI, computer vision, natural language understanding, recommendation engines and simulation. Combined, Nvidia said, these technologies can create “interactive characters with ray-traced 3D graphics that can see, speak, converse on a wide range of subjects, and understand naturally spoken intent.”

Omniverse Avatar’s speech AI capabilities come from Nvidia Riva, a now-available software development kit that lets enterprises create custom voices in a matter of hours. Omniverse Avatar also takes advantage of the Megatron 530B large language model, which enterprises can now customize and use alongside the new Nvidia NeMo Megatron framework for training large language models.
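The size of Megatron 530B also helps explain why running large language models across multiple GPUs matters. A minimal back-of-the-envelope sketch, assuming standard bytes per parameter for each number format and an 80 GB GPU as an illustrative memory size; it counts weights only and ignores activations, caches and any optimizer state, so real deployments need more.

```python
import math

PARAMS = 530_000_000_000  # Megatron 530B parameter count
BYTES_PER_PARAM = {"FP32": 4, "FP16/BF16": 2, "INT8": 1}


def weight_memory_gb(params: int, bytes_per_param: int) -> float:
    """Memory needed just to hold the weights, in decimal gigabytes."""
    return params * bytes_per_param / 1e9


def min_gpus_for_weights(params: int, bytes_per_param: int, gpu_memory_gb: float) -> int:
    """Lower bound on GPU count: weights only, ignoring activations and caches."""
    return math.ceil(weight_memory_gb(params, bytes_per_param) / gpu_memory_gb)


if __name__ == "__main__":
    for fmt, nbytes in BYTES_PER_PARAM.items():
        print(fmt, weight_memory_gb(PARAMS, nbytes), "GB,",
              min_gpus_for_weights(PARAMS, nbytes, 80.0), "x 80 GB GPUs")
```

In 16-bit precision the weights alone occupy about 1,060 GB, so even before any working memory the model cannot fit on fewer than 14 GPUs of that size.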

Nvidia did not say when it will launch Omniverse Avatar, but it said the Nvidia Riva Enterprise program will be available next year and that enterprises can sign up now for early access to the new Nvidia NeMo Megatron framework. Enterprises can also develop and deploy large language models through the Nvidia LaunchPad program, the company added.

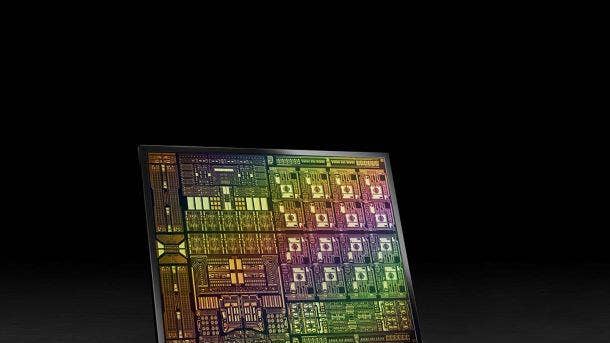

Nvidia Reveals Jetson AGX Orin Chip, A Tiny, ‘Powerful’ Supercomputer

Nvidia revealed the next generation of its Jetson AGX chip, which it called the “world’s smallest, most powerful and energy-efficient AI supercomputer” for edge AI and autonomous machine applications.

The company said the Nvidia Jetson AGX Orin is six times more powerful than the previous-generation Jetson AGX Xavier while keeping the same handheld form factor and pin compatibility. The system-on-chip can perform 200 trillion operations per second (TOPS), a level of performance Nvidia said is “similar to that of a GPU-enabled server” that can fit in the “palm of your hand.”
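The "six times" claim can be checked against the two chips' peak ratings. A minimal sketch, assuming Nvidia's published 32 TOPS peak for Jetson AGX Xavier (a figure not stated in this article) and a hypothetical 30 frames-per-second camera pipeline for the per-frame budget; both are peak numbers, not measured throughput.

```python
ORIN_TOPS = 200   # Jetson AGX Orin peak, as quoted by Nvidia
XAVIER_TOPS = 32  # assumption: Nvidia's published Jetson AGX Xavier peak


def speedup(new_tops: float, old_tops: float) -> float:
    """Ratio of peak ratings between two generations."""
    return new_tops / old_tops


def ops_per_frame(tops: float, fps: float) -> float:
    """Peak operations available per frame at a given frame rate."""
    return tops * 1e12 / fps


if __name__ == "__main__":
    print(speedup(ORIN_TOPS, XAVIER_TOPS))  # in line with the "six times" claim
    print(ops_per_frame(ORIN_TOPS, 30))     # hypothetical 30 fps camera pipeline
```

The ratio works out to 6.25, so "six times" is a rounded figure; real workloads will see a smaller per-frame budget than this peak-based estimate.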

Jetson AGX Orin features Nvidia’s Ampere GPU architecture and Arm Cortex-A78AE CPUs along with next-generation deep learning and vision accelerators. Developers can take advantage of the hardware using the CUDA-X accelerated computing stack and the JetPack software development kit.

The Jetson AGX Orin module and development kit will be available in the first quarter of 2022.

More Ways To Connect The Physical And Virtual Worlds

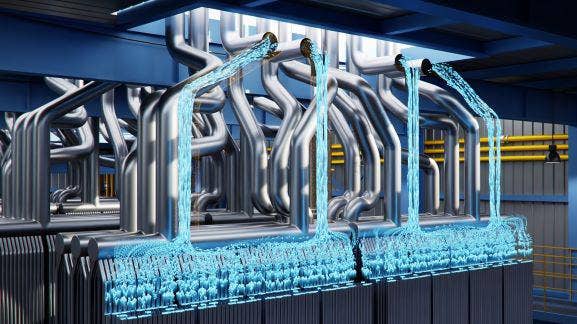

Nvidia revealed even more ways to connect the physical and virtual worlds with Nvidia Omniverse Replicator, which creates “physically simulated synthetic data” for the training of deep neural networks in simulations that are used for real-world applications like autonomous vehicles.

The company said the first two applications to benefit from Omniverse Replicator will be the Nvidia DRIVE Sim digital twin software for autonomous vehicles and the Nvidia Isaac Sim digital twin software for manipulation robots. The goal is to help developers “create diverse, massive, accurate datasets to build high-quality, high-performing and safe datasets, which is essential for AI,” said Rev Lebaredian, Nvidia’s vice president of simulation technology and Omniverse engineering.
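Synthetic data generators commonly rely on domain randomization: varying lighting, camera placement, object counts and conditions so a model sees more variety than real-world captures provide. The sketch below shows only that sampling step with hypothetical parameters and ranges; it is not the Omniverse Replicator API, and a real pipeline would render each scene and emit ground-truth labels alongside it.

```python
from __future__ import annotations

import random
from dataclasses import dataclass

WEATHER = ("clear", "rain", "fog", "night")


@dataclass(frozen=True)
class SceneParams:
    """Hypothetical knobs a simulator might randomize for each rendered frame."""
    light_intensity: float  # arbitrary units
    camera_height_m: float
    object_count: int
    weather: str


def sample_scene(rng: random.Random) -> SceneParams:
    """Draw one randomized scene description."""
    return SceneParams(
        light_intensity=rng.uniform(200.0, 2000.0),
        camera_height_m=rng.uniform(1.2, 2.0),
        object_count=rng.randint(1, 25),
        weather=rng.choice(WEATHER),
    )


def generate_scene_specs(n: int, seed: int = 0) -> list[SceneParams]:
    """Seeded so the same dataset specification can be regenerated exactly."""
    rng = random.Random(seed)
    return [sample_scene(rng) for _ in range(n)]


if __name__ == "__main__":
    for spec in generate_scene_specs(3):
        print(spec)
```

Seeding a dedicated `random.Random` instance, rather than the global generator, keeps each dataset reproducible even when other code also draws random numbers.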

The company also revealed Nvidia Modulus, a framework for building machine learning models that understand physics for digital twin applications, which can benefit use cases ranging from protein engineering to climate science. Nvidia said Modulus gives scientists “a framework to build highly accurate digital reproductions of complex and dynamic systems that will enable the next generation of breakthroughs across a vast range of industries.”
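Physics-ML frameworks such as Modulus commonly train with a physics-informed loss: besides fitting data, the model is penalized wherever it violates a governing equation. The sketch below computes only that residual term, using exponential decay (dy/dt = -ky) as a stand-in equation and plain Python functions in place of a neural network; it does not use the Modulus API, and the function name and collocation points are hypothetical.

```python
import math
from typing import Callable, Sequence


def physics_residual_loss(
    f: Callable[[float], float],
    k: float,
    t_points: Sequence[float],
    h: float = 1e-4,
) -> float:
    """Mean squared residual of the ODE dy/dt + k*y = 0 at collocation points.

    The derivative is estimated with a central finite difference; a neural
    network framework would typically use automatic differentiation instead.
    """
    total = 0.0
    for t in t_points:
        dydt = (f(t + h) - f(t - h)) / (2 * h)
        residual = dydt + k * f(t)
        total += residual * residual
    return total / len(t_points)


if __name__ == "__main__":
    k = 0.5
    ts = [0.1 * i for i in range(50)]

    def exact(t: float) -> float:
        return math.exp(-k * t)  # true solution: residual near zero

    def linear(t: float) -> float:
        return 1.0 - k * t  # wrong guess: residual grows with t

    print(physics_residual_loss(exact, k, ts))
    print(physics_residual_loss(linear, k, ts))
```

During training, a term like this is added to the ordinary data-fitting loss, so the optimizer is pushed toward functions that both match observations and obey the physics.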