Nvidia's 5 Biggest GTC 2020 Announcements: From A100 To SmartNICs

The chipmaker reveals the new 7-nanometer Ampere GPU architecture as well as multiple products using the architecture, including the new A100, which Nvidia says will dramatically disrupt the economics of data center infrastructure for artificial intelligence.

New Architecture, New GPU, New Systems

Despite having to hold the GPU Technology Conference as a virtual event, Nvidia pulled out all the stops and revealed a new GPU architecture, a new data center GPU and other new products that the chipmaker believes will have a dramatic impact on the data center market.

The Santa Clara, Calif.-based company's GTC 2020 announcements largely stemmed from its new 7-nanometer Ampere GPU architecture, on which the chipmaker built its latest GPU for the artificial intelligence acceleration market, the A100.


"The powerful trends of cloud computing and AI are driving a tectonic shift in data center designs so that what was once a sea of CPU-only servers is now GPU-accelerated computing," Jensen Huang, founder and CEO of NVIDIA, said in a statement. "NVIDIA A100 GPU is a 20X AI performance leap and an end-to-end machine learning accelerator – from data analytics to training to inference. For the first time, scale-up and scale-out workloads can be accelerated on one platform. NVIDIA A100 will simultaneously boost throughput and drive down the cost of data centers."

What follows are the five biggest announcements Nvidia made during its GTC 2020 keynote, ranging from the A100 to the new DGX A100 system and a new Mellanox SmartNIC.

New Nvidia Ampere Architecture, A100 GPU

The biggest announcement during Nvidia's GTC keynote was the reveal of the company's 7-nanometer Ampere GPU architecture and the first GPU to use the architecture, the Nvidia A100, which the company said is in full production and shipping now.

Nvidia called the A100, which packs more than 54 billion transistors, "the world's largest 7-nanometer processor" and said it delivers the largest generational leap in artificial intelligence performance, promising a 20-fold increase in horsepower over Nvidia's Volta V100 GPU.

Unlike Nvidia's previous V100 and T4 GPUs, which were designed for training and inference, respectively, the A100 was designed to unify training and inference performance. This breakthrough, combined with the ability to split the GPU into seven separate instances and to link multiple A100s with third-generation NVLink so they act as one large GPU, will "simultaneously boost throughput and drive down the cost of data centers," according to Nvidia CEO Jensen Huang.

The A100's other features include Nvidia's third-generation Tensor Cores, which use the new TF32 format for AI to deliver up to 20 times faster peak single-precision (FP32) performance than the V100. For the first time, the Tensor Cores also support double-precision (FP64) math, providing 2.5 times faster FP64 performance than the V100, according to Nvidia. The A100 also uses a new efficiency technique called structural sparsity, which takes advantage of the sparse nature of AI math to double performance.
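To make the two numeric tricks above more concrete, here is a minimal Python sketch. TF32 keeps FP32's sign bit and 8 exponent bits but only 10 mantissa bits, so `to_tf32` emulates it by zeroing the low 13 mantissa bits of a float32; this is plain truncation, a simplification, and the hardware's actual rounding may differ. Nvidia has described Ampere's structural sparsity as a 2:4 pattern (two nonzero values in every group of four), and `prune_2_of_4` enforces that pattern by keeping the two largest-magnitude weights per group. The magnitude criterion and both function names are illustrative assumptions, not Nvidia's tooling.

```python
import struct


def to_tf32(x: float) -> float:
    """Emulate TF32 precision: keep the float32 sign, 8 exponent bits and the
    top 10 of 23 mantissa bits, zeroing the remaining 13 (truncation only)."""
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    bits &= 0xFFFFE000  # clear the 13 least-significant mantissa bits
    return struct.unpack("<f", struct.pack("<I", bits))[0]


def prune_2_of_4(weights: list[float]) -> list[float]:
    """Impose a 2:4 sparsity pattern: in each group of four consecutive
    weights, keep the two with the largest magnitude and zero the others."""
    if len(weights) % 4 != 0:
        raise ValueError("length must be a multiple of 4")
    out: list[float] = []
    for i in range(0, len(weights), 4):
        group = weights[i:i + 4]
        # Indices of the two largest-magnitude entries in this group.
        keep = sorted(range(4), key=lambda j: abs(group[j]), reverse=True)[:2]
        out.extend(w if j in keep else 0.0 for j, w in enumerate(group))
    return out


print(to_tf32(1.0 + 2**-11))               # finer than TF32 can hold, truncates to 1.0
print(prune_2_of_4([0.1, -0.9, 0.5, 0.2]))  # [0.0, -0.9, 0.5, 0.0]
```

Because exactly half the values in every group are zero, sparse-aware hardware can skip them, which is the basis for the "double performance" claim.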

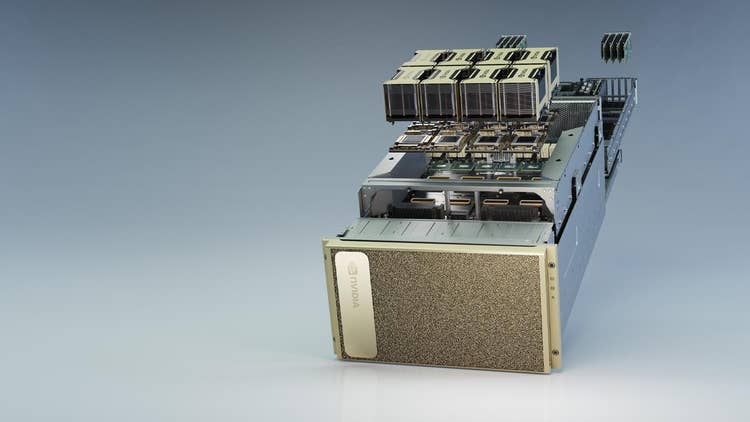

DGX A100 Provides End-To-End System For Inference, Training

With the new A100, Nvidia is refreshing its DGX deep learning system lineup with the new DGX A100, which packs eight A100s, two 64-core AMD EPYC processors and 200Gbps Mellanox HDR interconnects.

The company said the new DGX A100 provides 5 petaflops of AI performance and offers a major disruption in the economics of data centers running AI workloads.

Thanks to the performance and efficiency gains Nvidia has made with the A100 as well as the high throughput enabled by Mellanox interconnects, Nvidia said five DGX A100s can do the same amount of AI inference and training work as 50 DGX-1 systems and 600 CPU systems, cutting the cost of that work to one-tenth and the power it requires to one-twentieth of previous levels.

The eight A100s combined provide 320 GB of total GPU memory and 12.4 TB per second of memory bandwidth, while the DGX A100's six Nvidia NVSwitch interconnect fabrics, combined with third-generation NVLink, provide 4.8 TB per second of bidirectional bandwidth. Meanwhile, the system's nine Mellanox ConnectX-6 HDR 200Gb-per-second network interfaces provide 3.6 terabits per second of bidirectional bandwidth.
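The system-level totals above are simple multiples of per-component figures. The sketch below reproduces them, assuming per-unit numbers from Nvidia's published specifications rather than from this article: 40 GB of HBM2 at about 1,555 GB/s per A100, 600 GB/s of total NVLink bandwidth per A100, and 200 Gb/s per network interface counted once in each direction.

```python
# Per-unit figures are assumptions taken from Nvidia's published A100 (40 GB)
# and ConnectX-6 specifications; the article itself gives only the totals.
GPUS = 8
HBM_PER_GPU_GB = 40
HBM_BW_PER_GPU_GBPS = 1555     # gigabytes per second
NVLINK_BW_PER_GPU_GBPS = 600   # gigabytes per second, both directions combined
NICS = 9
NIC_GBITPS = 200               # gigabits per second, per direction


def dgx_a100_totals() -> dict[str, float]:
    """Aggregate per-component figures into system-level totals."""
    return {
        "gpu_memory_GB": GPUS * HBM_PER_GPU_GB,
        "memory_bw_TBps": GPUS * HBM_BW_PER_GPU_GBPS / 1000,
        "nvlink_bw_TBps": GPUS * NVLINK_BW_PER_GPU_GBPS / 1000,
        # Count each 200 Gb/s link in both directions for "bidirectional".
        "network_bw_Tbps": NICS * NIC_GBITPS * 2 / 1000,
    }


for name, value in dgx_a100_totals().items():
    print(f"{name}: {value}")
```

The results (320 GB, about 12.4 TB/s, 4.8 TB/s and 3.6 Tb/s) line up with Nvidia's stated totals. Note that the network figure is in terabits, while the memory and NVLink figures are in terabytes.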

Ecosystem Support With HGX A100 Server Building Block

To support the launch of the Nvidia A100, the company is offering the HGX A100 server building block that can help OEMs build their own A100-based systems.

The HGX A100 is an integrated baseboard that allows for GPU-to-GPU communication between eight A100s, thanks to Nvidia's NVLink and NVSwitch. With the A100's multi-instance GPU capability, the eight GPUs can serve up to 56 GPU instances at once (seven per GPU), each faster than the Nvidia T4. The eight A100s can also pool their resources to serve as one giant GPU capable of 10 petaflops of AI performance.

OEM partners expected to release A100-based servers include Atos, Dell Technologies, Fujitsu, Gigabyte, H3C, Hewlett Packard Enterprise, Inspur, Lenovo, Quanta and Supermicro.

Several cloud service providers are also expected to spin up A100-based instances, including Alibaba Cloud, Amazon Web Services, Baidu Cloud, Google Cloud and Tencent Cloud.

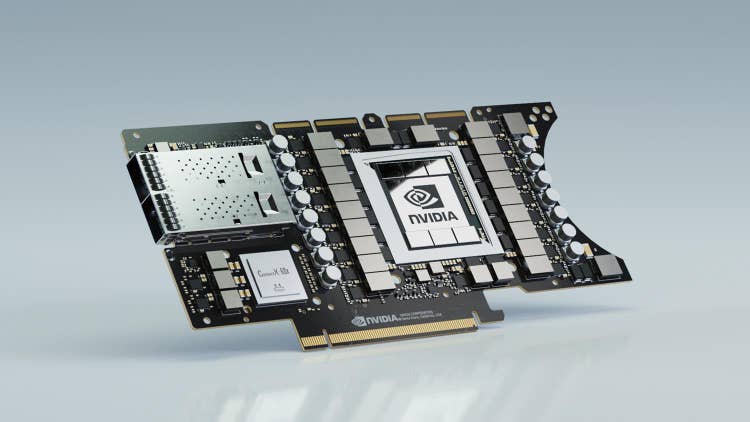

EGX A100 Combines A100 With Mellanox SmartNIC For The Edge

Nvidia is expanding its edge computing capabilities with the new EGX A100, which combines an A100 GPU with a Mellanox ConnectX-6 Dx SmartNIC.

The company said servers powered by EGX A100 can "carry out real-time processing and protection of the massive amounts of streaming data from edge sensors," which makes the product applicable for everything from inference at the edge to 5G applications.

Thanks to the on-board Mellanox network card, the EGX A100 can receive up to 200 Gbps of data and route it directly to GPU memory for AI or 5G signal processing. Nvidia said this makes the product the "ultimate AI and 5G platform" for intelligent real-time decision making, from retail stores to hospitals.

The company said the EGX A100 will start shipping at the end of the year.

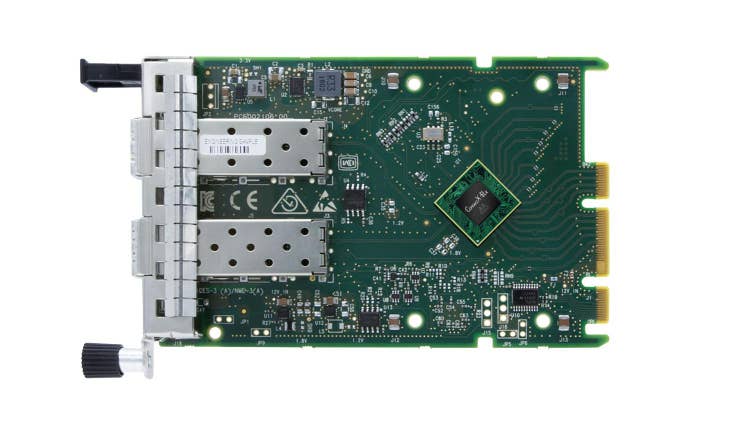

New Mellanox SmartNIC Optimized For 25 Gbps Network Speeds

With Mellanox Technologies now owned by Nvidia, the company announced the new Nvidia Mellanox ConnectX-6 Lx SmartNIC, which can enable 25 Gbps speeds in data centers.

The new SmartNIC includes two ports of 25 Gbps or a single port of 50 Gbps for Ethernet connectivity with PCIe Gen 3.0 and 4.0 host connectivity. It comes with security features such as hardware root of trust, connection tracking for stateful L4 firewalls and in-line IPSec cryptography acceleration.
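Connection tracking is what turns a simple packet filter into a stateful L4 firewall: the device remembers flows it has allowed out and accepts inbound packets only when they belong to one of those flows. The Python sketch below illustrates that idea conceptually. It is not how the ConnectX-6 Lx implements the feature in hardware, and the `FiveTuple` and `ConnTracker` names, the outbound port allowlist and the absence of TCP state and timeouts are all simplifying assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FiveTuple:
    """Identifies a flow by addresses, ports and transport protocol."""
    src_ip: str
    src_port: int
    dst_ip: str
    dst_port: int
    proto: str  # e.g. "tcp" or "udp"

    def reply(self) -> "FiveTuple":
        """The tuple a packet travelling in the opposite direction would carry."""
        return FiveTuple(self.dst_ip, self.dst_port, self.src_ip, self.src_port, self.proto)


class ConnTracker:
    """Toy stateful L4 filter (hypothetical): allowed outbound packets open a
    flow; inbound packets pass only if they are replies to a tracked flow."""

    def __init__(self, allowed_outbound_ports: set[int]) -> None:
        self.allowed = allowed_outbound_ports
        self.flows: set[FiveTuple] = set()

    def outbound(self, pkt: FiveTuple) -> bool:
        if pkt.dst_port not in self.allowed:
            return False
        self.flows.add(pkt)  # remember the flow so replies can be matched
        return True

    def inbound(self, pkt: FiveTuple) -> bool:
        return pkt.reply() in self.flows


ct = ConnTracker({443})
req = FiveTuple("10.0.0.5", 50000, "93.184.216.34", 443, "tcp")
print(ct.outbound(req))         # True: port 443 is allowed, flow recorded
print(ct.inbound(req.reply()))  # True: matches the tracked flow
```

An unsolicited inbound packet, or a reply to a different source port, finds no matching entry and is rejected. Offloading this lookup to the NIC keeps that per-packet work off the host CPU.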

The network card also uses GPUDirect RDMA acceleration for NVMe over Fabrics storage, scale-out accelerated computing and high-speed video transfer applications.

Nvidia said the new SmartNIC is sampling with customers now, and it's expected to start shipping for general availability in the third quarter of this year.