Omni-Path Spin-Out Aims To Help HPC Partners Keep Nvidia In Check

‘One of our competitors is going full solution. They’re getting deeper and deeper into not needing anybody else. And so that eliminates choice and creates opportunity for us,’ says Phil Murphy, CEO of Intel Omni-Path spin-out Cornelis Networks, in an interview with CRN.

‘We Can Make Sure That There's Choice Across The Board’

Something unexpected has happened in the high-performance computing space: Several months after Mellanox Technologies, the only high-speed interconnect player in town, became part of Nvidia’s ascendant data center business, Intel’s deprecated competing product line is being revived in a spun-off firm that will give partners more choice again in the growing market.

Phil Murphy, CEO of the Intel spin-out, Cornelis Networks, believes the company’s Omni-Path Architecture interconnect technology can help serve as a check against the vertical integration and industry consolidation that is being pushed by Nvidia. The GPU juggernaut is increasingly pushing complete solutions like the DGX systems, he said, and could eventually own three important components in HPC — the GPU, the interconnect and the CPU — with its pending acquisition of Arm.


“What we’ve seen is industry consolidation, so elimination of choice. We’ve seen vertical integration, which makes it really difficult for folks that are not part of that vertical integration, the other OEMs, for example, to know what path to take,” Murphy said in an interview with CRN on Tuesday. “We’ve seen one of our competitors... I guess I should be careful what I say here. But one of our competitors is going ‘full solution.’ They’re getting deeper and deeper into not needing anybody else. And so that eliminates choice and creates opportunity for us.”

Nvidia declined to comment.

Cornelis came out of stealth mode Wednesday and announced that it had raised a $20 million Series A funding round led by Downing Ventures, with participation from Chestnut Street Ventures and Intel Capital, which gained a stake in the company in exchange for transferring Omni-Path Architecture’s intellectual property, product inventory and support agreements to Cornelis.

In his interview with CRN, Murphy, one of Omni-Path’s architects, said that now that the Omni-Path technology is no longer under Intel, a wider range of partnerships becomes possible.

“What we can do as an independent company is we can make sure that there’s choice across the board,” he said. “We can work with all the different distribution partners, but we can also work with other technologies, not necessarily Intel technologies, and broaden the reach.”

What follows is an edited transcript of CRN’s interview with Murphy, who talked about Omni-Path’s advantages over Nvidia’s Mellanox InfiniBand interconnect, the company’s ability to serve 100- and 200-gigabit needs, future product plans and how Cornelis wants to provide customers and partners more choice in the HPC market.

Cornelis Networks is based in Wayne, Pennsylvania. Is that where you and some of your other colleagues were when you worked for Intel?

I used to work for a mainframe company out here in the Philadelphia area, originally called Burroughs Corporation, which eventually changed its name to Unisys Corporation, so they were out here. In the year 2000, I actually started an InfiniBand-based company to go after high-performance computing with a new high-performance interconnect, and we ran independently here for six years. Then we got acquired by QLogic in 2006, which formed the basis of the QLogic InfiniBand team. I was the vice president of engineering there from 2006 to 2012, and that’s when Intel brought us in and then a little bit later bought a team from Cray and created Omni-Path. We’ve been out here in the Philadelphia area the whole time.

How did Cornelis Networks come to be? How were you able to get the IP and products from Intel?

The press release mentioned that we actually completed two agreements simultaneously. The first one was a company financing, a Series A financing, and we were able to complete that with a great venture firm out of the U.K. called Downing Ventures. They’re just a really great deep tech company that shares our vision, so we’re really, really excited about working with those guys. And of course, Intel Capital also participated in this, so we’re now an Intel portfolio company as well. And the other connection we have is that the founders were all University of Pennsylvania grads. The Alumni Ventures Group has a bunch of affiliated groups, and the one out here in Philadelphia is called Chestnut Street Ventures, and they participated as well. And so we’ve completed that.

But then the second thing we completed is the spin-out of the Omni-Path group. As you can imagine, we recognized a while ago that Intel has shifting priorities. They had a certain vision for what they were going to do back in 2012 in high-performance computing, but priorities change, and they change relatively slowly, but they do change. So it started becoming apparent to me that Intel was going to de-emphasize this product line. And I spent the bulk of my career working on it, and I could see that it still had very big potential. So I approached them quite a while ago and said, “You know, I don’t think this fits in Intel anymore. I still think it is fantastic technology. And I think that if we set it free, it can really make a big impact.” And fortunately, Intel agreed that, “Yeah, becoming an independent company can actually really unlock the value here.” And so we spent some time working out the agreements between ourselves and Intel about how this would all play out. And once we got that in place, our next task was to find the right financing partner to join in as well. We’re very fortunate that we found those guys.

When did you start those conversations with Intel about spinning out Omni-Path?

I would say it was probably 18 months ago. We knew that Intel was de-emphasizing it at that point in time, but there was a lot going on as well. They were de-emphasizing it, but they hadn’t made any firm decisions on what was going to happen. And so we started quite a while ago, and early this year, finished all the agreements with Intel on how this was going to unfold, because as you can probably imagine, Intel’s contributing a lot. They’re contributing the product line, all the inventory that goes with it, so we have access to that. We have the IP. We actually have a support services agreement with Intel in which we’re going to be supporting their existing customers. There was a lot to work out between us and Intel. Once that happened, we were searching for the right financing partner to lead our round. That can sometimes take some time. It’s the second time we’ve been through it, but it’s a challenge.

The press release states that Omni-Path has more than 500 end-user sites. How many customers does that constitute?

That’s roughly 500. 500 end users. It depends on what you mean, though. Our distribution model has always been indirect, which means there’s a business development team out making sure that end users are aware of our products and creating that demand, but we don’t sell to them directly. Our direct customers are OEMs and system integration partners around the world, but the number of end-user sites is on the order of 500.

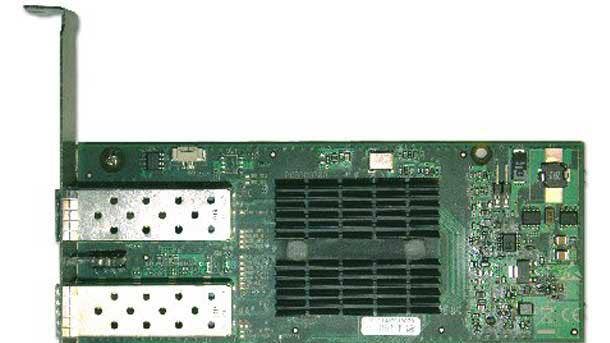

The press release mentions customers using Omni-Path to reach 200-Gbps speeds. Does that mean they are using the OPA 200 product that was canceled at Intel?

What that phrasing in the press release means is that we have a 100-gig product, and we’ve been working on that and refining it for a long time. And, because of the great cost structure with that, we do dual-rail implementations to get to 200 gigabits per second. As an example, there was a recent [supercomputer] called Magma that the [U.S. Department of Energy’s National Nuclear Security Administration] just deployed; they did their analysis and determined [to use] dual-rail Omni-Path, because what you really want to do is connect a 100-gig adapter to each socket of a dual-socket [Intel Xeon server] rather than have the 200-gig going into one. And so, because the cost structure of the product line is far lower than that of, let’s say, competitive products, we can address the 100- and 200-gig markets very effectively.

Can you shed any light on the product road map?

We’re not going to give out details of the road map publicly, but we’re already engaged on next-generation development. These things take between two and three years, and that’s only if you have a fantastic starting point. So we have a foundation: Intel in 2012 brought in a team from QLogic and technology from QLogic. They also brought in a team and technology from Cray, which had been working on their Aries interconnect in that timeframe, and then [Intel] invested in that a lot for the next five years, and so that’s the technology base that we’re starting from, and, frankly, it would be impossible to try to tackle this without that.

With that, though, we think we can get to market in the next two to three years with a next-generation product, and that will be consistent with the bandwidth that you would expect to see in that timeframe. Then there’s a lot of IP that we’re developing right now for acceleration in multiple different dimensions: for standard things like congestion control and dynamic adaptive routing, things of that nature, but also things that are specifically focused on accelerating artificial intelligence, which we think is where the huge opportunity is, given the convergence going on right now in our industry. What I see happening is that the techniques that have long been in play in high-performance computing are starting to become more and more adopted in artificial intelligence. How do we scale this thing out? Well, guess what? We solved all those problems starting 20 years ago in high-performance computing. And so we just see this market coming towards us as opposed to us chasing that market, which is really exciting.

How many employees do you have?

Today we have 50 employees. That’s our starting point. Obviously, we need to grow significantly beyond that to do the major things that we have on our plate. We’re going to continue to sell the current existing product line and support that, and of course, develop the next-generation products.

Are they all from Intel, or are they also coming from different places?

We have a long history in the industry, and so when we did our transaction with Intel, we were permitted to make offers to quite a few people at Intel, and we were successful in recruiting those folks. But then we also have many folks outside of Intel that we’ll also bring into the company. The founders have been in the industry for a long time and have a lot of contacts. And there’s a lot of excitement around this, as you can imagine, just for the reasons that you already know.

What we’ve seen is industry consolidation, so elimination of choice. We’ve seen vertical integration, which makes it really difficult for folks that are not part of that vertical integration, the other OEMs, for example, to know what path to take. We’ve seen one of our competitors... I guess I should be careful what I say here. But one of our competitors is going full solution. They’re getting deeper and deeper into not needing anybody else. And so that eliminates choice and creates opportunity for us.

Before the spin-out was announced, it seemed like Nvidia’s Mellanox InfiniBand interconnect product line was the only game in town, and that was a concern an HPC integrator had relayed to me while Nvidia’s acquisition of Mellanox was still pending. Now, with Omni-Path being revived, some competition is returning to the high-speed interconnect space.

[Nvidia is] talking about bringing in Arm, and maybe they’ll be successful. And they already had DGX and other platforms, and I think they’re investing more heavily in platforms as well, which is a threat to certain players in the market. And so [those players] need allies to help them combat that.

I would consider Mellanox, now owned by Nvidia, as your main competitor, but are there other players that you would consider competitors as well?

There are multiple choices for interconnects in high-performance computing. For workloads that are not highly sensitive to network performance, we see a lot of Ethernet being used. I think as we transition more into AI workloads, we’re going to see less and less of that, frankly. I think we’re going to see more purpose-built interconnects, because what we’re really trying to do is make sure that we can deliver the attributes that the workload really needs. But still, Ethernet is a choice for some application environments.

And so then beyond that, there really is one other existing choice, and that’s Nvidia’s Mellanox InfiniBand. It’s a strong choice, but over time it has come to be supplied by only one vendor, so we’d call it a single-source technology at this point. And that technology choice has been pulled more deeply in a certain direction that’s going to support a subset of the market: obviously Nvidia’s GPUs, where InfiniBand is going to be pulled more tightly into supporting that specific GPU, and maybe that specific processor if [Arm gets acquired by Nvidia]. And so, that’s fine. And then there’s one that’s under development at [Hewlett Packard Enterprise]; it’s called Slingshot. It’s claimed to be an Ethernet technology, and what that really means is that when it’s communicating with an Ethernet device, it’ll have the ability to do that. At some point that could also be a competitor.

What would you say are the main points of differentiation between Omni-Path and Mellanox's InfiniBand technology?

People aren’t going to remember this, but when InfiniBand was being defined (this was, yeah, 20 years ago), it was not being defined for high-performance computing at all. It was really defined to kind of replace the channel interface in IBM mainframes. It was something to connect I/O. Back in those days, servers were based on PCI-X. It was crazy, but servers had this 64-bit parallel bus that they were trying to run at 133 megahertz, which was very, very difficult. That’s where they were, and then they had to figure out what to do next. Intel had a technology called Next-Generation I/O. IBM, back in the day, was participating, and they had something called Future I/O, and they were both trying to solve the same problem using a high-speed serial interface. That’s where InfiniBand was born: the warring parties put their differences [aside] and created the InfiniBand Trade Association to go after solving I/O in the enterprise.

So if you think about what was going on [from] 2000 through 2002: the dot-com bubble burst, we had 9/11, and we had lots of terrible things going on. Because of that, the original idea behind InfiniBand just went by the wayside. Intel eventually came out with something that was called Arapaho at the time, but it ended up being PCI Express, and that made what InfiniBand was originally going after unnecessary. InfiniBand was going to replace Fibre Channel and Ethernet in the data center. That never happened. So InfiniBand was looking for a home, and Mellanox pivoted to high-performance computing at that point.

Anyway, the underlying architecture for InfiniBand is not really geared for high-performance computing. Our architecture was built from the ground up to focus on high-performance computing, so it’s very different. Mellanox will tell you that there was a war between onload and offload, and that offload won. That’s not true at all. What’s really going on here is that you need to strike the right balance between what’s running on the server and what’s running in the adapter, especially in high-performance computing. In an I/O paradigm, you have a server, and it’s talking to a couple hundred peripherals, for lack of a better word. So you’ve got some finite number of things that you’re talking to. In high-performance computing, it’s very different. You’re on a node, and it might be talking to thousands or even tens of thousands of other devices. So the problem is very different, and you need to make sure that you have the right level of functionality in the host and the right level of functionality in the adapter. And we strike that perfect balance.

Our technology has had huge wins at the National Nuclear Security [Administration] branch of the DOE. We won the TLCC-2 project with our [InfiniBand-based QDR] architecture. And then Omni-Path won CTS-1, which is a follow-on to that, because they were able to test our architecture and prove that it scaled far better than the competition.

[Omni-Path Architecture] is better suited for HPC because it was built from the ground up with that focus. At its inception, InfiniBand was not targeting HPC, but rather replacing PCI-X and potentially the local and storage area networks in the data center. Because of that, the initial architecture and verbs-based software infrastructure were optimized for that market. On the other hand, Omni-Path is a combination of technologies originally developed by Cray and by QLogic, each with a singular focus on the needs of the HPC market.

In terms of acquiring the IP and inventory from Intel, did you pay a sum for that?

I don’t want to disclose any of the deal terms, but the way to look at this is that Intel is an investor in the company rather than someone that we’re paying. There are multiple ways to compensate Intel. One is cash, which we don’t have, and another is through equity, through ownership, let’s say.

What can you now do as a standalone company that the Omni-Path business under Intel couldn't?

When you’re inside of Intel, Intel has a certain set of priorities. They have their own processors, for example, that they’re focused on. And they have certain levels of integration that they were focused on, and so that pulled the product line in a certain direction.

What we can do as an independent company is, we can make sure that there’s choice across the board. We can work with all the different distribution partners, but we can also work with other technologies, not necessarily Intel technologies, and broaden the reach.

I need to be somewhat careful, because Intel is a big supporter, but they also recognize that the world has changed and that there are other options out there. That’s a big piece of it: the ability to span a lot of different technologies, both Intel and non-Intel.

What are you going to use the Series A funding for?

So we have three swim lanes that are going on in parallel. One swim lane is just making sure that we continue to provide excellent support for all existing customers as well as our new customers. So that’s No. 1. And No. 2 is continuing to improve, market and sell our current generation of products.

And then third, of course, is developing the next-generation products. Half of the company will be focused on the third part, which is the development of next-generation products, and the other half will be focused on the first two priorities.