The 10 Coolest AI Chips Of 2021

A look at the 10 coolest AI chips of 2021, which include processors from semiconductor giants, cloud service providers and chip startups.

Featuring AI Chips From Large And Small Companies

The demand for AI applications, the ever-growing nature of deep learning models and their increasing complexity mean there is plenty of room for competition when it comes to making computer chips more powerful and efficient for such workloads. GPU juggernaut Nvidia may hold the AI chip crown in multiple respects, but that isn’t stopping semiconductor companies both large and small from designing their own AI chip architectures that offer differentiation in terms of features, performance and targeted applications.

What follows are the 10 coolest AI chips of 2021, which include processors from semiconductor giants Intel, AMD and Nvidia, computing juggernaut IBM, cloud service providers Google Cloud and Amazon Web Services and AI chip startups Cerebras Systems, Mythic and Syntiant.

Third-Generation Intel Xeon Scalable (Ice Lake)

Intel’s new third-generation Xeon Scalable CPUs are not dedicated AI accelerators like the rest of the computer chips on this list, but the chipmaker said the processors, code-named Ice Lake, are the only data center CPUs with built-in AI capabilities. This is made possible by Ice Lake’s Intel DL Boost feature, which consists of Vector Neural Network Instructions for accelerating inference workloads. Ice Lake also comes with Intel Advanced Vector Extensions 512, or Intel AVX-512, to boost machine learning performance. The chipmaker has touted that Ice Lake can provide 30 percent better performance than Nvidia’s A100 GPU “across a broad mix of 20 popular AI and machine learning workloads.”

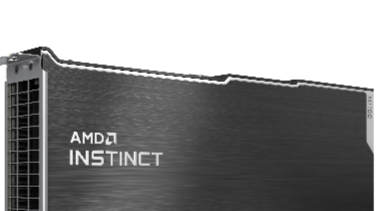

AMD Instinct MI200 GPU

AMD is challenging Nvidia’s AI and high-performance computing prowess with its new Instinct MI200 GPU accelerators, which the chipmaker revealed in November. The company said the MI200 series provides up to 4.9 times faster HPC performance and up to 20 percent faster AI performance compared with the 400-watt SXM version of Nvidia’s flagship A100 GPU configured with 80 GB of memory. The flagship GPU in the lineup, the Instinct MI250X, has the OAM form factor and features 220 compute units, 14,080 stream processors and 128 GB of HBM2e. The chips also feature up to 58 billion transistors using a 6-nanometer process and up to 880 second-generation Matrix Cores.
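As a quick sanity check on those specifications, the per-compute-unit ratios can be worked out from the totals quoted above. This is only an illustrative sketch: it assumes the "up to 880" Matrix Core figure refers to the flagship MI250X, and the variable names are made up for the example.

```python
# Figures quoted in the article for the Instinct MI250X.
compute_units = 220
stream_processors = 14_080
matrix_cores = 880  # assumption: the "up to 880" figure applies to the MI250X

# Ratios implied by those totals.
sp_per_cu = stream_processors / compute_units
mc_per_cu = matrix_cores / compute_units

print(f"Stream processors per compute unit: {sp_per_cu:.0f}")
print(f"Matrix Cores per compute unit: {mc_per_cu:.0f}")
```

The totals divide evenly, which suggests the quoted numbers describe the same configuration.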

AWS Trainium

Amazon Web Services said it will deliver the “most cost-effective training in the cloud” with Trainium, its new custom-designed AI chip that was made available in new instances in November. The cloud service provider said its new Trainium-powered Trn1 instances provide up to 40 percent lower cost to train deep learning models in comparison to its P4d instances that are powered by Nvidia’s A100 GPUs. The Trn1 instances also provide 800 Gbps in EFA networking bandwidth, double that of GPU-based EC2 instances. In addition, Trn1 instances can be scaled to more than 10,000 Trainium accelerators with petabit-scale networking to provide supercomputer-class performance for uber-fast training of large and complex models.

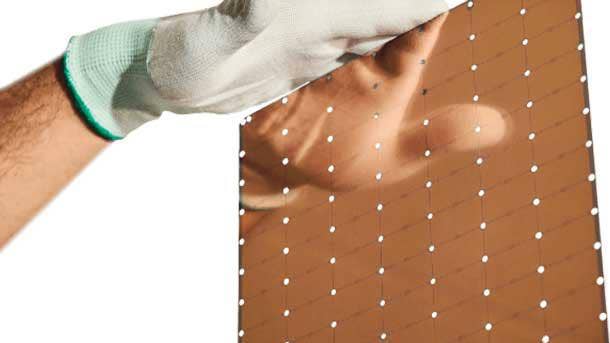

Cerebras Wafer Scale Engine 2

Cerebras Systems said its Wafer Scale Engine 2 chip is the “largest AI processor ever made,” consisting of 2.6 trillion transistors, 850,000 cores and 40 GB of on-chip memory. The startup said those specifications give the WSE-2 chip a massive advantage over GPU competitors, with 123 times more cores and 1,000 times more on-chip memory. Revealed earlier this year, the WSE-2 chip powers Cerebras’ purpose-built CS-2 system, which “delivers more compute performance at less space and less power than any other system,” according to the startup. More specifically, Cerebras said the CS-2 can deliver hundreds or thousands of times more performance than alternatives depending on the workload, and a single system can replace clusters consisting of hundreds or thousands of GPUs.
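The GPU comparison can be turned around to see roughly what kind of GPU Cerebras is measuring against. The sketch below is a back-of-the-envelope estimate only: it reads "123 times more" and "1,000 times more" as simple ratios, uses decimal units (1 GB = 1,000 MB), and the article does not name the GPU in question.

```python
# WSE-2 figures and comparison ratios quoted in the article.
wse2_cores = 850_000
wse2_memory_gb = 40
core_ratio = 123       # "123 times more cores", read as a plain ratio
memory_ratio = 1_000   # "1,000 times more on-chip memory", read as a plain ratio

# Implied figures for the unnamed GPU being compared.
implied_gpu_cores = wse2_cores / core_ratio
implied_gpu_memory_mb = wse2_memory_gb * 1_000 / memory_ratio  # decimal MB

print(f"Implied GPU cores: about {implied_gpu_cores:,.0f}")
print(f"Implied GPU on-chip memory: about {implied_gpu_memory_mb:.0f} MB")
```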

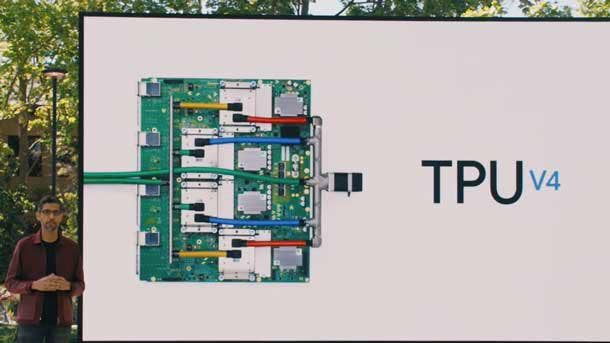

Google TPU v4

Google’s TPU v4 is the latest generation of the company’s Tensor Processing Units that are used to power internal machine learning workloads and some Google Cloud instances. Google CEO Sundar Pichai unveiled the new TPUs at the Google I/O event in May, saying that the TPU v4 provides more than double the performance of the previous generation. When 4,096 of them are combined into a TPU v4 Pod, the system can deliver 1.1 exaflops of peak performance, according to Google, which is equivalent to more than a quintillion floating point operations per second. The company recently said that the TPU v4 was able to set new performance records in four MLPerf benchmarks, which showed that the chip was faster than Nvidia’s A100 GPU.
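To put the pod figure in per-chip terms, the quoted peak can be divided across the 4,096 chips. This is a rough illustration that assumes the 1.1-exaflop figure scales evenly across the pod; it is not an official per-chip specification.

```python
# Pod-level figures quoted in the article.
pod_peak_flops = 1.1e18   # 1.1 exaflops
chips_per_pod = 4_096

# Implied peak per chip, assuming the pod total is an even sum over all chips.
per_chip_flops = pod_peak_flops / chips_per_pod
per_chip_teraflops = per_chip_flops / 1e12

print(f"Implied peak per TPU v4 chip: about {per_chip_teraflops:.0f} teraflops")
```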

IBM Telum Processor

The IBM Telum Processor is the company’s first CPU to feature on-chip acceleration for AI inferencing workloads. The processor is made for the company’s next-generation IBM Z and LinuxOne mainframes, and the built-in AI acceleration allows users to conduct a high volume of inference on transactions without sacrificing performance. The processor contains eight cores, each of which has a frequency of more than 5 GHz and 32 MB of private L2 cache; together, the eight L2 caches combine to form a 256 MB virtual L3 cache. The processor’s inference capabilities are made possible by an integrated AI accelerator that is capable of more than 6 teraflops, or 6 trillion floating point calculations per second.

Intel Loihi 2 Neuromorphic Chip

Intel’s Loihi 2 neuromorphic chip is a new kind of AI processor that aims to mimic the functions and form of the brain’s biological neural networks. The company said neuromorphic computing “represents a fundamental rethinking of computer architecture at the transistor level,” and that its capabilities remain unrivaled when it comes to intelligently processing, responding to and learning from real-world data “at microwatt power levels and millisecond response times.” Loihi 2 represents the second generation of Intel’s neuromorphic chips, and its features include generalized event-based messaging, which allows spike messages to carry integer-value payloads “with little extra cost in either performance or energy.” It also has greater neuron model programmability, enhanced learning capabilities, faster circuit speeds and “numerous capacity optimizations to improve resources density.”

Mythic M1076 Analog Matrix Processor

Mythic is promising best-in-class performance, scalability and efficiency with its recently revealed M1076 Analog Matrix Processor, also known as Mythic AMP. The startup said the M1076 AMP can deliver up to 25 trillion operations per second of AI compute performance in a small 3-watt power envelope, requiring 10 times less power than GPU or system-on-chip alternatives. The new processors support use cases ranging from video analytics to augmented reality, and they come in a variety of form factors, from an ultra-compact PCIe M.2 card to a PCIe card that contains 16 AMPs.
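Those two headline numbers imply an efficiency figure that is easy to work out. The sketch below is only an estimate: it treats the 25 trillion operations per second as achievable at the full 3-watt envelope, and it reads "10 times less power" as a plain factor of 10 for the unnamed alternatives.

```python
# Figures quoted in the article for the M1076 AMP.
peak_tops = 25            # trillion operations per second
power_watts = 3           # quoted power envelope
power_advantage = 10      # "10 times less power", read as a plain factor

# Implied efficiency, and the power the alternatives would draw at that factor.
tops_per_watt = peak_tops / power_watts
implied_alternative_watts = power_watts * power_advantage

print(f"Implied efficiency: about {tops_per_watt:.1f} TOPS per watt")
print(f"Implied power of alternatives: about {implied_alternative_watts} watts")
```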

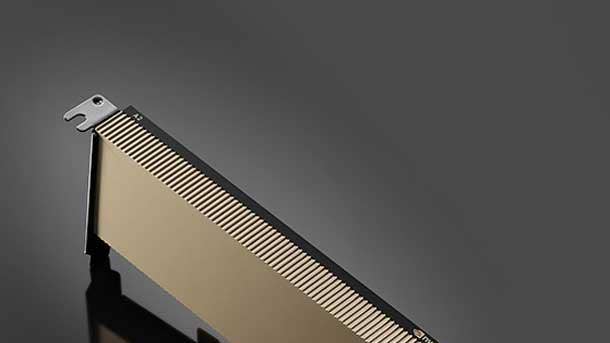

Nvidia A2 Tensor Core GPU

Nvidia is expanding its portfolio of Ampere data center GPUs with A2, a “low-power, small-footprint accelerator for AI at the edge.” The A2 GPU comes in a one-slot, low-profile PCIe 4.0 card with 10 RT Cores, 16 GB of GDDR6 memory and a maximum thermal design power between 40 and 60 watts. Compared with Intel’s Xeon Gold 6330N CPU, the A2 GPU is 20 times faster for text-to-speech with the Tacotron2 and Waveglow models, seven times faster for natural language processing with the BERT-Large model and eight times faster for computer vision with the EfficientDet-D0 model, according to Nvidia. The company said it’s also 20 percent faster on the ShuffleNet v2 model and 30 percent faster on the MobileNet v2 model compared with its previous-generation T4 GPU.

Syntiant NDP120

Syntiant wants to bring low-power edge devices to the next level with its new NDP120 Neural Decision Processor. Revealed in January, the NDP120 uses neural processing to enable battery-powered devices to run multiple applications without having a major impact on battery life. The NDP120 is the first chip to use the startup’s Syntiant Core 2 tensor processor platform, which supports more than 7 million parameters and “can process multiple concurrent heterogenous networks,” according to the startup. In addition to the Syntiant Core 2 neural processor, the NDP120 includes an Arm Cortex M0 microcontroller, on-board firmware decryption and authentication and support for up to seven audio streams, among other things. Targeted use cases include mobile phones, earbuds, wearables, smart speakers, laptops, smart home applications and security devices.