11 Big Nvidia Announcements At GTC 2024: Blackwell GPUs, AI Microservices And More

CRN rounds up 11 big announcements Nvidia made at its first in-person GTC in nearly five years, which include the next-generation Blackwell GPU architecture, upcoming DGX systems, updates to its DGX Cloud service, new AI microservices and a plethora of fresh offerings from partners like Dell Technologies, Amazon Web Services and others.

After more than doubling its revenue last year thanks to high demand for its AI chips and systems, Nvidia showed no signs of slowing down at its GTC 2024 event this week.

To the contrary, the Santa Clara, Calif.-based company revealed its plan to accelerate its takeover of the tech world with its GPU-accelerated infrastructure and services.

[Related: Analysis: How Nvidia Surpassed Intel In Annual Revenue And Won The AI Crown]

Case in point: The chip designer showed off its next-generation Blackwell GPU architecture, which is coming later this year to accelerate AI and other workloads in data centers, even though its H200 GPU, announced last fall, hasn’t become widely available yet.

This is part of an updated strategy Nvidia announced last October that moved the company to a yearly release cadence for new GPUs from its previous two-year rhythm.

In his Monday keynote, Nvidia CEO Jensen Huang doubled down on his belief that the future of computing is accelerated with his company’s GPUs and systems rather than the x86 CPUs that run the majority of applications in data centers and PCs today.

“We need another way of doing computing, so that we can continue to scale, so that we can continue to drive down the cost of computing, so that we can continue to consume more and more computing while being sustainable,” he said. “Accelerated computing is a dramatic speed-up over general-purpose computing, in every single industry.”

To expand upon its dominance in the AI computing market, Nvidia not only revealed Blackwell as the successor to its successful Hopper GPU architecture but also showed off new GPU-accelerated systems, software, services and other products meant to enable the fastest possible performance and accelerate the development of AI applications.

What follows are 11 big announcements Nvidia and its partners made at this week’s GTC 2024 event. These announcements included the new B100, B200 and GB200 chips; new Nvidia DGX systems; new microservices meant to accelerate AI software development; new high-speed network platforms; new additions to the DGX Cloud service; the winners of this year’s Nvidia Partner Network awards; and a plethora of new offerings from Nvidia partners.

Additional reporting by Joseph Kovar and Mark Haranas.

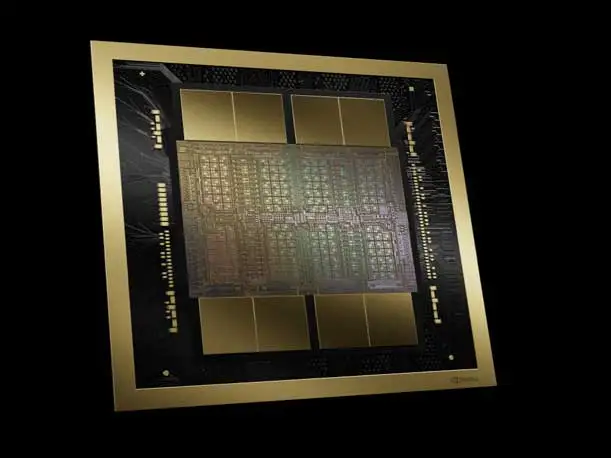

Nvidia Reveals Blackwell, Successor to Hopper GPU Architecture

Nvidia revealed its next-generation Blackwell GPU architecture as the much-hyped successor to the AI chip giant’s Hopper platform, claiming it will enable up to 30 times greater inference performance and consume 25 times less energy for massive AI models.

The first confirmed designs to use Blackwell include the B100 and the B200 GPUs, the successors to the Hopper-based H100 and H200 for x86-based systems, respectively. The B200 is expected to feature greater high-bandwidth memory capacity than the B100.

The initial designs also include the GB200 Grace Blackwell Superchip, which, on a single package, connects two B200 GPUs with the company’s Arm-based, 72-core Grace CPU that has previously been paired with a single H200 and H100.

It’s the GB200 where Nvidia sees Blackwell truly shining when it comes to the most demanding AI workloads, in particular massive and complex AI models called Mixture-of-Experts that combine multiple models, pushing the parameter count past 1 trillion.

In a liquid-cooled system with 18 GB200s, Nvidia said the system’s 36 Blackwell GPUs are capable of providing up to 30 times faster large language model inference performance compared to an air-cooled system with 64 H100 GPUs.

Nvidia said major OEMs and cloud service providers are expected to release Blackwell-based products later this year, though an exact timeline on launch plans is not yet known.

Cloud service providers expected to provide Blackwell-based instances include Amazon Web Services, Microsoft Azure, Google Cloud and Oracle Cloud Infrastructure as well as several other players, like Lambda, CoreWeave and IBM Cloud.

On the server side, Cisco Systems, Dell Technologies, Hewlett Packard Enterprise, Lenovo and Supermicro are expected to offer a plethora of Blackwell-based systems. Other OEMs supporting the GPUs include ASRock Rack, Asus, Eviden and Gigabyte.

A CRN report published Monday provides an in-depth look at Blackwell’s architecture.

Nvidia Plans New DGX Systems With B200, GB200 Chips

Nvidia is announcing two new flavors of DGX systems with Blackwell GPUs:

- The DGX B200 is an air-cooled design that pairs eight B200 GPUs with two x86 CPUs. This system is available in a DGX SuperPod cluster.

- The DGX GB200 is a liquid-cooled design that uses GB200 Grace Blackwell Superchips. Eight of these systems make up a DGX SuperPod cluster, giving it a total of 288 Grace CPUs, 576 B200 GPUs and 240TB of fast memory. This enables the DGX SuperPod to deliver 11.5 exaflops, or quintillion calculations per second, of FP4 computing.

The GB200-based SuperPod is built using a new version of a rack-scale architecture Nvidia introduced with AWS last year to power large generative AI applications.

Called the GB200 NVL72, it’s a “multi-node, liquid-cooled, rack-scale system for the most compute-intensive workloads,” according to Nvidia, and it contains 36 GB200 Grace Blackwell Superchips as well as the company’s BlueField-3 data processing units (DPUs).
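The SuperPod totals cited above follow from simple multiplication. As a rough back-of-the-envelope sketch in Python, assuming each of the eight systems holds the 36 superchips described for the GB200 NVL72 (the variable and function names are illustrative, and the per-GPU figure is derived from the article's totals rather than taken from an Nvidia specification):

```python
# Back-of-the-envelope check of the DGX SuperPod figures quoted in this article.
# All names are illustrative; the inputs are the article's own numbers.

SYSTEMS_PER_SUPERPOD = 8        # liquid-cooled GB200 systems per DGX SuperPod
SUPERCHIPS_PER_SYSTEM = 36      # GB200 Grace Blackwell Superchips per system
GRACE_CPUS_PER_SUPERCHIP = 1    # one Grace CPU per superchip
B200_GPUS_PER_SUPERCHIP = 2     # two B200 GPUs per superchip
SUPERPOD_FP4_EXAFLOPS = 11.5    # quoted FP4 compute for the whole SuperPod


def superpod_totals():
    """Return CPU and GPU counts plus the implied FP4 petaflops per GPU."""
    superchips = SYSTEMS_PER_SUPERPOD * SUPERCHIPS_PER_SYSTEM
    grace_cpus = superchips * GRACE_CPUS_PER_SUPERCHIP
    b200_gpus = superchips * B200_GPUS_PER_SUPERCHIP
    # 1 exaflop equals 1,000 petaflops.
    fp4_petaflops_per_gpu = SUPERPOD_FP4_EXAFLOPS * 1000 / b200_gpus
    return {
        "grace_cpus": grace_cpus,
        "b200_gpus": b200_gpus,
        "fp4_petaflops_per_gpu": fp4_petaflops_per_gpu,
    }


if __name__ == "__main__":
    for name, value in superpod_totals().items():
        print(f"{name}: {value}")
```

The counts match the 288 Grace CPUs and 576 B200 GPUs cited for the SuperPod, and the quoted 11.5 exaflops works out to roughly 20 petaflops of FP4 compute per GPU, a derived figure rather than one stated by Nvidia here.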

These DPUs are meant “to enable cloud network acceleration, composable storage, zero-trust security and GPU compute elasticity in hyperscale AI clouds,” the company said.

The GB200 NVL72 systems will become the basis of the infrastructure for Blackwell-based instances offered by Microsoft Azure, AWS, Google Cloud, and Oracle Cloud Infrastructure, the latter three of which will offer Nvidia’s DGX Cloud service on top of the systems.

Nvidia plans to enable OEMs and other partners to build their own Blackwell-based systems with the HGX server board, which links eight B100 or B200 GPUs. The company has not yet announced plans to offer Blackwell GPUs in PCIe cards for standard servers.

Nvidia Reveals Quantum-X800 InfiniBand, Spectrum-X800 Ethernet

Nvidia announced two new high-speed network platforms that deliver speeds of up to 800 Gb/s for AI systems like the upcoming DGX B200 and DGX GB200 servers: the Quantum-X800 InfiniBand platform and the Spectrum-X800 Ethernet platform.

Nvidia said the Quantum-X800, which includes the Quantum 3400 switch and ConnectX-8 SuperNIC, provides five times higher bandwidth capacity and offers a nine-fold increase for in-network computing, which comes out to 14.4 teraflops.

The Spectrum-X800, on the other hand, “optimizes network performance, facilitating faster processing, analysis and execution of AI workloads,” according to Nvidia. This results in expedited “development, deployment and time to market of AI solutions,” the company said.

Nvidia Adds GenAI Microservices To AI Enterprise Platform

Nvidia announced that it’s adding generative AI microservices to its Nvidia AI Enterprise software suite with the goal of helping businesses develop and deploy AI applications faster “while retaining full ownership and control of their intellectual property.”

The microservices include Nvidia Inference Microservices, also known as NIM, which “optimize inference on more than two dozen popular AI models” from Nvidia and partners like Google, Meta, Hugging Face, Microsoft, Mistral AI and Stability AI, according to the company.

The NIM microservices will be accessible from third-party cloud services Amazon SageMaker, Google Kubernetes Engine and Microsoft Azure AI.

Manuvir Das, vice president of enterprise computing at Nvidia, said the NIM microservices are meant to provide software developers with an “easy path to build production applications where they can use these models.”

He added that NIM will enable developers to build applications for an install base of hundreds of millions of GPUs, whether they’re used in the cloud, on-premises servers or PCs.

“We believe that NVIDIA NIM is the best software package, the best runtime for developers to build on top of so that they can focus on the enterprise applications and just let Nvidia do the work to produce these models for them in the most efficient, enterprise-grade manner so that they can just do the rest of their work,” Das said in a briefing.

Among the early adopters are SAP, CrowdStrike, NetApp and ServiceNow, the latter of which is expected to use NIM to “develop and deploy new domain-specific copilots and other generative AI applications faster and more cost effectively,” according to Nvidia.

Beyond NIM microservices, Nvidia is also releasing CUDA-X microservices that “provide end-to-end building blocks for data preparation, customization and training to speed production AI development across industries,” the company said.

This microservice class includes ones for the Nvidia Riva speech and translation AI software development kit, the NeMo Retriever service for retrieval augmented generation and, coming soon, a whole suite of NeMo microservices for custom model development.

Nvidia DGX Cloud Expands Beyond Training To Fine-Tuning, Inference

Nvidia’s DGX Cloud AI supercomputing service is expanding beyond its original purview of training AI models to fine-tuning and inferencing of such models.

This was confirmed to CRN by Alexis Black Bjorlin, vice president and general manager of DGX Cloud at Nvidia, in an interview at the company’s GTC 2024 event.

“You can come to DGX Cloud and have the end-to-end capability. That's a new thing. When we originally launched it before, it was really about training-as-a-service and pre-training to a large degree. And now we recognize people just need an end-to-end solution set,” she said.

Nvidia launched DGX Cloud last year as a service that runs on top of cloud service providers and gives enterprises quick access to the tools and GPU-powered infrastructure they need to create and run generative AI applications and other kinds of AI workloads.

The cloud service is now available on Amazon Web Services, Microsoft Azure, Google Cloud and Oracle Cloud Infrastructure.

Nvidia Honors 13 Top Americas Partners With Fast-Growing AI Businesses

Nvidia named the 13 recipients of this year’s Nvidia Partner Network awards in the Americas, and they include several repeat winners, like World Wide Technology and Insight Enterprises, as well as newcomers, like International Computer Concepts and Sterling.

Standing out among Nvidia’s 350 channel partners in North America, the winners of the 2024 Nvidia Partner Network Partner of the Year Awards were recognized for selling Nvidia’s products and services at a fast clip and making major investments with the chip designer.

“I would say these partners represent probably the most robust view of accelerated computing in any form it may take in the history that I've been with this company,” Nvidia Americas Channel Chief Craig Weinstein told CRN in an exclusive interview. “And the level of investment taking place is something that I've never seen in my career.”

Nvidia Starts Storage Partner Validation Program For OVX Systems

Nvidia said it started a storage partner validation program that is meant to help businesses pair the right storage solutions with certified OVX servers for AI and graphics-intensive workloads.

The initial members of the validation program include DDN, Dell Technologies, NetApp, Pure Storage and WEKA, whose storage solutions have passed a series of tests measuring “storage performance and input/output scaling across multiple parameters that represent the demanding requirements of enterprise AI workloads,” according to the company.

Available now, these solutions have been validated to work with OVX servers from OEMs like Hewlett Packard Enterprise, Lenovo, Supermicro and Gigabyte in the Nvidia-Certified Systems program.

OVX is Nvidia’s reference architecture for servers powered by Nvidia L40S GPUs, Nvidia AI Enterprise software, Nvidia Quantum-2 InfiniBand or Nvidia Spectrum-X Ethernet and Nvidia BlueField-3 data processing units.

The servers are optimized for training smaller large language models such as Meta’s 70-billion-parameter Llama 2, fine-tuning existing models and high-throughput, low-latency inferencing.

Nvidia Announces Omniverse Cloud APIs For Industrial Digital Twin Apps

Nvidia said it will enable developers of digital twin and simulation applications to take advantage of core technologies within its Omniverse Cloud platform via new APIs.

Short for application programming interfaces, these APIs call specific functions within Omniverse Cloud, Nvidia’s platform-as-a-service for creating and running 3-D applications. At the foundation of Omniverse is OpenUSD, the open 3-D framework created by animation studio Pixar that lets Omniverse connect with other 3-D applications made by other organizations.

Nvidia said it plans to release five Omniverse Cloud APIs later this year for the following:

- USD Render, which enables the rendering of models and scenes based on OpenUSD data with the ray tracing capabilities of Nvidia RTX GPUs.

- USD Write, which “lets users modify and interact with OpenUSD data.”

- USD Query, which “enables scene queries and interactive scenarios.”

- USD Notify, which “tracks USD changes and provides updates.”

- Omniverse Channel, which “connects users, tools and worlds to enable collaboration across scenes.”

Among the early adopters are Siemens, Ansys, Cadence, Dassault Systèmes, Trimble, Hexagon and Rockwell Automation.

Nvidia Intros New Industrial AI Solutions, Including Jetson Computer

Nvidia expanded its portfolio of industrial AI solutions with a general-purpose foundation model for humanoid robot learning and a next-generation edge computer, among other things.

The foundation model is called Project GR00T, and it’s designed to translate text, video and in-person demonstrations into actions performed by a humanoid robot.

To power such robots, Nvidia announced a new Jetson Thor computer that is based on the company’s Thor system-on-chip. The computer features a Blackwell GPU that can provide 800 teraflops of 8-bit floating point AI performance to run multimodal models like GR00T.

The company also announced an expansion of its Isaac software platform for robotics. The new elements included Isaac Lab for enabling reinforcement learning, the OSMO compute orchestration service, Isaac Manipulator for enabling dexterity and modular AI capabilities in robotic arms and Isaac Perceptor for enabling multi-camera, 3-D surround-vision capabilities.

The new Isaac capabilities are expected to be available in the third quarter.

Nvidia Partners Announce Loads Of New Services And Products

Nvidia partners used GTC 2024 to announce a significant number of new services and products that take advantage of the GPU giant’s chips, systems, software and services.

These partners showed new servers, semiconductors, software, middleware and other products that take advantage of the Nvidia ecosystem. Most, if not all, of those new products were aimed at helping businesses take advantage of the AI capabilities of Nvidia’s technologies and the new generative AI possibilities they open up, particularly around its latest GPUs.

The new offerings included Balbix’s BX4 engine for analyzing millions of assets and vulnerability instances as a way to enhance cyber risk management; ClearML’s free open-source tool for optimizing GPU utilization; Kinetica’s enterprise solution for enriching generative AI applications with domain-specific analytical insights; and Pure Storage’s reference architecture for a retrieval-augmented generation pipeline for AI inference.

They also included Edge Impulse’s tools for enabling Nvidia’s TAO models on any edge device; Heavy.AI’s new HeavyIQ large language model capabilities for its GPU-accelerated analytics platform; Phison’s aiDAPTV+ hybrid hardware and software technology for fine-tuning large language models; Vultr Cloud’s serverless inference platform; DDN’s AI400X2 Turbo storage system for AI software stacks; and UneeQ’s proprietary synthetic animation system.

Major OEMs announced new Nvidia-based offerings too. These included Dell Technologies’ PowerEdge XE9680 servers, Lenovo’s 8-GPU ThinkSystem AI servers, Supermicro’s GPU-powered SuperCluster systems, and ASRock Rack’s 6U8X-EGS2 AI training systems.

In addition, cloud service providers revealed new offerings with Nvidia. These included Amazon Web Services’ upcoming EC2 instances powered by Nvidia Grace Blackwell Superchips, Google Cloud’s integration of Nvidia NIM inference microservices into Google Kubernetes Engine, and Microsoft’s integration of Nvidia’s Omniverse Cloud APIs into Azure.

Other Announcements: Omniverse For Apple Vision Pro, Maxine

Nvidia made several other announcements at GTC 2024. They included:

- The ability to access OpenUSD-based enterprise digital twins created in the Nvidia Omniverse platform on the Apple Vision Pro.

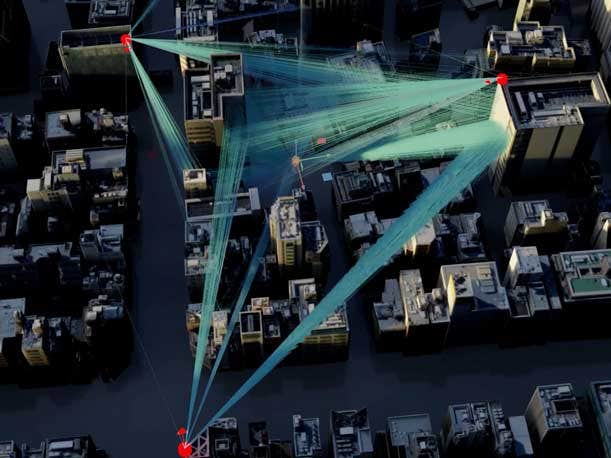

- The launch of the Nvidia 6G Research Cloud platform, which “allows organizations to accelerate the development of 6G technologies that will connect trillions of devices with the cloud infrastructures,” according to the company.

- The addition of 3-D asset generation capabilities to Nvidia’s Edify multimodal architecture for visual generative AI applications.

- New updates and improvements to Nvidia’s Maxine software development kit for GPU-accelerated video conferencing, content creation and streaming features. These updates include studio voice for enabling ordinary microphones and headsets to deliver high-quality audio as well as an improved eye contact model.