5 Big Points About 5th-Gen Intel Xeon CPUs: Claims Against AMD, AI Performance And More

CRN explains five important points about Intel’s new fifth-gen Xeon processors, including their most important specifications and features, how they improve over the previous generation, how they perform against AMD’s latest EPYC CPUs and how they perform in key AI workloads.

Intel said its newly launched fifth-generation Xeon server processors deliver AI acceleration in every core, improve performance per watt and lower total cost of ownership over previous generations – all on top of outperforming AMD’s latest EPYC chips “around the clock.”

These were among the top claims the semiconductor giant made for the latest Xeon chips announced at Intel’s AI Everywhere event last week in New York, where the company also launched its Core Ultra “Meteor Lake” chips for AI-enabled laptops.


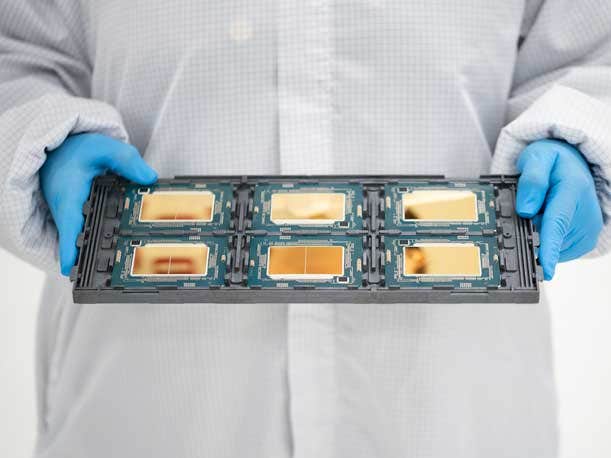

Starting in the first quarter of next year, the new Xeon CPUs are expected to go into a “broad selection” of single- and dual-socket servers from top OEMs such as Cisco Systems, Dell Technologies, Hewlett Packard Enterprise, Lenovo and Supermicro. The chips are also slated to power instances from “major” cloud service providers in 2024.

The new chips share the same server platform as fourth-gen Xeon, which will make it easy for OEMs, cloud service providers and channel partners to update system designs.

The processors feature up to 64 cores and eight channels of DDR5 memory running at up to 5,600 megatransfers per second as well as broad support for a nascent virtualization security capability called Intel Trust Domain Extensions.

The processors also support Type 3 devices in the Compute Express Link (CXL) 1.1 specification, which will enable up to four additional channels of memory.

Intel said the fifth-gen Xeon chips provide “significant performance leaps” over the fourth-generation Xeon processors, which were released at the beginning of this year, across general-purpose, AI, high-performance computing, networking and storage workloads. The chipmaker said it achieved these gains within the same power envelope.

The company also claimed that the processors outperform AMD’s fourth-generation EPYC chips, which launched a little over one year ago, in varying degrees across different workloads, including web applications, data services, storage, HPC and AI inference.

Intel launched the new Xeon processors as the company gets close to the third year of CEO Pat Gelsinger’s tenure and his comeback plan, which seeks to put it at the forefront of advanced chip manufacturing capabilities to fuel super-competitive products by 2025.

What follows are four important points about Intel’s new fifth-generation Xeon processors, including their most important specifications and features, how they improve over the previous generation, how they perform against AMD’s latest EPYC CPUs, how they run key AI workloads, and the full list of SKUs with pricing for customers.

And in a final point, CRN covers the basics around Intel’s new Xeon E-2400 processors for entry-level servers and the Xeon D-1800/2800 processors for low-power networking and edge solutions.

5th-Gen Xeon Scalable Features And Enhancements

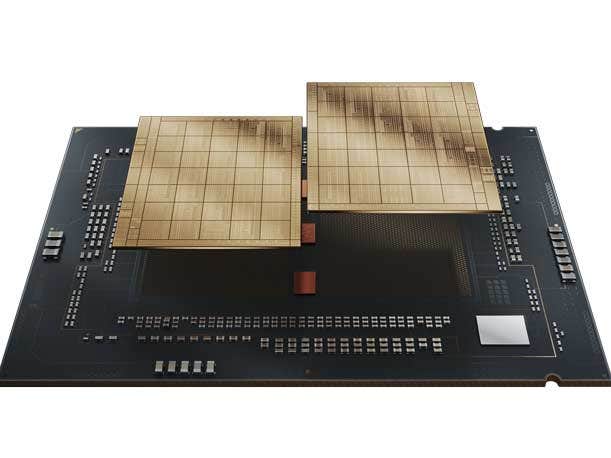

Based on the same Intel 7 manufacturing process node as the fourth-generation Xeon “Sapphire Rapids” chips from earlier this year, the fifth-generation Xeon Scalable “Emerald Rapids” processors benefit from several architectural and platform enhancements.

These enhancements include raising the maximum core count to 64 from 60, boosting memory speed to 5,600 megatransfers per second, nearly tripling last-level cache to as much as 320MB, and pushing Intel Ultra Path Interconnect 2.0 to a maximum of 20 gigatransfers per second, which matters for chip-to-chip communication.
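The memory-speed figures translate into theoretical peak bandwidth with simple arithmetic: channels × transfers per second × bytes per transfer. The Python sketch below assumes a 64-bit (8-byte) data path per DDR5 channel and full bus utilization, so sustained real-world bandwidth will be lower. The DDR5-4800 comparison point reflects the fourth-gen Xeon's top rated memory speed.

```python
def peak_dram_bandwidth_gbs(channels: int, mega_transfers_per_s: int, bytes_per_transfer: int = 8) -> float:
    """Theoretical peak DRAM bandwidth per socket, in decimal GB/s.

    Assumes an 8-byte (64-bit) data path per DDR5 channel and 100 percent
    bus utilization, ignoring ECC bits and protocol overhead.
    """
    bytes_per_second = channels * mega_transfers_per_s * 1_000_000 * bytes_per_transfer
    return bytes_per_second / 1e9


if __name__ == "__main__":
    fifth_gen = peak_dram_bandwidth_gbs(8, 5600)   # eight channels of DDR5-5600
    fourth_gen = peak_dram_bandwidth_gbs(8, 4800)  # eight channels of DDR5-4800
    print(f"8 x DDR5-5600: {fifth_gen:.1f} GB/s per socket")
    print(f"8 x DDR5-4800: {fourth_gen:.1f} GB/s per socket")
    print(f"Uplift: {fifth_gen / fourth_gen - 1:.1%}")
```

On these assumptions, eight DDR5-5600 channels give about 358 GB/s per socket, roughly one-sixth more than eight DDR5-4800 channels.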

The fifth generation has also gained broad support for Intel Trust Domain Extensions, a new confidential computing feature, offered in addition to Intel Software Guard Extensions, that allows Xeon CPUs to run hardware-isolated virtual machines that are kept out of reach of the virtual machine manager, or hypervisor. Also known as TDX, the feature was previously available only in custom variants of fourth-gen Xeon processors for cloud service providers.

In addition, the new processors expand Xeon’s support for Compute Express Link (CXL) capabilities beyond Type 1 and Type 2 devices to Type 3 devices.

While Type 1 support enables memory coherency between the CPU and specialized accelerators that lack local memory, such as SmartNICs, and Type 2 support does the same for general-purpose accelerators with local memory, such as GPUs and FPGAs, Type 3 support lets systems expand memory capacity and bandwidth.

In the case of fifth-gen Xeon, this translates into support for four channels of CXL memory across two Type 3 devices on top of the eight channels of DDR5 enabled by the CPU. These memory types can be split into two tiers to, for instance, increase transactions per second for in-memory databases, or combined into a single tier to expand memory bandwidth or capacity.
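The difference between the two configurations can be sketched in Python. This is a simplified model, not Intel's or any operating system's actual memory-tiering policy: the page IDs, access counts, 4 KiB interleave granularity and strict round-robin channel order are all hypothetical. Only the channel counts (eight DDR5 plus four CXL) come from the article.

```python
from typing import Dict, List, Tuple

DDR5_CHANNELS = 8        # per the article
CXL_CHANNELS = 4         # across two Type 3 devices, per the article
INTERLEAVE_BYTES = 4096  # hypothetical interleave granularity


def tiered_placement(page_heat: Dict[str, int], ddr5_capacity_pages: int) -> Tuple[List[str], List[str]]:
    """Two-tier mode: the hottest pages stay in DDR5, the rest spill to CXL memory.

    page_heat maps a hypothetical page ID to its recent access count; ties are
    broken by page ID so the result is deterministic.
    """
    ranked = sorted(page_heat, key=lambda page: (-page_heat[page], page))
    return ranked[:ddr5_capacity_pages], ranked[ddr5_capacity_pages:]


def interleaved_channel(address: int) -> str:
    """Single-tier mode: consecutive address blocks rotate across all 12 channels."""
    slot = (address // INTERLEAVE_BYTES) % (DDR5_CHANNELS + CXL_CHANNELS)
    if slot < DDR5_CHANNELS:
        return f"DDR5 channel {slot}"
    return f"CXL channel {slot - DDR5_CHANNELS}"


if __name__ == "__main__":
    hot, cold = tiered_placement({"orders": 900, "audit_log": 3, "sessions": 450}, ddr5_capacity_pages=2)
    print("DDR5 tier:", hot, "| CXL tier:", cold)
    for addr in range(0, 13 * INTERLEAVE_BYTES, INTERLEAVE_BYTES):
        print(hex(addr), "->", interleaved_channel(addr))
```

In the tiered model, capacity grows but only hot data gets DDR5 latency; in the interleaved model, sequential traffic spreads across all 12 channels, which is why a single combined tier can raise aggregate bandwidth.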

The fifth generation comes with improved frequencies for Intel Advanced Matrix Extensions, a feature introduced in the previous generation to accelerate AI and HPC workloads.
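AMX is usable only when the operating system supports it, so a quick way to confirm that a Linux server exposes it is to look for the AMX feature flags in /proc/cpuinfo. A minimal sketch, assuming a Linux host: amx_tile, amx_bf16 and amx_int8 are the flag names the Linux kernel reports, while the helper functions are hypothetical.

```python
from pathlib import Path
from typing import Set

# Feature flags the Linux kernel lists in /proc/cpuinfo for AMX support.
AMX_FLAGS = {"amx_tile", "amx_bf16", "amx_int8"}


def cpu_flags(cpuinfo_text: str) -> Set[str]:
    """Return the flags on the first line whose key is exactly 'flags'."""
    for line in cpuinfo_text.splitlines():
        key, _, value = line.partition(":")
        if key.strip() == "flags":
            return set(value.split())
    return set()


def missing_amx_flags(cpuinfo_text: str) -> Set[str]:
    """Return the AMX flags that are absent; an empty set means all are present."""
    return AMX_FLAGS - cpu_flags(cpuinfo_text)


if __name__ == "__main__":
    cpuinfo = Path("/proc/cpuinfo")
    if cpuinfo.exists():
        missing = missing_amx_flags(cpuinfo.read_text())
        print("AMX available" if not missing else f"Missing AMX flags: {sorted(missing)}")
    else:
        print("/proc/cpuinfo not found (not a Linux system?)")
```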

The lineup’s other features include PCIe Gen 5 support as well as several accelerators introduced in the previous generation, such as Intel QuickAssist Technology, Intel In-Memory Analytics Accelerator and Intel Data Streaming Accelerator.

5th-Gen Xeon Scalable Generational Performance Gains

Intel said the fifth-generation Xeon processors provide “significant performance leaps” over the previous generation, according to internal tests by the company.

At a top level, the company reported performance gains of 21 percent on average for general-purpose computing, up to 42 percent for AI inference, up to 40 percent for high-performance computing and up to 70 percent for throughput in networking and storage workloads.

Compared to third-gen Xeon, these gains extended to 84 percent on average for general-purpose computing, up to 14 times for AI inference and training, up to 2.1 times on average for HPC and up to 3.6 times higher throughput for networking and storage.
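Because both general-purpose averages are measured relative to fifth-gen Xeon, dividing one by the other gives a rough estimate of the fourth generation's own gain over the third. This back-of-the-envelope check, with $P_n$ denoting the average general-purpose performance of the nth-generation Xeon, assumes the two averages cover comparable workload mixes, which Intel did not state:

$$
\frac{P_5}{P_3} = 1.84, \qquad \frac{P_5}{P_4} = 1.21
\;\Longrightarrow\;
\frac{P_4}{P_3} = \frac{P_5/P_3}{P_5/P_4} = \frac{1.84}{1.21} \approx 1.52
$$

On that basis, the implied third-gen to fourth-gen uplift is roughly 52 percent.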

Intel provided a breakdown of how fifth-gen Xeon performed against the fourth generation across key workload categories, ranging from web and data services to networking and AI.

For web services, fifth-gen Xeon’s gains ranged from 12 percent for the SVT HEVC video set at 1080p to 33 percent for the social network application test from the DeathStarBench suite of cloud microservices benchmarks.

For data services, these gains went anywhere from 11 percent for the SPDK NVMe storage application test to 24 percent for a MySQL database.

For HPC, the performance improvements came out between 18 percent for the Monte Carlo simulation benchmark and 42 percent for the Ansys LS-DYNA crash simulation.

For networking, these gains ranged from 50 percent for the 5G User Plane Function application to 69 percent for the Vector Packet Processing IPSec application.

Within the AI space, Intel showed a wide range of double-digit performance improvements for fifth-gen Xeon when it comes to training and inference with a variety of model types.

For training, the company showed performance improvements of 10 percent for the DLRM recommender model, 17 percent for the SSD-ResNet34 object detection model, 25 percent for the BERT-Large natural language processing model, and 29 percent each for the RNN-T speech recognition model and the MaskRCNN instance segmentation model.

For real-time inference, Intel said the fifth-gen Xeon chips were 19 percent faster for the DistilBERT lightweight natural language processing model, 24 percent faster for RNN-T and the ResNeXt101 32x16d image classification model, 40 percent faster for SSD-ResNet34 and 42 percent faster for MaskRCNN.

For batch inference jobs, the processors showed performance gains of 24 percent for DLRM, 26 percent for MaskRCNN, 36 percent for ResNeXt101 and DistilBERT, 41 percent for SSD-ResNet34 and 44 percent for RNN-T.

Intel said the CPUs are also adept at running AI inference workloads at the edge, where there are power and footprint constraints. Compared to third-gen Xeon, the new generation was 2.5 times faster for the BERT-base natural language processing model, 2.8 times faster for ResNet-50, 3.7 times faster for the 3D-Unet semantic segmentation model, nearly 4 times faster for the human-pose-estimation task, and almost 5.3 times faster for SSD-ResNet34.

Some of these performance gains and the resulting efficiency improvements are made possible by accelerator engines and instruction-set extensions within fifth-gen Xeon chips, such as Advanced Matrix Extensions, AVX-512 and the Data Streaming Accelerator (DSA). Intel said these give the new chips performance-per-watt improvements ranging from 51 percent for ClickHouse, a column-oriented database management system, to nearly 10-fold for ResNet-50.

5th-Gen Intel Xeon Competitive Claims Against AMD EPYC

Intel said the fifth-generation Xeon processors “outperform” AMD’s fourth-gen EPYC chips “around the clock” when it comes to a variety of workloads.

These include common web services workloads, such as server-side Java and NGINX TLS, as well as data services workloads, such as the RocksDB database engine and MySQL running the HammerDB benchmark. The mainstream workloads also included storage and HPC applications.

To demonstrate its edge over the competition, Intel compared the top chip from its fifth-gen Xeon lineup, the 64-core Xeon Platinum 8592+, with the 64-core EPYC 9554, both in dual-socket configurations. The EPYC 9554 has the fifth-highest core count in AMD’s fourth-gen server CPU lineup, below the 84-, 96-, 112- and 128-core models.

Across mainstream workloads, Intel said its top server CPU has the following performance and efficiency advantages over its rival:

- Web services
  - 6 percent faster performance and 8 percent higher performance-per-watt for server-side Java under a service-level agreement.
  - 66 percent faster performance and 41 percent higher performance-per-watt for NGINX with TLS encryption.

- Data services
  - 15 percent faster performance and 11 percent higher performance-per-watt for the ClickHouse column-oriented database management system.
  - 62 percent faster performance and 72 percent higher performance-per-watt for the RocksDB log-structured database engine.
  - 72 percent faster performance and 43 percent higher performance-per-watt for a MySQL database running the HammerDB benchmark.
  - 93 percent faster performance and 64 percent higher performance-per-watt for a Microsoft SQL Server 2022 database running the HammerDB benchmark.

- Storage
  - 2.5 times faster performance and 92 percent higher performance-per-watt for a Storage Performance Development Kit test with large media files.
  - 2.17 times faster performance and 75 percent higher performance-per-watt for a Storage Performance Development Kit test with database requests.

- HPC
  - 13 percent faster performance and 2 percent higher performance-per-watt for the Linpack linear algebra benchmark.
  - 36 percent faster performance and 9 percent higher performance-per-watt for the NAMD (Nanoscale Molecular Dynamics) software.
  - 48 percent faster performance and 15 percent higher performance-per-watt for the LAMMPS molecular dynamics simulation software.
  - 55 percent faster performance and 20 percent higher performance-per-watt for the Black-Scholes pricing model for financial services.
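Because performance-per-watt is performance divided by power, each pair of figures above also implies how much power the Intel system drew relative to the AMD one. A minimal sketch of that arithmetic, assuming both ratios come from the same test runs; the helper name is hypothetical and the inputs are Intel's claimed figures.

```python
# Intel's claimed speedup and performance-per-watt gain versus the EPYC 9554,
# expressed as ratios (1.66 means 66 percent higher).
CLAIMS = {
    "NGINX TLS": (1.66, 1.41),
    "RocksDB": (1.62, 1.72),
    "Linpack": (1.13, 1.02),
    "Black-Scholes": (1.55, 1.20),
}


def implied_power_ratio(speedup: float, perf_per_watt_gain: float) -> float:
    """Return power(Intel) / power(AMD).

    If perf_i / perf_a = s and (perf_i / watts_i) / (perf_a / watts_a) = g,
    then watts_i / watts_a = s / g.
    """
    return speedup / perf_per_watt_gain


if __name__ == "__main__":
    for workload, (speedup, ppw_gain) in CLAIMS.items():
        ratio = implied_power_ratio(speedup, ppw_gain)
        print(f"{workload:14s} implied power draw: {ratio - 1:+.0%} vs. EPYC 9554")
```

On these figures, the Xeon system would draw roughly 18 percent more power than the EPYC system for NGINX TLS but about 6 percent less for RocksDB; in both cases performance rises faster than power, which is what lifts performance-per-watt.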

For virtualized environments, Intel said its flagship Xeon CPU delivers “consistent and leadership” performance, running 15 percent faster for a Redis database, 40 percent faster for Linpack, 99 percent faster for the STREAM memory bandwidth benchmark and 2.3 times faster for the ResNet-50 image classification model—all using 16 virtual CPUs.

Intel also claimed that its 64-core Xeon processor can outperform AMD’s 96-core EPYC 9654 in various deep learning inference workloads, running 2.44 times faster for ResNet-50 in real time, 80 percent faster for BERT-Large in real time, 2.6 times faster for DistilBERT and 2.34 times faster for the DLRM recommender system in batch mode. The company said its 64-core server chip is faster than AMD’s 128-core EPYC 9754 across these workloads as well.

For AI inference at the edge, Intel said its fourth-gen Xeon 6448Y and fifth-gen Xeon 6548Y+ can both outmatch AMD’s fourth-gen, 32-core EPYC 9334 in performance and efficiency.

For real-time ResNet-50, these chips can run 2.89 and 3.13 times faster and provide 2.26 and 2.28 times higher performance-per-watt, respectively, according to the company. For batched ResNet-50, they can run 3.07 and 3.40 times faster and provide 2.71 times higher performance-per-watt, the chipmaker added.

Intel said its latest Xeon server chips also provide substantial cost savings and sustainability benefits against the fourth-gen AMD EPYC processors.

The company based this claim on comparisons between server farms built on Intel Xeon Platinum 8592+ CPUs and on AMD EPYC 9554 CPUs that meet the same performance requirements across five representative workloads: NGINX TLS for web services, RocksDB and MySQL for data services, Monte Carlo for HPC and DistilBERT for AI.

According to Intel, where a given level of performance would take 50 AMD servers for each workload, NGINX TLS would require only 31 Intel servers, saving 489.7 megawatt hours of energy, cutting CO2 emissions by nearly 208,000 kilograms and reducing total cost of ownership by $444,000 over four years.

For RocksDB, Intel said it would need only 31 Xeon-based servers versus AMD’s 50 EPYC-based servers, yielding more than double the energy and CO2 reductions of the NGINX TLS comparison and slightly higher total cost of ownership savings.

For MySQL, Intel said a data center would require 30 Xeon-based servers versus 50 EPYC-based servers, leading to 684 megawatt hours saved, nearly 290,000 kilograms of CO2 emissions reduced and $509,000 saved in the server farm’s total cost of ownership.

For Monte Carlo, a data center could replace 50 EPYC-based servers with 28 Xeon-based servers to reduce energy consumption by 585.8 megawatt hours, CO2 emissions by more than 248,000 kilograms and total cost of ownership by $561,000, according to Intel.

When it came to running inference on the DistilBERT model, Intel reported the most significant reduction in hardware requirements and corresponding costs. By requiring only 15 Xeon-based servers versus 50 EPYC-based servers for the same level of performance, Intel said the smaller fleet would save 1,496 megawatt hours of energy, more than 634,000 kilograms of CO2 emissions and $1.3 million in total cost of ownership, a 62 percent reduction.
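Intel's consolidation figures can be cross-checked with simple division. The sketch below derives the share of servers eliminated, the emissions factor implied by each CO2-to-energy ratio, and the baseline cost implied by the DistilBERT percentage. It uses only the numbers Intel reported; the helper names are hypothetical, and because the CO2 figures are rounded, the derived factors are approximate.

```python
# Intel-reported figures: (Xeon servers, energy saved in MWh, CO2 avoided in kg).
# CO2 values are the rounded "nearly"/"more than" figures, and every scenario
# is compared against a 50-server EPYC 9554 baseline.
EPYC_SERVERS = 50
SCENARIOS = {
    "NGINX TLS": (31, 489.7, 208_000),
    "MySQL": (30, 684.0, 290_000),
    "Monte Carlo": (28, 585.8, 248_000),
    "DistilBERT": (15, 1496.0, 634_000),
}


def server_reduction(xeon_servers: int, baseline: int = EPYC_SERVERS) -> float:
    """Fraction of the baseline fleet that is no longer needed."""
    return 1 - xeon_servers / baseline


def implied_kg_co2_per_mwh(mwh_saved: float, kg_co2_saved: float) -> float:
    """Emissions factor implied by dividing avoided CO2 by avoided energy."""
    return kg_co2_saved / mwh_saved


if __name__ == "__main__":
    for name, (servers, mwh, kg) in SCENARIOS.items():
        print(f"{name:12s} {server_reduction(servers):.0%} fewer servers, "
              f"{implied_kg_co2_per_mwh(mwh, kg):.0f} kg CO2 per MWh")
    # A $1.3 million saving described as a 62 percent reduction implies
    # a four-year baseline TCO of roughly $1.3M / 0.62.
    print(f"Implied EPYC baseline TCO for DistilBERT: ${1.3e6 / 0.62:,.0f}")
```

Every scenario works out to roughly 424 kilograms of CO2 per megawatt-hour, which suggests a single emissions factor was applied across workloads, and the DistilBERT savings imply an EPYC-based baseline of about $2.1 million over four years.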

5th-Gen Intel Xeon Scalable CPU SKU List

For the lineup, Intel has split the processors into several categories based on performance, power and workload requirements: dual-socket performance general purpose, dual-socket mainline general-purpose, liquid-cooled general purpose, single-socket general purpose, 5G/networking-optimized, cloud-optimized for infrastructure- and software-as-a-service, storage- and hyperconverged infrastructure-optimized, and long-life use for IoT.

Dual-Socket Performance General Purpose

- Platinum 8592+: 64 cores; 1.9GHz base clock; 2.9GHz all-core turbo clock; 3.9GHz max turbo clock; 320MB cache; 350W TDP; 5600 DDR5 memory speed; 512GB SGX enclave capacity; $11,600 customer pricing.

- Platinum 8580: 60 cores; 2.0GHz base clock; 2.9GHz all-core turbo clock; 4.0GHz max turbo clock; 300MB cache; 350W TDP; 5600 DDR5 memory speed; 512GB SGX enclave capacity; $10,710 customer pricing.

- Platinum 8570: 56 cores; 2.1GHz base clock; 3.0GHz all-core turbo clock; 4.0GHz max turbo clock; 300MB cache; 350W TDP; 5600 DDR5 memory speed; 512GB SGX enclave capacity; $9,595 customer pricing.

- Platinum 8568Y+: 48 cores; 2.3GHz base clock; 3.2GHz all-core turbo clock; 4.0GHz max turbo clock; 300MB cache; 350W TDP; 5600 DDR5 memory speed; 512GB SGX enclave capacity; $6,497 customer pricing.

- Platinum 8562Y+: 32 cores; 2.8GHz base clock; 3.8GHz all-core turbo clock; 4.1GHz max turbo clock; 60MB cache; 300W TDP; 5600 DDR5 memory speed; 512GB SGX enclave capacity; $5,945 customer pricing.

- Gold 6548Y+: 32 cores; 2.5GHz base clock; 3.5GHz all-core turbo clock; 4.1GHz max turbo clock; 60MB cache; 250W TDP; 5200 DDR5 memory speed; 128GB SGX enclave capacity; long-life availability; $3,726 customer pricing.

- Gold 6542Y: 24 cores; 2.9GHz base clock; 3.6GHz all-core turbo clock; 4.1GHz max turbo clock; 60MB cache; 250W TDP; 5200 DDR5 memory speed; 128GB SGX enclave capacity; $2,878 customer pricing.

- Gold 6544Y: 16 cores; 3.6GHz base clock; 4.1GHz all-core turbo clock; 4.1GHz max turbo clock; 45MB cache; 270W TDP; 5200 DDR5 memory speed; 128GB SGX enclave capacity; $3,622 customer pricing.

- Gold 6526Y: 16 cores; 2.8GHz base clock; 3.5GHz all-core turbo clock; 3.9GHz max turbo clock; 37.5MB cache; 195W TDP; 5200 DDR5 memory speed; 128GB SGX enclave capacity; long-life availability; $1,517 customer pricing.

- Gold 6534: 8 cores; 3.9GHz base clock; 4.2GHz all-core turbo clock; 4.2GHz max turbo clock; 22.5MB cache; 195W TDP; 4800 DDR5 memory speed; 128GB SGX enclave capacity; $2,816 customer pricing.

- Gold 5515+: 8 cores; 3.2GHz base clock; 3.6GHz all-core turbo clock; 4.1GHz max turbo clock; 22.5MB cache; 165W TDP; 4800 DDR5 memory speed; 128GB SGX enclave capacity; long-life availability; $1,099 customer pricing.

Dual-Socket Mainline General Purpose

- Platinum 8558: 48 cores; 2.1GHz base clock; 3.0GHz all-core turbo clock; 4.0GHz max turbo clock; 260MB cache; 330W TDP; 5200 DDR5 memory speed; 512GB SGX enclave capacity; $4,650 customer pricing.

- Gold 6538Y+: 32 cores; 2.2GHz base clock; 3.3GHz all-core turbo clock; 4.0GHz max turbo clock; 60MB cache; 225W TDP; 5200 DDR5 memory speed; 128GB SGX enclave capacity; $3,141 customer pricing.

- Gold 6530: 32 cores; 2.1GHz base clock; 2.7GHz all-core turbo clock; 4.0GHz max turbo clock; 160MB cache; 270W TDP; 4800 DDR5 memory speed; 128GB SGX enclave capacity; long-life availability; $2,128 customer pricing.

- Gold 5520+: 28 cores; 2.2GHz base clock; 3.0GHz all-core turbo clock; 4.0GHz max turbo clock; 52.5MB cache; 205W TDP; 4800 DDR5 memory speed; 128GB SGX enclave capacity; long-life availability; $1,640 customer pricing.

- Silver 4516+: 24 cores; 2.2GHz base clock; 2.9GHz all-core turbo clock; 3.7GHz max turbo clock; 45MB cache; 185W TDP; 4400 DDR5 memory speed; 64GB SGX enclave capacity; long-life availability; $1,295 customer pricing.

- Silver 4514Y: 16 cores; 2.0GHz base clock; 2.6GHz all-core turbo clock; 3.4GHz max turbo clock; 30MB cache; 150W TDP; 4400 DDR5 memory speed; 64GB SGX enclave capacity; long-life availability; $780 customer pricing.

- Silver 4510: 12 cores; 2.4GHz base clock; 3.3GHz all-core turbo clock; 4.1GHz max turbo clock; 30MB cache; 150W TDP; 4400 DDR5 memory speed; 64GB SGX enclave capacity; long-life availability; $563 customer pricing.

- Silver 4509Y: 8 cores; 2.6GHz base clock; 3.6GHz all-core turbo clock; 4.1GHz max turbo clock; 22.5MB cache; 150W TDP; 4400 DDR5 memory speed; 64GB SGX enclave capacity; long-life availability; $563 customer pricing.

Liquid-Cooled General Purpose

- Platinum 8593Q: 64 cores; 2.2GHz base clock; 3.0GHz all-core turbo clock; 3.9GHz max turbo clock; 320MB cache; 385W TDP; 5600 DDR5 memory speed; 512GB SGX enclave capacity; 2S; $12,400 customer pricing.

- Gold 6558Q: 32 cores; 3.2GHz base clock; 4.1GHz all-core turbo clock; 4.1GHz max turbo clock; 60MB cache; 350W TDP; 5200 DDR5 memory speed; 128GB SGX enclave capacity; 2S; $6,416 customer pricing.

Single-Socket General Purpose

- Platinum 8558U: 48 cores; 2.0GHz base clock; 2.9GHz all-core turbo clock; 4.0GHz max turbo clock; 260MB cache; 300W TDP; 4800 DDR5 memory speed; 512GB SGX enclave capacity; $3,720 customer pricing.

- Gold 5512U: 28 cores; 2.1GHz base clock; 3.0GHz all-core turbo clock; 3.7GHz max turbo clock; 52.5MB cache; 185W TDP; 4800 DDR5 memory speed; 128GB SGX enclave capacity; $1,230 customer pricing.

- Bronze 3508U: 8 cores; 2.1GHz base clock; 2.2GHz all-core turbo clock; 2.2GHz max turbo clock; 22.5MB cache; 125W TDP; 4400 DDR5 memory speed; 64GB SGX enclave capacity; long-life availability; $415 customer pricing.

5G/Networking-Optimized

- Platinum 8571N: 52 cores; 2.4GHz base clock; 3.0GHz all-core turbo clock; 4.0GHz max turbo clock; 300MB cache; 300W TDP; 4800 DDR5 memory speed; 512GB SGX enclave capacity; 1S; long-life availability; $6,839 customer pricing.

- Gold 6548N: 32 cores; 2.8GHz base clock; 3.5GHz all-core turbo clock; 4.1GHz max turbo clock; 60MB cache; 250W TDP; 5200 DDR5 memory speed; 128GB SGX enclave capacity; 2S; long-life availability; $3,875 customer pricing.

- Gold 6538N: 32 cores; 2.1GHz base clock; 2.9GHz all-core turbo clock; 4.1GHz max turbo clock; 60MB cache; 205W TDP; 5200 DDR5 memory speed; 128GB SGX enclave capacity; 2S; long-life availability; $3,351 customer pricing.

Cloud-Optimized For IaaS/SaaS

- Platinum 8592V: 64 cores; 2.0GHz base clock; 2.9GHz all-core turbo clock; 3.9GHz max turbo clock; 320MB cache; 330W TDP; 4800 DDR5 memory speed; 512GB SGX enclave capacity; 2S; $10,995 customer pricing.

- Platinum 8558P: 48 cores; 2.7GHz base clock; 3.2GHz all-core turbo clock; 4.0GHz max turbo clock; 260MB cache; 350W TDP; 5600 DDR5 memory speed; 512GB SGX enclave capacity; 2S; $6,759 customer pricing.

- Platinum 8581V: 60 cores; 2.0GHz base clock; 2.6GHz all-core turbo clock; 3.9GHz max turbo clock; 300MB cache; 270W TDP; 4800 DDR5 memory speed; 512GB SGX enclave capacity; 1S; $7,568 customer pricing.

Storage & Hyperconverged Infrastructure Optimized

- Gold 6554S: 36 cores; 2.2GHz base clock; 3.0GHz all-core turbo clock; 4.0GHz max turbo clock; 180MB cache; 270W TDP; 5200 DDR5 memory speed; 128GB SGX enclave capacity; 2S; $3,157 customer pricing.

Long-Life Use For General-Purpose IoT

- Silver 4510T: 12 cores; 2.0GHz base clock; 2.8GHz all-core turbo clock; 3.7GHz max turbo clock; 30MB cache; 115W TDP; 4400 DDR5 memory speed; 64GB SGX enclave capacity; 2S; long-life availability; $624 customer pricing.
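Because every SKU entry above follows the same semicolon-delimited pattern, the list is easy to turn into structured data for comparisons such as price per core. The sketch below is a hypothetical helper written against the text format of this list (not an Intel data feed), and it extracts only the core count, TDP and customer price.

```python
import re
from dataclasses import dataclass


@dataclass
class Sku:
    name: str
    cores: int
    tdp_watts: int
    price_usd: int

    @property
    def dollars_per_core(self) -> float:
        return self.price_usd / self.cores


# Matches entries like "- Name: N cores; ...; NNNW TDP; ...; $X,XXX customer pricing."
_SKU_RE = re.compile(
    r"^-?\s*(?P<name>[^:]+):\s*(?P<cores>\d+) cores;.*?(?P<tdp>\d+)W TDP;.*\$(?P<price>[\d,]+) customer pricing"
)


def parse_sku(line: str) -> Sku:
    """Parse one SKU entry from the list format used in this article."""
    match = _SKU_RE.search(line)
    if match is None:
        raise ValueError(f"unrecognized SKU line: {line!r}")
    return Sku(
        name=match["name"].strip(),
        cores=int(match["cores"]),
        tdp_watts=int(match["tdp"]),
        price_usd=int(match["price"].replace(",", "")),
    )


SAMPLE = """\
- Platinum 8592+: 64 cores; 1.9GHz base clock; 2.9GHz all-core turbo clock; 3.9GHz max turbo clock; 320MB cache; 350W TDP; 5600 DDR5 memory speed; 512GB SGX enclave capacity; $11,600 customer pricing.
- Gold 6548Y+: 32 cores; 2.5GHz base clock; 3.5GHz all-core turbo clock; 4.1GHz max turbo clock; 60MB cache; 250W TDP; 5200 DDR5 memory speed; 128GB SGX enclave capacity; long-life availability; $3,726 customer pricing.
- Silver 4510: 12 cores; 2.4GHz base clock; 3.3GHz all-core turbo clock; 4.1GHz max turbo clock; 30MB cache; 150W TDP; 4400 DDR5 memory speed; 64GB SGX enclave capacity; long-life availability; $563 customer pricing.
"""

if __name__ == "__main__":
    skus = sorted((parse_sku(line) for line in SAMPLE.splitlines()), key=lambda s: s.dollars_per_core)
    for sku in skus:
        print(f"{sku.name:15s} {sku.cores:3d} cores  {sku.tdp_watts}W  ${sku.dollars_per_core:,.2f} per core")
```

Sorting the parsed entries by dollars_per_core, for instance, puts the 12-core Silver 4510 at about $47 per core versus $181.25 per core for the 64-core Platinum 8592+.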

Intel Xeon E-2400 CPUs For Entry-Level Servers And More

In addition to launching fifth-generation Xeon Scalable processors for mainstream servers, the company also announced the launch of the Xeon E-2400 series for entry-level servers as well as the Xeon D-1800/2800 series for low-power networking and edge solutions.

The Xeon E-2400 series is based on the new LGA 1700 socket and uses the same Raptor Cove core as Intel’s 13th- and 14th-gen Core processors for PCs.

Designed for single-socket servers, the Xeon E-2400 series features up to eight cores, two DDR5 memory channels running at up to 4,800 megatransfers per second, and 16 lanes of PCIe 5.0 plus four lanes of PCIe 4.0.

Intel said the architectural improvements result in 30 percent faster general performance, 26 percent higher memory bandwidth and 28 percent faster performance for the WordPress HTTPS TLS 1.3 web service compared to the last-generation Xeon E-2300 series.

For low-power networking and edge solutions, the company’s new Xeon D-1800/2800 series brings the maximum core count up to 22 from the previous generation’s 20-core limit. This also means the chipmaker is delivering higher core counts for thermal envelopes that are well-suited for power- and space-constrained networking and edge servers.

Compared to the previous Xeon D-1700/2700 series, the new chips deliver 15 percent faster performance for web services and 12 percent faster performance for security workloads.