Run:AI Raises $30M To Split Up GPUs Into AI, HPC Containers

The Tel Aviv-based startup offers a virtualization platform that allows organizations to split up Nvidia GPUs into smaller, virtual GPU instances so that multiple workloads can run on the same GPU, speeding up the pace of GPU-based projects while also increasing the utilization of existing infrastructure.

A virtualization software startup that helps organizations split up GPUs into smaller instances to save money and maximize utilization on AI infrastructure has raised a new round of funding.

Run:AI, a startup based in Tel Aviv, Israel, with offices in New York and Boston, announced on Tuesday that it has raised a $30 million Series B funding round led by private equity firm Insight Partners, two years after the company started. Other investors included TLV Partners and S-Capital.

[Related: AI Chip Startup Tenstorrent Hires Chip Design Legend Jim Keller]

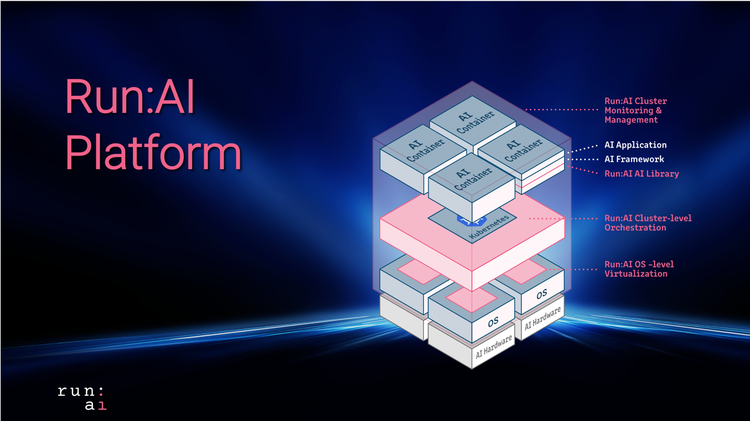

The startup offers an orchestration and virtualization software platform that allows organizations to split up Nvidia GPUs into smaller, virtual GPU instances so that multiple workloads can run on the same GPU, whether they are for AI, high-performance computing or data analytics. The virtual GPUs run as Kubernetes containers on bare metal servers, so there’s no need for a hypervisor, and they can be spun up in seconds, with no reboots or action from an administrator needed.

Omri Geller, CEO and co-founder of Run:AI, said this container-based approach to GPU virtualization comes with two major benefits for organizations running GPU servers: speeding up the pace of GPU-based projects and increasing the utilization of existing infrastructure, which, in turn, can translate to cost savings from not having to buy more GPU servers.

“We’ve seen one of our customers run 7,000 jobs. Each of the jobs took 20 percent of the GPU, which was really cool because he could reach a result five times faster and the IT organization got 500 percent more utilization out of their like infrastructure, which is insane for both sides,” he told CRN.

To help organizations understand how they can make the most out of their existing GPU infrastructure, Run:AI has developed a return-on-investment calculator that can show how much they can save and optimize by using the startup’s platform. On average, the startup said that its platform has increased GPU utilization for customers by 25-75 percent.

“When we start to work with customers, the first thing that we do is, we provide them with visibility into their current consumption of resources, and they quickly see that they can do much better than without procuring more hardware,” Geller said. “They can do much better, so they get a very significant improvement in productivity without procuring more.”

Run:AI’s Kubernetes-based platform differs from Nvidia’s Virtual Computer Server software in a few significant ways: it doesn’t require a hypervisor, which translates into better performance; it runs on Kubernetes, meaning virtual GPUs can be spun up like any other kind of container; and the virtual GPU instances can be spun up on the fly, increasing flexibility for researchers.

“We basically have a simple rule: Everything that runs on Kubernetes runs on Run:AI, because the way that we built our solution is that we‘re integrated into Kubernetes deployments,” Geller said. “So any application that runs on top of Kubernetes actually by default runs on top of Run:AI, so they don’t need to change anything.”

While there are technically no limits on how many virtual GPUs researchers can create from a single GPU, according to Geller, Run:AI recommends its customers deploy no more 15-20 virtual GPU containers due to compute and memory restraints. However, as new, more powerful GPUs come out over time, Geller said, it will become more feasible split up the GPU even more for average workloads.

“We are a software-only solution, so it‘s really up to the customer and their use case on how much they can fractionalize,” he said.

Run:AI can even take advantage of the multi-instance GPU capabilities of Nvidia’s A100, which can be divided into as many as seven GPU instances from a hardware level.

“With Run:AI, we actually have a complementary solution that allows you to take each of the pieces and extra fractionalize it,” Geller said.

With the new funding round, Run:AI plans to double its current team of 30 people across research and development and sales and marketing roles. At the same time, the company will expand its overall go-to-market efforts, which will include more outreach with OEM and channel partners.

“When it comes to channel, this is very efficient for Run:AI because [partners] that are selling Nvidia GPUs are can sell Run:AI software on top of that, and that‘s a complementary solution,” Geller said.

He added Run:AI already works with Hewlett Packard Enterprise and Scan Computers, an IT reseller based in the United Kingdom. The company is also a VMware Technology Alliance Partner and a member of the Nvidia Inception Program.

While Run:AI’s platform only currently supports Nvidia GPUs, Geller said, the startup plans to support other kinds of acceleration hardware in the future.

“From a technical aspect the way that we do the fractionalization, it will be able to work for other accelerators as well, and we‘ll expand there as the market grows and adopts new accelerators,” he said.

Thomas Sohmers, principal hardware architect at Lambda Labs, a San Francisco-based provider of GPU servers, workstations and laptops for deep learning applications, said Run:AI’s platform would be well suited for academic and industry research efforts, for cases where smaller AI models are being used and for scenarios where research environments are constrained by power and costs.

“There are plenty of times where you would be able to get better utilization of your individual researcher resources with things like that,” he said.

But Sohmers said he the larger trend in AI are models that are growing exponentially larger and require compute resources of multiple GPUs rather than fractions of a single one.

“In that case, it‘s sort of the opposite, where you’re really needing more than one GPU, and you’re needing to orchestrate which parts of your actual weights [of the model] and which parts of your [neural] network actually get allocated to different GPUs, either in one single box, or when you’re trying to distribute over multiple separate systems, all with multiple GPUs,” he said.