Pure Storage CEO On Why There Won’t Be Any Hard Disks Within Five Years

‘Up until now, storage systems have been provided for individual use cases, and competitors have generally had four, five, six, or even more unique systems, different software environments, different maintenance environments, and different API’s for different use cases. Today, we can now provide flash storage for all storage needs. We don’t think there’ll be any hard disk sold in five years,’ says Pure Storage CEO Charles Giancarlo.

A Bold Hard Disk Prediction

Pure Storage has had quite a trip in the 14 years since it was formed with an eye on developing what was then a new up-and-coming technology, flash storage. Long-established storage vendors were already experimenting with using SSDs to add flash storage to their disk-based systems or develop all-flash storage, but Santa Clara, Calif.-based Pure Storage was determined to focus exclusively on flash technology.

That bet paid off. Pure Storage is now the storage industry’s second-largest independent vendor with annual revenue approaching $3 billion. It has never taken its eye off the all-flash side of the storage market, focusing on bringing flash to every part of the business, including the entry-level data protection layer.

So proclaiming the imminent demise of hard disk-based storage might seem a bit self-serving coming from Pure Storage CEO Charles Giancarlo.


It’s possible.

But Giancarlo backs up his assertion by noting that hard drive sales are already falling, and that flash-based storage is becoming price-competitive per gigabyte with spinning disk-based storage at the system level.

“Until now, flash was only price competitive at a system level for tiers zero and one. We’re now penetrating tier-two and bulk data,” he told CRN. “Today we’ve pierced the 20-cents-per-gigabyte level. By next year, we’ll pierce 15 cents per gigabyte. The year after that, we’ll pierce 10 cents per gigabyte.”

The result, Giancarlo said, is that NAND-based flash storage is now competitive price-wise with spinning disk-based storage.

“If we look at NAND versus HDD, you can see the downward trend of HDD over the last decade,” he said. “During that period of time, HDD went from being on every type of computer from laptops to desktops to servers to now only on secondary and tertiary enterprise mass storage tiers and in hyperscalers.”

Giancarlo’s assertions are backed up by trends from the hard drive manufacturers themselves.

Seagate, for instance, in July reported that in its fiscal fourth quarter 2023 its hard drive shipments dropped 41 percent in terms of total capacity, and fell 18 percent in terms of average capacity per drive.

Rival Western Digital in July reported fiscal fourth quarter 2023 hard drive shipments of 11.8 million units, down year-over-year from 16.5 million units. Western Digital also reported total hard drive-based capacity fell 18 percent while total flash capacity rose 15 percent year over year.
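As a rough check on the Western Digital unit figures above, the year-over-year decline works out to a little over 28 percent. The short Python sketch below is illustrative only; the helper name `pct_change` is ours and does not come from either vendor's report.

```python
def pct_change(old, new):
    """Percentage change from old to new (negative means a decline)."""
    return (new - old) / old * 100.0

# Western Digital hard drive unit shipments, in millions (figures from the article).
wd_units_prior_year = 16.5
wd_units_latest = 11.8

wd_units_change = pct_change(wd_units_prior_year, wd_units_latest)
print(f"Western Digital unit shipments: {wd_units_change:.1f}% year over year")
```

Note that this is a decline in units shipped, which is a different measure from the 18 percent drop in total capacity shipped.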

CRN once predicted tongue-in-cheek that the last hard drive sold would wind up in the Smithsonian Museum in the year 2050, give or take 20 years. But if Giancarlo is correct, that could happen much sooner.

Here is how Giancarlo arrives at his prediction that the hard drive will be gone in five years.

What’s your latest definition of Pure Storage?

We are quite unique in what is a very old technology business, storage, where we provide a single operating environment: all-flash for all storage needs. Up until now, storage systems have been provided for individual use cases, and competitors have generally had four, five, six, or even more unique systems, different software environments, different maintenance environments, and different API’s for different use cases. Today, we can now provide flash storage for all storage needs. We don’t think there’ll be any hard disk sold in five years.

And secondly, we do this with the most performance, AI-oriented, hundreds of gigabytes of data moved per second, all the way down to the lowest price-per-byte, with the same architecture, the same software, the same management system, and the same DirectFlash modules that we use on only two hardware architectures that scale up and scale down. And we can do it for both traditional workloads and so-called cloud-native Kubernetes and container workloads with our Portworx software. Customers generally think of storage as a box of tools for the job where each tool was completely different than the other. [But] we can consolidate, we can standardize, we can harmonize these different environments. And that’s unique in the storage market.

Assuming what you’re saying is true and that this is unique, why would it be unique? Why wouldn’t other storage vendors want to do it?

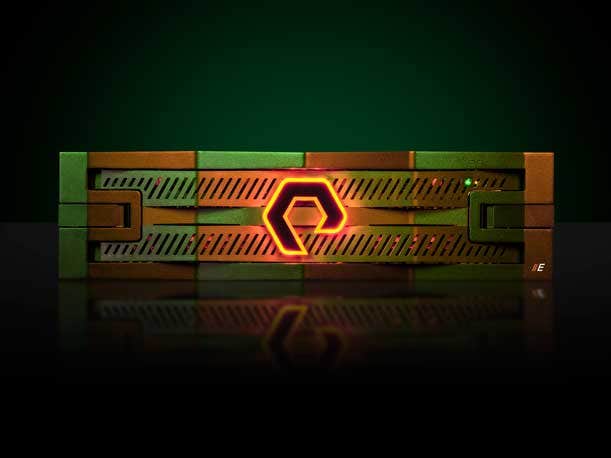

I don’t think it could have been done, first of all, without flash. And I don’t think it can be done with a mix of flash and hard disks. Hard disks have such limited characteristics, that in order to build high performance systems or low cost systems, they have to be used in very different ways. And so I don’t think it was possible in the past. We’re unique in that we started out as all-flash. We never ventured into disk. Our system can’t support disk. Our coming competitors, by contrast, started out in disk. And in order to get to all-flash, they use SSDs which make flash look like disk. So they still only manage disks, effectively. And because of that, they’re limited by all of the tuning and the jump ropes that you have to go through to manage disk-based storage. We’re managing a pure semiconductor. Our software is more efficient, more reliable. And we can focus on harmonizing all these different needs by using that semiconductor with a single system. Our operating system, Purity, does file, block, and object, so we don’t have separate code bases. Second, it manages everything from our new FlashArray and FlashBlade//E environment, which features low price per gigabyte, all the way up to our FlashBlade//S, which is supporting Meta in the most advanced AI supercomputer in the world. The same software across that entire set of environments.

If you think about the customer environment, you don’t mix and match network environments. It is a lot easier to manage with one software environment. We believe that same thing will happen in storage as well. One management environment, one set of API’s, to manage all of your storage needs.

On Pure Storage’s last financial conference call, and also at the Pure//Accelerate 2023 conference, you claimed that businesses will no longer be buying hard disks in five years. That’s a pretty bold statement. Why can you say that?

If we look at NAND versus HDD, you can see the downward trend of HDD over the last decade. During that period of time, HDD went from being on every type of computer from laptops to desktops to servers to now only on secondary and tertiary enterprise mass storage tiers and in hyperscalers. Those are the only two places where hard disks are. Go back to 2007, and they were on consumer devices. Your iPod and your DVR had a hard disk. So they’ve already been supplanted in many different environments. And what we’re saying is that they’re going to be supplanted in enterprise, and also on hyperscalers.

[Pricing-wise,] at a system level—I want to be clear this is at the system level—if you’re providing storage, you just can’t put a hard disk in thin air. You have controllers, chassis, power supplies. Same with flash. You’ve got all those things. So until now, flash was only price competitive at a system level for tiers zero and one. We’re now penetrating tier-two and bulk data. Today we’ve pierced the 20-cents-per-gigabyte level. By next year, we’ll pierce 15 cents per gigabyte. The year after that, we’ll pierce 10 cents per gigabyte.

Now, the reason we know we can do this is, today we have a 48-TB DirectFlash module. We’ve just introduced and we’ll be shipping next quarter a 75-TB [module]. Next year we will ship a 150-TB module, and by 2025 we will ship a 300-TB DirectFlash module. So keep that in mind. We will grow density 6-X over the next two years, from 50 terabytes to 300 terabytes in two years. Say three years if you want. It doesn’t really matter. Today, the densest hard disk you can find is 20 terabytes. If you take what the hard disk manufacturers are projecting, they’ll be at 40 terabytes by the end of the decade. So 2-X over the next six years. So our density improvements are way beyond disk.
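To put those density claims on a common footing, the projections can be converted to compound annual growth rates. The Python sketch below is an illustration, not Pure Storage's own math. The `cagr` helper is a hypothetical name, and the inputs are the rounded figures Giancarlo cites: 50 TB to 300 TB in two or three years for DirectFlash modules, and 20 TB to 40 TB in six years for hard disks.

```python
def cagr(start, end, years):
    """Compound annual growth rate as a fraction (0.10 means 10% per year)."""
    return (end / start) ** (1.0 / years) - 1.0

# Rounded capacities in terabytes, as quoted in the interview.
dfm_two_years = cagr(50, 300, 2)    # DirectFlash module, two-year timeline
dfm_three_years = cagr(50, 300, 3)  # same growth, "say three years if you want"
hdd_six_years = cagr(20, 40, 6)     # hard disk projection to the end of the decade

print(f"DirectFlash, 2 years: {dfm_two_years:.0%} per year")
print(f"DirectFlash, 3 years: {dfm_three_years:.0%} per year")
print(f"Hard disk, 6 years:   {hdd_six_years:.0%} per year")
```

Even on the slower three-year timeline, the projected module growth is roughly 80 percent a year, against roughly 12 percent a year for hard disks. These are vendor projections rather than measured results.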

Now, by the way, these density improvements are way beyond what is happening with SSDs as well. The densest SSD you can buy today is about 15 terabytes. We think they’re going to have trouble getting past 60 [terabytes]. The simple story is that SSDs have to mimic hard disks. And in order to do so, they have a lot of logic, and they have a lot of DRAM on board to be able to mimic the way hard disks operate. The DRAM required is about 0.1 percent of the flash capacity. Now, that doesn’t sound like a lot, right? But now imagine a 60-TB SSD. It will require 60 gigabytes of DRAM. You don’t have 60 GBs of DRAM in your computer. This would have to be on every SSD. So, the ability of SSDs to keep advancing at the rate of flash is running out of steam.
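Giancarlo's DRAM arithmetic is easy to reproduce. The Python sketch below assumes the 0.1 percent ratio he quotes (1 GB of DRAM per 1,000 GB of flash) and decimal units (1 TB = 1,000 GB). The function name `dram_needed_gb` is ours, and the ratio is his estimate, not a figure from any SSD specification.

```python
GB_PER_TB = 1000                # decimal units, as drive vendors quote capacity
FLASH_GB_PER_DRAM_GB = 1000     # 0.1 percent ratio quoted in the interview

def dram_needed_gb(flash_tb):
    """Estimated on-board DRAM (GB) for an SSD of the given flash capacity (TB)."""
    return flash_tb * GB_PER_TB / FLASH_GB_PER_DRAM_GB

# 15 TB is the densest SSD cited today; 60 TB is the ceiling Giancarlo suggests.
for capacity_tb in (15, 60):
    print(f"{capacity_tb}-TB SSD: about {dram_needed_gb(capacity_tb):.0f} GB of DRAM")
```

At that ratio, the DRAM requirement in gigabytes simply tracks the flash capacity in terabytes, which is why the cost grows with every step up in SSD density.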

So this makes us very unique in this environment. And it’s the reason why we’re projecting the end of the hard disk.

Any other issues?

The other problem is, disks have two heads per platter. There’s a serial input to the platter which has to wait for the data to come around. … You can’t get data in and out of the disk any faster, even though you have more data in the disk. And that’s another limitation of disks that people often forget. Whereas flash is parallel. We can put as many lanes, typically it’s PCIe lanes, into the DirectFlash module as we want. So we can not just increase the capacity, but we can increase the I/O.

Right now, we have up to 120 parallel flow streams going into each and every one of our DirectFlash modules. We’ve reached that with our E-series, which again, is the same Purity operating system, same management system, same FlashArray, same FlashBlade, now just with denser modules and software that’s optimized for those denser modules, providing the lower price point.

What’s stopping other vendors from doing something like your DirectFlash modules as opposed to using SSDs?

Well, nothing stops them from designing a DirectFlash module, but they don’t have the software to operate it. We effectively have a monopoly on the software. Everybody else uses SSDs. Why? They created their software decades ago, and have continued to update it and add more features and functionality. It’s the easiest thing in the world to become all-flash by throwing in an SSD, so they’ve not invested in managing flash natively. They’ve leveraged SSDs’ ability to convert hard drive signals into signals and management of flash. That’s easy. So they’ve not invested in any of the technology necessary to manage flash directly. We’ve worked at this for 10 years. About 10 percent of our staff is dedicated to understanding every new variation of flash that comes out and optimizing how we manage it. Plus I believe that it’s embedded in the core of the code. It’s not something that’s bolted on. The way code operates is entirely different when it matches flash. So they’d have to effectively start from scratch, which I just don’t think they’re going to be capable of doing.

Looking ahead for the rest of this year and into next year, what are some of the key industry changes you see?

We’re seeing one of the biggest fundamental changes in both the buying and the description and understanding of storage that’s happened in 20 years. That may sound like hyperbole, but I’m realizing it more and more as I visit customers and our field. Whether it’s sales people, engineers, or customers, they have become accustomed to buying storage on an array-by-array and use case-by-use case basis. They think nothing of having five or six different storage operating environments, each one tuned to a different use case. You know, ‘I use this for my high speed databases, I use this for my backup, I use this other thing for my HPC environment, I use this fourth thing for transactional workloads.’ They use different vendors. Even if they use all EMC or all HPE [Hewlett Packard Enterprise] storage, it’s still different vendors, because those companies have five or six different models that have completely different software, operations, and API’s. And by the way, once they’re in place, they’re not shareable or networkable. If a customer needs to use the same data somewhere else, they have to copy it, move it over, and replicate it on another system. They can’t just access it in place.

We’re selling a completely different model, a model with one environment that can run at every level of price performance, can run all of the different protocols—block, file, object—and can be networked into pools of storage, effectively a cloud of storage. And when I say a ‘cloud of storage,’ it works on-prem, across premises, in different cloud environments. And you can manage that as a single pool of storage, setting policies for how data is placed and where it’s placed. And it’s automatic. So we changed something from being very manual to something being very automatic, from something being very heterogeneous to something being very standardized and consolidated. So it’s a completely new mindset. And we’re at the beginning of it. I’m retraining our sales team and our customers on what to expect.

We’re training partners as well. It’s exactly the same. It’s a potpourri of products, and now we have to train people to both sell but also appreciate integrated standardized systems and environments.

Do you find partners pretty much receptive to the message or is there still a lot of work to do?

They’re very receptive. When they hear it, you see light bulbs going off, but it’s the very beginning. And ‘receptive’ is different from fully internalizing and making the change. We’re catching this right at the beginning of the process. And the reason why we’re at the beginning of the process is not because Purity wasn’t already there, or Pure1 wasn’t already there, or Evergreen wasn’t already there. It’s because before the release of FlashBlade//E, we could only address the primary tier of storage. Now that we can address all tiers of storage, it becomes much more meaningful. So that’s why we’re at the beginning of this.