Nvidia’s 8 Biggest GTC 2020 Product Announcements You Might Have Missed

The chipmaker’s biggest product announcements at GTC 2020 include a new type of processor Nvidia believes will benefit every data center, new GPUs for professionals, software that brings new AI capabilities to videoconferencing and an expanded partnership with VMware.

From A New Server Processor Type To New GPUs For Professionals

Nvidia is no longer just a GPU vendor, and that was made very clear by the diverse range of product announcements the company made at its fall GTC 2020 virtual conference.

“AI requires a whole reinvention of computing, full-stack rethinking, from chips to systems, algorithms, tools, the ecosystem,” Nvidia CEO Jensen Huang said in his GTC 2020 keynote Monday. “Nvidia is a full-stack computing company. We love working on extremely hard computing problems that have great impact on the world. This is right in our wheelhouse.”

As the Santa Clara, Calif.-based company has demonstrated with its acquisition of Mellanox Technologies earlier this year, its pending acquisition of Arm and its continuously growing software organization, the company has ambitions of becoming a “data center-scale company.”

That goal was particularly apparent with the introduction of a new kind of server processor, partner support for a new AI supercomputer cluster solution, expanded support for Arm platforms and an expanded partnership with one of the data center market’s biggest software players.

What follows is a roundup of the eight biggest GTC 2020 product announcements made by Nvidia that you may have missed.

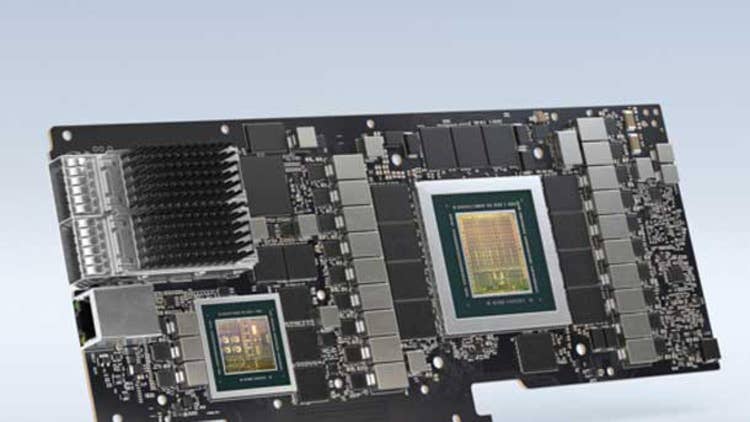

New Family Of BlueField DPUs Revealed

Nvidia revealed a new family of BlueField data processing units, or DPUs, that can offload networking, storage and security workloads from the CPU and enable new security and hypervisor capabilities.

The first product in the lineup, BlueField-2, is sampling with customers now and will be available in 2021 in servers from Asus, Atos, Dell Technologies, Fujitsu, Gigabyte, H3C, Inspur, Lenovo, Quanta and Supermicro. The second product, BlueField-2X, features an Nvidia Ampere GPU for AI-accelerated security, network and storage tasks and will also be available next year. On the software side, early supporters include VMware, Red Hat, Canonical and Check Point Software Technologies.

The PCIe-based products feature DOCA, a new “data-center-infrastructure-on-a-chip architecture,” and rely on a mix of Arm processor cores, ConnectX-6 SmartNIC technology from Nvidia’s Mellanox Technologies acquisition and, in the case of a few products, Nvidia’s data center GPU architecture.

A single BlueField-2 DPU can deliver the same level of performance for software-defined networking, software-defined security, software-defined storage and infrastructure management as 125 CPU cores, which means those CPU cores will have headroom to deliver additional performance for enterprise applications, according to Nvidia.

Beyond its plans to launch the BlueField-2 and BlueField-2X in 2021, Nvidia revealed a DPU product road map that includes the BlueField-3 and BlueField-3X in 2022 and the BlueField-4 in 2023. The BlueField-4 will, for the first time in the product line, integrate the GPU and Arm cores in the same silicon and provide 600 times better performance than the BlueField-2, according to the company.

Nvidia DGX SuperPODs Start Shipping Through Partners

Nvidia announced that its DGX SuperPOD supercomputer clusters are now shipping and available through certified partners.

A DGX SuperPOD consists of 20 DGX A100 systems that can provide 100 petaflops of AI performance, and it can scale up to 140 systems, bringing its total AI performance to 700 petaflops. Each DGX A100 system contains eight A100 Tensor Core GPUs, two 64-core AMD EPYC processors, nine Mellanox ConnectX-6 SmartNICs and a Mellanox HDR InfiniBand 200 interconnect.

The chipmaker said DGX SuperPODs are already shipping to customers in Korea, the United Kingdom, Sweden and India and are expected to be installed by the end of the year. In most cases, according to the company, DGX SuperPODs can be installed within just a few weeks.

Among the initial deployments is Cambridge-1, an 80-node DGX SuperPOD cluster that will provide 400 petaflops of AI performance, which, according to Nvidia, will make it the fastest supercomputer in the U.K. when it goes online before the end of the year.
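The SuperPOD figures Nvidia cited all follow the same ratio, roughly 5 petaflops of AI performance per DGX A100 system. As a rough sketch of that arithmetic, and assuming performance scales linearly with the number of systems, the short Python snippet below reproduces the quoted numbers. The constant and the function name are illustrative only and are derived from the figures in this article, not taken from an Nvidia tool or benchmark.

```python
# Assumption: AI performance scales linearly with the number of systems,
# at the per-system rate implied by the article (100 petaflops / 20 systems).
PETAFLOPS_PER_DGX_A100 = 100 / 20  # derived from the quoted figures, not measured


def superpod_ai_petaflops(num_systems: int) -> float:
    """Estimate aggregate AI petaflops for a cluster of DGX A100 systems."""
    if num_systems < 0:
        raise ValueError("num_systems must be non-negative")
    return num_systems * PETAFLOPS_PER_DGX_A100


# Configurations mentioned in the article: base size, Cambridge-1, maximum size.
for label, systems in [("Base SuperPOD", 20), ("Cambridge-1", 80), ("Largest SuperPOD", 140)]:
    print(f"{label}: {systems} systems, about {superpod_ai_petaflops(systems):.0f} petaflops")
```

Under that linear assumption, the 80-node Cambridge-1 lands at the 400 petaflops Nvidia quoted, and the 140-system ceiling at 700 petaflops.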

The company said DGX SuperPOD is available as a blueprint for certified Nvidia partners to deploy their own versions of the supercomputer cluster architecture. Nvidia’s North American partner is World Wide Technology, according to a spokesperson.

Nvidia Expands Arm Support From Edge To Cloud

Nvidia is expanding its support for the Arm ecosystem for everything from edge to cloud computing ahead of the company’s planned acquisition of the British chip designer.

The company’s expanded support includes porting its Nvidia AI and RTX engines to Arm; creating platforms with Arm partners for HPC, cloud, edge and PC; and “complementing Arm partners with GPU, networking, storage and security technologies,” according to Nvidia’s Huang.

“Today, these capabilities are available only on x86. With this initiative, Arm platforms will also be leading edge at accelerated and AI computing,” he said.

This expanded support means that tools and software libraries like Nvidia Rapids and Nvidia HPC SDK will work with Arm-based processors. The company’s new BlueField DPUs will also support Arm through its Neoverse server platforms.

This builds on previous work Nvidia has done to support Arm-based processors with a new reference architecture and ecosystem for GPU-accelerated Arm servers.

Nvidia EGX Edge AI Platform Gets ‘Widespread Adoption’

Nvidia said its EGX edge AI platform is getting “widespread adoption” across the tech ecosystem in what Huang said is the “iPhone moment for the world’s industries.”

Among EGX’s supporters on the OEM side are Dell Technologies, Inspur, Lenovo and Supermicro, while major software infrastructure providers like Canonical, Cloudera, Red Hat, SUSE and VMware are also supporting the platform. EGX consists of an optimized AI software stack, the new Nvidia Fleet Command service for remote management and a reference architecture.

In addition to announcing the ecosystem support, Huang said the EGX platform is getting updated to support a BlueField-2 DPU and Ampere GPU on a single PCIe card, which will turn “any standard OEM server into a secure, accelerated AI data center.”

“Nvidia EGX can control factories of robots, perform automatic checkout at retail, orchestrate a fleet of inventory movers or help nurses monitor patients,” Huang said during his Monday keynote. “EGX is a full-stack platform consisting of the AI computer, system software, AI frameworks, and fleet management cloud service. Deploying and provisioning services on EGX is simple with one-touch authentication to set up a new node. No Linux admins are required.”

New Nvidia RTX A6000, A40 Ampere GPUs For Professionals

Nvidia updated its portfolio of Ampere GPUs with the new A40 for visual computing in data centers and the new RTX A6000 for desktop workstations.

The A40 is designed to enable virtual workstations and server-based workloads for things like ray-traced rendering, simulation and virtual production. It comes with CUDA Cores, second-generation RT Cores, third-generation Tensor Cores, PCIe 4.0 support and 48 GB of GDDR6 memory with error-correction code. It also supports third-generation NVLink, which allows the connection of two A40s to bring GPU memory to 96 GB, as well as Nvidia’s virtual GPU software solutions.

The RTX A6000 shares many of the same attributes, including up to two times the power efficiency over the previous generation, except for the fact that it’s designed for desktops and not servers. The RTX A6000’s maximum power consumption is 300 watts.

Nvidia said the RTX A6000 will be available from channel partners, including PNY, Leadtek, Ingram Micro and Ryoyo, starting in mid-December. It will also be available in systems from Boxx, Dell Technologies, HP Inc. and Lenovo early next year, as will A40-based servers from Cisco Systems, Dell Technologies, Fujitsu, Hewlett Packard Enterprise and Lenovo.

Nvidia Maxine Brings New AI Features To Videoconferencing

Nvidia is using the power of GPUs to bring new AI features to videoconferencing, including a new compression technology to dramatically reduce bandwidth and a new technique for aligning faces.

The chipmaker is making this possible with its new Nvidia Maxine platform, which gives developers the ability to incorporate a variety of GPU-accelerated AI features into videoconferencing.

On the compression front, Nvidia said it has developed a new AI-based compression technology that can bring bandwidth consumption down to one-tenth of what it typically is for the H.264 streaming video compression standard. It does this by analyzing key facial points of the person on camera and then using AI to reanimate certain parts of the face on the server side.

Maxine can also use AI to align each participant’s face to simulate eye contact between users. Other capabilities include animated avatars with realistic animation based on voice and tone as well as super-resolution, noise cancellation and face relighting.

In combination with Nvidia’s Jarvis platform for conversational AI, Maxine can deploy virtual assistants to take notes, create action items and answer questions in human-like voices. It can also allow multiple people to talk at once, with one speaker being heard while the other participants’ voices automatically appear as chat messages through real-time transcription.

Nvidia’s $59 Jetson Nano Gives Devs An Easy Way To Start AI

Nvidia is expanding its Jetson edge AI platform with a $59 entry-level developer kit that is designed for students, educators and hobbyists.

The Jetson Nano 2-GB Developer Kit comes with free online training and AI-certification programs, and it’s also supported by the Nvidia JetPack SDK, which consists of an Nvidia container runtime and a full Linux software development environment.

The Jetson Nano 2-GB is able to run a variety of AI models and frameworks, and applications can be ported to other Nvidia AI development platforms because it is powered by the CUDA-X stack.

The developer kit will be available by the end of the month.

Nvidia Omniverse 3-D Production Collaboration Platform Enters Open Beta

Nvidia announced that its Omniverse platform for real-time collaboration in 3-D production environments has entered open beta.

Omniverse is an Nvidia RTX-based 3-D simulation and collaboration platform that allows remote teams to work on the same project in real time using different programs, and it’s supported by software from a variety of vendors, including Adobe, Autodesk and SideFX.

The platform supports real-time collaboration across multiple software programs thanks to its use of Pixar’s Universal Scene Description format for enabling universal exchange between 3-D applications.

“Physical and virtual worlds will increasingly be fused,” said Huang in a statement. “Omniverse gives teams of creators spread around the world or just working from home the ability to collaborate on a single design as easily as editing a document. This is the beginning of the Star Trek Holodeck, realized at last.”

Nvidia said more than 40 companies and 400-plus individual creators and developers had participated in a yearlong early access program. Among the early adopters are Industrial Light & Magic and Ericsson.

“Nvidia continues to advance state-of-the-art graphics hardware, and Omniverse showcases what is possible with real-time ray tracing,” said Francois Chardavoine, vice president of technology at Lucasfilm and Industrial Light & Magic, in a statement. “The potential to improve the creative process through all stages of VFX and animation pipelines will be transformative.”