The Biggest News From Supercomputing 2019

The Supercomputing 2019 provides a window into the future of computing with announcements from Intel, Hewlett Packard Enterprise, Dell EMC, Nvidia and more on new, groundbreaking technologies for high-performance computing and artificial intelligence.

The Latest And Greatest In HPC, AI

If you want to see where the future of high-performance computing and artificial intelligence is headed, look no further than Supercomputing 2019, the annual conference dedicated to supercomputers and the technologies and products that enable them.

Held Nov. 17-22, the Denver conference is the springboard for many new solutions and applications for HPC and AI from vendors, partners and researchers alike. In the first few days of the conference alone, there were new announcements from Intel, Nvidia, Hewlett Packard Enterprise and Dell Technologies.

What follows is a roundup of the biggest news from Supercomputing 2019 in its first few days, ranging from Intel's plans for a data center GPU and Nvidia's Arm server plans to Dell EMC's new HPC and AI offerings as well as the new combined HPE and Cray portfolio.

Intel Unveils 7nm Xe GPU For HPC, AI

Intel unveiled on Sunday its first Xe GPU for the data center, a 7-nanometer product code-named Ponte Vecchio that will target high-performance computing and artificial intelligence.

Industry observers have been anticipating more details of Intel's discrete GPU plans since 2017 when the chipmaker revealed its intentions to re-enter the market and take on Nvidia and AMD in the space.

Ponte Vecchio will be based on Intel's Xe architecture that will serve as the basis for products across other market segments, including cloud graphics, media transcode analytics, gaming and mobile.

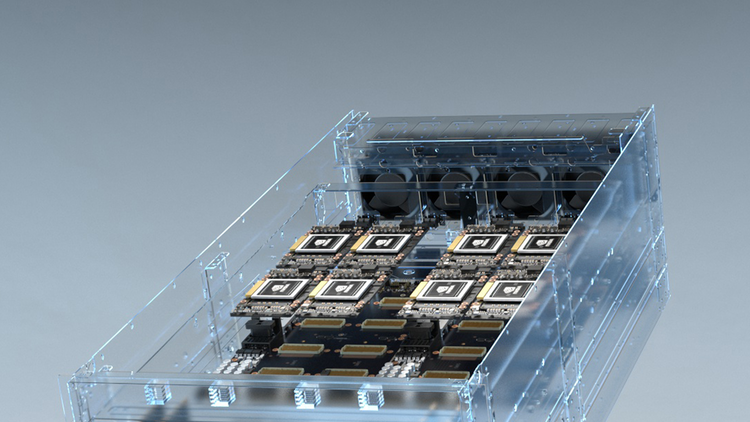

The GPU's microarchitecture, which is designed for HPC and AI specifically, will feature flexible data-parallel vector matrix engine, high double precision floating point throughput and ultra high cache and memory bandwidth. The GPU itself will use Intel's 7nm manufacturing process, its Foveros 3D chip packaging technology and its Xe Link interconnect based on the company's new CXL standard.

While Intel didn't disclose a release window, the company previously announced that it would launch a 7nm data center GPU in 2021. The GPU will also go in the U.S. Department of Energy's Aurora supercomputer, which is set for a 2021 completion.

Beyond Ponte Vecchio, Intel also announced that it would release a next-generation 10nm Xeon processor, code-named Sapphire Rapids, in 2021 after the 10nm Ice Lake in the second half of 2020. In addition, the company launched the beta of its oneAPI unified programming software.

Nvidia's Arm-Based Server Push Gets Ecosystem Boost

Nvidia announced on Monday a new reference design and ecosystem support for its bid to build GPU-accelerated Arm-based servers for high-performance computing.

The Santa Clara, Calif.-based company said its new Arm-based reference design platform is receiving support from Hewlett Packard Enterprise-owned Cray, HPE, Marvell, Fujitsu and Ampere, the latter of which is a data center chip startup founded by former Intel executive Renee James.

These companies will use Nvidia's reference design, which will consist of hardware and software building blocks, to build their own GPU-accelerated servers for everything from hyperscale cloud service providers to high-performance storage and exascale supercomputing.

The reference design and ecosystem support comes after Nvidia announced earlier this year in June that it would support Arm-based CPUs to enable "extremely energy-efficient, AI-enabled exascale supercomputers." The company is doing this by making its CUDA-X libraries, GPU-accelerated AI frameworks and software development tools available to Arm developers.

"There is a renaissance in high performance computing," Nvidia CEO Jensen Huang said. "Breakthroughs in machine learning and AI are redefining scientific methods and enabling exciting opportunities for new architectures. Bringing NVIDIA GPUs to Arm opens the floodgates for innovators to create systems for growing new applications from hyperscale-cloud to exascale supercomputing and beyond."

Nvidia also announced the launch of a new Microsoft Azure NDv2 instance that gives companies access to GPU-accelerated supercomputing in the cloud. The instance offers up to 800 of Nvidia's Tesla V100 Tensor Core GPUs interconnected on a single Mellanox InfiniBand backend network.

In addition, the chipmaker launched its Nvidia Magnum IO suite of software that is designed to eliminate data throughput bottlenecks for HPC and AI workloads. The company said the software can speed up data processing for multi-server, multi-GPU nodes by up to 20 times.

Top500: Intel Cascade Lake Triples Sites, AMD EPYC Enters Fray

Intel tripled the number of the world's top supercomputers using its second-generation Xeon Scalable processors, code-named Cascade Lake, while its top rival, AMD, saw its EPYC processors enter the fray for the first time, according to the latest list of the world's top 500 supercomputers.

The list was released Monday by Top500, the organization that has been tracking the world's top supercomputers annually since 1993. In addition to gains made by Intel and AMD, Nvidia saw the number of supercomputers using its GPU accelerators grow to 136 from 127 in June, with the number of systems using Nvidia's to data center GPU, the Tesla V100, reaching 90.

The number of supercomputers using Intel's Xeon Cascade Lake processors nearly tripled to 26 in the fall 2019 list from nine in the June 2019 list, when the server processors debuted in the top 500. The semiconductor giant continued to hold dominance over the top 500 overall, representing 470 systems, or 94 percent, split between multiple generations of Xeon and Xeon Phi processors.

Meanwhile, AMD notched its first victories in the top 500 list for its EPYC server processors that debuted in 2017 as direct competition to Intel Xeon. Four systems total use AMD EPYC, split evenly between the chipmaker's first-generation Naples processor and second-generation Rome processor. Two other supercomputers use AMD-based processors: one for AMD's now-defunct Opteron processor and another for the Higon Dhyana, a processor developed by AMD's joint venture in China.

Combined HPE-Cray Portfolio For AI, HPC Revealed

Hewlett Packard Enterprise revealed the combined portfolio of HPE and Cray for high-performance computing and artificial intelligence workloads.

The "comprehensive HPC and AI" portfolio, which comes two months after HPE completed its acquisition of supercomputing vendor Cray, encompasses everything from services and software to compute, interconnect and storage technologies that are meant to serve the growing needs of enterprises, according to Cray CEO Peter Ungaro.

This includes Cray's new ClusterStor E1000 storage system, HPE's Apollo server line, Cray's Slingshot interconnect and HPE's Data Management Framework software and Cray's container-based Shasta system management software. It also features HPE GreenLake, which HPE will use to provide HPC and AI solutions in a pay-per-use consumption model.

In addition, HPE is expanding its Apollo server lineup to include Cray's supercomputers, which are based on the supercomputing vendors Shasta architecture.

Bill Mannel, HPE's vice president and general manager for HPC and AI, said while governments and universities have represented the lion's share of supercomputers in the world, there's a lot of room in the commercial space for adoption to accelerate, citing the energy and life sciences sectors as examples.

Dell Intros New HPC Storage, AI, Networking, GPU Solutions

Dell Technologies revealed a range of new solutions for high-performance computing storage, artificial intelligence, high-speed networking and GPU-accelerated workloads.

Among the new solutions is an expansion of the Dell EMC Ready Solutions for HPC Storage with ThinkParQ's BeeGFS and ArcaStream's file systems. The company said the new solutions, which combine technology partners' software with Dell EMC hardware, will help organizations simplify and speed deployment and solutions management.

The company also introduced expanded capacity for the Dell EMC PowerVault ME4, which can now offer 16 terabytes in hard drive space with the ability to scale up to 4 petabytes in a 15U rack space, improving storage density by 25 percent.

The Dell EMC Ready Solutions for AI portfolio was also expanded with a new validated design for the Domino Data Science Platform, which was developed with Domino Data Lab to accelerate the development and delivery of models in a centralized, flexible platform. The company is also releasing five new reference architectures with AI partners, which includes DataRobot, Grid Dynamics and H20.ai.

Dell EMC's PowerSwitch Z-series receive its own expansion with the new PowerSwitch Z9332F-ON, a 400GbE open networking switch targeted for high-performance workloads. The company said the switch is designed for cloud service provider's data center networks that have intense compute and storage traffic — workloads such as HPC, AI and streaming video.

In addition, Dell EMC is providing a new option for its DSS 8440 servers to come with up to 16 Nvidia T4 Tensor Core GPUs for high-performance machine learning inference. The company is also expanding the options for its PowerEdge servers, with new support for Nvidia's Tesla V100S and RTX GPUs as well as Intel's FPGA Programmable Acceleration Card D5005.

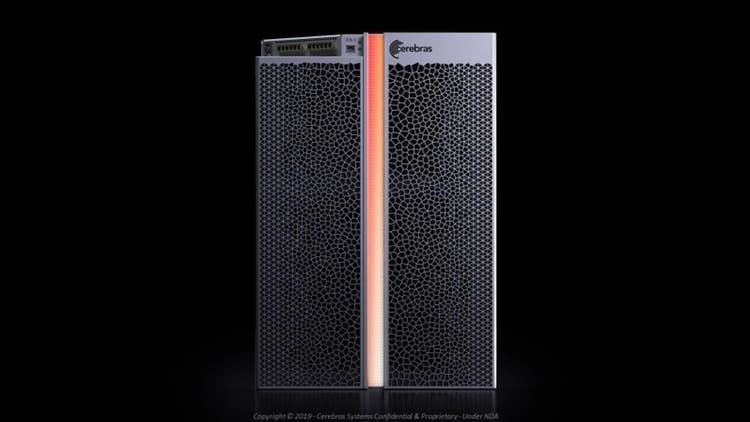

Cerebras Reveals CS-1 AI Computer For Its Large Accelerator Chip

Cerebras, an artificial intelligence chip startup, lifted the curtains on its CS-1 system, which is based on the company's large Wafer Scale Engine chip to enable what it calls the "world's fastest AI computer."

The Los Altos, Calif.-based startup said the CS-1 delivers compute performance with less space and power than any other system, representing one-third of a standard data center rack while replacing the need for hundreds of thousands of space- and power-hungry GPUs.

Cerebras said the Wafer Scale Engine is "the only trillion transistor wafer scale processor in existence," measuring at 56.7 times larger and containing 78 times more compute cores than the largest GPU. The chip contains 400,000 compute cores and 18 gigabytes of on-chip memory.

Among the startup's early customers is the U.S. Department of Energy's Argonne National Laboratory, which is using the CS-1 system to accelerate neural networks for cancer studies.

"The CS-1 is a single system that can deliver more performance than the largest clusters, without the overhead of cluster set up and management," Kevin Krewell, Principal Analyst, TIRIAS Research, said in a statement. "By delivering so much compute in a single system, the CS-1 not only can shrink training time but also reduces deployment time. In total, the CS-1 could substantially reduce overall time to answer, which is the key metric for AI research productivity."