Google Cloud’s 5 New Offers For AWS, Azure And GenAI

From Gemini Pro injected into BigQuery to new cross-cloud capabilities for AWS and Azure, here are five big launches from Google Cloud this month that partners, developers and customers should know.

Artificial intelligence is Google Cloud’s biggest focus area in 2024, with its innovation engine and R&D budget directed at expanding its AI portfolio and generative AI capabilities.

The $37 billion Mountain View, Calif.-based cloud computing giant has launched a slew of offerings over the past month, including cross-cloud data capabilities for Amazon Web Services and Microsoft Azure and the availability of Gemini Pro in BigQuery.

Additionally, there are several new Google Cloud and Nvidia offerings as the two AI companies form a tighter partnership to drive GenAI.

“The strength of our long-lasting partnership with Nvidia begins at the hardware level and extends across our portfolio—from state-of-the-art GPU accelerators, to the software ecosystem, to our managed Vertex AI platform,” said Google Cloud CEO Thomas Kurian in a recent statement. “Together with Nvidia, our team is committed to providing a highly accessible, open and comprehensive AI platform for ML developers.”

CRN breaks down five new Google Cloud offers for AWS, Azure, Nvidia and BigQuery.

Google Cloud Boosts Cross-Cloud Capabilities For AWS And Azure

Google Cloud has introduced new cross-cloud capabilities for Google Cloud and BigQuery Omni regions to make data transfers easier for AWS and Microsoft Azure.

Users can now use GoogleSQL operations to analyze data across different storage solutions in AWS, Azure and public datasets. The cross-cloud capability eliminates the need to copy data across sources before running queries.

Additionally, Google Cloud partners and customers can now take advantage of materialized views on BigLake tables for Amazon Simple Storage Service (Amazon S3).

Materialized views on BigLake metadata tables can reference structured data stored in Amazon S3. A materialized view replica lets customers use the Amazon S3 materialized view data in queries while avoiding data egress costs and improving query performance. This means Amazon S3 data is available locally in BigQuery.

Gemini Pro Becomes Available In BigQuery

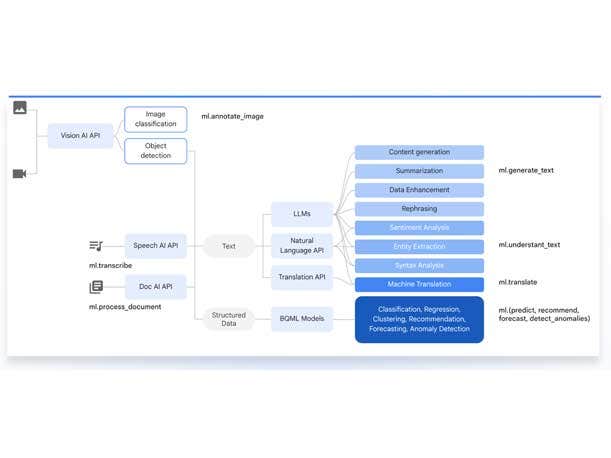

Gemini 1.0 Pro has now been integrated with BigQuery ML via Vertex AI. The goal is to simplify multimodal generative AI for data by making Gemini Pro models available through BigQuery ML.

For example, users can analyze customer reviews in real time and combine them with purchase history and current product availability to generate personalized messages and offers, all from inside BigQuery.

BigQuery ML lets users create, train and execute machine learning models in BigQuery using familiar SQL. With customers running hundreds of millions of prediction and training queries every year, usage of built-in ML in BigQuery grew 250 percent from July 2022 to July 2023, according to Google.

The Gemini 1.0 Pro model is designed for high input/output scale and better result quality across a wide range of tasks such as text summarization and sentiment analysis. Users can now access Gemini 1.0 Pro on BigQuery ML using SQL statements or BigQuery’s embedded DataFrame API from inside the BigQuery console.
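As a rough illustration of the SQL route, the sketch below builds a GoogleSQL statement around BigQuery ML's `ML.GENERATE_TEXT` function for a sentiment-analysis prompt. It assumes a remote model backed by Gemini Pro has already been created in the dataset. The model name, table name and column names (`review_id`, `review_text`) are hypothetical placeholders, not details from Google's announcement. The snippet only assembles the query text. Running it would require a BigQuery client and the appropriate permissions.

```python
def build_sentiment_query(model: str, reviews_table: str, limit: int = 10) -> str:
    """Return a GoogleSQL statement that sends review text to a remote model.

    `model` and `reviews_table` are fully qualified names supplied by the
    caller; the values used below are hypothetical examples.
    """
    return f"""
SELECT
  review_id,
  ml_generate_text_llm_result AS sentiment
FROM
  ML.GENERATE_TEXT(
    MODEL `{model}`,
    (
      SELECT
        review_id,
        CONCAT(
          'Classify the sentiment of this review as positive, negative or neutral: ',
          review_text
        ) AS prompt
      FROM `{reviews_table}`
      LIMIT {limit}
    ),
    -- flatten_json_output returns the generated text as a plain string column
    STRUCT(0.2 AS temperature, 64 AS max_output_tokens, TRUE AS flatten_json_output)
  )
""".strip()


# Hypothetical names, for illustration only.
query = build_sentiment_query(
    "my_project.my_dataset.gemini_pro_model",
    "my_project.my_dataset.customer_reviews",
    limit=5,
)
print(query)
```

The resulting string could be pasted into the BigQuery console or submitted through a client library. Joining the output against purchase-history tables would follow the same pattern with ordinary SQL joins.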

The goal is to enable customers to build data pipelines that blend structured data, unstructured data and generative AI models together to create a new class of analytical applications.

Nvidia NeMo Now Available On Google Kubernetes Engine (GKE)

Nvidia’s NeMo is an open-source platform purpose-built for developing custom generative AI models. NeMo facilitates a complete workflow from automated distributed data processing to training of large-scale models and deployment on Google Cloud.

Customers can now train models on Google Kubernetes Engine (GKE) using the Nvidia NeMo framework.

The NeMo framework approaches building AI models using a modular design to encourage data scientists, ML engineers and developers to mix and match core components including data curation, distributed training and model customization.

GKE provides scalability and compatibility with a diverse set of hardware accelerators, including Nvidia GPUs, with the aim of significantly improving performance and reducing costs.

The combination of NeMo and GKE provides the scalability, reliability and ease of use needed to train and serve AI models.

AlloyDB AI Now Generally Available

AlloyDB AI, which is an integrated set of capabilities to easily build enterprise GenAI applications, is now generally available.

AlloyDB is Google Cloud’s managed PostgreSQL-compatible database designed for superior performance, scale and availability.

With AlloyDB AI available for enterprise-grade production workloads, Google said developers have the tools they need to add intelligent, accurate and helpful generative AI capabilities to their applications, grounded in the data in their enterprise databases.

Google said AlloyDB AI runs vector queries up to 10 times faster than standard PostgreSQL. With 8-bit quantization enabled, it delivers a three-times reduction in index size and supports vectors with four times more dimensions.
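To give a sense of why 8-bit quantization shrinks vector storage, here is a minimal Python sketch of generic scalar quantization: each 32-bit float in an embedding is mapped to a single byte. This is an illustrative assumption about the general technique, not a description of how AlloyDB AI implements it, and the sample embedding values are made up. The sketch measures only the raw vector values, so it does not model the index-size figure Google cited.

```python
from array import array


def quantize(vector):
    """Map floats to 8-bit codes (0..255) using a per-vector offset and scale."""
    lo, hi = min(vector), max(vector)
    scale = (hi - lo) / 255 or 1.0  # fall back to 1.0 for constant vectors
    codes = array("B", (round((x - lo) / scale) for x in vector))
    return codes, lo, scale


def dequantize(codes, lo, scale):
    """Approximately reconstruct the original floats from the 8-bit codes."""
    return [lo + c * scale for c in codes]


# Made-up 8-dimensional embedding stored as 32-bit floats.
embedding = array("f", [0.12, -0.83, 0.45, 0.99, -0.27, 0.0, 0.61, -0.5])

codes, lo, scale = quantize(embedding)
restored = dequantize(codes, lo, scale)

raw_bytes = len(embedding) * embedding.itemsize   # 4 bytes per dimension
quantized_bytes = len(codes) * codes.itemsize     # 1 byte per dimension
max_error = max(abs(a - b) for a, b in zip(embedding, restored))

print(f"raw: {raw_bytes} bytes, quantized: {quantized_bytes} bytes")
print(f"largest reconstruction error: {max_error:.4f}")
```

The trade-off is a small loss of precision per dimension in exchange for smaller storage, which is why such features are typically optional.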

AlloyDB AI is now GA in both AlloyDB and AlloyDB Omni.

Nvidia’s Blackwell Platform On Google Cloud

In one of the company’s biggest project launches of 2024, Nvidia recently unveiled its new Blackwell GPU architecture. The chipmaker claimed the platform will enable up to 30 times greater inference performance and consume 25 times less energy for AI models compared to previous Nvidia GPU platforms.

Google Cloud said it has adopted Nvidia’s new Grace Blackwell AI computing platform, as well as the Nvidia DGX Cloud service, on Google Cloud Platform (GCP).

The new Grace Blackwell platform enables organizations to build and run real-time inference on trillion-parameter large language models.

Google Cloud is adopting the platform for various internal deployments and will be one of the first cloud providers to offer Blackwell-powered instances.