AWS’ 7 New Cloud Storage Products; NetApp, Oracle, Cloudflare Support

From the new Mountpoint for Amazon S3 to expanded support for copying data to and from other clouds like Oracle and Cloudflare, here are seven new AWS cloud storage offerings that just launched.

Amazon Web Services has unleashed seven new cloud storage offerings and features that improve AI and ML workloads, strengthen data protection and resiliency, and add support for NetApp, Cloudflare and Oracle storage products.

Some of the biggest highlights include AWS Backup’s new logically air-gapped vault; new Amazon CloudWatch metrics for Amazon FSx for NetApp ONTAP; enhancements to Amazon FSx designed to reduce compute costs; and the company’s brand-new open-source Mountpoint offering for Amazon S3.

Additionally, AWS is expanding support for copying data to and from other clouds including Oracle, Wasabi, DigitalOcean and Cloudflare.

“When it comes to storage for AI/ML, data volumes are increasing at an unprecedented rate—exploding from terabytes to petabytes and even to exabytes,” said Veliswa Boya, senior developer advocate at AWS during the company’s recent AWS Storage Day 2023 virtual event. “Whether you’re just getting started with the cloud, planning to migrate applications to AWS, or already building applications on AWS, we have resources to help you protect your data and meet your business continuity objectives.”

AWS Backup’s New ‘Logically Air-Gapped Vault’

One of the coolest new cloud storage launches from Amazon recently is for AWS Backup. Amazon’s backup service centralizes and automates data protection across 19 AWS services, hybrid workloads and third-party applications like VMware Cloud on AWS and SAP HANA.

Amazon just launched a new AWS Backup Vault that enables secure sharing of backups across accounts and organizations, supporting direct restore to help reduce recovery time from a data loss event.

“Logically air-gapped vault stores immutable backup copies that are locked by default, and isolated with encryption using AWS owned keys,” said AWS in a recent blog post.

The new air-gapped feature can enhance data security and defense against ransomware, as well as reduce the risk of unwanted data deletions, AWS touts. The vault can be shared for recovery with other accounts using AWS Resource Access Manager (RAM).

AWS DataSync Expands To Wasabi, Cloudflare, Oracle And DigitalOcean

AWS DataSync is an online data movement and discovery service designed to simplify data migration and help users quickly and securely transfer file or object data to, from and between AWS storage services.

In addition to supporting data migration to and from AWS storage services, DataSync supports copying to and from other clouds such as Google Cloud Storage, Azure Files, and Azure Blob Storage.

AWS has now expanded DataSync’s support for copying data to and from other clouds to include DigitalOcean Spaces, Wasabi Cloud Storage, Backblaze B2 Cloud Storage, Cloudflare R2 Storage, and Oracle Cloud Storage.

The mission of DataSync is to enable customers to move their object data at scale between Amazon S3 compatible storage on other clouds and AWS storage services such as Amazon S3.

New FSx File Release Feature For Lustre To Free Up Storage Capacity

Amazon FSx for Lustre offers shared storage with the scalability and high performance of the open-source Lustre file system to support Linux-based workloads. It helps customers avoid storage bottlenecks, increase utilization of compute resources, and decrease time to value for workloads like AI and ML.

AWS launched a new file release feature for FSx for Lustre that lets users manage their data by freeing up storage space occupied by files that have been synchronized with Amazon S3.

“This feature helps you manage your data lifecycle by releasing file data that has been synchronized with Amazon S3,” said AWS. “File release frees up storage space so that you can continue writing new data to the file system while retaining on-demand access to released files through the FSx for Lustre lazy loading from Amazon S3.”

The new file release feature ensures that only data from the specified directory, and data meeting the minimum time since the last access, is released. AWS said file release will be a valuable tool for data lifecycle management as it helps efficiently move less frequently accessed data to S3.
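The two release criteria described above (a target directory and a minimum time since last access) can be pictured with a short Python sketch. This is only an illustration of the selection logic, not how FSx for Lustre implements it; the `FileRecord` type, the `select_releasable` function and the sample paths are hypothetical names invented for this example.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from pathlib import PurePosixPath


@dataclass
class FileRecord:
    # Hypothetical record type used only for this illustration.
    path: str               # absolute path on the file system
    last_access: datetime   # time of the most recent access
    synced_to_s3: bool      # True if the file has been synchronized with S3


def select_releasable(files, directory, min_days_since_access, now):
    """Return paths whose data would qualify for release under the two criteria."""
    cutoff = now - timedelta(days=min_days_since_access)
    root = PurePosixPath(directory)
    return [
        f.path
        for f in files
        if f.synced_to_s3                          # only synchronized data
        and root in PurePosixPath(f.path).parents  # inside the chosen directory
        and f.last_access <= cutoff                # not accessed recently enough
    ]


now = datetime(2023, 9, 1)
files = [
    FileRecord("/fsx/results/old.bin", datetime(2023, 7, 1), True),
    FileRecord("/fsx/results/new.bin", datetime(2023, 8, 30), True),
    FileRecord("/fsx/results/unsynced.bin", datetime(2023, 7, 1), False),
    FileRecord("/fsx/other/old.bin", datetime(2023, 7, 1), True),
]
print(select_releasable(files, "/fsx/results", 30, now))
# prints ['/fsx/results/old.bin']
```

Only the first file passes all three checks: it is synchronized, it sits under the chosen directory, and it has not been accessed for at least 30 days.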

New Mountpoint For Amazon S3

AWS’ new Mountpoint for Amazon Simple Storage Service (S3) is now generally available. The new open-source file client delivers high-throughput access to Amazon S3, designed to lower processing times and compute costs for data lake applications.

“Mountpoint for Amazon S3 is ideal for workloads that read large datasets (terabytes to petabytes in size) and require the elasticity and high throughput of Amazon S3,” said AWS in a recent blog post.

The new offering is a file client that translates local file system API calls to S3 object API calls, such as GET and PUT. Mountpoint for Amazon S3 supports sequential and random read operations on existing files and sequential write operations for creating new files. Use cases include machine learning training as well as reprocessing and validation in autonomous vehicle data processing.
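Because the client exposes a bucket as a local directory, an application can use ordinary file I/O against it. The Python sketch below shows the three access patterns the article lists, using only standard-library calls. It assumes a bucket has already been mounted at some local path; the function names are hypothetical, and nothing here calls Mountpoint or S3 directly.

```python
def read_sequential(path, chunk_size=8 * 1024 * 1024):
    """Stream an existing file front to back; return the number of bytes read."""
    total = 0
    with open(path, "rb") as f:
        while chunk := f.read(chunk_size):
            total += len(chunk)
    return total


def read_range(path, offset, length):
    """Random read: seek to an offset in an existing file and read a slice."""
    with open(path, "rb") as f:
        f.seek(offset)
        return f.read(length)


def write_new_file(path, data):
    """Sequentially write a brand-new file; fail if the path already exists."""
    with open(path, "xb") as f:  # "x" = exclusive creation, never overwrite
        f.write(data)
```

On a mounted bucket, the reads would be served by S3 GET requests and the new-file write by a PUT, per the description above. The exclusive-create mode is a design choice for this sketch, matching the article's statement that writes are supported for creating new files.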

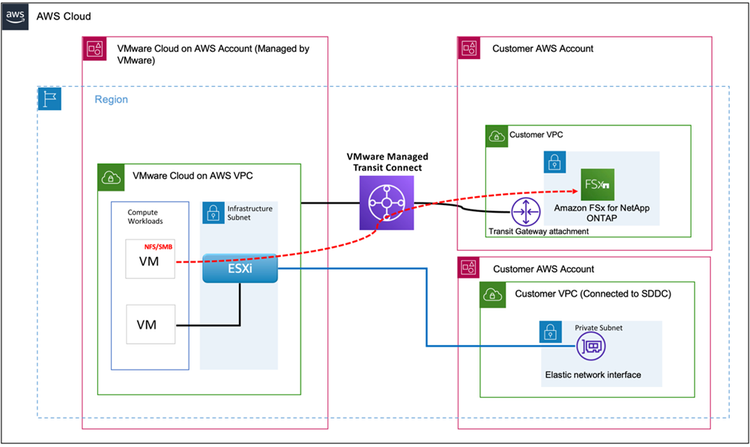

Amazon FSx For NetApp Gets New CloudWatch Metrics

Amazon FSx for NetApp ONTAP is an AWS service that provides fully managed NetApp ONTAP file systems in the cloud. AWS has now added Amazon CloudWatch performance metrics for improved visibility into file system activity, along with enhanced monitoring dashboards that offer performance insights and recommendations.

Users can leverage the new metrics and dashboards to more easily monitor and right-size their file systems to optimize performance and costs.

“You now have an expanded set of performance metrics available in CloudWatch—such as CPU utilization, SSD IOPS usage, and network bandwidth utilization—that enable you to understand and optimize performance and costs across an even wider range of workloads,” said AWS.

Using the enhanced monitoring dashboard, users also get improved performance insights with recommendations to help optimize performance, such as a suggestion to increase a file system’s SSD I/O performance level if the workload is I/O-limited.

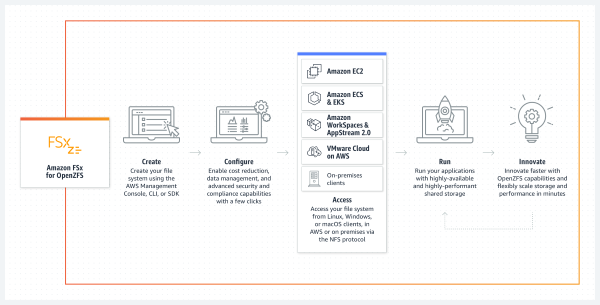

Amazon FSx For OpenZFS New Deployment Options

For the first time, customers can use multi-Availability Zone (multi-AZ) deployment options when creating file systems on Amazon FSx for OpenZFS. This makes it easier to deploy file storage that spans multiple AWS Availability Zones to provide resilience for business-critical workloads.

“With this launch, you can take advantage of the power, agility, and simplicity of Amazon FSx for OpenZFS for a broader set of workloads, including business-critical workloads like database, line-of-business, and web-serving applications that require highly available shared storage that spans multiple Availability Zones,” said AWS in a blog post.

The new multi-AZ file systems are designed to deliver high levels of performance for a broad variety of workloads, such as financial services analytics, media and entertainment workflows, semiconductor chip design, and streaming. They offer up to 21 GB per second of throughput and over 1 million IOPS for frequently accessed cached data, and up to 10 GB per second and 350,000 IOPS for data accessed from persistent disk storage.

Scale IOPS Separately From Amazon FSx Storage

Amazon FSx for Windows File Server provides managed file storage built on Windows Server. A new feature now enables users to select and update the level of I/O operations per second (IOPS) separately from storage capacity on the file system.

Prior to this launch, the IOPS performance level for accessing data on a file system’s SSD storage was fixed at a ratio of 3 IOPS per GiB of storage capacity. Now, customers can configure SSD IOPS independently of storage capacity, up to a ratio of 500 IOPS per GiB.
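To make the two ratios concrete, here is a small Python sketch of the arithmetic. It uses only the per-GiB figures quoted above and deliberately ignores any absolute per-file-system limits AWS may apply; the constant and function names are hypothetical.

```python
PREVIOUS_IOPS_PER_GIB = 3   # fixed ratio before the launch (from the article)
MAX_IOPS_PER_GIB = 500      # highest configurable ratio (from the article)


def iops_range(storage_gib):
    """Return (previous fixed IOPS, ratio-based ceiling) for a given capacity."""
    if storage_gib <= 0:
        raise ValueError("storage_gib must be positive")
    return storage_gib * PREVIOUS_IOPS_PER_GIB, storage_gib * MAX_IOPS_PER_GIB


previous, ceiling = iops_range(100)
print(previous, ceiling)
# prints 300 50000
```

Under these assumptions, a 100 GiB file system that was previously fixed at 300 IOPS could now be configured as high as 50,000 IOPS without adding capacity.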

“This new capability enables you to improve price-performance for IOPS-intensive workloads like SQL Server databases and optimize costs for workloads with IOPS requirements that vary over time like periodic reporting jobs,” said AWS in a blog post.

Users can update their file system’s SSD IOPS level in just a few minutes without impacting file system availability, helping them optimize costs and accelerate time-to-results for workloads.