AWS And Anthropic: 5 Key AI Chip Supply Chain Plans, Intel Hires To Know

AWS CEO Adam Selipsky said his $85 billion cloud company is ramping up production “to provide a very robust AWS-controlled supply chain for AI chips,” thanks to Amazon’s $4 billion investment in Anthropic.

AWS CEO Adam Selipsky (pictured) has massive plans in store for Amazon’s blockbuster investment of up to $4 billion in AI startup star Anthropic—from shaking up the chip industry to hiring new talent from the likes of Intel.

“It’s absolutely true that there is a huge demand for all of the different chips with which people do generative AI workloads,” Selipsky said in an interview with Bloomberg. “So we absolutely have already been ramping up our Trainium and Inferentia supply chain and ramping up the supply that we can create as quickly as possible.”

CRN breaks down the biggest plans and initiatives Amazon has for Anthropic, CEO Selipsky’s boldest statements on his AI chip vision, and new AWS executive hires from Intel.

[Related: Adam Selipsky: AWS Is Building ‘The Ultimate’ GenAI Tool Chest]

Before jumping into AWS and Amazon’s plans for the AI startup, it’s key to note the technologies and strategy at play.

The Seattle-based $85 billion cloud giant is looking to become a global AI chip maker via its custom silicon offerings, Trainium and Inferentia. Anthropic is part of that plan, as the popular AI startup will use AWS Trainium and Inferentia chips to build, train, and deploy its future foundation models. The two companies will also collaborate on the development of future Trainium and Inferentia technology.

“There’s still a whole lot of storage and compute and database workloads ramping up on AWS. So we have many sources of growth, I anticipate, but there’s absolutely no doubt that generative AI looks like it’s going to be an explosive additional source of growth in the years ahead,” said Selipsky.

Here are five key things that investors, channel partners and customers need to know about AWS’ custom silicon chip plans, along with the boldest remarks from Selipsky and Anthropic CEO Dario Amodei, a former vice president at OpenAI.

AWS Will ‘Provide A Very Robust AWS Controlled Supply Chain For AI Chips’

Selipsky said AWS and Anthropic will provide a robust supply chain for AI chips.

“Anthropic will have access to very significant quantities of compute, which will have Trainium and Inferentia in them. So yes, that’s one of many reasons why we continue to ramp up and to provide a very robust AWS controlled supply chain for AI chips,” said the AWS CEO.

AWS’ Trainium and Inferentia accelerators are built to provide high performance for deep learning training and inference, respectively, including generative AI workloads.

“It’s absolutely true that there is a huge demand for all of the different chips with which people do generative AI workloads. So we absolutely have already been ramping up our Trainium and Inferentia supply chain and ramping up the supply that we can create as quickly as possible,” he said.

As AWS helps customers and partners accelerate their generative AI efforts, the company will invest in and grow its capabilities in a variety of ways.

“We will continue to invest in our own Titan models, to work with a number of valuable partners who make their models available on Bedrock, and to develop generative AI-powered applications,” Selipsky said.

AWS Hires Top Intel AI Executive Kavitha Prasad

CRN learned that Kavitha Prasad recently left Intel as vice president and general manager of Intel’s Data Center and AI Cloud Execution and Strategy Group to become general manager of perimeter protection at AWS.

AWS’ perimeter protection business includes services like AWS Shield and AWS Firewall Manager that protect customers’ applications and infrastructure from attacks.

Prasad had led Intel’s data center AI strategy as part of the company’s renewed push to compete against Nvidia. She was previously Intel’s general manager of Data Center and AI Cloud Execution and Strategy Group.

In that role, Prasad led Intel’s “overall AI strategy and execution efforts” and was also responsible for “developing [the Data Center and AI Group’s] strategy for next-generation data center solutions, cloud architecture solutions, and deployment systems,” according to a 2022 memo seen by CRN.

AWS Hires Intel’s Former Cloud Services Leader Raejeanne Skillern

AWS recently hired Intel’s former cloud services leader Raejeanne Skillern as its new vice president and global chief marketing officer.

“We are excited to have her on board, and we are confident that her vast experience and expertise will significantly impact AWS,” Matt Garman, senior vice president of AWS Sales and Marketing, said in July.

Skillern spent a whopping 15 years at Intel, most recently as vice president of Intel’s Cloud Service Provider Business, where she managed Intel’s business and products for cloud infrastructure deployments.

She led the team that worked with the world’s largest cloud providers—AWS, Microsoft and Google Cloud—to ensure that Intel’s data center portfolio of products was optimized.

Skillern first joined Intel in 2002 as director of enterprise server demand creation marketing. She climbed the executive ladder at Intel over the years to become vice president of Intel’s Cloud Service Provider Business in 2014.

Anthropic CEO, Former VP At OpenAI, Weighs In On AWS Chips

Dario Amodei, co-founder and CEO of Anthropic, is bullish about Amazon’s $4 billion investment in his startup. It is key to note that Amodei previously worked at AI rival OpenAI for years, including as OpenAI’s vice president of research, before creating Anthropic in early 2021.

“We are excited to use AWS’s Trainium chips to develop future foundation models,” said Amodei in a statement. “Since announcing our support of Amazon Bedrock in April, Claude has seen significant organic adoption from AWS customers.”

Anthropic Claude is the company’s next-generation AI assistant based on the company’s top-notch research into training AI systems. Claude is currently available in the U.S. and U.K. In July, Anthropic launched Claude 2 with improved performance, longer responses and accessibility via API as well as a new public-facing website.

“By significantly expanding our partnership, we can unlock new possibilities for organizations of all sizes, as they deploy Anthropic’s safe, state-of-the-art AI systems together with AWS’s leading cloud technology,” said Amodei.

Generative AI Customers Expected to ‘Ramp Up’ Quite Steeply; SageMaker Now Has Over 100,000 Customers

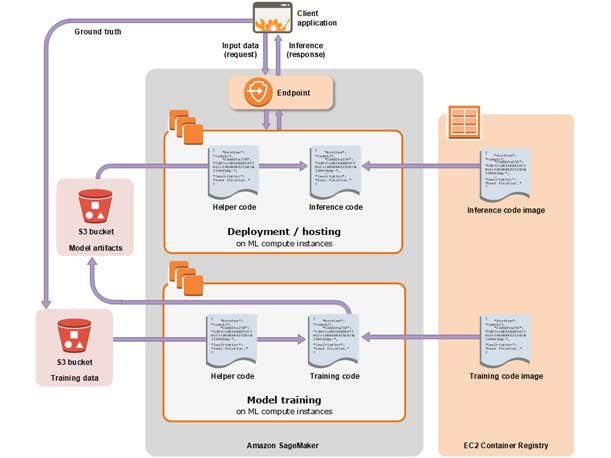

To show that AWS is already deeply connected to the generative AI world with existing customers, Selipsky let it be known that AWS SageMaker already has over 100,000 customers.

“Look, AWS has had machine learning services since at least 2017 when we released our SageMaker machine learning service, which has over 100,000 AWS customers on it. So we’ve been doing machine learning for a long time inside of AWS,” said Selipsky.

“Obviously, more recently we’ve had a significant number of generative AI customers. We will certainly continue to ramp up, I anticipate, quite steeply,” he added.

The AWS CEO said his company, already an $85 billion cloud business, has many sources of growth.

“We are a scaled and relatively sizable business at this point and customers are running their data platforms on AWS. They are building out more and more applications for things like supply chain and contact center management on AWS,” said Selipsky. “There’s still a whole lot of storage and compute and database workloads ramping up on AWS. So we have many sources of growth, I anticipate, but there’s absolutely no doubt that generative AI looks like it’s going to be an explosive additional source of growth in the years ahead.”