Microsoft Azure Gets New Nvidia Service For Developing Custom GenAI Apps

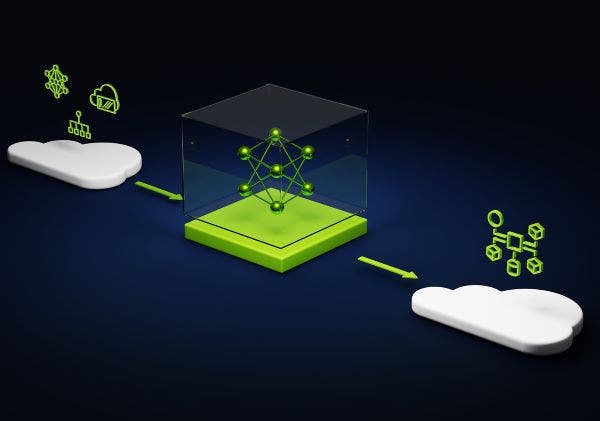

Nvidia AI Foundry gives businesses a collection of ‘commercially viable’ generative AI foundation models, developed by Nvidia and third parties, that they can customize using Nvidia’s NeMo framework and tools on the company’s DGX Cloud AI supercomputing service hosted by Microsoft Azure.

Microsoft Azure is getting a new service from Nvidia that is designed to let businesses develop, fine-tune and run custom generative AI applications using proprietary data.

Announced at Microsoft Ignite 2023 on Wednesday, the new service is called Nvidia AI Foundry, and it gives businesses a collection of “commercially viable” GenAI foundation models, developed by Nvidia and third parties, that they can customize using Nvidia’s NeMo framework and tools.


All of this runs on Nvidia’s DGX Cloud AI supercomputing service, which is built on Nvidia GPU-powered Azure instances and comes with all the software elements needed to develop and deploy AI applications in the cloud, including the Nvidia AI Enterprise software suite.

Among the first companies to use Nvidia AI Foundry are SAP, Amdocs and Getty Images.

“Any customer of Microsoft can come and do this entire enterprise generative AI workflow with Nvidia on Azure,” Manuvir Das, vice president of enterprise computing at Nvidia, said in a briefing.

The launch of Nvidia AI Foundry on Microsoft Azure coincides with the arrival of the cloud computing giant’s NC H100 v5 VM series, the first cloud instances in the industry to use Nvidia’s H100 NVL GPUs. The H100 NVL combines two PCIe-based H100 GPUs to provide nearly 4 petaflops of AI compute and 188GB of HBM3 high-bandwidth memory.

Microsoft also announced that it plans to launch cloud instances next year that will use Nvidia’s recently disclosed H200 GPU, which offers 141GB of HBM3e memory and 4.8 TB/s of memory bandwidth, a big increase from the H100’s 80GB of HBM3 memory and 3.5 TB/s of bandwidth.
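To put that “big increase” in concrete terms, here is a quick back-of-the-envelope comparison in Python. It is only a sketch that uses the figures quoted in this article (80GB and 3.5 TB/s for the H100, 141GB and 4.8 TB/s for the H200) rather than an official spec sheet, and the `ratio` helper is a hypothetical name chosen for illustration.

```python
"""Compare H200 and H100 memory figures as quoted in the article."""

# Figures as reported in the article; treat them as the article's numbers,
# not as an authoritative Nvidia spec sheet.
H100 = {"memory_gb": 80, "bandwidth_tb_s": 3.5}
H200 = {"memory_gb": 141, "bandwidth_tb_s": 4.8}


def ratio(new: float, old: float) -> float:
    """Hypothetical helper: how many times larger `new` is than `old`, to two decimals."""
    return round(new / old, 2)


# Generational gains in memory capacity and memory bandwidth.
memory_gain = ratio(H200["memory_gb"], H100["memory_gb"])
bandwidth_gain = ratio(H200["bandwidth_tb_s"], H100["bandwidth_tb_s"])

if __name__ == "__main__":
    print(f"Memory capacity: {memory_gain}x, memory bandwidth: {bandwidth_gain}x")
```

By these numbers, the H200 carries roughly 1.76 times the memory capacity and about 1.37 times the memory bandwidth of the H100.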

How Nvidia AI Foundry Works

Nvidia AI Foundry is part of the AI chip giant’s bid to become what CEO Jensen Huang describes as a “full-stack computing company.” This translates into the chip designer providing the essential hardware and software for powering advanced computers, from GPUs, CPUs and networking components, to systems and reference designs, to various layers of software.

At the foundation of Nvidia AI Foundry is the DGX Cloud service. Announced earlier this year, the service combines Nvidia’s Base Command software platform and the Nvidia AI Enterprise software suite with the company’s DGX supercomputer systems, which are powered by either its flagship H100 data center GPUs or the previous-generation A100 GPUs.

Base Command manages and monitors AI training workloads, and it also lets users right-size the infrastructure they need for such workloads. AI Enterprise, meanwhile, provides the AI frameworks and tools needed for developing and deploying AI applications.

“Importantly, customers of DGX Cloud have the capability to work directly with Nvidia engineering to optimize their workloads when they come and work on DGX Cloud,” Das said.

To let businesses develop custom GenAI applications, Nvidia is offering two additional components as part of Nvidia AI Foundry. The first is a collection of what the company calls Nvidia AI Foundation models, which consist of large language models developed by Nvidia that businesses can customize.

The AI Foundation collection includes Nvidia’s new Nemotron-3 8B models, which include versions tuned for different use cases such as chatbots and offer multilingual capabilities.

“It is a mission for Nvidia on behalf of enterprise customers now to continually produce and update these variants of these models, both in terms of parameter size, in terms of the datasets they’re trained on, in terms of the capabilities, and all with responsibly sourced data that we are able to share with our enterprise customers so that they know the antecedents of the model,” Das said.

Nvidia is also making available third-party AI models such as Meta’s Llama 2 that have been optimized to run on the company’s hardware and software.

To customize these models, businesses can use Nvidia’s NeMo framework and tools, which include methods for extracting proprietary datasets to use in models, fine-tuning those models and applying guardrails to ensure the models are used properly and safely.

DGX Cloud and AI Enterprise are available on Azure Marketplace. AI Enterprise, which includes NeMo, is also integrated into Microsoft’s Azure Machine Learning service. The models developed by Nvidia and third parties are available in the Azure AI Model catalog.