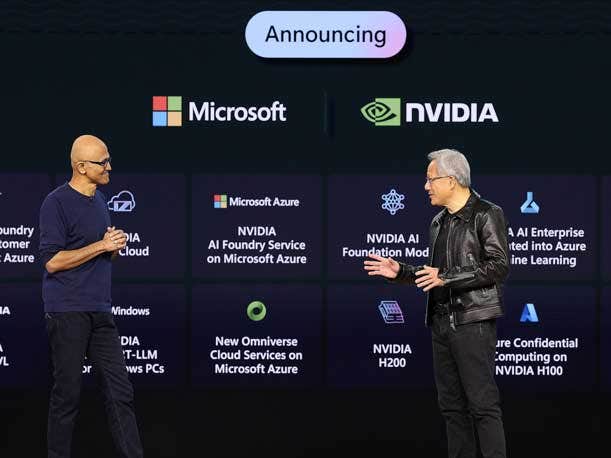

Microsoft Ignite 2023: Nvidia CEO Huang Says Microsoft Is Now ‘More Collaborative And Partner-Oriented’

‘You invited Nvidia’s ecosystem, all of our software stacks, to be hosted on Azure. … And as a result, we’re ecosystem partners. … There’s just a profound transformation in the way that Microsoft works with the ecosystem,’ says Nvidia CEO Jensen Huang while sharing the stage with Microsoft CEO Satya Nadella at Ignite.

One of the standout speakers at Microsoft’s annual Ignite conference this week wasn’t even a Microsoft employee.

Nvidia co-founder and CEO Jensen Huang made bold proclamations about the importance of generative artificial intelligence in computing and praised Microsoft as “much more collaborative and partner-oriented” while sharing the stage with Microsoft Chairman and CEO Satya Nadella during the latter’s Ignite 2023 keynote speech.

“You really deserve so much credit for transforming Microsoft’s entire culture to be so much more collaborative and partner-oriented,” Huang told Nadella on stage. “You invited Nvidia’s ecosystem, all of our software stacks, to be hosted on Azure. … And as a result, we’re ecosystem partners. … There’s just a profound transformation in the way that Microsoft works with the ecosystem.”


Nvidia CEO Huang Praises Microsoft CEO Nadella

Huang told the audience at Ignite that the partnership has benefited the Santa Clara, Calif.-based chipmaker’s 7 million Cuda developers and the approximately 15,000 startups worldwide that work on Nvidia’s platform.

“The fact that they could now take their stack and without modification run it perfectly on Azure—my developers become your customers,” Huang said. “My developers also have the benefit of integrating with all of the Azure APIs and services, the secure storage, the confidential computing.”

Nadella told the audience that Redmond, Wash.-based Microsoft has also benefited from the Nvidia partnership. For example, he said that “the largest install base on the edge of very powerful AI machines happens to be Windows PCs with GPUs from Nvidia.”

During his keynote, Nadella said that Microsoft has built “the most powerful AI supercomputing infrastructure in the cloud using Nvidia GPUs.”

OpenAI, the Microsoft-backed creator of text generator ChatGPT and image generator Dall-E, “has used this infrastructure to deliver the leading LLMs [large language models] as we speak,” Nadella said.

Nadella also said that Microsoft will add Nvidia’s H200 AI accelerator to its fleet “to support even larger model inferencing with the same latency, which is so important as these models become much bigger, more powerful.”

“The ability for us to have this new generation of accelerators is a big deal,” he said.

Microsoft has made available a preview of Azure Confidential GPU virtual machines co-designed with Nvidia. With these VMs, “you can run your AI models on sensitive data sets on our cloud,” Nadella said.

“If you’re doing what is referred to as retrieval augmented generation, or RAG … running on this confidential GPU VM, you can enrich, for example, your prompt with very query-specific knowledge from proprietary databases, document archives, while keeping the entirety of the process protected end to end,” he said.

Nadella also told the Ignite crowd that Nvidia’s Nemotron-3 family of models is part of the Microsoft model catalog, under the Azure models-as-a-service offering he detailed during his keynote, for users to build general-purpose AI applications.

Microsoft, Nvidia Partnership

Huang was on stage to announce that Nvidia Omniverse—a stack originally on-premises—is now available on Azure Cloud and that Nvidia offers a new service called AI Foundry, which aims to give businesses a collection of commercially viable GenAI foundation models developed by Nvidia and third parties.

On the importance of GenAI in computing, Huang called the technology “the single most significant platform transition in computing history.”

“In the last 40 years, nothing has been this big,” he said. “It’s bigger than PC. It’s bigger than mobile. It’s going to be bigger than the internet.”

GenAI is not just a technological wonder but is also good for business, expanding companies’ total addressable markets, Huang said.

“This is also the largest TAM [total addressable market] expansion of the computer industry in history,” Huang said. “There’s a whole new type of data center that’s now available. Unlike the data centers of the past, this data center is dedicated to one job and one job only. Running AI models and generating intelligence. It’s an AI factory.”

AI factories are a new segment in computer hardware, Huang said, just as copilots—Microsoft’s term for tools that can generate text, code and other content from user queries—are a new segment in software.

If startups are the first wave of GenAI, and copilots and enterprise technology are the second, then GenAI in heavy industries represents the third and “largest wave of all,” Huang said.

“And this is where Nvidia’s Omniverse and generative AI is going to come together to help heavy industries digitalize and benefit from generative AI,” Huang said. “So we’re really, quite frankly, barely in the middle of the first wave. Starting the second wave.”

Nvidia will benefit as a full site license customer of Microsoft copilot, Huang told the crowd. “If you think that Nvidia is moving fast now, we are going to be turbocharged by copilot,” he said.

Huang said he wants Nvidia and Microsoft to do for users building proprietary LLMs to power GenAI applications what Taiwan Semiconductor Manufacturing Co. (TSMC) did for chips.