Nvidia CEO On ‘Joining Forces’ With Google To ‘Reinvent Cloud’ For AI

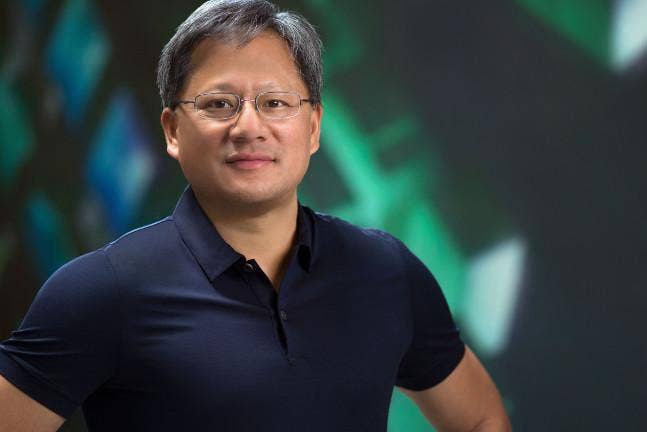

‘Generative AI is revolutionizing every layer of the computing stack, and our two companies—with two of the most talented, deepest computer science and computing teams in the world—are joining forces to reinvent cloud infrastructure for generative AI,’ says Nvidia CEO Jensen Huang.

Nvidia CEO Jensen Huang told thousands of Google partners and customers this week that the two tech titans will be forming a tighter co-innovation and go-to-market relationship to “reinvent cloud infrastructure” for the generative AI era.

“Generative AI is revolutionizing every layer of the computing stack, and our two companies—with two of the most talented, deepest computer science and computing teams in the world—are joining forces to reinvent cloud infrastructure for generative AI,” said Nvidia’s CEO this week on stage with Google Cloud CEO Thomas Kurian at Google Cloud Next 2023.

“We’re starting at every single layer: starting from the chips, H100 for training and data processing, all the way to model serving with Nvidia L4 [GPUs],” Huang said. “This is a re-engineering of the entire stack—from the processors, to the systems, to the networks, and all of the software. All of this is to accelerate GCP [Google Cloud Platform’s] Vertex AI, and to create software and infrastructure for the world’s AI researchers and developers.”


At Google Cloud Next this week, the companies launched several new offerings aimed at helping customers build and deploy large models for generative AI.

These include the integration of Google’s serverless Spark offering with Nvidia GPUs, new Google A3 VM instances powered by Nvidia H100 Tensor Core GPUs, and Google’s PaxML optimized for Nvidia accelerated computing.

Nvidia And Google To ‘Reinvent Software’

PaxML is Google’s new framework for building massive large language models (LLMs), which the companies have been co-engineering for some time. It enables developers to use Nvidia H100 and A100 Tensor Core GPUs for advanced and fully configurable experimentation and scale.

“We’re working together to reengineer and re-optimize the software stack and reinvent software,” said Huang. “The work that we’ve done to create frameworks that allows us to push the frontiers of large language models distributed across giant infrastructures, so that we could: save time for the AI researchers; scale up to gigantic next generation models; save money; save energy—all of that requires cutting-edge computer science.”

“So today we’re announcing the first fruits of our labor called PaxML. This is a large language models framework, built on top of JAX, built on top of OpenXLA, and the labor of love from some amazing computer scientists,” Nvidia’s CEO said. “So this is really, really groundbreaking stuff.”

Google Cloud Among First To Get Nvidia DGX GH200 AI Supercomputer

Google Cloud will be one of the first companies in the world to have access to the Nvidia DGX GH200 AI supercomputer—powered by the Nvidia Grace Hopper Superchip—to explore its capabilities for generative AI workloads.

“Our teams are now starting to build the next-generation processors and the next-generation AI infrastructure. Google and ourselves, we’re going to work on one of the first instances in the world of our next-generation AI supercomputer called DGX GH200, based on a revolutionary new chip: Grace Hopper,” he said. “This is really, really amazing work that we’re doing together.”

Additionally, Nvidia DGX Cloud AI supercomputing and software is set to become available to Google Cloud customers directly from their web browsers, providing speed and scale for advanced training workloads.

“We’re going to put Nvidia DGX Cloud in GCP [Google Cloud Platform],” said Huang. “This is where we do our AI research. This is where we optimize our incredible software stacks. All of this work that we do will instantly benefit GCP and all the people that work on it.”

Google Cloud CEO Thomas Kurian On Nvidia Relationship

Google Cloud and Nvidia have been partnering for years. For example, earlier in 2023, Google Cloud became the first cloud provider to offer Nvidia L4 Tensor Core GPUs with the launch of the G2 VM.

Google Cloud CEO Thomas Kurian said his cloud company is “thrilled” with the innovation it’s launching alongside Nvidia.

Kurian said people often ask him how Google Cloud’s custom-designed TPU accelerators relate to GPUs.

“Very simply put, as AI evolves, the needs on the hardware architecture and software stack evolves—from training to inferencing, to new capabilities like embeddings. And we want to offer customers the broadest, most optimized choice of accelerators,” said Kurian.

“Google is a platforms company at the heart of it. And we want to attract all those developers and customers who love Nvidia’s GPU technology and software,” he said. “So it’s a great, great partnership.”