The 10 Biggest Nvidia News Stories Of 2023 (So Far)

Nvidia is reaping big rewards for making long-term investments ‘in full-stack computing’ capabilities thanks to the explosion in popularity of generative AI models like ChatGPT that rely on the company’s GPUs. CRN reviews the biggest Nvidia news stories of 2023 so far, including developments related to generative AI and cloud.

Nvidia CEO Jensen Huang

No chip company is enjoying the spotlight this year more than Nvidia, whose GPUs have become the primary engines behind popular generative AI applications like ChatGPT.

As explained in CRN’s recent cover story about solution providers building AI businesses, Nvidia has cemented itself as a critical AI vendor in the channel thanks to how it has spent more than a decade developing chips, systems and software that handle several important aspects of AI applications, from processing and networking, to software development and management.

[Related: The 10 Hottest Semiconductor Startups Of 2023 (So Far)]

This has transformed Nvidia from a GPU designer to what CEO Jensen Huang calls a “full-stack computing company” that can deliver entire systems pre-integrated with the company’s software.

Now Nvidia is reaping big rewards for making those long-term investments and strategic decisions thanks to the explosion in popularity of generative AI models like ChatGPT that have prompted many enterprises to consider developing custom models for their own use.

These rewards include a sharp rise in Nvidia’s stock price, a surge in demand for the company’s data center GPUs and several new partnerships to deliver its full-stack computing capabilities.

“We are at the iPhone moment of AI. Startups are racing to build disruptive products and business models while incumbents are looking to respond. Generative AI has triggered a sense of urgency in enterprises worldwide to develop AI strategies,” Huang said in March.

What follows are the 10 biggest Nvidia news stories of 2023 so far, including developments related to generative AI, cloud computing, gaming, chip manufacturing and high-performance computing.

No. 10: Nvidia Brings RTX 40 Series GPUs To Laptops

After launching the RTX 40 series GPUs for desktop PCs last year, Nvidia expanded the lineup in the first half of 2023 with models for gaming and workstation laptops.

The laptop GPUs include several high-end and mid-range options for gaming: the GeForce RTX 4090, RTX 4080, RTX 4070, RTX 4060 and RTX 4050. There are also several for mobile workstations: the RTX 5000, RTX 4000, RTX 3500, RTX 3000 and RTX 2000 GPUs.

All these GPUs are powered by Nvidia’s Ada Lovelace architecture, which features fourth-generation Tensor Cores for accelerating AI technologies such as Nvidia’s image-upscaling DLSS and third-generation RT Cores for real-time ray tracing. The GPUs also come with hardware-based AV1 encoding.

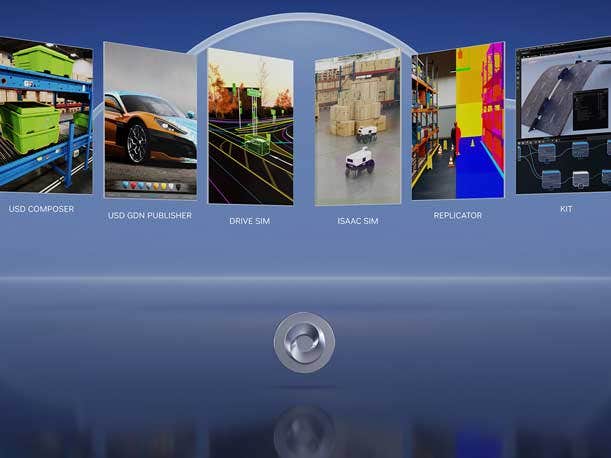

No. 9: Nvidia Expands Omniverse To The Cloud

Nvidia’s ambition to power “industrial metaverse” applications with GPUs, systems and software continued in March with the launch of Omniverse Cloud, its platform-as-a-service for creating and running 3-D internet applications.

Microsoft Azure is the first cloud service provider to offer the subscription-based Omniverse Cloud, which provides access to Nvidia’s full suite of Omniverse software applications on its OVX infrastructure. The chip designer’s OVX servers use its L40 GPU, which is optimized for graphics-heavy applications as well as AI-powered generation of 2-D images, video and 3-D models.

While Nvidia has marketed Omniverse as benefiting a wide swath of industries in the past, the company is focusing its promotional efforts for Omniverse Cloud around the automotive industry, saying it lets design, engineering, manufacturing and marketing teams digitize their workflows.

Applications enabled by Omniverse Cloud include connecting 3-D design tools to speed up vehicle development, creating digital twins of factories to simulate changes in the manufacturing line and performing closed-loop simulations of vehicles in action.

The service will become widely available in the second half of this year.

No. 8: Nvidia CEO Signals Further Interest In Using Intel’s Fabs

In May, Nvidia CEO Jensen Huang gave another signal that he is interested in potentially using Intel’s factories to manufacture some of the company’s chips in the future.

Speaking at Computex 2023 in Taiwan, Huang said he was impressed with the test chip results on a next-generation manufacturing process from Intel, which is seeking to win business from Nvidia and other companies for its revitalized contract chip manufacturing business, Intel Foundry Services.

Huang first indicated interest in using Intel Foundry Services in March 2022, a year after Intel CEO Pat Gelsinger launched the business as part of a shift in the semiconductor giant’s integrated device manufacturing model called IDM 2.0.

“They’re interested in us using their foundries, and we’re very interested in exploring it,” Huang said at the time.

Nvidia mostly relies on Asian contract chip manufacturing giants TSMC and Samsung for chip production, so turning to Intel for some manufacturing needs would help the GPU giant shift some of its supply chain to the West, where Intel is currently expanding its fab footprint in multiple regions.

“Our strategy is to expand our supply base with diversity and redundancy at every single layer,” Huang said in 2022.

Nvidia has also expressed interest in using new TSMC fabs in Arizona that are expected to open in 2024 and 2026.

No. 7: Nvidia Seeks To Entrench Itself In Chip Manufacturing Process

While Nvidia doesn’t manufacture the chips it designs, the GPU giant is hoping to become a more crucial part of the chip-making process through software.

In March, Nvidia revealed cuLitho, a new software library that will use the company’s GPUs to accelerate the performance of computational lithography, a crucial aspect of manufacturing next-generation chips that involves creating patterns on a silicon wafer.

The chip designer said cuLitho is being integrated into processes and systems by TSMC, the world’s largest contract chip manufacturer, as well as electronic design automation vendor Synopsys and chip-making equipment vendor ASML.

Nvidia claimed that cuLitho, paired with its GPUs, can boost computational lithography performance by up to 40 times. By the company’s account, this means 500 Nvidia DGX H100 systems can do the same amount of work as 40,000 CPU-only systems.

No. 6: Nvidia’s Gaming Revenue Continues Recovery After 2022 Plummet

Nvidia’s gaming GPU business continued to recover after revenue suffered from an industry-wide plunge in demand for the processors.

The chip designer’s gaming business began to take a hit in the second quarter of its 2023 fiscal year, which ended on July 31, 2022. In that quarter, gaming revenue dropped 33 percent year-over-year and 44 percent sequentially to $2 billion. Revenue in the third quarter of that fiscal year then dropped 51 percent year-over-year and 23 percent sequentially to $1.5 billion.

Nvidia then reported in February that its gaming revenue for the fourth quarter of its 2023 fiscal year dropped 46 percent year-over-year but increased 16 percent sequentially to $1.8 billion. The recovery continued in the first quarter of its 2024 fiscal year, which ended April 30, with revenue growing 22 percent sequentially to $2.2 billion, a 38 percent decline from the same period last year.

The company has said that its yearly decline in gaming GPU revenue has been due to macroeconomic issues and COVID-19-related disruptions in China. But the chip designer has been able to grow sales again sequentially thanks to its expanding lineup of GeForce RTX 40 series graphics cards.

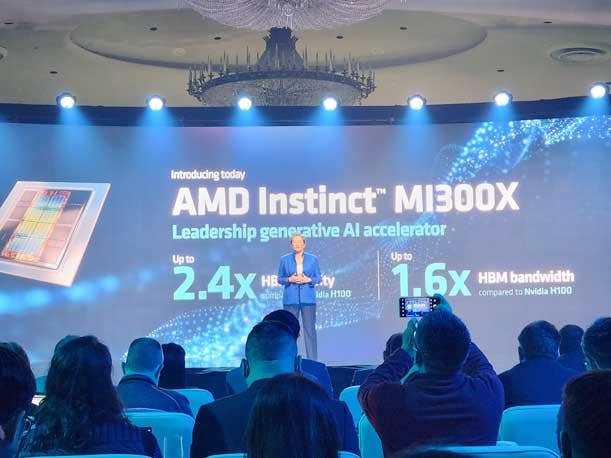

No. 5: Nvidia Faces Mounting Competition From AMD, Intel And Startups

Nvidia may hold the overall lead in market share and performance for GPUs, but Intel, AMD and a slew of startups are seeking to challenge the chip designer’s crown on multiple fronts.

AMD, for instance, revealed its latest challenge to Nvidia’s data center GPUs this year with its Instinct MI300 accelerator series. The upcoming chips include the MI300X, which AMD said provides better efficiency and cost savings for running large language models than Nvidia’s flagship H100 data center GPU. Large language models are a type of AI model that has exploded in demand thanks to ChatGPT.

Like AMD, Intel is looking to steal GPU market share from Nvidia across PC and data center segments. The semiconductor giant’s efforts include the Intel Data Center GPU Max Series processors, which have been installed in the U.S. Department of Energy’s Aurora supercomputer, expected to become one of the fastest in the world when it comes online later this year.

There is also a slew of AI chip startups challenging Nvidia’s dominance with new architectures and computation approaches. These include Tenstorrent, which views the open-standard RISC-V instruction set architecture not just as a viable alternative to x86 CPUs but as a winning strategy for running AI workloads faster than GPUs. There’s also Cerebras Systems, a startup that designs wafer-scale chips the size of dinner plates with hundreds of thousands of cores and dozens of gigabytes of SRAM.

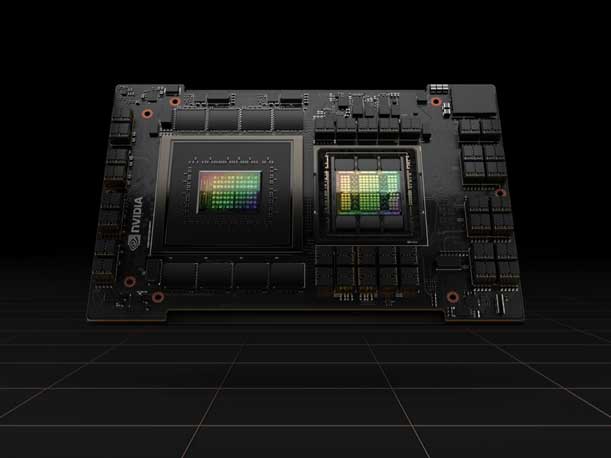

No. 4: Nvidia Begins Production For CPU-GPU Grace Hopper Superchip

In late May, Nvidia said it had begun full production for one of its most ambitious chip projects to date, the Grace Hopper Superchip for complex high-performance computing and AI workloads.

Grace Hopper brings together a Hopper H100 GPU with a 72-core Grace CPU based on Arm’s Neoverse V2 architecture on an integrated module. The CPU is attached to up to 480GB of LPDDR5x memory while the GPU is connected to a maximum 96GB of HBM3 high-bandwidth memory. The CPU and GPU communicate via a high-bandwidth NVLink chip-to-chip interconnect that runs at 900GB/s.

The chip designer is hoping to convince HPC and AI customers to use Grace Hopper in systems instead of an x86 CPU paired with a GPU for certain workloads.

Nvidia claims that Grace Hopper can run 2.5 times to 3.9 times faster on certain HPC workloads than an x86 CPU paired with the company’s discrete A100 GPU from the previous generation. It provides smaller improvements over an x86 CPU combined with Nvidia’s latest flagship GPU, the H100.

As for AI workloads, Nvidia said Grace Hopper can run a graph neural network 1.9 times faster and a deep learning recommendation model 3.5 times faster compared to an x86 CPU paired with an H100.

Nvidia said systems with Grace Hopper Superchips are expected later this year. These systems include Nvidia’s DGX GH200 AI Supercomputer, which brings together 256 superchips to provide 1 exaflop of FP8 performance and 144TB of shared memory.
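The DGX GH200 figures can be roughly cross-checked against the per-superchip specifications quoted earlier. The short Python sketch below is only a back-of-the-envelope illustration: it assumes every superchip carries the maximum 480GB of CPU memory and 96GB of GPU memory, that shared memory is simply the sum across all 256 superchips, and that a terabyte is counted as 1,024GB. The variable and function names are made up for this example.

```python
# Figures quoted in the article
SUPERCHIPS = 256
CPU_MEMORY_GB = 480      # LPDDR5x per Grace CPU (upper limit)
GPU_MEMORY_GB = 96       # HBM3 per Hopper GPU (upper limit)
SYSTEM_FP8_EXAFLOPS = 1  # FP8 performance claimed for the full system

# Assumption for this sketch: 1TB is counted as 1,024GB
GB_PER_TB = 1024
PETAFLOPS_PER_EXAFLOP = 1000


def shared_memory_tb(chips: int, cpu_gb: int, gpu_gb: int) -> float:
    """Sum CPU and GPU memory across all superchips and convert to TB."""
    return chips * (cpu_gb + gpu_gb) / GB_PER_TB


total_tb = shared_memory_tb(SUPERCHIPS, CPU_MEMORY_GB, GPU_MEMORY_GB)

# Implied FP8 throughput per superchip if the 1 exaflop is split evenly
fp8_pflops_per_chip = SYSTEM_FP8_EXAFLOPS * PETAFLOPS_PER_EXAFLOP / SUPERCHIPS

print(f"Shared memory: {total_tb:.0f}TB")
print(f"FP8 per superchip: {fp8_pflops_per_chip:.2f} petaflops")
```

Under those assumptions, the memory total lands on the 144TB that Nvidia cites, and the 1 exaflop works out to just under 4 petaflops of FP8 performance per superchip.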

No. 3: Nvidia Wants To Play Bigger Role In Generative AI Development

While Nvidia’s GPUs have been the go-to processor for many generative AI models, the chip designer wants to play a bigger role in how such models are developed.

The company hopes to accomplish this with the introduction of new services, announced in March and collectively known under the umbrella Nvidia AI Foundations, meant to help enterprises build custom generative AI models using their own proprietary data.

Nvidia has announced partnerships with multiple vendors, including Snowflake, Microsoft Azure and Dell Technologies, to deliver these capabilities.

The services, which run on Nvidia’s new DGX Cloud AI supercomputing service, come with pretrained models, frameworks for data processing, vector databases and personalization features, optimized inference engines, APIs and enterprise support.

NeMo is a large language model customization service that includes methods for businesses to extract proprietary data sets and use them for generative AI applications such as chatbots, enterprise search and customer service. NeMo is available in early access.

Picasso is focused on generating AI-powered images, videos and 3-D models from text prompts for creative, design and simulation applications. The service allows enterprises to train Nvidia’s Edify foundation models on proprietary data. The service also comes with pretrained Edify models that are based on fully licensed data sets. Picasso is available in preview mode.

BioNeMo lets pharmaceutical and life sciences organizations develop custom AI foundation models using proprietary data and pretrained, open-source models for drug discovery as well as research in genomics, chemistry, biology and molecular dynamics.

No. 2: Nvidia Seeks Bigger Role In Cloud With DGX Cloud Platform

Nvidia wants to play a bigger role in cloud computing infrastructure and give enterprises quicker ways to build AI and metaverse applications with its new DGX Cloud AI supercomputing service.

Announced in detail at Nvidia’s GTC 2023 event in March, DGX Cloud is based on the chip designer’s purpose-built DGX systems and AI software, serving as infrastructure and software layers hosted by cloud service providers. DGX Cloud instances are now available from Oracle Cloud Infrastructure, and more are coming later in the year from Microsoft Azure, Google Cloud and other providers.

“By putting Nvidia’s DGX supercomputers into the cloud with Nvidia DGX Cloud, we’re going to democratize the access of this infrastructure and, with accelerated training capabilities, really make this technology and this capability quite accessible,” Nvidia CEO Jensen Huang said in February.

At the software layer, DGX Cloud includes Nvidia’s Base Command Platform, which manages and monitors deep learning workloads, allowing users to right-size the infrastructure for what they need. The service also includes access to Nvidia AI Enterprise, a software suite that includes AI frameworks, pretrained models and tools for developing and deploying AI applications.

When Nvidia first teased DGX Cloud in February, Huang said the service will drive demand for cloud service providers because Nvidia has many customers, including AI startups and enterprises, who want to run AI applications in the cloud, in hybrid cloud configurations or in multiple cloud services.

“We’re going to be the best AI salespeople for the world’s clouds,” he said.

No. 1: Generative AI Demand Sends Nvidia Stock Sky High

Nvidia is reaping big rewards on Wall Street so far this year thanks to generative AI applications like ChatGPT driving huge demand for the company’s profit-rich data center GPUs.

To date, the chip designer’s stock price has increased more than 180 percent, from $143.50 per share on Jan. 3 to around $412.64 on Wednesday, June 28. Barring any pronounced downswings, this has pushed Nvidia’s market capitalization past the $1 trillion mark, putting the company in the same club as Amazon, Alphabet, Microsoft and Apple.
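The size of that gain follows directly from the two share prices. As a minimal sketch, the Python snippet below applies the standard percent-change formula to the Jan. 3 and June 28 prices quoted above; the function and variable names are illustrative only.

```python
def percent_change(start: float, end: float) -> float:
    """Return the percentage change from start to end."""
    return (end - start) / start * 100.0


# Share prices quoted in the article
START_PRICE = 143.50  # Jan. 3
END_PRICE = 412.64    # June 28 (approximate)

gain = percent_change(START_PRICE, END_PRICE)
print(f"Year-to-date gain: {gain:.1f} percent")
```

The result comes to roughly 188 percent, in line with the “more than 180 percent” increase described above.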

In May, Nvidia said it estimates revenue in the second quarter to grow 64 percent year-over-year to $11 billion, largely thanks to generative AI models driving up demand for data center GPUs and other products.

“We expect this sequential growth to largely be driven by data center, reflecting a steep increase in demand related to generative AI and large language models,” Nvidia CFO Colette Kress said in May. “This demand has extended our data center visibility out a few quarters, and we have procured substantially higher supply for the second half of the year.”

The fast growth projected by Nvidia comes after an initial surge in popularity for generative AI models helped the chip designer recover from a slowdown in the market. This was seen in the company’s first-quarter revenue of $7.2 billion, which was down 13 percent year-over-year but 19 percent higher than the previous quarter.
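For readers who want to see what the growth rates imply, the earlier revenue figures can be backed out from the numbers above. The Python sketch below is only an approximation built on the rounded figures in this article ($11 billion, $7.2 billion and the stated percentages), so the results are estimates and not Nvidia’s reported numbers. The helper name `prior_value` is made up for this example.

```python
def prior_value(current: float, pct_change: float) -> float:
    """Back out the earlier value from the current value and its percent change."""
    return current / (1 + pct_change / 100.0)


# Rounded figures quoted in the article, in billions of dollars
Q2_GUIDANCE = 11.0  # projected second-quarter revenue, up 64 percent year-over-year
Q1_REVENUE = 7.2    # first-quarter revenue, down 13 percent year-over-year, up 19 percent sequentially

q2_prior_year = prior_value(Q2_GUIDANCE, 64)   # implied revenue in the year-ago second quarter
q1_prior_year = prior_value(Q1_REVENUE, -13)   # implied revenue in the year-ago first quarter
prior_quarter = prior_value(Q1_REVENUE, 19)    # implied revenue in the preceding quarter

# Implied quarter-over-quarter growth from the first quarter to the second-quarter guidance
sequential_growth = (Q2_GUIDANCE / Q1_REVENUE - 1) * 100

print(f"Implied year-ago Q2 revenue: ${q2_prior_year:.1f} billion")
print(f"Implied year-ago Q1 revenue: ${q1_prior_year:.1f} billion")
print(f"Implied prior-quarter revenue: ${prior_quarter:.1f} billion")
print(f"Implied sequential growth to Q2 guidance: {sequential_growth:.0f} percent")
```

On these rounded inputs, the guidance implies sequential growth of a little over 50 percent, which helps explain why the forecast drew so much attention on Wall Street.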