Google AI Head On Data Privacy, GenAI Lead Vs. AWS, Microsoft

‘There’s so many examples of this with public models and a lot of the models that are from our competitors, where information has been pushed into these models in a public way. And you can actually ask questions about that information that is supposed to be very private,’ Google’s Philip Moyer tells CRN.

After working for Microsoft, Google and Amazon Web Services for a combined 20 years, Philip Moyer believes generative AI may be the most significant opportunity in the history of the IT industry, with Google in a prime position to beat the competition.

Moyer, global vice president of Google’s artificial intelligence business, said his company’s AI strategy around trustless computing and providing private AI models where customers are in complete control of their data is a major advantage for Google versus public models.

“There’s so many examples of this with public models and a lot of the models that are from our competitors, where information has been pushed into these models in a public way. And you can actually ask questions about that information that is supposed to be very private,” said Moyer.

“I was with a toy manufacturer over in Europe and someone had used their price list, pushed it into one of the big public models, and people could see the precise profit they were making on every single toy,” he said. “Like, I could probably go query that model today and get the profit margin on that price. So public models are not private—that’s big.”

AI Data Privacy: ‘We Actually Back It Up In Our Contracts’

Google, Microsoft and AWS have been investing billions in artificial intelligence in 2023, specifically around generative AI. The three tech giants are the worldwide leaders in cloud computing and are injecting innovative AI technology into their most popular products, while also launching brand new generative AI solutions.

“I have never seen customer demand like what I’m seeing today. And I’ve worked at all the big three,” Moyer told CRN. “I will tell you, from the developers all the way through the board room, everyone is interested in executing on generative AI and using [generative] AI to transform their business.”

For the past four years, Moyer has been responsible for the global business and commercialization of Google Cloud’s AI offerings including generative AI, industry products and strategic AI partnerships.

Prior to joining Google in 2019, Moyer was managing director of global financial services at AWS in charge of AWS sales, technical and customer experience teams that supported some of AWS’ largest customers. He started his IT career at Microsoft, spending nearly 15 years at the software giant from 1991 until 2005 in various general manager roles.

“Google was the first to publish our privacy stance on AI: your data is your data; your training is your training; and your inference is your inference,” he said. “We back that up not just with blog posts, we actually back it up in our contracts.”

In an interview with CRN, Moyer talks about Google’s AI market differentiation versus AWS and Microsoft, as well as the “extraordinary” opportunities for Google partners today around generative AI.

What is Google’s market differentiation versus Microsoft and AWS when it comes to data privacy in AI?

Google was the first to publish our privacy stance on AI: your data is your data; your training is your training; and your inference is your inference.

We back that up not just with blog posts, we actually back it up in our contracts. We back it up in our technology. We have what’s called Access Transparency Logs. We back it up with our own SOC [Service Organization Controls compliance]. So we back it up in the contracts that we do with our customers.

Our data privacy [differentiation] came out of all the learnings that we’ve had in Google Search and with our other products like YouTube.

The privacy of information has to be a part of our brand. People trust us with searches across a wide variety of things. And if they don’t trust us with AI, they’re not going to use us. So we built that into our fabric in a whole variety of different ways.

Inside of Google, we have this notion of trustless computing, where at the lowest level, we have architected a security environment [where] the entire fabric of Google is built on this notion of trustless computing. It is foundational. A big part of why a lot of people trust the cloud is that trustless-based computing.

What other AI market differentiators does Google have versus AWS and Microsoft?

Our openness is really important. We have over 3,000 researchers that have produced 7,000 papers that we’ve open sourced into the marketplace.

We have this deep commitment around openness when it comes to AI. The genesis of it was researchers and papers. Now you’re seeing it in terms of the actual products that we’re releasing. So that openness first and foremost, and the sheer number of choices you have on Google Cloud, I would say as a differentiator.

The second thing is: how quickly we help organizations implement safely and accurately. What we announced at Google Cloud Next with Enterprise Search and the ability to do citation is a really important thing, especially in the regulated industries.

Most organizations I find stand at the side of the swimming pool, because they’re afraid when they jump in, they’re going to have a use case that’s regrettable in a regulated industry.

We give you the ability to be able to see precisely where the answer is coming from when you’re using that technology. It’s making organizations go, ‘Wow, I can do something safely and quickly.’ There’s some great examples like MSCI, a big organization in the financial data markets, being able to do things around data extraction for ESG [environmental, social, governance]. We are working with HCA Healthcare, taking clinical notes and turning it into care documentaries and summarizing clinical notes.

That ability to get started quickly in a safe way is a really critical hallmark for us.

What are some unique AI techniques Google uses that give you a leg up in the market?

In AI, we use a technique that’s called LoRA [Low-Rank Adaptation]. LoRA creates what’s called an adapter layer, and that adapter layer is the customer’s.

So when I’m training all of the information and all the questions that get asked, it lives inside of that LoRA layer. It doesn’t live inside of our large model. Our model is frozen and the LoRA model is part of Google’s tenant that the customer owns. We wrap that with bring your own keys, so customer-managed encryption keys. We support it with everything that we do inside of that trustless computing environment. It wraps around that LoRA layer.
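The idea Moyer describes, a frozen base model plus a small trainable adapter, can be illustrated with a toy sketch. The code below is not Google's implementation; it is a minimal pure-Python rendering of Low-Rank Adaptation under stated assumptions. The names `LoRALinear`, `sgd_step` and `matvec` are hypothetical, the layer is a single tiny matrix, and the adapter matrix `A` uses a fixed starting value instead of the random initialization a real system would likely use.

```python
def matvec(m, v):
    """Multiply a matrix (sequence of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


class LoRALinear:
    """Toy linear layer: frozen base weight W plus a trainable low-rank update B @ A."""

    def __init__(self, base_weight, rank, alpha=1.0):
        d_out, d_in = len(base_weight), len(base_weight[0])
        # The base weight is stored as immutable tuples: training cannot change it.
        self.W = tuple(tuple(row) for row in base_weight)
        # Assumption: fixed small values for A so the demo is deterministic.
        self.A = [[0.1] * d_in for _ in range(rank)]
        # B starts at zero, so the adapter initially leaves the base output unchanged.
        self.B = [[0.0] * rank for _ in range(d_out)]
        self.scale = alpha / rank

    def forward(self, x):
        base = matvec(self.W, x)                    # frozen path
        delta = matvec(self.B, matvec(self.A, x))   # adapter path
        return [b + self.scale * d for b, d in zip(base, delta)]

    def sgd_step(self, x, target, lr=0.01):
        """One gradient step on 0.5 * ||y - target||^2 that updates only A and B."""
        h = matvec(self.A, x)
        err = [y - t for y, t in zip(self.forward(x), target)]
        # Gradient flowing back through B, computed before B is updated.
        bt_err = [sum(self.B[i][k] * err[i] for i in range(len(err)))
                  for k in range(len(h))]
        for i in range(len(self.B)):
            for k in range(len(h)):
                self.B[i][k] -= lr * self.scale * err[i] * h[k]
        for k in range(len(self.A)):
            for j in range(len(x)):
                self.A[k][j] -= lr * self.scale * bt_err[k] * x[j]
        return 0.5 * sum(e * e for e in err)


# Demo: adapt the layer to one example without touching the base weight.
base = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
layer = LoRALinear(base, rank=1)
x, target = [1.0, 2.0, 3.0], [2.0, 1.0]
first_loss = layer.sgd_step(x, target)
for _ in range(500):
    last_loss = layer.sgd_step(x, target)
```

In this sketch everything learned from the example lives in `A` and `B`, while `W` stays exactly as it was supplied. That separation is what would let an adapter be stored, encrypted and owned apart from the shared base model.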

Then our conversational AI, our enterprise search, all of that lives inside of your GCP tenant that you and you alone own. So when customers are submitting information to train on or asking questions, we don’t see those. It actually stays inside of the customer’s tenant.

It’s probably what I spend the most time on with customers, to get them comfortable that our model doesn’t get smarter based on their usage of it.

There’s so many examples of this with public models, and a lot of the models that are from our competitors, where information has been pushed into these models in a public way. And you can actually ask questions about that information that is supposed to be very private.

I was with a toy manufacturer over in Europe and someone had used their price list, pushed it into one of the big public models, and people could see the precise profit they were making on every single toy. Like, I could probably go query that model today and get the profit margin on that price.

So public models are not private. That’s big. It’s one of the reasons why we were so measured before we brought [Google AI technology] out. We wanted to make sure we were able to bring it out and adhere to all the privacy requirements that we’ve adhered to internally, and also that our brand is known for and that our cloud is known for.

What are some new Google AI products that stick out to you?

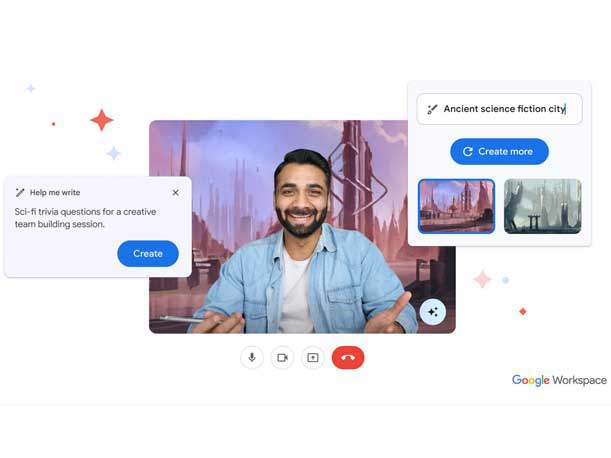

Everything we’re launching in Workspace is really exciting. I don’t know about you, but I’m going to use that new [Google Meet] feature of ‘attend a meeting for me.’ It’s going to give us a day back in our lives.

Generally, we’re doing two different things. One, we’re building for the enterprise and simultaneously making AI more personal. So the things that we’re doing in Workspace, what we’re doing with Duet AI, even down to the software development, we’re making the individual more and more productive, as we’re also building out this infrastructure to make the organization more productive. You get the benefits from the individuals and the benefits from the professionals all together. Two sides of the AI coin, if you will.

On the enterprise side, a really important one that I’m really excited about is the Cross-Cloud Interconnect. It’s a really big statement because we can run our generative AI on Google, and we could be reaching out and actually doing vector work on other clouds. So we can be taking data from other clouds and bring it into our generative AI. So you don’t have to move all your data just to get the value out of our generative AI.

People are realizing we’re the most open cloud.

We announced BigQuery Omni so our data platform can live on other clouds. We announced our Kubernetes GKE that can live on other clouds and on-prem. We announced AlloyDB, where its PostgreSQL store can live on other clouds. Then we have over 100 models on us.

So everything from choice in TPUs and GPUs; choice in your cloud and your infrastructure and your connectivity; choice in models; and then choice in applications and even plug-ins that live on top of that: it’s a fantastic opportunity for partners to really take advantage of. If they have done some work on other clouds, they are now able to join that together with the best of Google’s generative AI to be able to bring value to their customers.

What kind of message or drumbeat are you sending to Google partners?

I have never seen customer demand like what I’m seeing today. And I’ve worked at all the big three. I’ve never had to do as many board meetings as I’ve had to in the past 160 days. I will tell you, from the developers all the way through the board room, everyone is interested in executing on generative AI and using [generative] AI to transform their business.

In that same past 160 days, we’ve come out with over a dozen products.

The market for system integrators is extraordinary. Every customer I speak to needs some assistance with doing their first, second, third gen AI application and then actually building this at scale inside of the organization.

Most organizations are really struggling with things like prompt management, ‘How do I do basic prompt management?’ Most organizations are trying to figure out the skills that they need in this space. And most organizations are asking how they build these privacy foundations to be able to manage accuracy at scale. Most of the major GSIs and SIs that are out there are seeing this opportunity.

Across the GSIs that we work with, over 150,000 people have been trained already in our generative AI technologies. The largest GSIs have committed to a 5x increase on skills. So quintuple the skillset that’s available in these products.

The need from customers from top to bottom is extraordinary. Simply put, the opportunity for SIs to bring more value to their customers has never been greater.