The 10 Coolest New AWS Tools Of 2022 (So Far)

From new deployment and configuration tools to EC2 instances, here are 10 cool AWS tools launched this year by the worldwide cloud market-share leader.

Amazon Web Services has already launched an avalanche of new tools this year to better enable customers, technologists, channel partners and developers around AWS’ ever-growing portfolio of cloud offerings.

From new automation and configuration tools to new EC2 instances, the Seattle-based public cloud market-share leader is creating new cloud offerings in 2022 at a rapid pace. AWS’ red-hot innovation engine is continuing to drive sales for the company this year.

For its recent first fiscal quarter, AWS generated sales of $18.4 billion, representing an increase of 37 percent year over year.

This means AWS is now running at an annualized revenue run rate of $74 billion. The company reported operating income of $6.5 billion for its first quarter, up significantly from $4.2 billion for the same period one year before.
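The run-rate figure follows from simple arithmetic on the numbers quoted above. A minimal sketch, assuming the run rate is just the quarterly figure multiplied by four; the function names are illustrative and the prior-year revenue is implied from the growth rate, not a reported number:

```python
# Figures quoted in the article, in billions of US dollars.
QUARTERLY_REVENUE = 18.4
YOY_GROWTH = 0.37
OPERATING_INCOME = 6.5
PRIOR_OPERATING_INCOME = 4.2


def annualized_run_rate(quarterly):
    """Annualize a quarterly figure by multiplying it by four."""
    return quarterly * 4


def implied_prior_year(current, growth):
    """Back out the year-ago figure implied by a growth rate (an estimate)."""
    return current / (1 + growth)


def pct_change(new, old):
    """Percentage change from old to new."""
    return (new - old) / old * 100


run_rate = annualized_run_rate(QUARTERLY_REVENUE)
prior_revenue = implied_prior_year(QUARTERLY_REVENUE, YOY_GROWTH)
income_growth = pct_change(OPERATING_INCOME, PRIOR_OPERATING_INCOME)

print(f"Annualized run rate: ${run_rate:.1f} billion")  # 73.6, rounded to 74 in the text
print(f"Implied year-ago quarterly revenue: ${prior_revenue:.1f} billion")
print(f"Operating income growth: {income_growth:.0f} percent")
```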

AWS also continues to build out its customer base and service offerings by forming new strategic partnerships with industry titans like IBM and Ingram Micro.

In addition, the cloud computing superstar was officially awarded the U.S. National Security Agency cloud contract this year, a deal worth up to $10 billion, besting its cloud rivals.

AWS, which made CRN’s 10 Hot Software-Defined Cloud Data Center Companies list in 2022, is one of the most important and influential IT companies in the world today, with no plans to slow down sales or innovation.

CRN breaks down the 10 coolest new AWS tools of 2022 so far.

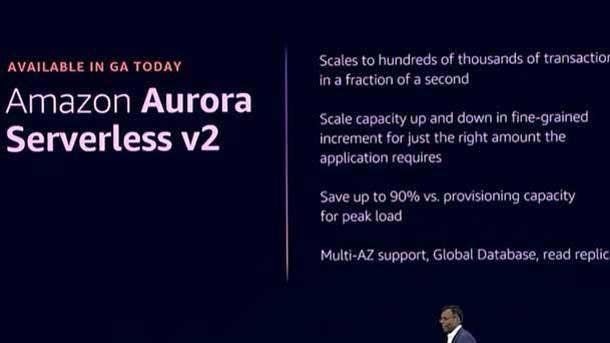

Aurora Serverless v2 For On-Demand Autoscaling Configuration

AWS’ new Amazon Aurora Serverless v2 automatically scales to hundreds of thousands of transactions in a fraction of a second to support the most demanding applications.

Amazon’s Aurora Serverless v2 scales capacity in increments based on an application’s needs, providing customers with up to 90 percent cost savings compared with provisioning database capacity for peak load, AWS said. The offering also provides the full breadth of Amazon Aurora’s capabilities, including using multiple AWS Availability Zones for high availability, Global Database for local reads with low latency, Read Replicas for high performance and Parallel Query for faster querying.

“Amazon Aurora is the first relational database built from the ground up for the cloud,” said Swami Sivasubramanian, vice president of databases, analytics and machine learning at AWS, in a statement. “With the next generation of Amazon Aurora Serverless, it is now even easier for customers to leave the constraints of old-guard databases behind and enjoy the immense cost savings of scalable, on-demand capacity with all of the advanced capabilities in Amazon Aurora.”

There are no up-front commitments or additional costs to use Amazon Aurora Serverless v2, and customers only pay for the database capacity used.

Amazon Aurora Serverless v2 is generally available today for MySQL- and PostgreSQL-compatible editions of Amazon Aurora.
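For readers who want to see what the autoscaling configuration might look like in practice, here is a minimal sketch. It assumes the boto3 RDS client's `create_db_cluster` call and its `ServerlessV2ScalingConfiguration` parameter; the cluster name and the capacity bounds are hypothetical, and the helper function is illustrative only, so check the current AWS documentation before relying on it.

```python
def serverless_v2_cluster_params(cluster_id, engine, min_capacity, max_capacity):
    """Build keyword arguments for a boto3 rds.create_db_cluster call.

    min_capacity and max_capacity bound the range the cluster may scale
    within, expressed in Aurora capacity units.
    """
    if engine not in ("aurora-mysql", "aurora-postgresql"):
        raise ValueError("engine must be a MySQL- or PostgreSQL-compatible Aurora edition")
    if min_capacity < 0 or max_capacity <= 0 or min_capacity > max_capacity:
        raise ValueError("capacity bounds must satisfy 0 <= min <= max and max > 0")
    return {
        "DBClusterIdentifier": cluster_id,
        "Engine": engine,
        "ServerlessV2ScalingConfiguration": {
            "MinCapacity": min_capacity,
            "MaxCapacity": max_capacity,
        },
    }


# Hypothetical values chosen purely for illustration.
params = serverless_v2_cluster_params("demo-cluster", "aurora-postgresql", 0.5, 16)
print(params)

# With AWS credentials configured, the dictionary could be passed on, together
# with the usual master-user settings, roughly like this:
#   import boto3
#   boto3.client("rds").create_db_cluster(**params, MasterUsername=..., MasterUserPassword=...)
```

The sketch only builds and validates the request. The actual call is left as a comment because it needs an AWS account and creates billable resources.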

“AWS Cloud Quest”: Free Game To Boost AWS Cloud Skills

Amazon launched a 3-D online role-playing game in which players move through a virtual city helping people solve real-world technology issues and cloud use cases, with the goal of building AWS cloud skills and preparing for the AWS Certified Cloud Practitioner exam.

“AWS Cloud Quest: Cloud Practitioner” was designed by AWS this year to help people become AWS cloud experts by solving real-world cloud business use cases in the game, as Amazon continues to invest hundreds of millions of dollars in providing free cloud computing training.

“AWS Cloud Quest and AWS Educate intentionally move away from passive content. We want to make abstract cloud computing concepts real through interactive and hands-on activities that immediately let learners turn theory into practice,” said Kevin Kelly, director of Cloud Career Training Programs at AWS, in a blog post. “We’re continuing to innovate how learners can build their cloud knowledge and practical skills, meeting them where they are and bringing knowledge within anyone’s reach by making these programs free.”

To win “AWS Cloud Quest,” players need to complete quests that help the virtual citizens build a better city, which, in turn, builds the player’s real-life cloud computing skills.

Players learn and build cloud solutions by exploring core AWS services and categories, such as storage, compute, database and security services. Gameplay includes quizzes, videos and hands-on exercises based on real-world business scenarios.

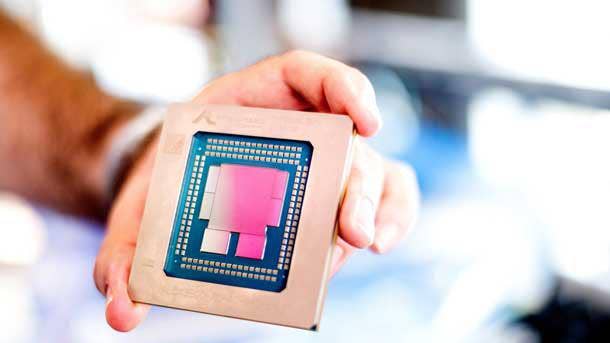

Amazon EC2 C7g Instances Powered By Graviton3 Processors

In May, AWS launched the general availability of its Amazon Elastic Compute Cloud (Amazon EC2) C7g instances powered by AWS-designed Graviton3 processors.

AWS’ new C7g instances use AWS Graviton3 processors to provide up to 25 percent better compute performance for compute-intensive applications than current generation C6g instances powered by AWS Graviton2 processors. The higher performance of C7g instances makes it possible for customers to more efficiently run a wide range of compute-intensive workloads—from web servers, load balancers and batch processing to electronic design automation (EDA), high performance computing, gaming, video encoding, scientific modeling, distributed analytics, machine learning inference and ad serving.

There are no minimum commitments or up-front fees to use C7g instances, and customers pay only for the amount of compute used.

AWS Mainframe Modernization

Launched in June, AWS Mainframe Modernization is a new service aimed at making it faster and easier for customers to modernize mainframe-based workloads by moving them to the AWS cloud.

With AWS Mainframe Modernization, customers can refactor their mainframe workloads to run on AWS by transforming mainframe-based applications into modern cloud services. Alternatively, customers can keep their applications as written and replatform their workloads to AWS by reusing existing code with minimal changes.

AWS said a managed runtime environment built into AWS Mainframe Modernization provides the necessary compute, memory and storage to run both refactored and replatformed applications while also helping to automate the details of capacity provisioning, security, load balancing, scaling and application health monitoring.

The AWS Mainframe Modernization service also provides the development, testing and deployment tools necessary to automate the modernization of mainframe applications to run on AWS. There are no up-front costs, and customers only pay for the amount of compute provisioned.

Solution Spark Program For Channel Partners

After a successful nine-month pilot, AWS’ new Solution Spark for Public Sector Partners Program provides training and resources for partners to build on top of AWS solutions to solve common customer problems in the public sector.

Through Solution Spark for Public Sector Partners, the channel has access to new and developing solutions, promotion on AWS solution pages, and go-to-market support such as joint planning, MDF and sales support. The program is set to go into effect in July.

“Partners are going to be able to leverage all of these key assets to accelerate proofs of concept and accelerate customer deployment because customers’ perspectives have changed because of COVID,” Jeff Kratz, general manager of AWS’ Worldwide Public Sector Partners and Programs, told CRN. “We’re seeing governments that act like startups in so many different ways, and they want things now. They want to move with speed.”

The program aims to help the channel build solutions that address public sector mission needs by giving partners the ability to build on top of AWS open-source software to jump-start their solution offerings.

AWS IoT TwinMaker: Create Digital Twins Of Real-World Systems

AWS IoT TwinMaker is a new service that makes it faster and easier for developers to create digital twins of real-world systems such as buildings, factories, industrial equipment and production lines.

The digital twins are virtual representations of physical systems that use real-world data to mimic the structure, state and behavior of the objects they represent and are updated with new data as conditions change.

AWS IoT TwinMaker aims to make it easy for developers to integrate data from multiple sources, such as equipment sensors, video cameras and business applications, and combine that data into a knowledge graph that models the real-world environment. Customers can use the digital twins to build applications that mirror real-world systems, helping to improve operational efficiency and reduce downtime.

There are no up-front commitments or fees to use AWS IoT TwinMaker, and customers only pay for accessing the data used to build and operate digital twins.

AWS Container Rapid Adoption Assistance

AWS’ new Container Rapid Adoption Assistance Program offers technical expertise and hands-on guidance to partners to help accelerate container solution deployments on behalf of public sector customers.

“We’re seeing a shift to containers from EC2 instances and more. What [partners] were asking for is more technical assistance. So we’re providing that technical engagement on containers,” said AWS’ Kratz. “We don’t have enough partners who really understand how their customers design applications with containers. So we’re providing that service to them.”

Containerization provides a boost in application quality, portability, agility, security, fault isolation and efficiency.

The new Container Rapid Adoption Assistance Program comes after AWS’ successful 2020 rapid adoption program for artificial intelligence and machine learning, as well as AWS’ 2021 Internet of Things (IoT) rapid adoption program.

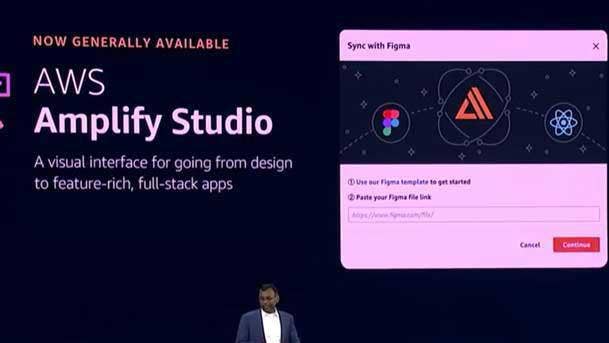

AWS Amplify Studio Simplifies Front- And Back-End Development For Web, Mobile Apps

The public cloud giant launched AWS Amplify Studio, a new visual development environment for creating web application user interfaces. It extends AWS Amplify so developers can create fully customizable web applications on AWS with minimal coding.

Customers leverage AWS Amplify to more easily configure and deploy AWS services including its database, compute and storage services to power their applications. AWS Amplify Studio extends the capabilities of AWS Amplify to provide a unified visual development environment for building rich web applications on AWS—from provisioning the AWS services that power the application to creating dynamic UIs.

Developers can use AWS Amplify Studio to create a UI using a library of prebuilt components, collaborate with user experience designers, and connect their UI to AWS services through a visual interface without writing any code. AWS said Amplify Studio then converts the UI into JavaScript or TypeScript code, which saves developers from writing thousands of lines of code while allowing them to fully customize their web application design and behavior using familiar programming languages.

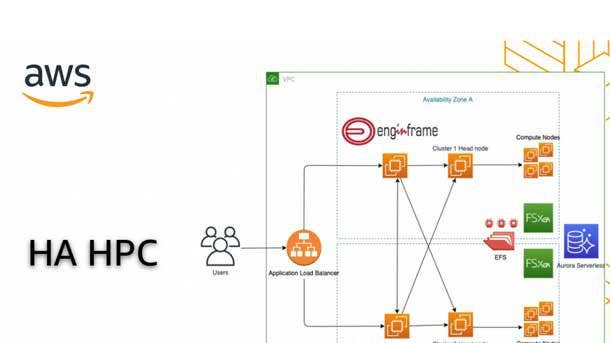

Amazon EC2 Hpc6a Instances For High-Performance Computing

This year, AWS launched the general availability of Amazon Elastic Compute Cloud (Amazon EC2) Hpc6a instances—a new instance type that is purpose-built for tightly coupled high-performance computing (HPC) workloads.

The HPC-focused instances aim to make it even more cost-efficient for customers to scale HPC clusters on AWS to run their most compute-intensive workloads such as genomics, computational fluid dynamics, weather forecasting, molecular dynamics, computational chemistry, financial risk modeling, computer-aided engineering and seismic imaging.

The new Hpc6a instances are designed to offer the best price/performance for running HPC workloads at scale in the cloud. They are powered by third-generation AMD EPYC processors and expand AWS’ portfolio of HPC options.

AWS said Hpc6a instances deliver up to 65 percent better price/performance for HPC workloads to carry out complex calculations across a range of cluster sizes—up to tens of thousands of cores. Hpc6a instances are enabled with Elastic Fabric Adapter (EFA)—a network interface for Amazon EC2 instances—by default. With EFA networking, customers benefit from low latency, low jitter and up to 100 Gbps of EFA networking bandwidth to increase operational efficiency and drive faster time-to-results for workloads.

Amazon EMR Serverless

Launched in June, Amazon EMR Serverless is a new serverless deployment option in Amazon EMR that makes it easy and cost-effective for data engineers and analysts to run petabyte-scale data analytics in the cloud.

Amazon EMR is a big data offering customers can use to run large-scale distributed data processing jobs, interactive SQL queries, and machine learning applications built on open-source analytics frameworks such as Apache Spark, Apache Hive and Presto.

With Amazon EMR Serverless, businesses can run their Spark and Hive applications without having to configure, optimize, tune or manage clusters.

EMR Serverless offers automatic scaling, which provisions and quickly scales the compute and memory resources required by the application.

“For example, if a Spark job needs two executors for the first five minutes, 10 executors for the next 10 minutes, and five executors for the last 20 minutes, EMR Serverless automatically provides the resources as needed, and you pay for only the resources used,” said AWS in a blog post.
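The arithmetic behind AWS’ example can be sketched in a few lines. This is a simplified illustration that counts executor-minutes only and compares them with a hypothetical cluster sized for the peak of 10 executors for the whole run; actual EMR Serverless billing is based on the compute, memory and storage resources consumed, so treat the percentage as indicative rather than a price quote.

```python
# Phases from the AWS example: (executors needed, duration in minutes).
PHASES = [(2, 5), (10, 10), (5, 20)]


def used_executor_minutes(phases):
    """Executor-minutes consumed when capacity follows demand in each phase."""
    return sum(executors * minutes for executors, minutes in phases)


def peak_executor_minutes(phases):
    """Executor-minutes consumed if the peak executor count runs the whole time."""
    peak = max(executors for executors, _ in phases)
    total_minutes = sum(minutes for _, minutes in phases)
    return peak * total_minutes


used = used_executor_minutes(PHASES)   # 2*5 + 10*10 + 5*20 = 210
peak = peak_executor_minutes(PHASES)   # 10 executors * 35 minutes = 350
savings = 1 - used / peak

print(f"Scaled with demand: {used} executor-minutes")
print(f"Provisioned for peak: {peak} executor-minutes")
print(f"Reduction: {savings:.0%}")     # 40%
```

In this scenario, following demand uses 210 executor-minutes against 350 for a fixed peak-sized cluster, a reduction of 40 percent.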

In addition, EMR Serverless integrates with EMR Studio to provide customers with comprehensive tooling to check the status of running jobs, review job history and use familiar open-source tools for debugging.