AWS re:Invent 2023: The 10 Biggest New Product Launches

From the new Amazon Q AI-powered assistant to new thin-client hardware, here are the 10 biggest AWS product launches at re:Invent 2023 this week.

AWS’ innovation engine is on fire at re:Invent 2023 with the cloud giant launching several blockbuster new products including a thin-client hardware device and a new generative AI-powered assistant, Amazon Q, specifically designed for businesses.

“Generative AI is the next step in artificial intelligence,” said AWS CEO Adam Selipsky during his keynote this week at AWS re:Invent 2023. “And it’s going to reinvent every application that we interact with at work and at home.”

The Seattle-based cloud giant, which is on a roughly $92 billion annual revenue run rate, is striving to become a leader in the red-hot generative AI market as artificial intelligence has come to dominate the tech industry in 2023.


At re:Invent 2023 in Las Vegas this week, AWS launched a slew of new GenAI products to turbocharge its AI market position, including a new responsible AI safeguards offering for its flagship GenAI platform, Amazon Bedrock, as well as new chips: the general-purpose Graviton4 processor and the Trainium2 chip for training AI models.

“We were the first to develop and offer our own server processors. We’re now in our fourth generation in just five years,” said Selipsky on stage as he unveiled Graviton4 at AWS re:Invent. “Other cloud providers have not even delivered on their first server processors yet.”

From new AWS Graviton4 and Trainium2 chips for AI to new serverless innovation and AWS partner support tools, here are the 10 biggest AWS product launches this week at re:Invent 2023 that solution providers need to know about.

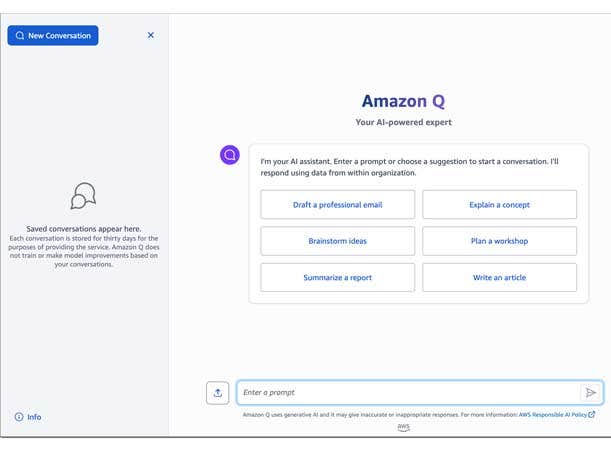

AWS Launches Amazon Q

In one of the largest announcements at AWS re:Invent, the cloud giant unveiled Amazon Q, a new generative AI-powered assistant designed for work.

Customers can use Amazon Q to have conversations, solve problems, generate content, gain insight and take action by connecting to their company’s information repositories, code, data and enterprise systems. Amazon Q helps business users complete tasks using simple natural language prompts.

“Other providers have launched tools without data privacy and security capabilities, which virtually every enterprise requires. Many CIOs actually ban the use of a lot of the most popular AI chat systems inside their organization. Just ask any Chief Information Security Officer, CISO—you can’t bolt on security after the fact and expect it to work as well,” said AWS’ CEO.

“It’s much, much better to build security into the fundamental design of the technology. So when we set out to build generative AI applications, we knew we had to address these gaps. It had to be built in from the very start,” said Selipsky. “That’s why today I’m really proud and excited to announce Amazon Q, a new type of generative AI-powered assistant designed to work for you at work.”

Amazon Q applies access controls so that its responses and actions are limited to the data and systems an employee is already permitted to access, and it provides citations and references to original sources for fact-checking and traceability. Customers can choose from more than 40 built-in connectors for popular data sources and enterprise systems, including Amazon S3, Google Drive, Microsoft SharePoint, Salesforce, ServiceNow and Slack.
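To make the access-control idea concrete, here is a minimal Python sketch of permission-aware retrieval with citations. It is not Amazon Q’s implementation or API: the `Document` type, the group-based access lists and the `answer_with_citations` helper are hypothetical, and simple keyword matching stands in for real search.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Document:
    """Hypothetical indexed document with a group-based access list."""
    doc_id: str
    source: str                 # connector the document came from, e.g. "SharePoint"
    text: str
    allowed_groups: frozenset   # groups permitted to read this document


def permitted_documents(docs, user_groups):
    """Drop every document the user's groups cannot read, before any matching happens."""
    return [d for d in docs if d.allowed_groups & user_groups]


def answer_with_citations(question, docs, user_groups):
    """Return matching snippets plus one citation per snippet, using only permitted documents."""
    terms = set(question.lower().split())
    hits = [d for d in permitted_documents(docs, user_groups)
            if terms & set(d.text.lower().split())]
    return [d.text for d in hits], [f"{d.source}:{d.doc_id}" for d in hits]


docs = [
    Document("hr-1", "SharePoint", "vacation policy is 20 days", frozenset({"all-staff"})),
    Document("fin-7", "Amazon S3", "quarterly revenue forecast", frozenset({"finance"})),
]
print(answer_with_citations("revenue forecast", docs, {"all-staff"}))  # ([], [])
print(answer_with_citations("revenue forecast", docs, {"finance"}))
```

Filtering by permission before matching means content a user cannot see never reaches the answer step, and each returned snippet carries a citation back to its source.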

Amazon Q is currently available for Amazon Connect and will soon be integrated into other AWS services.


Amazon WorkSpaces Thin Client

In a bold move, AWS debuted the Amazon WorkSpaces Thin Client device for virtual desktop access for a price tag starting at $195 per device.

The device runs purpose-built firmware and an operating system engineered for employees who need simple, secure access to applications in the cloud, along with software that lets IT teams manage it remotely.

Amazon WorkSpaces Thin Client is optimized for the AWS Cloud. For the first time, AWS adapted a consumer device into an external hardware product for AWS customers.

“At first glance, it may look like a Fire TV Cube,” said Amazon in a blog post. “For a significant portion of the workforce, some form of remote and hybrid work is here to stay, particularly in industries such as customer service, technical support and health care. … Employees need quick, reliable access to a variety of business applications and data, regardless of where they are working. Enter the Amazon WorkSpaces Thin Client.”

The devices are sold through Amazon Business, the company’s B2B marketplace, where customers can order preconfigured hardware.

Graviton4 Processors

On the processor front, AWS unveiled a new Graviton4 chip, with Selipsky dubbing it the “most energy-efficient chip that we have ever built.”

Graviton4 has 50 percent more cores and 75 percent more memory bandwidth than Graviton3. AWS said 96 Neoverse V2 cores, 2 MB of L2 cache per core and 12 DDR5-5600 channels work together to make Graviton4 up to 40 percent faster for databases, 30 percent faster for web applications and 45 percent faster for large Java applications than Graviton3.
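AWS’ 75 percent figure for Graviton4 refers to memory bandwidth, and it can be reproduced from the memory configuration. The back-of-the-envelope Python below computes theoretical peak bandwidth as channels × transfer rate × 8 bytes per transfer. The Graviton3 baseline (64 cores, eight DDR5-4800 channels) comes from AWS’ published Graviton3 specifications rather than this article, so treat it as an assumption.

```python
# Assumed Graviton3 baseline from AWS' Graviton3 specs (not stated in this article).
GRAVITON3 = {"cores": 64, "channels": 8, "mt_per_s": 4800}
# Graviton4 figures as quoted in the article.
GRAVITON4 = {"cores": 96, "channels": 12, "mt_per_s": 5600}

BYTES_PER_TRANSFER = 8  # a 64-bit DDR5 channel moves 8 bytes per transfer


def peak_bandwidth_gb_s(chip: dict) -> float:
    """Theoretical peak memory bandwidth in GB/s: channels x MT/s x bytes per transfer."""
    return chip["channels"] * chip["mt_per_s"] * BYTES_PER_TRANSFER / 1000


core_gain = GRAVITON4["cores"] / GRAVITON3["cores"] - 1
bandwidth_gain = peak_bandwidth_gb_s(GRAVITON4) / peak_bandwidth_gb_s(GRAVITON3) - 1

print(f"Graviton3: {peak_bandwidth_gb_s(GRAVITON3):.1f} GB/s, "
      f"Graviton4: {peak_bandwidth_gb_s(GRAVITON4):.1f} GB/s")
print(f"{core_gain:.0%} more cores, {bandwidth_gain:.0%} more peak memory bandwidth")
# Graviton3: 307.2 GB/s, Graviton4: 537.6 GB/s
# 50% more cores, 75% more peak memory bandwidth
```

These are theoretical peaks; real workloads see lower sustained bandwidth, which is why AWS quotes per-workload speedups separately.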

Graviton4 processors also support all of the security features from previous generations and include important new ones, such as encrypted high-speed hardware interfaces and Branch Target Identification (BTI).

AWS also introduced new Amazon EC2 instances powered by Graviton4, dubbed R8g instances, aiming to provide the best price/performance for memory-optimized workloads. R8g instances are ideal for memory-intensive workloads such as databases, in-memory caches and real-time big data analytics.

Amazon S3 Express One Zone

On the cloud storage front, AWS unleashed Amazon S3 Express One Zone, a high-performance, single-Availability Zone storage class purpose-built to deliver consistent single-digit millisecond data access for customers’ most frequently accessed data and latency-sensitive applications.

“Amazon S3 Express One supports millions of requests per minute, with consistent single-digit millisecond latency—the fastest object storage in the cloud,” said AWS’ CEO. “And up to 10 times faster than S3 standard storage. … So 17 years ago, S3 reinvented storage by launching the first cloud service for AWS. With S3 Express One Zone, you can see S3 continuing to change how developers use storage.”

With S3 Express One Zone, storage automatically scales up or down with a customer’s consumption, and users no longer have to manage multiple storage systems for low-latency workloads.

While AWS customers have always been able to choose a specific AWS Region to store their S3 data, S3 Express One Zone enables them to select a specific AWS Availability Zone within an AWS Region to store their data. Customers can choose to co-locate their storage with their compute resources in the same Availability Zone to further optimize performance. Data is stored in a different bucket type—an S3 directory bucket—which supports hundreds of thousands of requests per second.
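Creating a directory bucket differs slightly from creating a general-purpose bucket: the bucket name embeds the Availability Zone ID, and the request names a single zone. The Python sketch below builds both pieces, assuming the `<base-name>--<zone-id>--x-s3` naming rule and the `CreateBucketConfiguration` shape that boto3’s `create_bucket` accepted for directory buckets at launch. The base name and the zone ID `use1-az4` are placeholders, so confirm the details against the current S3 documentation before relying on them.

```python
def directory_bucket_name(base_name: str, zone_id: str) -> str:
    """Directory bucket names embed the AZ ID: <base-name>--<zone-id>--x-s3."""
    return f"{base_name}--{zone_id}--x-s3"


def express_one_zone_config(zone_id: str) -> dict:
    """CreateBucketConfiguration for a directory bucket stored in a single Availability Zone."""
    return {
        "Location": {"Type": "AvailabilityZone", "Name": zone_id},
        "Bucket": {"Type": "Directory", "DataRedundancy": "SingleAvailabilityZone"},
    }


# Placeholder values: pick the AZ ID where your compute runs.
zone_id = "use1-az4"
bucket = directory_bucket_name("my-analytics", zone_id)
config = express_one_zone_config(zone_id)
print(bucket)  # my-analytics--use1-az4--x-s3

# With boto3 installed and AWS credentials configured, the request would be sent as:
#   import boto3
#   boto3.client("s3", region_name="us-east-1").create_bucket(
#       Bucket=bucket, CreateBucketConfiguration=config)
```

Matching on the AZ ID rather than the AZ name matters for co-location, because AZ names such as `us-east-1a` can map to different physical zones in different accounts.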

Guardrails For Amazon Bedrock

On the AI security front, AWS launched Guardrails for Amazon Bedrock, which lets businesses implement safeguards customized to their application requirements.

The offering evaluates both user inputs and foundation model (FM) responses against use-case-specific policies, providing an additional layer of safeguards regardless of the underlying FM.

Guardrails can be applied across FMs, including Anthropic Claude, Meta Llama 2, Cohere Command, AI21 Labs Jurassic and Amazon Titan Text, as well as fine-tuned models. Customers can create multiple guardrails, each configured with a different combination of controls, and use these guardrails across different applications and use cases.
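Conceptually, a guardrail is a policy check that sits on both sides of a model call, which is why it can work regardless of the underlying FM. The Python sketch below illustrates only that pattern. The `Guardrail` class, its keyword-based “denied topics” and the `guarded_call` wrapper are hypothetical and are not the Bedrock Guardrails API, which uses richer classifiers and its own configuration.

```python
import re
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Guardrail:
    """Hypothetical policy: denied topics and blocked words, matched as keywords."""
    denied_topics: set = field(default_factory=set)
    blocked_words: set = field(default_factory=set)
    blocked_message: str = "Sorry, I can't help with that request."

    def violates(self, text: str) -> bool:
        words = set(re.findall(r"[a-z0-9']+", text.lower()))
        return bool(words & (self.denied_topics | self.blocked_words))


def guarded_call(model: Callable[[str], str], guardrail: Guardrail, prompt: str) -> str:
    """Screen the user input, call any model, then screen the model's response."""
    if guardrail.violates(prompt):
        return guardrail.blocked_message
    response = model(prompt)
    if guardrail.violates(response):
        return guardrail.blocked_message
    return response


# Two stand-in "models": the same guardrail wraps both without changes.
def echo_model(prompt: str) -> str:
    return f"Echo: {prompt}"


def advice_model(prompt: str) -> str:
    return "You should move your savings into this investment."


policy = Guardrail(denied_topics={"investment"}, blocked_words={"password"})
print(guarded_call(echo_model, policy, "What is the weather today?"))  # passes through
print(guarded_call(echo_model, policy, "Share the admin password"))    # blocked at input
print(guarded_call(advice_model, policy, "Hello"))                     # blocked at output
```

Because the guardrail only sees text going in and coming out, the same policy object can be reused across models and applications, mirroring the “multiple guardrails, many applications” setup described above.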

Guardrails can also be integrated with Agents for Amazon Bedrock to build generative AI applications aligned with responsible AI policies.

“It has capabilities to safeguard your generative AI applications with more responsible AI policies. So now you have a consistent level of protection across all of your GenAI development activities,” said Selipsky.

AWS Trainium2

AWS launched a new generation of high-performance machine learning Trainium chips specifically designed to reduce the time and cost of training generative AI models.

AWS’ new Trainium2 chips are designed to deliver up to 4X faster training than first-generation Trainium chips and can be deployed in EC2 UltraClusters of up to 100,000 chips, making it possible to train FMs and large language models (LLMs) in a fraction of the time while improving energy efficiency by up to 2X.

Trainium2 chips are purpose-built for high-performance training of FMs and LLMs with up to trillions of parameters. Trainium2 delivers up to 3X more memory capacity than Trainium and will be available in Amazon EC2 Trn2 instances, each containing 16 Trainium2 chips.

Trn2 instances are intended to enable customers to scale up to 100,000 Trainium2 chips in next-generation EC2 UltraClusters, interconnected with AWS Elastic Fabric Adapter (EFA) petabit-scale networking, delivering up to 65 exaflops of compute and giving customers on-demand access to supercomputer-class performance.
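The cluster-level figures above imply a per-chip number that AWS did not state directly. The back-of-the-envelope Python below divides the quoted 65 exaflops across 100,000 chips and counts how many 16-chip Trn2 instances a full UltraCluster would take. The per-chip result is a derived peak figure, and the article does not say which numeric precision the 65 exaflops assumes.

```python
# Figures quoted in the article.
MAX_CHIPS = 100_000            # Trainium2 chips in the largest EC2 UltraCluster
CLUSTER_EXAFLOPS = 65          # quoted peak compute for that cluster
CHIPS_PER_TRN2_INSTANCE = 16   # Trainium2 chips per Trn2 instance

# Derived values (not stated by AWS).
flops_per_chip = CLUSTER_EXAFLOPS * 1e18 / MAX_CHIPS
instances_needed = MAX_CHIPS // CHIPS_PER_TRN2_INSTANCE

print(f"Implied peak per chip: {flops_per_chip / 1e12:.0f} teraflops")
print(f"Trn2 instances in a full UltraCluster: {instances_needed:,}")
# Implied peak per chip: 650 teraflops
# Trn2 instances in a full UltraCluster: 6,250
```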

With this level of scale, AWS said customers can train a 300-billion-parameter LLM in weeks rather than months.

Amazon Bedrock Revamped With Fine-Tuning And More

Amazon’s flagship generative AI platform, Bedrock, was injected with a slew of new features, including a new fine-tuning capability to securely customize foundation models (FMs) with a business’ own data in Bedrock to build applications that are specific to its domain, organization and use case.

With fine-tuning, customers can increase model accuracy by providing their own labeled, task-specific training data set to further specialize FMs. With continued pre-training, users can train models on their own unlabeled data in a secure, managed environment with customer-managed keys. Both options let customers create unique user experiences that reflect their company’s style, voice and services.
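Preparing the two kinds of training data looks roughly like the Python below, which serializes records as JSON Lines. It assumes the record layout AWS documented at launch for Amazon Titan text models (`prompt`/`completion` pairs for fine-tuning, a single `input` field for continued pre-training). Other models may expect different fields, and the example records are illustrative.

```python
import json


def to_jsonl(records, keys):
    """Serialize records as JSON Lines, keeping only `keys` and rejecting incomplete rows."""
    lines = []
    for rec in records:
        missing = [k for k in keys if k not in rec]
        if missing:
            raise ValueError(f"record is missing {missing}: {rec}")
        lines.append(json.dumps({k: rec[k] for k in keys}))
    return "\n".join(lines) + "\n"


# Labeled, task-specific examples for fine-tuning (illustrative content).
labeled = [
    {"prompt": "Classify this ticket: printer shows offline.", "completion": "Hardware"},
    {"prompt": "Classify this ticket: cannot reset my password.", "completion": "Account access"},
]

# Unlabeled domain text for continued pre-training (illustrative content).
unlabeled = [
    {"input": "Our returns policy allows exchanges within 30 days of purchase."},
]

fine_tuning_data = to_jsonl(labeled, ["prompt", "completion"])
pretraining_data = to_jsonl(unlabeled, ["input"])
print(fine_tuning_data, end="")
```

Bedrock reads customization data from Amazon S3, so in practice these strings would be written to `.jsonl` files and uploaded to a bucket before starting a customization job.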

AWS also announced at re:Invent this week that Agents for Amazon Bedrock is now generally available. The offering helps customers accelerate GenAI application development by orchestrating multistep tasks.

In addition, Knowledge Bases for Amazon Bedrock is now generally available. With Knowledge Bases, customers can securely connect foundation models in Bedrock to their company’s data for Retrieval Augmented Generation (RAG).
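Retrieval Augmented Generation works by fetching the passages most relevant to a question and placing them in the model’s prompt. The self-contained Python sketch below shows that flow. It uses bag-of-words cosine similarity as a stand-in for the vector embeddings a real knowledge base would use, and the `retrieve` and `build_prompt` helpers are hypothetical, not Bedrock APIs.

```python
import math
import re
from collections import Counter


def tokenize(text):
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two term-count vectors."""
    dot = sum(count * b[term] for term, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query, chunks, k=2):
    """Rank stored chunks by similarity to the query and keep the top k."""
    q = Counter(tokenize(query))
    return sorted(chunks, key=lambda c: cosine(q, Counter(tokenize(c))), reverse=True)[:k]


def build_prompt(query, chunks, k=2):
    """Augment the question with numbered context passages the model can cite."""
    context = "\n".join(f"[{i}] {c}" for i, c in enumerate(retrieve(query, chunks, k), start=1))
    return ("Answer using only the context below and cite passages by number.\n\n"
            f"Context:\n{context}\n\nQuestion: {query}")


company_docs = [
    "The Seattle office opens at 9 a.m. on weekdays.",
    "Expense reports are due on the 5th of each month.",
    "The cafeteria serves lunch from noon until 2 p.m.",
]
print(build_prompt("When are expense reports due?", company_docs, k=1))
```

In a production setup, an embedding model and a vector store would replace `tokenize` and `cosine`, while the prompt-assembly step would look much the same.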

Three New Serverless Innovations

AWS unveiled three new serverless innovations across its database and analytics portfolio that make it faster and easier for customers to scale their data infrastructure.

Amazon Aurora Limitless Database is a new capability that automatically scales beyond the write limits of a single Amazon Aurora database, making it easy for developers to scale their applications and saving them months compared with building custom solutions.

A new serverless option, Amazon ElastiCache Serverless, also makes it faster and easier to create highly available caches and scale them instantly to meet application demand. AWS said customers can create a highly available cache in under a minute, and the service scales both vertically and horizontally to support their most demanding applications without requiring them to manage infrastructure.

AWS also released a new Amazon Redshift Serverless capability that uses artificial intelligence to predict workloads and automatically scale and optimize resources to help customers meet their price/performance targets.

The three new serverless offerings aim to help customers manage data at any scale and dramatically simplify their operations so they can focus on innovating for their end users without spending time and effort provisioning, managing and scaling their data infrastructure.

New Generative AI Capabilities For Amazon Connect

AWS’ flagship cloud contact center service, Amazon Connect, was injected with new GenAI capabilities from Amazon Q in a move to transform how customers provide customer service.

Amazon Q in Connect provides agents with recommended responses and actions based on real-time customer questions for faster and more accurate customer support.

New features in AWS’ analytics and quality management offering, Connect Contact Lens, can now help identify the essential parts of call center conversations with AI-generated summaries that detect sentiment, trends and policy compliance.

In addition, Amazon Lex is now integrated into Amazon Connect, enabling contact center administrators to create new chatbots and interactive voice response systems in hours using natural language prompts, and to improve existing systems by generating responses to commonly asked questions.

Lastly, Amazon Connect Customer Profiles—an Amazon Connect feature that enables agents to deliver faster, more personalized customer service—now creates unified customer profiles from disparate Software-as-a-Service applications and databases.

“With just a few clicks, contact center leaders can leverage new capabilities powered by generative AI in Amazon Connect to enhance the more than 15 million customer interactions handled on Amazon Connect every day,” said Pasquale DeMaio, vice president of Amazon Connect, AWS Applications, in a blog post. “With these new capabilities, contact centers can consistently deliver improved customer support at scale.”

Partners Given Same Tools That AWS Support Engineers Use

To boost its partners’ toolset, AWS is giving them the same diagnostic tools its own support engineers use internally, so partners can do an even better job supporting customers.

Partners will now be able to access a customer’s EC2 Capacity Reservations, Lambda Functions list, GuardDuty findings and Load Balancer responses. In addition, partners can get information from a customer’s RDS and Redshift clusters.

When a customer contacts its partner for support, the partner can now federate into the customer’s AWS account. They can then use the new diagnostic tools to access the customer metadata that will help them to identify and diagnose the issue. These tools can access and organize metadata and CloudWatch metrics, but they cannot access customer data and they cannot make any changes to any of the customer’s AWS resources.
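The article does not describe how the federated access is scoped. Purely as an illustration, if diagnostic access were granted through an IAM role in the customer’s account, a quick heuristic check that the role can only read, never change, resources might look like the Python below. The policy and the `non_read_only_actions` helper are hypothetical; a verb-prefix check is no substitute for IAM Access Analyzer or a full policy review, and `Get` actions can still expose data, so it tests “no changes” rather than “no data access.”

```python
READ_ONLY_VERBS = ("Describe", "List", "Get")


def non_read_only_actions(policy: dict) -> list:
    """Return allowed IAM actions whose verb is not Describe/List/Get (wildcards included)."""
    statements = policy.get("Statement", [])
    if isinstance(statements, dict):   # IAM allows a single statement object
        statements = [statements]
    flagged = []
    for statement in statements:
        if statement.get("Effect") != "Allow":
            continue
        actions = statement.get("Action", [])
        if isinstance(actions, str):
            actions = [actions]
        for action in actions:
            verb = action.split(":", 1)[-1]   # "ec2:DescribeX" -> "DescribeX"; "*" -> "*"
            if not verb.startswith(READ_ONLY_VERBS):
                flagged.append(action)
    return flagged


# Hypothetical diagnostic policy covering the resources named in the article.
diagnostic_policy = {
    "Version": "2012-10-17",
    "Statement": [{
        "Effect": "Allow",
        "Action": [
            "ec2:DescribeCapacityReservations",
            "lambda:ListFunctions",
            "guardduty:ListFindings",
            "guardduty:GetFindings",
            "elasticloadbalancing:DescribeLoadBalancers",
            "rds:DescribeDBClusters",
            "redshift:DescribeClusters",
            "cloudwatch:GetMetricData",
        ],
        "Resource": "*",
    }],
}

print(non_read_only_actions(diagnostic_policy))  # []
```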

The new support tools are being offered to partners in the AWS Partner-Led Support program inside the AWS Partner Network.